-

@ 502ab02a:a2860397

2025-05-07 01:08:58

Let's treat this week as a short break to unwind. We'll look back at a bit of history, like Star Wars releasing episodes 4-5-6 and then going back to 1-2-3. Ha ha.

Have you ever heard of the Nuremberg Trials? As a quick recap, Nuremberg (German: Nürnberg) is the city in Germany that hosted the historic trials after World War II.

The "Nuremberg Trials" were the trials of those involved in Nazi Germany's war crimes. After World War II, in 1945–46, the victorious Allies brought Nazi leaders, politicians, doctors, and scientists before the court. The charges were not only killing people but also violations of basic human morality, such as medical experiments on prisoners without their consent.

Out of these trials came a set of ethical principles called the "Nuremberg Code", which became a foundation of modern medical experimentation. The heart of the code is "Informed Consent": if you are going to do something to someone's body, you need that person's willing consent, given with complete information. That is the lesson from the wounds of the world war.

But then... in 2020 the world entered an era in which some people said "you have to trust the experts". Anyone who worried = someone who doesn't care about society. Anyone who asked a lot of questions = someone who is anti-science. Voluntary consent began to turn into a merely symbolic phrase.

Lately we may have come across news or internet theories around the term Nuremberg 2.0, because it has started popping up in quiet threads, underground clips, and odd panel discussions that nobody would want to cite on a TED Talk stage. Groups that use the term usually mean a demand to "try" or "hold accountable" the politicians, scientists, doctors, or organizations involved in issuing orders, imposing mandates, censoring information that conflicted with the state's line, and publishing information without transparency. They see those policies as violating people's rights and freedoms to a degree comparable to "crimes against humanity", and so they propose the idea of "Nuremberg 2.0".

They are not only calling for transparency. They want a review of who actually violated the ethical principles the world agreed on 80 years ago.

Nuremberg was the trial of people who used state power to kill deliberately. Nuremberg 2.0 is a warning that "using fear to override freedom" may not be so different.

So, ever wondered what this has to do with food?

Because since the pandemic crisis we have started to see "monopolized science" running the game. Tech companies have moved into food. Steak's new name became "alternative protein". Lab-made food became "the way to save the world". Chemicals are packed in instead of real meat, but with a label that reads "eco-friendly, safe, sustainable".

But look a little deeper and some groups start to see something more chilling: a "health control system" that uses "fear" as its starter culture and "monopolized science" as its mechanism.

After that... everything gets served up beautifully as "innovation for the future", whether it is a new kind of supplement, lab-grown meat, or food you don't need to chew.

As a thought experiment: what if nutrients could be controlled, the way we were once compelled on certain other things? One day we may be asked to "eat" what the future health system says is good. And if I say I don't want to eat it... they may not forbid it, but the health app will warn, "Your behavior puts this planet at risk." My health credit score will drop, and the discount on microbe-derived meat lunch boxes will never reach my account again.

Yes... it doesn't look like coercion, but it builds a "one-way system" in which anyone who wants to step out of line might as well be stepping off a cliff.

So Nuremberg 2.0 is not only about the past or the pandemic. It is a mirror reflecting that "if we don't learn from history, we may end up eating its repeat for dinner".

The future of food may not be on the plate, but in policy, in the companies that make protein from air, in the capital that has bought up scientists across the whole field. And if we close our eyes again, many fear that... a new round of Nuremberg trials may never be able to happen, because this time the villain won't be holding a gun, but perhaps a world-class nutrition certificate instead.

#pirateketo #กูต้องรู้มั๊ย #ม้วนหางสิลูก #siamstr

-

@ a39d19ec:3d88f61e

2025-04-22 12:44:42

The debate over migration, border security, and deportations in Germany is mostly conducted emotionally. Anyone who demands that illegal immigrants be deported is often accused of racism. Yet this accusation is not only factually unfounded, it turns reality on its head: it is precisely those who suspect a racist motive behind every call for legal certainty who themselves judge primarily by skin color, origin, or nationality.

The law stands above emotions

Germany is a state under the rule of law. This means that rules cannot be interpreted according to gut feeling or the political mood, but must rest on clear statutory foundations. One of these principles is anchored in Article 16a of the Basic Law (Grundgesetz). It states:

"Paragraph 1 [right of asylum] may not be invoked by anyone who enters from a member state of the European Communities or from another third state in which application of the Convention Relating to the Status of Refugees and of the European Convention on Human Rights is assured."

This means that anyone who enters Germany via safe third countries has no claim to asylum. Anyone who stays nonetheless is in the country illegally and is subject to the applicable rules on return. The demand for deportations is therefore nothing other than the demand that law and statute be observed.

The inversion of the concept of racism

Anyone who, on the one hand, maintains that German asylum and residence law should be strictly enforced and, on the other, does not distinguish by origin or skin color is acting in a value-neutral way. Those, however, who see a racist undertone in such a demand for the rule of law are projecting their own thought patterns onto others: they assume that the debate is conducted exclusively along ethnic, racial, or national lines, and precisely that is a racist way of thinking.

Someone who criticizes illegal immigration does so not because the origin of the people interests him, but because he respects the rule of law. By contrast, someone who detects racism behind this criticism evidently perceives first and foremost the "race" or origin of the people concerned and reduces them to it.

Financial burden instead of ideological debate

Besides the legal component there is also an economic one. The German welfare state is based on a principle of solidarity: citizens pay into the system in order to support one another in difficult times. This prosperity was earned over generations by those who have lived here for a long time. The priority is therefore to distribute the available funds first among those who contribute to maintaining this system through taxes, social security contributions, and work, not among those who enter the system through illegal entry and without an economic contribution of their own.

This is not an ideological question but a purely economic calculation. A social system can function sustainably only if it is not burdened without limit. If Germany had no clear rules on immigration and deportation, this would inevitably lead to the welfare state being overloaded, with negative consequences for everyone.

Social patriotism

Another important aspect is protecting the labor of those generations who painstakingly rebuilt Germany after the Second World War. While it is often stressed that Germans may morally claim no inheritance from the time before 1945, other than responsibility for the Holocaust, it is all the more important to respect the new inheritance after 1945, which rests on diligence, discipline, and hard work. The reconstruction was a collective achievement of German people, and its fruits must not be handed out without a second thought but should go first to those who helped lay this foundation or have carried it across generations.

The rule of law is non-negotiable

Anyone who argues for a consistent deportation practice does so not out of racist motives but out of respect for the rule of law and the country's economic foundations. The accusation of racism in this context is therefore not only wrong, it exposes a selective perception along racial lines in those who raise it.

-

@ 39cc53c9:27168656

2025-04-09 07:59:35

@ 39cc53c9:27168656

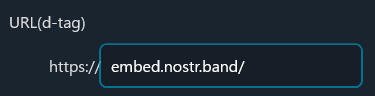

2025-04-09 07:59:35The new website is finally live! I put in a lot of hard work over the past months on it. I'm proud to say that it's out now and it looks pretty cool, at least to me!

Why rewrite it all?

The old kycnot.me site was built using Python with Flask about two years ago. Since then, I've gained a lot more experience with Golang and coding in general. Trying to update that old codebase, which had a lot of design flaws, would have been a bad idea. It would have been like building on an unstable foundation.

That's why I made the decision to rewrite the entire application. Initially, I chose to use SvelteKit with JavaScript. I did manage to create a stable site that looked similar to the new one, but it required JavaScript to work. As I kept coding, I started feeling like I was repeating "the Python mistake". I was writing the app in a language I wasn't very familiar with (just like when I was learning Python at that moment), and I wasn't happy with the code. It felt like spaghetti code all the time.

So, I made a complete U-turn and started over, this time using Golang. While I'm not as proficient in Golang as I am in Python now, I find it to be a very enjoyable language to code with. Most of my recent projects have been written in Golang, and I'm getting the hang of it. I tried to make the best decisions I could and structure the code as well as possible. Of course, there's still room for improvement, which I'll address in future updates.

Now I have a more maintainable website that can scale much better. It uses a real database instead of a JSON file like the old site, and I can add many more features. Since I chose to go with Golang, I made the "tradeoff" of not using JavaScript at all, so all the rendering load falls on the server. But I believe it's a tradeoff that's worth it.

What's new

- UI/UX - I've designed a new logo and color palette for kycnot.me. I think it looks pretty cool and cypherpunk. I am not a graphic designer, but I think I did a decent job, and I put a lot of thought into making it pleasant!

- Point system - The new point system provides more detailed information about the listings, and can be expanded to cover additional features across all services. Anyone can request a new point!

- ToS Scraper - I've implemented a powerful automated terms-of-service scraper that collects all the ToS pages from the listings. It saves you the hassle of reading the ToS by listing the lines that look suspiciously related to KYC/AML practices. This is still in development and it will surely improve, but it works pretty well right now!

- Search bar - The new search bar allows you to easily filter services. It performs a full-text search on the Title, Description, Category, and Tags of all the services. Looking for VPN services? Just search for "vpn"!

- Transparency - To be more transparent, all discussions about services now take place publicly on GitLab. I won't be answering any e-mails (an auto-reply will prompt you to write to the corresponding GitLab issue). This ensures that all service-related matters are publicly accessible and recorded. Additionally, there's a real-time audits page that displays database changes.

- Listing Requests - I have upgraded the request system. The new form allows you to directly request services or points without any extra steps. In the future, I plan to enable requests for specific changes to parts of the website.

- Lightweight and fast - The new site is lighter and faster than its predecessor!

- Tor and I2P - At last! kycnot.me is now officially on Tor and I2P!

How?

This rewrite has been a labor of love. In the end, I've been working on it for more than 3 months now. I don't have a team, so I work by myself in my free time, but I find great joy in helping people on their private journey with cryptocurrencies. Making it easier for individuals to use cryptocurrencies without KYC is a goal I am proud of!

If you appreciate my work, you can support me through the methods listed here. Alternatively, feel free to send me an email with a kind message!

Technical details

All the code is written in Golang, and the website uses the chi router for routing. I also use BigCache for caching database requests. There is zero JavaScript, so all the rendering load falls on the server. This means it needed to be efficient enough not to drown with a few users, since the old site was reporting about 2M requests per month on average (note that these are not unique users).

The database runs on MariaDB, using gorm as the ORM. This is more than enough for this project. I started working with an sqlite database, but I ended up migrating to MariaDB since it works better with JSON.

The scraper uses chromedp combined with a series of keywords, regex, and other logic. It runs every 24h and scrapes all the services. You can find the scraper code here.
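To make the "keywords plus regex" idea concrete, here is a minimal sketch in Python (not the site's Go code, and not the actual scraper). The keyword list, the function name `flag_tos_lines`, and the sample text are all assumptions for illustration; the post does not say which terms the real scraper looks for.

```python
import re

# Hypothetical keyword list; the real scraper's keywords are not given in the post.
KYC_PATTERN = re.compile(
    r"\b(kyc|aml|know your customer|anti[- ]money laundering|"
    r"identity verification|proof of identity)\b",
    re.IGNORECASE,
)


def flag_tos_lines(tos_text: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs that mention KYC/AML-related terms."""
    flagged = []
    for number, line in enumerate(tos_text.splitlines(), start=1):
        stripped = line.strip()
        if stripped and KYC_PATTERN.search(stripped):
            flagged.append((number, stripped))
    return flagged


if __name__ == "__main__":
    sample = (
        "Welcome to ExampleSwap.\n"
        "We may request identity verification at any time.\n"
        "Fees are shown before you confirm.\n"
        "Transactions may be held for AML review."
    )
    for number, line in flag_tos_lines(sample):
        print(f"{number}: {line}")
```

A plain keyword match like this will produce false positives (a ToS that says "we never require KYC" is flagged too), which is presumably why the post mentions "other logic" on top of it.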

The frontend is written using Golang Templates for the HTML, and TailwindCSS plus DaisyUI for the CSS classes framework. I also use some plain CSS, but it's minimal.

The request form is the only part of the project that requires JavaScript to be enabled. It is needed for parsing some form fields that are a bit complex and for the "captcha", which is a simple Proof of Work that runs in your browser, intended to prevent spam. For this, I use mCaptcha.
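For readers unfamiliar with Proof-of-Work captchas, the general idea can be sketched as follows. This is a generic hashcash-style illustration in Python, not mCaptcha's actual algorithm or API; the `challenge:nonce` format, the function names, and the difficulty value are assumptions.

```python
import hashlib
from itertools import count


def _meets_target(challenge: str, nonce: int, difficulty_bits: int) -> bool:
    """True if sha256(challenge:nonce) has at least `difficulty_bits` leading zero bits."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return int.from_bytes(digest, "big") < (1 << (256 - difficulty_bits))


def solve(challenge: str, difficulty_bits: int) -> int:
    """Client side: brute-force a nonce. Cost doubles with each extra difficulty bit."""
    for nonce in count():
        if _meets_target(challenge, nonce, difficulty_bits):
            return nonce


def verify(challenge: str, nonce: int, difficulty_bits: int) -> bool:
    """Server side: a single hash is enough to check the client's work."""
    return _meets_target(challenge, nonce, difficulty_bits)


if __name__ == "__main__":
    nonce = solve("form-token-123", 12)  # hypothetical challenge string
    print("nonce:", nonce, "valid:", verify("form-token-123", nonce, 12))
```

The asymmetry is the point: the browser spends many hash attempts per submission, while the server verifies with one, which makes bulk spam expensive without asking the user to solve a puzzle.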

-

@ a9e24cc2:597d8933

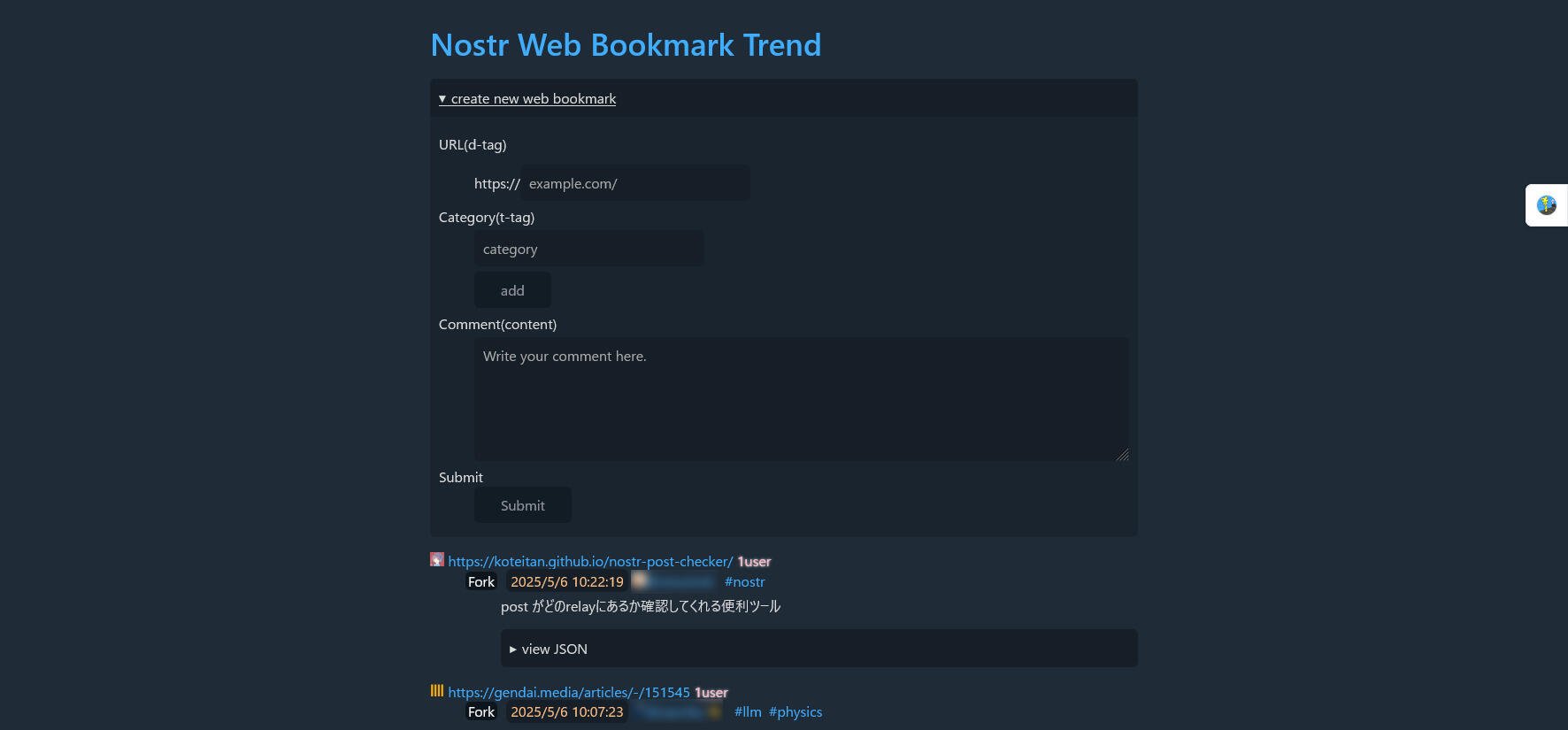

2025-05-07 01:06:44

@ a9e24cc2:597d8933

2025-05-07 01:06:44𝐌𝐄𝐒𝐒𝐀𝐆𝐄 BlackHat_Nexus 𝐅𝐎𝐑 𝐀𝐍𝐘 𝐊𝐈𝐍𝐃 𝐎𝐅 𝐒𝐄𝐑𝐕𝐈𝐂𝐄 𝐑𝐄𝐂𝐎𝐕𝐄𝐑 𝐘𝐎𝐔𝐑 𝐀𝐂𝐂𝐎𝐔𝐍𝐓Fast, Available and Reliable for any of the following services 🤳 Recovery of lost funds🤳 Facebook Hack🤳 WhatsApp Hack 🤳 Instagram Hack🤳 Spying🤳 Windows Hacking🤳 Recover lost wallet 🤳 Credit score trick 🤳 Recover Password🤳 Gmail Hack🤳 SnapChat Hacking 🤳 Cellphone Monitoring 🤳 Tik Tok Hack🤳 Twitter Hack🤳 Lost Phone Tracking🤳 Lost IaptopTracking🤳 Lost Car Tracking🤳 Cloning WhatsApp🤳 Cryptocurrency Wallet🤳 Hacking🤳 Iphone unlock 🤳 Got banned 🤳 Private Number available🤳 Telegram hacking 🤳 Websites hacking 🤳 Hack University 🤳 IOS and Android hack 🤳 Wifi Hacking 🤳 CCTV hacking🤳 Hack Bot Game 🤳 Free fire hack 🤳 Changing of school grades 🤳 Cards 💳hackingNo 🆓 services 🚫WhatsApp +1 3606068592Send a DM https://t.me/BlackHat_Nexus@BlackHat_Nexus

-


-

@ 7bdef7be:784a5805

2025-04-02 12:37:35

The following script tries, using nak, to find the last ten people who have followed a `target_pubkey`, sorted by most recent. It's possible to shorten `search_timerange` to speed up the search.

```
#!/usr/bin/env fish

# Target pubkey we're looking for in the tags
set target_pubkey "6e468422dfb74a5738702a8823b9b28168abab8655faacb6853cd0ee15deee93"

set current_time (date +%s)
# Look back 600 seconds (10 minutes); for reference, 24 hours = 86400 seconds
set search_timerange (math $current_time - 600)

# Authors of recent kind 3 (contact list) events that tag the target pubkey
set pubkeys (nak req --kind 3 -s $search_timerange wss://relay.damus.io/ wss://nos.lol/ 2>/dev/null | \
    jq -r --arg target "$target_pubkey" '
        select(. != null and type == "object" and has("tags")) |
        select(.tags[] | select(.[0] == "p" and .[1] == $target)) |
        .pubkey
    ' | sort -u)

if test -z "$pubkeys"
    exit 1
end

set all_events ""
set extended_search_timerange (math $current_time - 31536000) # One year

for pubkey in $pubkeys
    echo "Checking $pubkey"
    # Fetch up to 5 contact lists by this author from the last year that mention the target
    set events (nak req --author $pubkey -l 5 -k 3 -s $extended_search_timerange wss://relay.damus.io wss://nos.lol 2>/dev/null | \
        jq -c --arg target "$target_pubkey" '
            select(. != null and type == "object" and has("tags")) |
            select(.tags[][] == $target)
        ' 2>/dev/null)

    # Keep the author only if exactly one matching event was found
    set count (echo "$events" | jq -s 'length')
    if test "$count" -eq 1
        set all_events $all_events $events
    end
end

if test -n "$all_events"
    echo -e "Last people following $target_pubkey:"
    echo -e ""

    # Deduplicate by event id and sort newest first
    set sorted_events (printf "%s\n" $all_events | jq -r -s '
        unique_by(.id) |
        sort_by(-.created_at) |
        .[] | @json
    ')
    for event in $sorted_events
        set npub (echo $event | jq -r '.pubkey' | nak encode npub)
        set created_at (echo $event | jq -r '.created_at')
        # BSD date (macOS) and GNU date take the timestamp differently
        if test (uname) = "Darwin"
            set follow_date (date -r "$created_at" "+%Y-%m-%d %H:%M")
        else
            set follow_date (date -d @"$created_at" "+%Y-%m-%d %H:%M")
        end
        echo "$follow_date - $npub"
    end
end
```
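The filtering that the script delegates to `jq` can also be sketched offline in Python, which may make the logic easier to follow. This is not a port of the script: it assumes the events were already fetched (for example, saved from `nak req` output as parsed JSON), the function name `recent_followers` is made up, and it simplifies the heuristic by keeping each author's newest matching contact list instead of requiring exactly one match.

```python
def recent_followers(events, target_pubkey, limit=10):
    """Return (created_at, pubkey) for kind 3 events that tag target_pubkey, newest first."""
    newest = {}
    for event in events:
        if not isinstance(event, dict) or event.get("kind") != 3:
            continue  # only contact lists are relevant
        follows_target = any(
            len(tag) >= 2 and tag[0] == "p" and tag[1] == target_pubkey
            for tag in event.get("tags", [])
        )
        if not follows_target:
            continue
        author = event["pubkey"]
        # Keep only the newest matching contact list per author.
        if author not in newest or event["created_at"] > newest[author]["created_at"]:
            newest[author] = event
    ordered = sorted(newest.values(), key=lambda e: e["created_at"], reverse=True)
    return [(e["created_at"], e["pubkey"]) for e in ordered[:limit]]


if __name__ == "__main__":
    sample = [
        {"kind": 3, "pubkey": "a", "created_at": 100, "tags": [["p", "target"]]},
        {"kind": 3, "pubkey": "b", "created_at": 200, "tags": [["p", "other"], ["p", "target"]]},
    ]
    for created_at, pubkey in recent_followers(sample, "target"):
        print(created_at, pubkey)
```

Note that a contact list's `created_at` is the time the list was last published, so in both the script and this sketch the "follow date" is an approximation.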

-

@ 2183e947:f497b975

2025-03-29 02:41:34

Today I was invited to participate in the private beta of a new social media protocol called Pubky, designed by a bitcoin company called Synonym with the goal of being better than existing social media platforms. As a heavy nostr user, I thought I'd write up a comparison.

I can't tell you how to create your own accounts because it was made very clear that only some of the software is currently open source, and how this will all work is still a bit up in the air. The code that is open source can be found here: https://github.com/pubky -- and the most important repo there seems to be this one: https://github.com/pubky/pubky-core

You can also learn more about Pubky here: https://pubky.org/

That said, I used my invite code to create a pubky account and it seemed very similar to onboarding to nostr. I generated a private key, backed up 12 words, and the onboarding website gave me a public key.

Then I logged into a web-based client and it looked a lot like twitter. I saw a feed for posts by other users and saw options to reply to posts and give reactions, which, I saw, included hearts, thumbs up, and other emojis.

Then I investigated a bit deeper to see how much it was like nostr. I opened up my developer console and navigated to my networking tab, where, if this was nostr, I would expect to see queries to relays for posts. Here, though, I saw one query that seemed to be repeated on a loop, which went to a single server and provided it with my pubkey. That single query (well, a series of identical queries to the same server) seemed to return all posts that showed up on my feed. So I infer that the server "knows" what posts to show me (perhaps it has some sort of algorithm, though the marketing material says it does not use algorithms) and the query was on a loop so that if any new posts came in that the server thinks I might want to see, it can add them to my feed.

Then I checked what happens when I create a post. I did so and looked at what happened in my networking tab. If this was nostr, I would expect to see multiple copies of a signed message get sent to a bunch of relays. Here, though, I saw one message get sent to the same server that was populating my feed, and that message was not signed; it was a plaintext copy of my message.

I happened to be in a group chat with John Carvalho at the time, who is associated with pubky. I asked him what was going on, and he said that pubky is based around three types of servers: homeservers, DHT servers, and indexer servers. The homeserver is where you create posts and where you query for posts to show on your feed. DHT servers are used for censorship resistance: each user creates an entry on a DHT server saying what homeserver they use, and these entries are signed by their key.

As for indexers, I think those are supposed to speed up the use of the DHT servers. From what I could tell, indexers query DHT servers to find out what homeservers people use. When you query a homeserver for posts, it is supposed to reach out to indexer servers to find out the homeservers of people whose posts the homeserver decided to show you, and then query those homeservers for those posts. I believe they decided not to look up what homeservers people use directly on DHT servers because DHT servers are kind of slow, due to having to store and search through all sorts of non-social-media content, whereas indexers only store a simple db that maps each user's pubkey to their homeserver, so they are faster.

Based on all of this info, it seems like, to populate your feed, this is the series of steps:

- you tell your homeserver your pubkey

- it uses some sort of algorithm to decide whose posts to show you

- then looks up the homeservers used by those people on an indexer server

- then it fetches posts from their homeservers

- then your client displays them to you

To create a post, this is the series of steps:

- you tell your homeserver what you want to say to the world

- it stores that message in plaintext and merely asserts that it came from you (it's not signed)

- other people can find out what you said by querying for your posts on your homeserver
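The two flows above can be sketched as a toy model in Python. This only mirrors the steps as described in this post; it is not Pubky's code or API. The `Homeserver` class, the `build_feed` function, and the use of a plain list of authors in place of whatever selection logic the homeserver really uses are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Homeserver:
    """Toy homeserver: stores plaintext posts keyed by the claimed author."""
    name: str
    posts: dict = field(default_factory=dict)  # pubkey -> list of plaintext posts

    def create_post(self, pubkey, text):
        # Nothing is signed here; the server simply records who it says wrote the text.
        self.posts.setdefault(pubkey, []).append(text)

    def posts_by(self, pubkey):
        return list(self.posts.get(pubkey, []))


def build_feed(authors, indexer):
    """Look up each author's homeserver in the indexer, then fetch their posts from it."""
    feed = []
    for author in authors:
        server = indexer.get(author)  # indexer: pubkey -> Homeserver
        if server is None:
            continue  # no known homeserver for this author
        feed.extend((author, text) for text in server.posts_by(author))
    return feed


if __name__ == "__main__":
    hs_a, hs_b = Homeserver("hs-a"), Homeserver("hs-b")
    hs_a.create_post("alice_pubkey", "hello from alice")
    hs_b.create_post("bob_pubkey", "hello from bob")
    indexer = {"alice_pubkey": hs_a, "bob_pubkey": hs_b}
    for author, text in build_feed(["alice_pubkey", "bob_pubkey"], indexer):
        print(author, "->", text)
```

In this model nothing distinguishes a post the user created from one the homeserver appended to `posts` on its own, because the reader only ever sees the server's assertion of authorship.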

Since posts on homeservers are not signed, I asked John what prevents a homeserver from just making up stuff and claiming I said it. He said nothing stops them from doing that, and if you are using a homeserver that starts acting up in that manner, what you should do is start using a new homeserver and update your DHT record to point at your new homeserver instead of the old one. Then, indexers should update their db to show where your new homeserver is, and the homeservers of people who "follow" you should stop pulling content from your old homeserver and start pulling it from your new one. If their homeserver is misbehaving too, I'm not sure what would happen. Maybe it could refuse to show them the content you've posted on your new homeserver and keep making up fake content on your behalf that you never posted, and maybe the people who follow you would never learn you're being impersonated or have moved to a new homeserver.
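For contrast, here is a minimal sketch of why a relay cannot quietly alter a nostr post. As I understand NIP-01, an event's `id` is the SHA-256 of a fixed JSON serialization of its fields, and the author's signature is made over that id. The sketch below computes only the id; the Schnorr signature check is omitted because Python's standard library has no secp256k1 support, the pubkey is a placeholder, and Python's escaping of unusual control characters may differ from NIP-01's rules.

```python
import hashlib
import json


def nostr_event_id(pubkey, created_at, kind, tags, content):
    """Hash of [0, pubkey, created_at, kind, tags, content] serialized without whitespace."""
    serialized = json.dumps(
        [0, pubkey, created_at, kind, tags, content],
        separators=(",", ":"),
        ensure_ascii=False,
    )
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    pubkey = "ab" * 32  # placeholder 32-byte hex pubkey, not a real account
    original = nostr_event_id(pubkey, 1700000000, 1, [], "hello world")
    tampered = nostr_event_id(pubkey, 1700000000, 1, [], "hello w0rld")
    print(original != tampered)
```

Because the signature covers the id and the id covers the content, any edit by a server yields a different id that the original signature no longer matches. An unsigned plaintext post has no equivalent check.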

John also clarified that there is not currently any tooling for migrating user content from one homeserver to another. If pubky gets popular and a big homeserver starts misbehaving, users will probably need such a tool. But these are early days, so there aren't that many homeservers, and the ones that exist seem to be pretty trusted.

Anyway, those are my initial thoughts on Pubky. Learn more here: https://pubky.org/

-

@ 2dd9250b:6e928072

2025-03-22 00:22:40

I recently saw a post where the person says that the ending of the film O Doutrinador (2019) makes no sense because even with the protagonist blowing up the Palácio dos Três Poderes, it doesn't end corruption in Brazil.

Progressives can't read and can't interpret texts correctly. The ending of O Doutrinador has nothing to do with that; it has to do with the relationship between the Hero and his City.

In comic books there is a bond between the city and the superhero. Gotham City, for example, creates Batman. This is shown in The Batman (2022) and in The Dark Knight, when that boy at the end tells Batman not to run away, because he wanted to see Batman again. And Commissioner Gordon says that "Batman is what the city of Gotham needs."

The Dark Knight Rises shows Gotham being taken over by corruption and by Bane's ideology. The City withers into immorality and Bruce, watching from prison as the city is destroyed, decides that Batman has to return, because if Gotham is destroyed, Batman is destroyed with it. And that gives him the strength to escape that pit and come back to save Gotham.

This is also shown in Daredevil. In the Daredevil series, Matt Murdock always says he has to defend Hell's Kitchen, and that Fisk will not take over the city and do whatever he wants in it. In the third season this becomes more evident in the final fight at Fisk's mansion, where Matt shouts that now the whole city will know what he did; the city will see the evil he is for Hell's Kitchen, because we know Fisk did everything to discredit Daredevil's image in the eyes of the citizens. So what happens at the end of the film O Doutrinador doesn't mean he is ending corruption when he blows up Congress; he is practically interrupting the system's cycle, putting a fault in its gears.

When you hear about Brasília, you think of the politicians' corruption, of where the spree happens, of where the corrupt divert money collected from taxes, taxes that are centralized in the federal government (the União). So when you hear people talk about Brasília, you always think that the people who live there live alongside everything rotten that happens in Brazil.

So when the Doutrinador blows everything up there, he is basically destroying the mechanism that dirties Brasília. He is doing this in that city. Because the symbol of the city is exactly that: the farce that in that place the people will be heard and justice will be done. He is destroying the ideology that the State protects us and gives us security, health care, and education. Because in truth the State exists only to privilege politicians, high-ranking public servants, their families, and friends, while the people suffer to sustain the political elite. The protagonist Miguel understood this when his daughter died in the SUS queue.

-

@ a39d19ec:3d88f61e

2025-03-18 17:16:50

Now that the German federal regime has resolved on Germany's ruin, which will very probably be "financed" with the tool of money printing, so many thoughts on expanding the money supply came to me that, for once, I wrote them down.

From a classical economic perspective, expanding the money supply always leads to price increases, because more money in circulation meets a limited quantity of goods. This can be analyzed in several steps:

1. Quantity theory of money

The classical equation of the quantity theory of money is:

M • V = P • Y

where:

- M is the money supply,

- V is the velocity of money,

- P is the price level,

- Y is real economic output (GDP).

If M rises while V and Y stay constant, P must rise, that is, inflation results.
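As a worked illustration of that last step, the equation can be solved for the price level and evaluated with made-up numbers. The figures below ($M_0 = 1000$, $V = 2$, $Y = 500$, and a 10% increase in $M$) are purely hypothetical and serve only to show the arithmetic under the stated assumption that $V$ and $Y$ are constant.

```latex
\begin{align*}
  M V = P Y \quad &\Longrightarrow \quad P = \frac{M V}{Y} \\[4pt]
  P_0 &= \frac{M_0 V}{Y} = \frac{1000 \cdot 2}{500} = 4 \\[4pt]
  P_1 &= \frac{M_1 V}{Y} = \frac{1100 \cdot 2}{500} = 4.4 \\[4pt]
  \frac{P_1}{P_0} &= \frac{M_1}{M_0} = 1.10
\end{align*}
```

With $V$ and $Y$ held fixed, the price level moves in exact proportion to the money supply: a 10% larger $M$ gives a 10% higher $P$. If $V$ or $Y$ changes at the same time, this proportionality no longer holds.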

2. The quantity of goods remains limited

The quantity of goods and services actually produced usually grows only slowly compared with the expansion of the money supply. If the money supply rises faster than the quantity of goods produced, more money is available for the same quantity of goods, and prices rise.

3. Expectation effects and speculation

If businesses and households expect more money to be in circulation because central planning willed it so, they can anticipate rising prices. Businesses raise their prices in advance, and workers demand higher wages. This can set off a self-reinforcing spiral.

4. International perspective

An increased money supply can devalue the currency if other countries keep their monetary policy stable. A weaker currency makes imports more expensive, which in turn drives price increases.

5. Criticism of the pure money-supply theory

For completeness, it must be mentioned that most modern economists, arguing on the state's behalf, hold that inflation depends not only on the money supply but also on the demand for money (for example, in an economic crisis). Nevertheless, historical experience shows that an uncontrolled expansion of the money supply always leads to price increases in the long run, as in the hyperinflation of the Weimar Republic or in Zimbabwe.

-

@ 306555fe:fd7fdf12

2025-05-07 02:12:08

{"contract_id":"f5523a8f242339bdd8585e5491a82c2d9a72ff51f7fadc3f7c6f766afe9c0f19","title":"T2- Freelance Development Agreement","content":"# Freelance Development Agreement\n\n## Parties\n\nThis Freelance Development Agreement (the \"Agreement\") is entered into as of [DATE] by and between:\n\nClient: [CLIENT NAME], with an address at [CLIENT ADDRESS] (\"Client\")\n\nDeveloper: [DEVELOPER NAME], with an address at [DEVELOPER ADDRESS] (\"Developer\")\n\n## Services\n\nDeveloper agrees to provide the following services to Client (the \"Services\"):\n\n1. Design and develop a web application according to the specifications outlined in Attachment A.\n2. Provide regular progress updates on a weekly basis.\n3. Deliver the completed project by the deadline specified in the Timeline section.","version":1,"created_at":1746583812,"signers_required":2,"signatures":[{"pubkey":"306555fee4433582b32f6d46f11b644da33896595016893d7da18c75fd7fdf12","sig":"8ad3a9e2a4ef70515853e051882ab11ebc7d56f15be46deb1520d6292711bc4eeb29c723c46a251c98134d06b8950931d19f61f6f8836e4932f0d6682fe8159e","timestamp":1746583928}]}

-

@ 8671a6e5:f88194d1

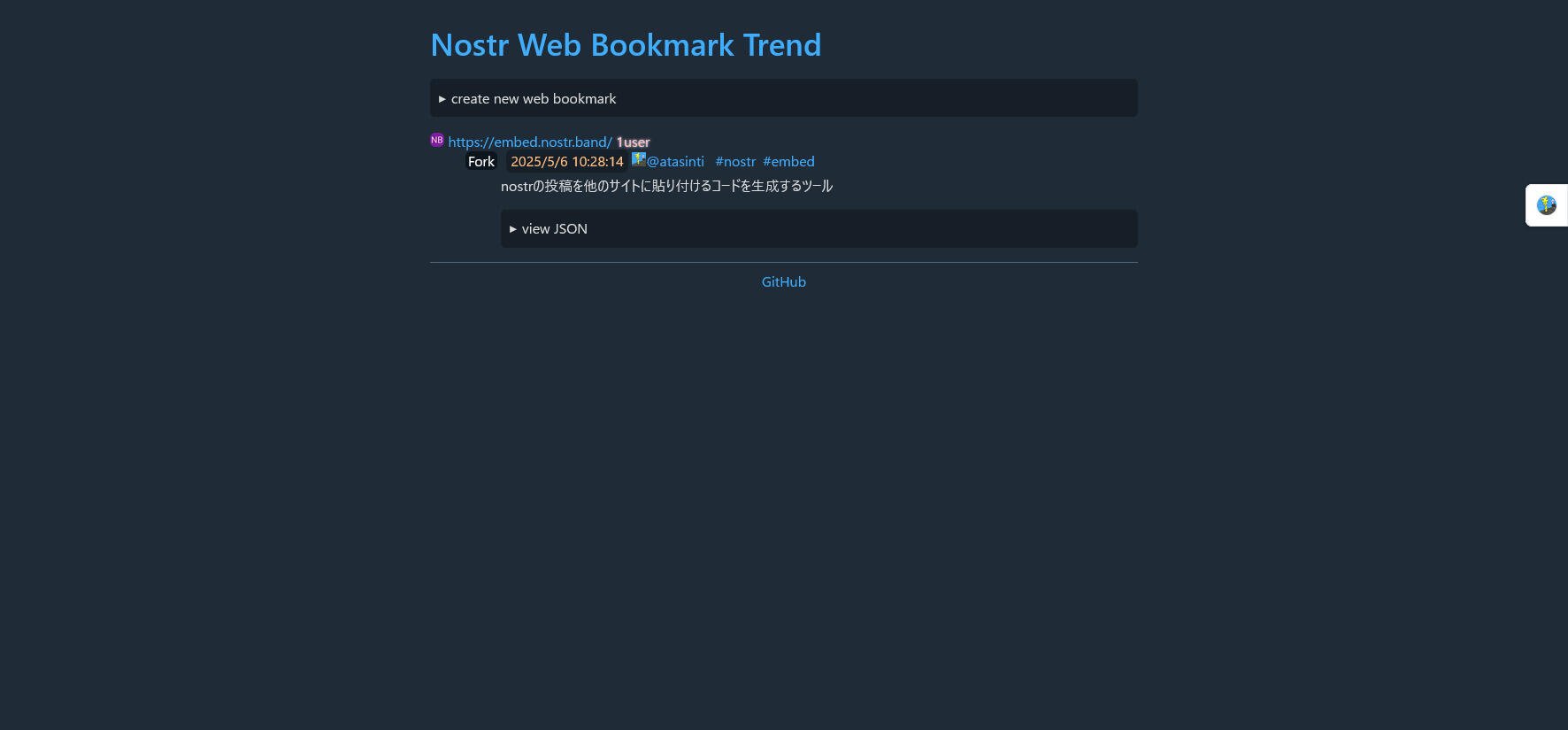

2025-05-06 16:23:25

"I tried pasting my login key into the text field, but no luck, it just wouldn't work. Turns out, the login field becomes completely unusable whenever the on-screen keyboard shows up on my phone. So either no one ever bothered to test this on a phone, or they did and thought, ‘Eh, who needs to actually log in anyway?’."

### Develop and evolve

Any technology or industry at the forefront of innovation faces the same struggle. Idealists, inventors, and early adopters jump in first, working to make things usable for the technical crowd. Only later do the products begin to take shape for the average user.

Bitcoin’s dropping the Ball on usability (and user-experience)

First, we have to acknowledge the progress we've made. Bitcoin has come a long way in terms of usability—no doubt about it. Even if I still think it’s bad, it’s nowhere near as terrible as it was ten or more years ago. The days of printing a paper wallet from some shady website and hoping it would still work months or years later are behind us. The days of buggy software never getting fixed are mostly over.

The Bitcoin technology itself has progressed through many BIPs (Bitcoin Improvement Proposals), and combined with a growing number of apps, devs, websites, and related networks (Liquid, Lightning, Nostr, ...), we can say the ecosystem is strong and finding its way. It is alive and expanding, and technically, things are clearly working. The problem is that we’re still building with a mindset where developers and project managers consider usability but don’t truly care about it in practice. They don’t lead with it. (Yes, there are always exceptions.)

All that progress looks cool. When you see the latest releases of hardware wallets, software wallets, exchanges, nostr clients, and services built purely for bitcoin, you usually think that we've progressed nicely. But I want to focus on the downside of all these shiny tools. Because if Bitcoin has made it this far, it’s mostly thanks to people who deeply understand its value and are stubborn enough to push through the friction. They don’t give up when the user experience sucks.

In my opinion, many bitcoiners have completely lost perspective on the software front. We could have been so much further ahead, and we aren't, because some of the most important components on the user-facing side of Bitcoin (arguably the most important part) haven’t kept pace with its popularity and potential growth. That should be a great concern, because Bitcoin is meant to be open and accessible. The blockchain is public. This is supposed to be for everyone. It is an open ledger technology, so in theory everything is user-facing to one extent or another. Yet on that front we fail to make the glue stick. We’re easily amused by the tools we create, which often contain hurdles we can’t see or feel, while users reject them after five seconds, tops.

We haven’t come much further yet because we’ve ignored usability at its core (pun intended).

I’m not talking about usability in the “it works on my machine” sense. I’m talking about usability that meets the standard of modern apps. Think Spotify, Instagram, Uber, Gmail. Products that ordinary people use without reading a manual or digging through forums.

That’s the bar. We’re still far from it.

Bad UX scares your grandma away

… and that’s how many bitcoiners apparently like it.

From here on, when I say usability, I’m using it as an umbrella term. For me, it covers user experience, user interface, and real-life, full-cycle testing, from onboarding a brand new user to rolling out a new version of the app. And oh boy, our onboarding is so horrible. (“Hey, wanna try bitcoin? Here’s an app that takes 4 minutes or more to get through, but wait, you’ll have to install a plugin, or wait, I’ll send you an on-chain transaction…”)

Take a look at the listings on Bitvocation, an excellent job board for Bitcoiners and related projects. You’ll quickly notice a pattern: almost no companies are hiring software testers. It’s marketing, more marketing, some sales, and of course, full-stack developers. But … No testers.

Because testing has become something that’s often skipped or automated in a hurry. Maybe the devs run a test locally to confirm that the feature they just built doesn’t crash outright. That’s it. And if testing does happen at a company, it’s usually shallow—focused only on the top five percent of critical bugs. The finer points that shape real user experience, like button placement, navigation flow, and responsiveness, are dumped on “the community.”

Which leads to some software being rushed out to production, and only then do teams discover how many problems exist in the real world. If there’s anyone left to care that is, since most teams are scattered all over the world and get paid by the hour by some VC firm on a small runway to a launch date.

This has real life consequences I’ve seen for myself with new users. Like a lightning wallet having a +5 minute onboarding time, and a fat on-screen error for the new users, or a hardware wallet stuck in an endless upgrade loop, just because nobody tested it on a device that was “old” (as in, one year old).

The result is clear: usability and experience testing are so low on the priority list, they may as well not exist. And that’s tragic, because the enthusiasm of new users gets crushed the moment they run into what I call Linux’plaining.

That’s when something obvious fails, like a lookup command that’s copied straight from their own help documentation but doesn’t work, and the answer you get as a user is something like: “Yeah, but first you have to…” followed by an explanation that isn’t mentioned anywhere in the interface or documentation. You were just supposed to know. No one updates the documentation, and no one cares, because most of the projects are very temporary or don’t really care whether they succeed or not: they’re bitcoiners, and bitcoin always wins. Just like PGP was always super cool and good, and users should just be smarter.

Lessons from the past usability disasters

We can always learn from the past, especially when its precedents are still echoing through the systems we use today.

So here goes, some examples from the legacy / fiat industry:

Take Lotus Notes, for example: once a titan in enterprise communication software, it managed to capture about 145 million mailboxes. But its downfall is an example of what happens when you keep ignoring real-life user needs and fail to evolve with the market. Software like that doesn’t just fade, it collapses under the weight of its own inertia and bloat. You might think bitcoin can’t suffer that fate, and yes, we of course don’t have a competitor in the market (hard money is hard money, not a mailbox or office software provider). But we can erode trust to the extent that it becomes LotusNotes’d.

Its archaic 1990s interface came with clunky navigation and a chaotic document management system. Users got frustrated fast—basic tasks took too long. Picture this: you're stuck in a cubicle, trying to find the calendar function in Lotus Notes while a giant office printer hisses and spits out stacks of paper behind you. The platform never made the leap to modern expectations. It failed to deliver proper mobile clients and clung to outdated tech like LotusScript and the Domino architecture, which made it vulnerable to security issues and incompatible with the web standards of the time. By 2012, IBM pulled the plug on the Lotus brand, as businesses moved en masse to cloud-based alternatives.

Another kind of usability failure has plagued PGP1 (and still does so after 34 years). PGP (Pretty Good Privacy) is a time-tested and rock-solid method for encryption and key exchange, but it’s riddled with usability problems, especially for anyone who isn’t technically inclined.

Its very nature and complexity are already steep hurdles (and yes, you can’t make it fully easy without compromising how it’s supposed to work—granted). But the real problem? Almost zero effort has gone into giving even the most eager new users a manageable learning curve. That neglect slowly killed off any real user base—except for the hardcore encryption folks who already know what they’re doing.

Ask anyone in a shopping street or the historic center of your city if they’ve heard of PGP. And on the off chance someone knows it’s not a trendy new fast-food joint called “Perfectly Grilled Poultry,” the odds of them having actually used it in the past six months are basically zero, unless you happen to bump into that one neckbeard guy in his 60s wearing a stained Star Wars T-shirt named Leonard.

The builders of PGP made one major mistake: they never treated usability as a serious design goal (that’s normal for people knee-deep in encryption, I get that, it’s the way it is). PGP is fantastic in itself. Other companies and projects tried to build around it, but while they stumbled, tools like Signal and ProtonMail stepped in, offering the same core features of encryption and secure messaging, minus the headache. They delivered what PGP never could: powerful functionality wrapped in something regular people can actually use. Now, we’ve got encrypted communication flowing through apps like Signal, where all the complex tech is buried so deep in the background, the average user doesn’t even realize it’s there. ProtonMail even went one step further, integrating PGP so cleanly that users never need to exchange keys or understand the cryptography behind it all, yet still benefit from bulletproof encryption.

There’s no debate—this shift is a good thing. History shows that unusable software fades into irrelevance. Whether due to lack of interest, failure to reach critical mass, or a competitor swooping in to eat market share, clunky tools don’t survive. Now, to be clear, Bitcoin doesn’t have to worry about that kind of threat. There’s no real competition when it comes to hard money. Unless, of course, you genuinely believe that flashy shitcoins are a viable alternative—in which case, you might as well stop reading here and go get yourself scammed on the latest Solana airdrop or whatever hype train’s leaving the station today for the degens.

The main takeaway here is that Bitcoin must avoid becoming the next Lotus Notes, bloated with features but neglected by users, or the next PGP, sidelined by its own lack of usability. That kind of trajectory would erode trust, especially if usability and onboarding keep falling behind. And honestly, we’re already seeing signs of this in bitcoin. User adoption in Europe, especially in countries like Germany, is noticeably lagging. The introduction of the EU’s MiCA regulations isn’t helping either. Most of the companies that were actually pushing adoption are now either shutting down, leaving the EU, or jumping through creative loopholes just to stay alive. And the last thing on anyone’s mind is improving UX. It takes time, effort, and specialized people to seriously think through how to build this properly, from the beginning, with ease of use and onboarding in mind. That’s a luxury most teams can’t or won’t prioritize right now, understandably, since the lack of funds is still a major issue within the bitcoin space. (For people sitting on hard money, there’s surprisingly little money flowing into useful projects that aren’t hyped-up empty boxes.)

The number of nodes being set up by end users worldwide isn’t exactly skyrocketing either. Sure, there’s some growth but let’s not overstate it. Based on Bitnodes’ snapshots taken in March of each year, we’re looking at:

- 2022: around 10500

- 2023: around 17000

- 2024: around 18500

- 2025: around 21000

(I know there are different methods of measuring these, like read-only nodes; the % change is roughly the same nonetheless.)
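To put the "% change" remark in numbers, here is a minimal sketch that computes the year-over-year growth from the approximate March figures quoted above. The counts are the rounded values from the text, not fresh Bitnodes data, and the function name `yearly_growth` is just an illustrative choice.

```python
# Approximate node counts quoted in the text (Bitnodes snapshots, March of each year).
node_counts = {2022: 10500, 2023: 17000, 2024: 18500, 2025: 21000}


def yearly_growth(counts):
    """Return {year: percent change versus the previous year in the series}."""
    years = sorted(counts)
    return {
        year: round((counts[year] - counts[prev]) / counts[prev] * 100, 1)
        for prev, year in zip(years, years[1:])
    }


growth = yearly_growth(node_counts)
for year, pct in growth.items():
    print(f"{year}: {pct:+.1f}% versus the year before")
```

With these rounded inputs, the big jump sits between 2022 and 2023, and the two years after that show much smaller steps.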

In my opinion, if we had non-clunky software that was actually released with proper testing and usability in mind, we could’ve easily doubled those node numbers. A bad user experience with a wallet spreads fast—and brings in exactly zero new users. The same goes for people trying to set up a miner or spin up a node, only to give up after a few frustrating steps. Sure, there are good people out there making guides and videos2 to help mitigate those hurdles, and that helps. But let’s be honest: there’s still very little “wow” factor when average users interact with most Bitcoin software. Almost every time they walk away, it’s because of one of two things—usability issues or bugs.

For the record: if a user can’t set up a wallet because the interface is so rotten or poorly tested that they don’t know where to click or how to even select a seed word from a list, then that’s a problem. That’s a bug. Argue all you want: sure, it’s not a code-level bug and no, it’s not a system crash. But it is a usability failure. Call it onboarding friction, UX flaw, whatever fits your spreadsheet or the Circus Maximus of failures in your ticketing system. Bottom line: if your software doesn’t help users accomplish its core purpose, it’s broken. It’s a bug. Pretending it’s something a copywriter or marketing team can fix is pure deflection. The solution isn’t to relabel the problem, 1990s telecom-style, just to avoid dealing with it. It’s to actually sit down, think, collaborate, go through the issue, and get real solutions out. “No, it’s not an issue, that’s how it works”, like someone from a failing (and by now defunct) wallet told me once, is not a solution.

You got 21 seconds

The user can’t be onboarded because your software has an “issue”? In my book, that’s a bug. The usual response when you report it? “Yeah, that’s not a priority.” Well, guess what? It actually is a priority. All these small annoyances, hurdles, and bits of BS still plague this industry, and they make the whole experience miserable for regular people trying it out for the first time. The first 21 seconds (yeah, you see what I did there) are the most important when someone opens new software. If it doesn’t click right away—if they’re fiddling with sats or dollar signs, or hunting for some hidden setting buried behind a tiny arrow—it’s game over. They’re annoyed. They’re gone.

And this is exactly why we’re seeing a flood of shitcoin apps sweeping new users off their feet with "faster apps" or "nicer designs" apps that somehow can afford the UI specialists and slick, centralized setups to spread their lies and scams.

I hate to say it, but the Phantom wallet for example, for the Solana network, loaded with fake airdrop schemes and the most blatant scams — has a far better UX than most Bitcoin wallets and Lightning Wallets. Learn from it. Download that **** and get to know what we do wrong and how we can learn from the enemy.

That’s a hard truth. So, instead of just screaming “Uh, shitcooooin!” (yes, we know it is), maybe we should start learning from it. Their apps are better than ours in terms of UI and UX. They attract more people 5x faster (we know that’s also because of the fast gains and retardation playing with the marketing) but we can’t keep ignoring that. Somehow these apps attract more than our trustworthiness, our steady, secure, decentralized hard money truth.

It’s like stepping into one of the best Italian restaurants in town—supposedly. But then the menu’s a mess, the staff is scrolling on their phones, and something smells burnt coming from the kitchen. So, what do you do? You walk out. You cross the street to the fast food joint and order a burger and fries. And as you’re walking out with your food, someone from the Italian place yells at you: “Fast food is bad!” ”Yeah man I know, I wanted a nice Spaghetti aglio e olio, but here I am, digesting a cheeseburger that felt rather spongy.” (the problem is so gone so deep now, that users just walk past that Italian restaurant, don’t even recognize it as a restaurant because it doesn’t have cheeseburgers).

Fear of the dark

Technical people, not marketeers, built bitcoin. It’s built on hundreds of small building blocks that interacted over time to produce the bitcoin network and its ever-evolving value. At one point David Chaum cooked up eCash, using blind signatures to let people send digital money anonymously, except it was still stuck on clunky centralized servers. Go back even further, to the 1970s, when Diffie, Hellman, and Rivest introduced public-key cryptography: the magic sauce that gave us secure digital signatures and authentication, making sure your messages stayed private and tamper-proof.

Fast forward to the 1990s, when peer-to-peer started to take off and decentralized networks got started. Adam Back’s Hashcash in ‘97 used proof-of-work to fight email spam, and the cypherpunks were all about sticking it to the man with privacy-first, decentralized systems, including inventions like human-readable 128-bit keys3. We started to swap files over p2p networks and later, torrents.
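For readers who have never seen proof-of-work up close, the idea behind Hashcash fits in a few lines. This is a simplified sketch, not the real Hashcash stamp format: it assumes SHA-256 rather than the hash the original tool used, and the names `pow_hash`, `mint` and `verify`, the challenge string and the difficulty value are all made up for illustration.

```python
import hashlib
from itertools import count


def pow_hash(challenge: str, nonce: int) -> int:
    """Hash the challenge together with a nonce and return the digest as an integer."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return int.from_bytes(digest, "big")


def mint(challenge: str, difficulty_bits: int = 12) -> int:
    """Try nonces one by one until the hash has `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    for nonce in count():
        if pow_hash(challenge, nonce) < target:
            return nonce


def verify(challenge: str, nonce: int, difficulty_bits: int = 12) -> bool:
    """Checking a stamp costs a single hash, no matter how long minting took."""
    return pow_hash(challenge, nonce) < (1 << (256 - difficulty_bits))


# Hypothetical usage: the sender "pays" with CPU time, the receiver checks cheaply.
stamp = mint("alice@example.com")
print(stamp, verify("alice@example.com", stamp))
```

The asymmetry is the whole point: producing a stamp takes many attempts, checking it takes one, which is what made spam expensive and later made mining possible.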

All these parts—anonymous cash, encryption, and leaderless networks finally clicked into place when Satoshi Nakamoto poured them into a chain of blocks, built on an ingenious “time-stamping” system: the timechain, or blockchain if you prefer. And just like that, Bitcoin was born—a peer-to-peer money system that didn’t need middlemen and actually worked without any central servers.

So yes, it’s only natural that Bitcoin and its many tools, born from math, obscurity, and cryptography, aren’t exactly always user-interface darlings. That’s also its charm, for me in any case, as the core is robust and valuable beyond belief. That’s why we’d love to see more use, more adoption.

But that doesn’t mean we can’t squash critical “show-stopper” bugs before releasing bitcoin-related software. And it sure as hell doesn’t mean we should act like jerks when a user points out something’s broken, confusing, or just doesn’t meet expectations. We can’t be complacent either about our role as builders of the next generations, as the core is hard money, and it would be a fatal mistake for the world to see it being used only for some rockstars from Wall Street and their counterparts to store their debt laden fiat. We can free people, make them better, make them elevate themselves. And yet, the people we try to elevate, we often alienate. All because we don’t test our stuff well enough. We should be so good, we blow the banking apps away. (they’re blowing themselves out of the market luckily with fiat “features” and overly over the top use of “analytics” to measure your carbon footprint for example).

We should be so damn professional that someone using Bitcoin apps for a full year wouldn’t even notice any bugs, because there wouldn’t be much to get annoyed by.

So… we have to do better. I’ve seen it time and time again — on Lightning tipping apps, Nostr plugins, wallets, hardware wallets, even metal plates we can screw up somehow … you name it. “It works on my machine”, isn’t enough anymore! Those days are over.

Even apps built with solid funding and strong dev and test teams, like fedi.xyz4, can miss the mark. The idea was good and the app itself ran fine, without too many hurdles or the usual bugs. But usability failed on a different front: there was just nothing meaningful to do in the app beyond poking around, chatting a bit, and sending a few sats back and forth. The communities it’s supposed to connect just aren’t there, or weren’t there “yet”.

It’s a beautifully designed application and a strong proof-of-concept for federated community funds. But then… nothing. No one I know uses it. Their last blog post was from the beginning of October 2024, which doesn’t bode well, given that I’m writing this more than 6 months later. That said, they got some great onboarding going, usually under 20 seconds, which proves it can be done right (even if it was all a front-end for a more complex backend).

As you can see, “usability” is a broad term, covering technical aspects and user interface, but also use cases. Even if you have a cool app that works really well and is well thought-out, users won’t use it if there’s no real substance. You can’t get that critical mass by waiting for customers to come in or communities to embrace it. They won’t, because most of these individuals already had past experiences with bitcoin apps or services, and there’s a reason for them not being on board already.

A lot of bitcoin companies build tools for new people. Never for the lapsed people, the persons that came in, thought of it as an investment or “a coin”… then left because of a bad experience or the price going down in fiat. All the while we have some software that usually isn’t so kind to new people, or causes loss of funds and time, even if they make just one little “mistake” of not knowing the system beforehand.

Bitcoin’s Moby Dick

Bitcoin itself has a big issue here. The user base could grow faster, and more robustly, if there wasn’t software that worked as a sort of repellent against users.

I especially see a younger and less tech-savvy audience absolutely disliking the software we have now. No matter if it’s Electrum’s desktop wallet (hardly the sexiest tool out there, although I like it myself, but it lacks some features), Sparrow, or any lightning wallet out there (save for WoS). I even saw people disliking Proton Wallet, which I personally thought of as something really slick, well-made and polished. But even that doesn’t cut it for many people, as the “account” and “wallet” system wasn’t clear enough for them. (You see, we all have the same bias: because we know bitcoin, we look at it from a perspective of “facepalm, of course it’s a wallet named ‘account’”, but when you sit next to a new user, it becomes clear that this is a hurdle. Please, Proton Wallet: name a wallet a wallet, not “account”. But most users already in bitcoin love what you’re doing.)

Naturally disliking usability

The same technically brilliant people who maintain Bitcoin and build its apps haven’t quite tapped into their inner Steve Jobs—if that person even exists in the Bitcoin space. Let’s be honest: the next iOS-style wow moment, or the kind of frictionless usability seen in Spotify or Instagram, probably won’t come from hardcore Bitcoin devs alone. In fact, some builders in the space seem to actively disregard—or even look down on—discussions about usability. Just mention names like Wallet of Satoshi (yes, we all know it’s a custodial frontend) or the need for smoother interactions with Bitcoin, and you’ll get eye-rolls or defensive rants instead of curiosity or openness.

Moving more towards a better user interface for things like Sparrow or Bitcoin Core for example, would bring all kinds of “bad things” according to some, and on top of that, bring in new users (noobs) that ask questions like: “Do you burn all these sats when I make a transaction?” (Yes, that’s a real one.)

I get the “usability sucks” gripe — fear of losing key features, dumbing things down, or opening the door to unwanted changes (like BIP proposals real bitcoiners hate) that tweak bitcoin to suit any user’s whim. Close to no one in bitcoin (really in bitcoin!) wants that, including me.

That fear is, however, largely unfounded, because Bitcoin doesn’t change without consensus. Any change that would undermine its core use or value proposition simply won’t make it through. And let’s be honest: most of the users who crave these “faster,” centralized alternatives (those drawn to slick apps, one-click solutions, and dopamine-driven UI) will either stick with fiat, ape into the shitcoin-of-the-month, or praise the shiny new CBDC once it drops (“much fast, much cool”). These degen types, chasing fiat gains and jackpot dreams, aren’t relevant to this story. No matter what we build for bitcoin, they’ll always love the fiat story and will always dislike bitcoin because it’s not a jackpot for them. (Honestly, why don’t they just gamble at a casino?)

People who fear that improving usability will somehow bring down the Bitcoin network are being a bit too paranoid—and honestly, they often don’t understand what usability or proper testing actually means.

They treat it like fluff, when in reality it's fundamental. Usability doesn't mean dumbing things down or compromising Bitcoin's core values; it means understanding why your fancy new app isn’t being used by anyone outside of your bubble. Testing is the beating heart of getting things out with confidence. There's nothing more satisfying in software building than to proudly show even your beta versions to users, knowing they're well tested. It’s much more than clicking a few buttons and tossing your code on GitHub. It's about asking real questions: can someone outside your Telegram group actually use this, and will they be using the software at all?

If you create a Nostr app that opens an in-app browser window and then tries to log you in with NIP-05 or NIP-07, or whatever number it is that authenticates you, then you need to think about how it’s going to work in real life. Have people already visited this underlying website? Is that website using the exact same mechanism? Is it really working like we think it is in the real world? (Some notable good things are happening with the development of Keychat, for example. I have the feeling they get it; it’s not all bad.) And yes, there are still bugs and things to improve there, they’re just starting. (The browser section and nostr login need some work imho.)

Guess what? You can test your stuff. But it takes time and effort. The kind of effort that, if skipped, gets multiplied across thousands of people. Thousands of people wasting their time trying to use your app, hitting errors, assuming they did something wrong, retrying, googling workarounds—only to eventually realize: it’s not them. It’s a bug. A bug you didn’t catch. Because you didn’t test. And now everyone loses. And guess what? Those users? They’re not coming back.

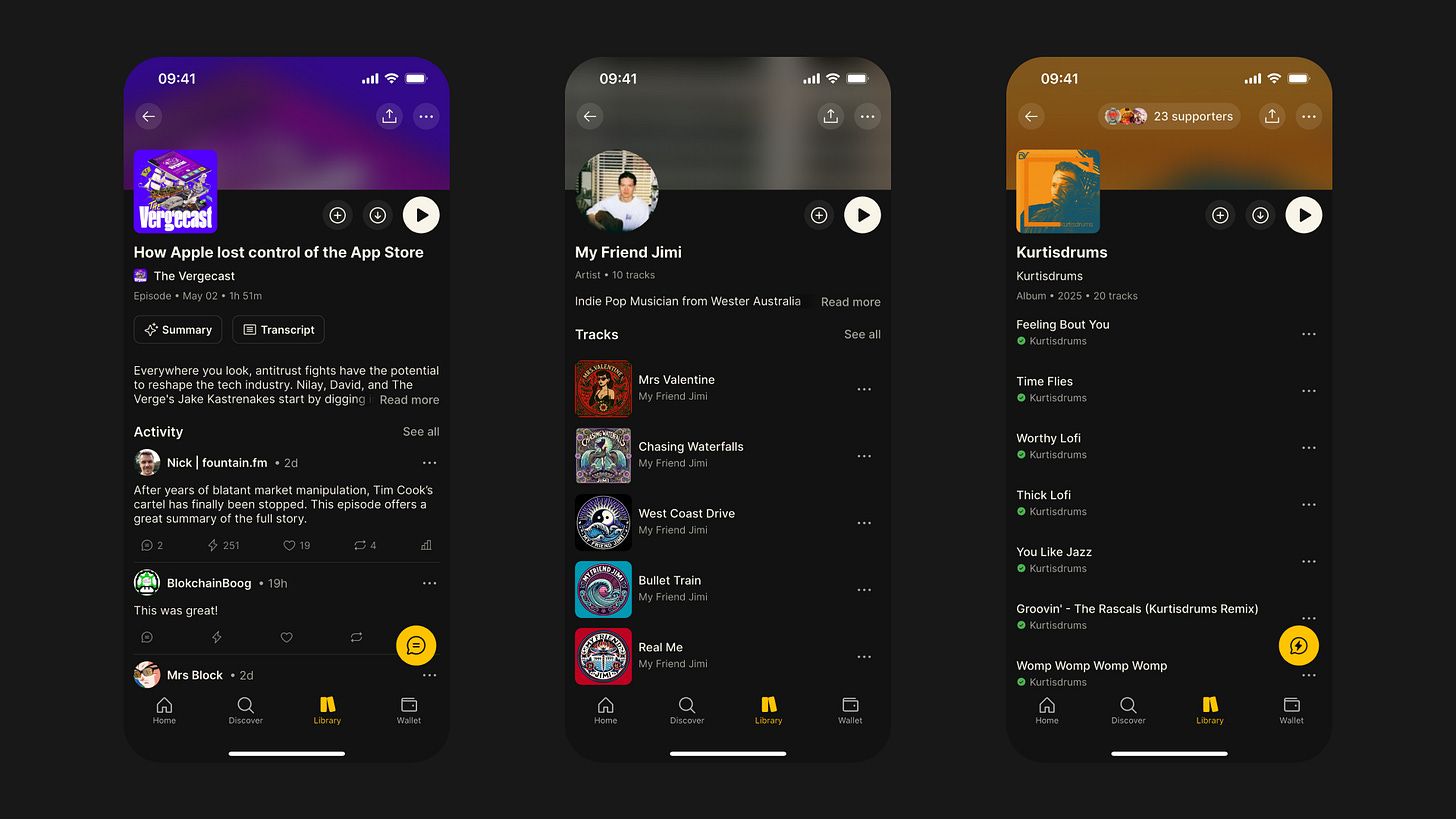

A good example (to stay positive here) is Fountain App, where the first versions were, eh… let’s say not so good, but which then quickly evolved into a company and product that works really well, and that also listens to its users and fixes its bugs. The interface can still be better in my opinion, but it’s getting there. And it’s super good now.

A bad example? Alby. (Sorry to say.) It still suffers from a bloated, clunky interface and an onboarding flow that utterly confuses new or returning users. It just doesn’t get the job done. Opinions may vary, sure, but hand this app to any non-technical user and ask them to get online and do a Nostr zap. Watch what happens. If they even manage to get through the initial setup, that is.

Another example? Bitkit. When I tried transferring funds from the "savings" to the "spending" account, the wallet silently opened a Lightning channel, with no warning and no explanation, and suddenly my coins were locked up. To make things worse, the wallet still showed the full balance as spendable, even though part of it was now stuck in that channel. That was in November 2024, the last time I touched Bitkit. I wasted too much time trying to figure it out, and I haven’t looked back (assuming the project is even still alive; I didn’t see them pop up anywhere).

Some metal BIP39 backup tools are great in theory but poorly executed. I bought one that didn’t even include a simple instruction on how to open it. The person I gave it to spent two hours trying to open it with a screwdriver and even attempted drilling. Turns out, it just slides open with some pressure. A simple instruction would’ve saved all that frustration.

Builders often assume users “just get it,” but a small guide could’ve prevented all the hassle. It’s a small step, but it’s crucial for better user experience. So why not avoid such situations and put a friggin cheap piece of paper in the box so people know how to open it? (The creators would probably facepalm if they read this, “how can users nòt see this?”). Yeah,… put a paper in there with instructions.

That’s natural, because as a creator you’re “in” it, you know. You don’t see how others would overlook something so obvious.

Bitcoiners are extremely bad on that front.

I’ll dive deeper into some examples in part 2 of this post.

By AVB

end of part 1

If you'd like to support independent thought and writings on bitcoin, follow this substack please https://coinos.io/allesvoorbitcoin/receive

footnotes:

1 https://philzimmermann.com/EN/findpgp/

2 BTC sessions: set up a bitcoin node

-

@ 6ad08392:ea301584

2025-03-14 19:03:20

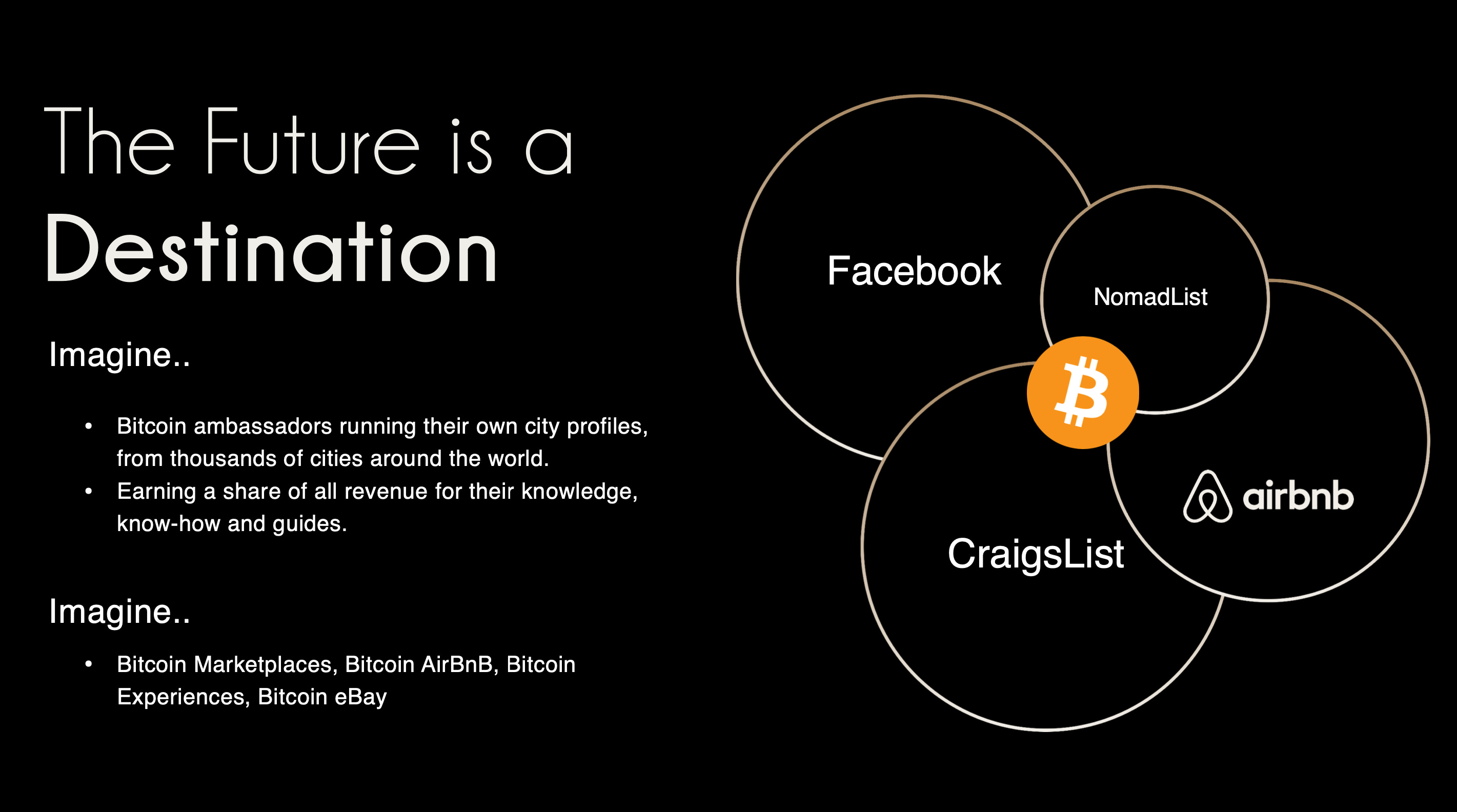

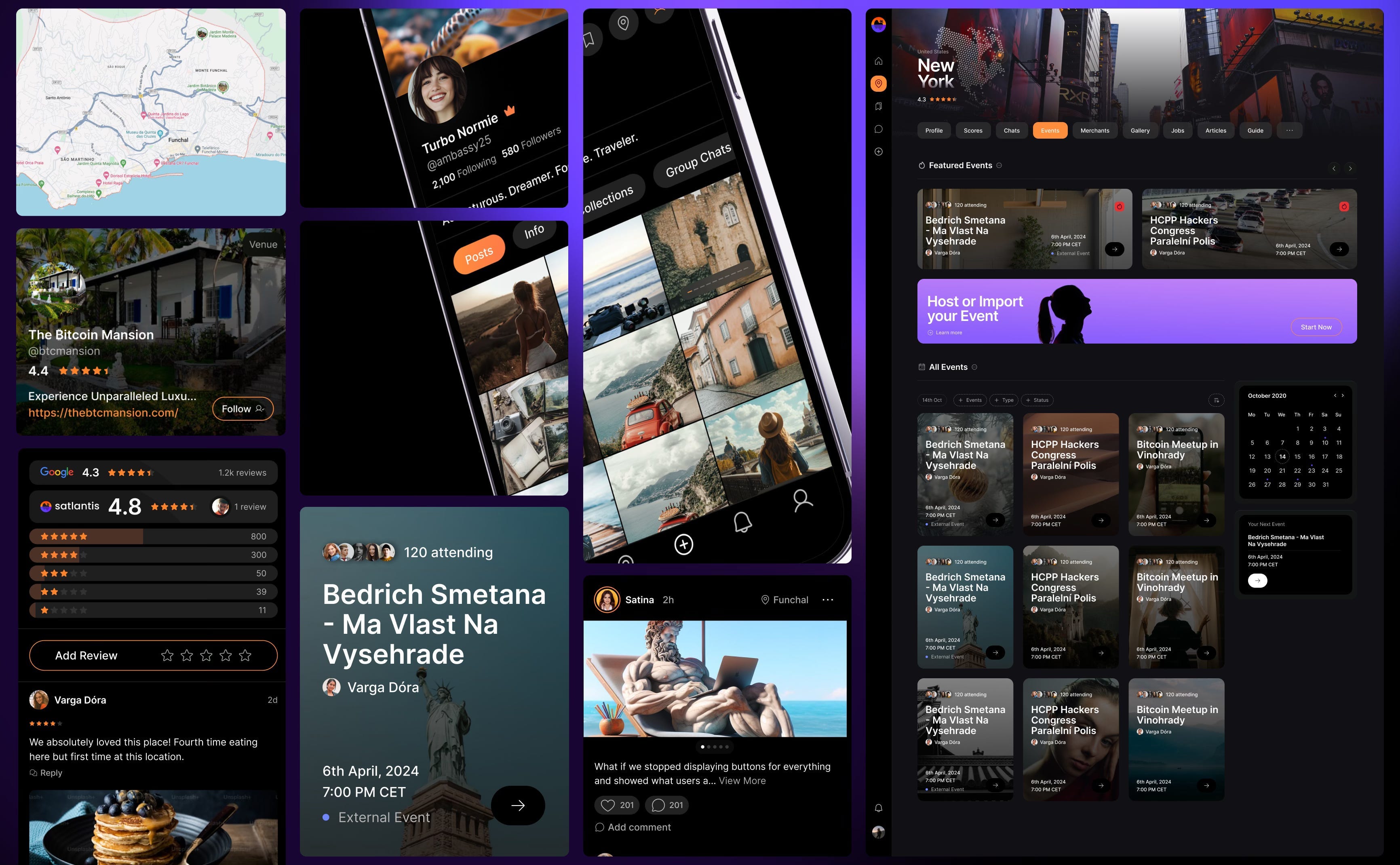

In 2024, I was high as a kite on Nostr hopium and optimism. Early that year, my co-founder and I figured that we could use Nostr as a way to validate ambassadors on “Destination Bitcoin” - the germ of a travel app idea we had at the time that would turn into Satlantis. After some more digging and thinking, we realised that Nostr’s open social graph would be of major benefit, and in exploring that design space, the fuller idea of Satlantis formed: a new kind of social network for travel.

###### ^^2 slides from the original idea here

I still remember the call I had with @pablof7z in January. I was in Dubai pitching the AI idea I was working on at the time, but all I could think and talk about was Satlantis and Nostr.

That conversation made me bullish AF. I came back from the trip convinced we’d struck gold. I pivoted the old company, re-organised the team and booked us for the Sovereign Engineering cohort in Madeira. We put together a whole product roadmap, go to market strategy and cap raise around the use of Nostr. We were going to be the ‘next big Nostr app’.

A couple of events followed in which I announced this all to the world: Bitcoin Atlantis in March and BTC Prague in June being the two main ones. The feedback was incredible. So we doubled down. After being the major financial backer for the Nostr Booth in Prague, I decided to help organise the Nostr Booth initiative and back it financially for a series of Latin American conferences in November. I was convinced this was the biggest thing since bitcoin, so much so that I spent over $50,000 in 2024 on Nostr marketing initiatives. I was certainly high on something.

Sobering up

It’s March 2025 and I’ve sobered up. I now look at Nostr through a different lens. A more pragmatic one. I see Nostr as a tool, as an entrepreneur - who’s more interested in solving a problem, than fixating on the tool(s) being used - should.

A couple things changed for me. One was the sub-standard product we released in November. I was so focused on being a Nostr evangelist that I put our product second. Coupled with the extra technical debt we took on at Satlantis by making everything Nostr native, our product was crap. We traded usability & product stability for Nostr purism & evangelism.

We built a whole suite of features using native event kinds (location kinds, calendar kinds, etc) that we thought other Nostr apps would also use and therefore be interoperable. Turns out no serious players were doing any of that, so we spent a bunch of time over-engineering for no benefit 😂

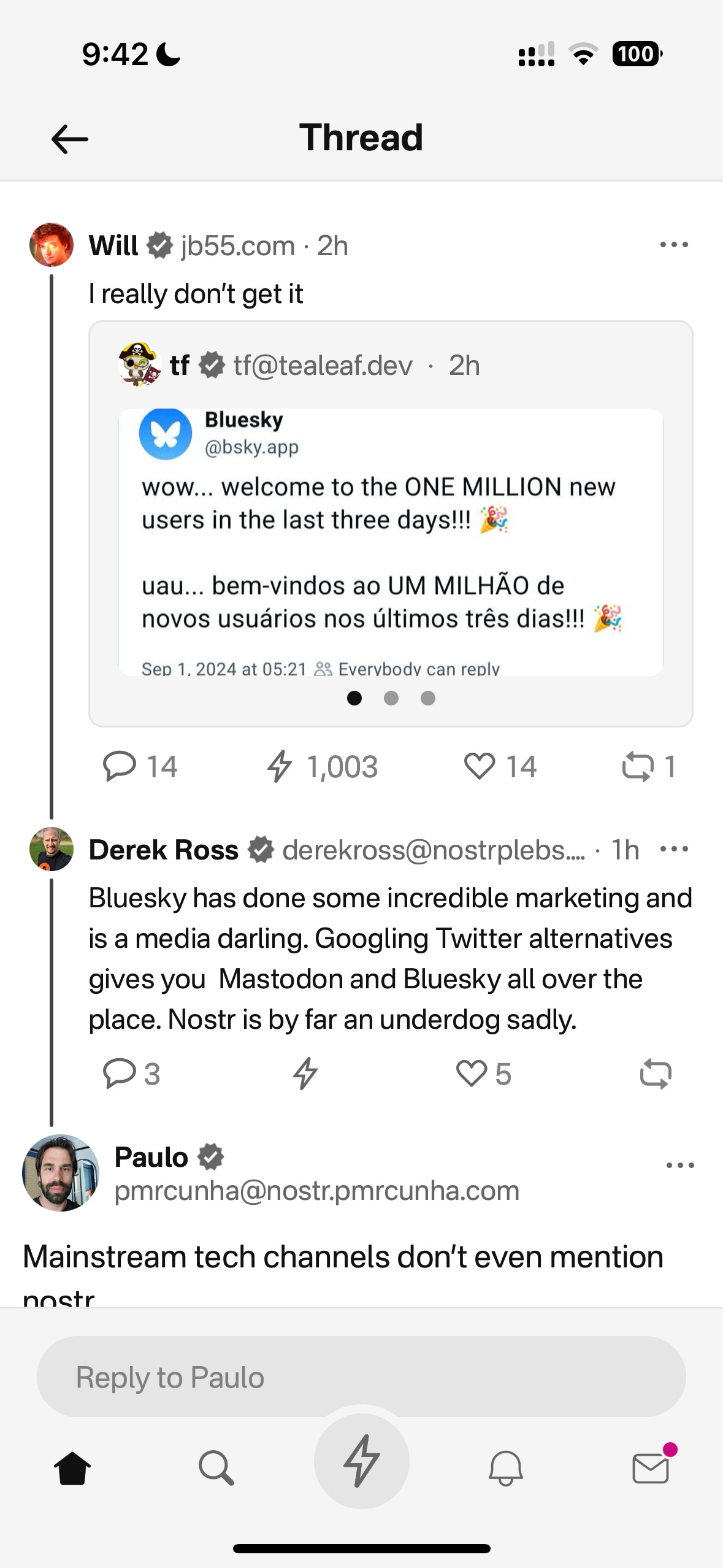

The other wake up call for me was the Twitter ban in Brazil. Being one of the largest markets for Twitter, I really thought it would have a material impact on global Nostr adoption. When basically nothing happened, I began to question things.

Combined, these experiences helped sober me up and I came down from my high. I was reading “The Cold Start Problem” by Andrew Chen (ex-Uber) at the time and doing a deep dive on network effects. I came to the following realisation:

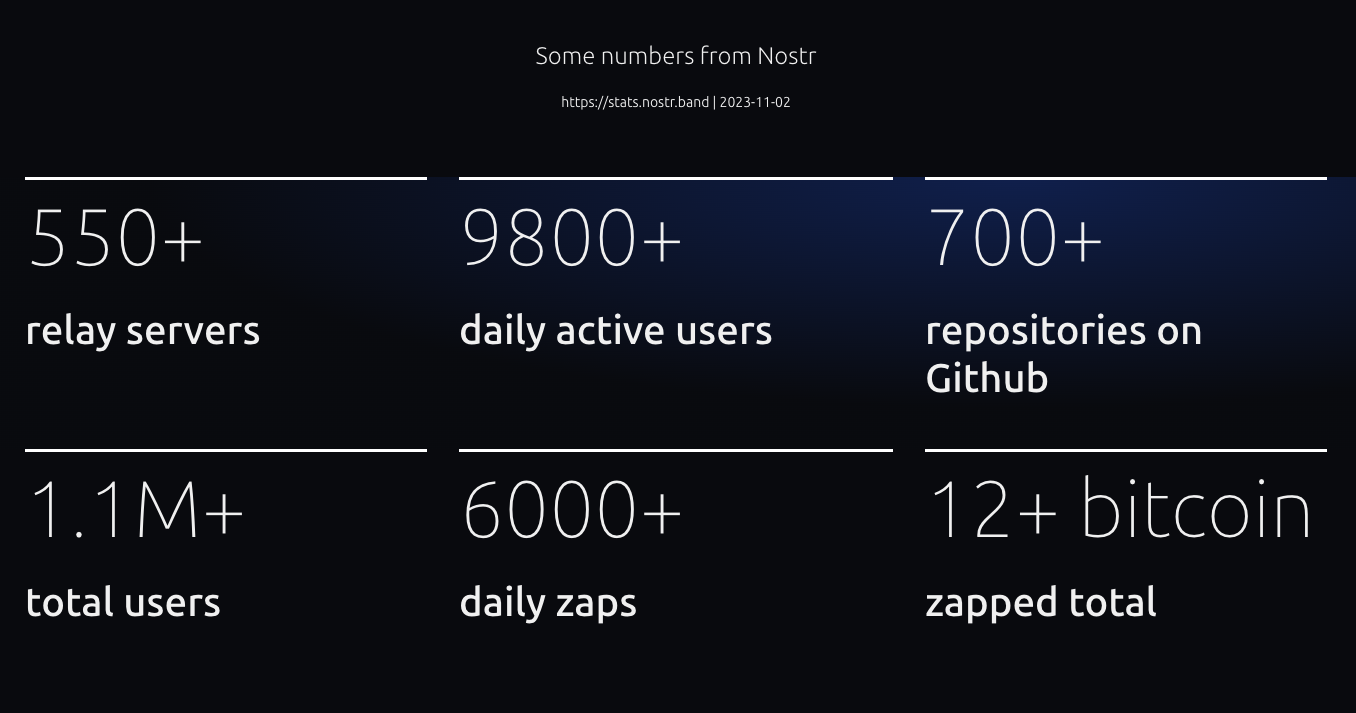

Nostr’s network effect is going to take WAY longer than we all anticipated initially. This is going to be a long grind. And unlike bitcoin, winning is not inevitable. Bitcoin solves a much more important problem, and it’s the ONLY option. Nostr solves an important problem yes, but it’s far from the only approach. It’s just the implementation arguably in the lead right now.

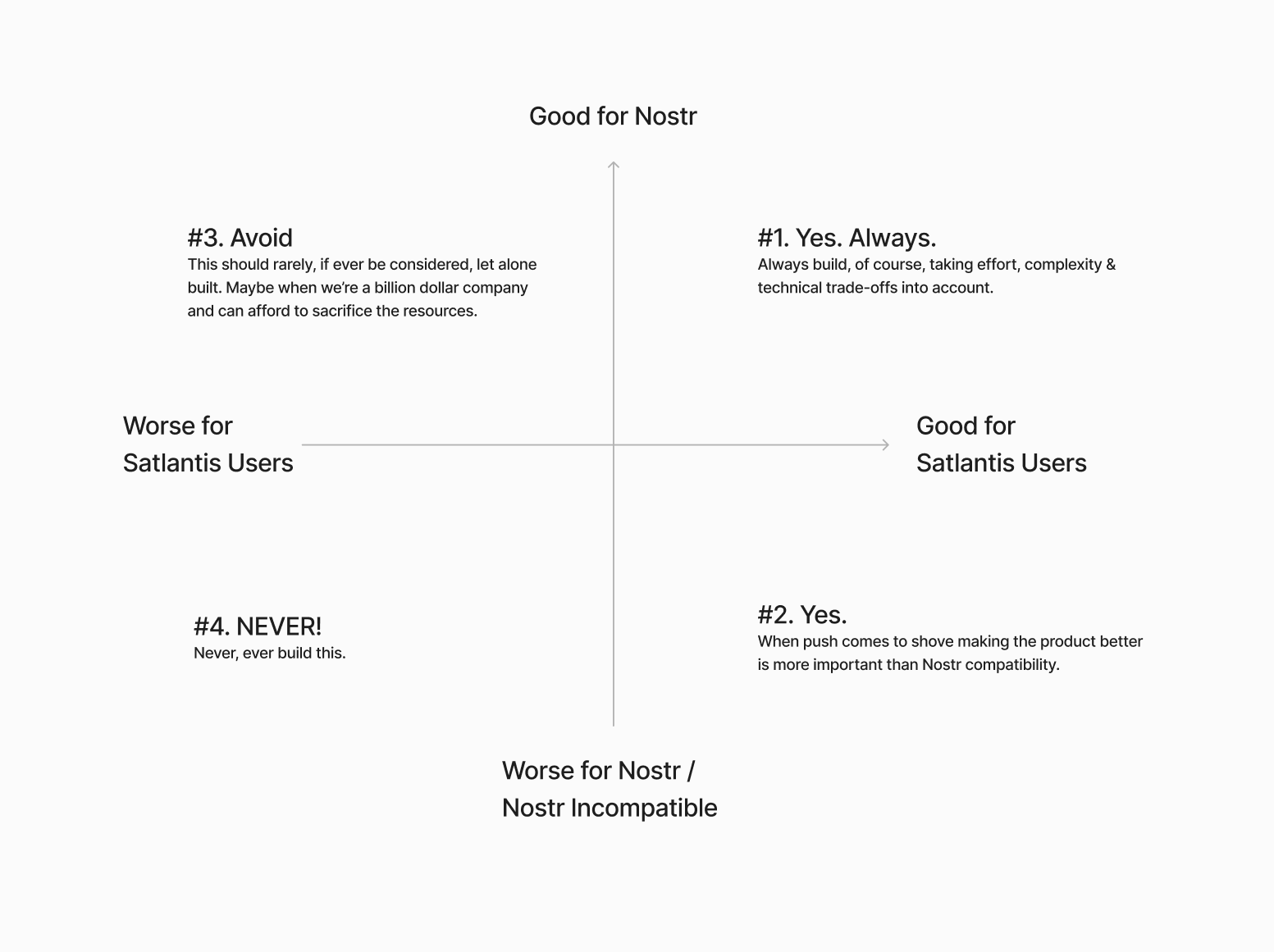

This sobering up led us to take a different approach with Nostr. We now view it as another tool in the tech-stack, no different to the use of React Native on mobile or AWS for infrastructure. Nostr is something to use if it makes the product better, or avoid if it makes the product and user experience worse. I will share more on this below, including our simple decision making framework. I’ll also present a few more potentially unpopular opinions about Nostr. Four in total actually:

- Nostr is a tool, not a revolution

- Nostr doesn’t solve the multiple social accounts problem

- Nostr is not for censorship resistance

- Grants come with a price

Let’s begin…

Nostr is a tool, not a revolution

Nostr is full of Bitcoiners, and as much as we like to think we’re immune from shiny object syndrome, we are, somewhere deep down, afflicted by it like other humans. That’s normal & fine. But… while Bitcoiners have successfully suppressed this desire when it comes to shitcoins, it lies dormant, yearning for the least shitcoin-like thing to emerge which we can throw our guiltless support behind.

That thing arrived and it’s called Nostr.

As a result, we’ve come to project the same kind of purity and maximalism onto it as we do with Bitcoin, because it shares some attributes and it’s clearly not a grift.

The trouble is, in doing so, we’ve put it in the same class as Bitcoin - which is an error.

Nostr is important and in its own small way, revolutionary, but it pales in comparison to Bitcoin’s importance. Think of it this way: If Bitcoin fails, civilisation is fucked. If Nostr fails, we’ll engineer another rich-identity protocol. There is no need for the kind of immaculate conception and path dependence that was necessary for Bitcoin, whose genesis and success has been a once in a civilisation event. Equating Nostr and Bitcoin to the degree that we have is a significant category error. Nostr may ‘win’ or it may just be an experiment on the path to something better. And that’s ok!

I don’t say this to piss anyone off, to piss on Nostr or to piss on myself. I say it because I’d prefer Nostr not remain a place where a few thousand people speak to each other about how cool Nostr is. That’s cute in the short term, but in the grand scheme of things, it’s a waste of a great tool that can make a significant corner of the Internet great again.

By removing the emotional charge and hopium from our relationship to Nostr, we can take a more sober, objective view of it (and hopefully use it more effectively).

Instead of making everything about Nostr (the tool), we can go back to doing what great product people and businesses do: make everything about the customer.

Nobody’s going around marketing their app as a “React Native product” - and while I understand that’s a false equivalence in the sense that Nostr is a protocol, while React is a framework - the reality is that it DOES NOT MATTER.

For 99.9999% of the world, what matters is the hole, not the drill. Maybe 1000 people on Earth REALLY care that something is built on Nostr, but for everyone else, what matters is what the app or product does and the problem it solves. Realigning our focus in this way, and looking at not only Nostr, but also Bitcoin as a tool in the toolkit, has transformed the way we’re building.

This inspired an essay I wrote a couple weeks ago called “As Nostr as Possible”. It covers our updated approach to using and building WITH Nostr (not just ‘on’ it). You can find that here:

https://futuresocial.substack.com/p/as-nostr-as-possible-anap

If you’re too busy to read it, don’t fret. The entire theory can be summarised by the diagram below. This is how we now decide what to make Nostr-native, and what to just build on our own. And - as stated in the ANAP essay - that doesn’t mean we’ll never make certain features Nostr-native. If the argument is that Nostr is not going anywhere, then we can always come back to that feature and Nostr-fy it later when resources and protocol stability permit.

Next…

The Nostr all in one approach is not all “positive”

Having one account accessible via many different apps might not be as positive as we initially thought.

If you have one unified presence online, across all of your socials, and you’re posting the same thing everywhere, then yes - being able to post content in one place and it being broadcast everywhere, is great. There’s a reason why people literally PAY for products like Hypefury, Buffer and Hootsuite (aside from scheduling).

BUT…..This is not always the case.

I’ve spoken to hundreds of creators and many have flagged this as a bug not a feature because they tend to have a different audience on different platforms and speak to them differently depending on the platform. We all know this. How you present yourself on LinkedIn is very different to how you do it on Instagram or X.

The story of Weishi (Tencent’s version of TikTok) comes to mind here. Tencent’s WeChat login worked against them because people didn’t want their social graph following them around. Users actually wanted freedom from their existing family & friends, so they chose Douyin (Chinese TikTok) instead.

Perhaps this is more relevant to something like WeChat because the social graph following you around is more personal, but we saw something similar with Instagram and Facebook. Despite over a decade of ownership, Facebook still keeps the social graphs separated.

All this to say that while having a different strategy & approach on different social apps is annoying, it allows users to tap into different markets because each silo has its own ‘flavour’. The people who just post the same thing everywhere are low-quality content creators anyway. The ones who actually care are using each platform differently.

The ironic part here is that this is arguably more ‘decentralised’ than the protocol approach because these silos form a ‘marketplace of communities’ which are all somewhat different.

We need to find a smart way of doing this with Nostr. Some way of catering to the appropriate audience where it matters most. Perhaps this will be handled by clients, or by relays. One solution I’ve heard from people in the Nostr space is to just ‘spin up another nPub’ for your different audience. While I have no problem with people doing that - I have multiple nPubs myself - it’s clearly NOT a solution to the underlying problem here.

We’re experimenting with something. Whether it’s a good idea or not remains to be seen. Satlantis users will be able to curate their profiles and remove (hide / delete) content on our app. We’ll implement this in two stages:

Stage 1: Simple. In the first iteration, we will not broadcast a delete request to relays. This means users can get a nicely curated profile page on Satlantis, but keep a record of their full profile elsewhere on other clients / relays.

Stage 2: More complex. Later on, we’ll try to give people an option to “delete on Satlantis only” or “delete everywhere”. The difference here is more control for the user. Whether we get this far remains to be seen. We’ll need to experiment with the UX and see whether this is something people really want.
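To make the two stages concrete, here is a minimal sketch of how a client might model them. It is not how Satlantis actually implements this: the `ProfileCurator` class and its method names are hypothetical, and the only protocol detail it leans on is that a Nostr deletion request (NIP-09) is a kind 5 event whose `e` tags reference the events to delete. The sketch returns an unsigned event, so computing the event id, signing and publishing to relays are left out, and relays are not guaranteed to honour the request.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

DELETION_REQUEST_KIND = 5  # NIP-09 event deletion request


@dataclass
class ProfileCurator:
    """Hypothetical per-user curation state kept by a single client."""
    pubkey: str
    hidden_ids: set = field(default_factory=set)

    def visible(self, events: list) -> list:
        """Return only the events this client should show on the profile."""
        return [e for e in events if e["id"] not in self.hidden_ids]

    def delete(self, event_id: str, everywhere: bool = False,
               now: Optional[int] = None) -> Optional[dict]:
        """Hide an event locally; optionally build a deletion request.

        everywhere=False: "delete on this app only" (Stage 1 behaviour).
        everywhere=True:  also return an unsigned kind 5 event that the
                          caller would still need to sign and broadcast.
        """
        self.hidden_ids.add(event_id)
        if not everywhere:
            return None
        return {
            "pubkey": self.pubkey,
            "created_at": int(time.time()) if now is None else now,
            "kind": DELETION_REQUEST_KIND,
            "tags": [["e", event_id]],
            "content": "",
        }


if __name__ == "__main__":
    curator = ProfileCurator(pubkey="ab" * 32)  # placeholder hex pubkey
    feed = [{"id": "note1"}, {"id": "note2"}]
    curator.delete("note1")                     # hidden here, untouched elsewhere
    print(curator.visible(feed))
    print(curator.delete("note2", everywhere=True))
```

The design choice worth noticing is that local hiding is the default and broadcasting is opt-in, so a user who only wants a tidier profile never sends anything irreversible to relays.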

I’m sure neither of these solutions is ‘ideal’ - but they’re what we’re going to try until we have more time & resources to think this through more.

Next…

Nostr is not for Censorship Resistance

I’m sorry to say, but this ship has sailed. At least for now. Maybe it’s a problem again in the future, but who knows when, and if it will ever be a big enough factor anyway.

The truth is, while WE all know that Nostr is superior because it’s a protocol, people do NOT care enough. They are more interested in what’s written ON the box, not what’s necessarily inside the box. 99% of people don’t know wtf a protocol is in the first place - let alone why it matters for censorship resistance to happen at that level, or more importantly, why they should trust Nostr to deliver on that promise.

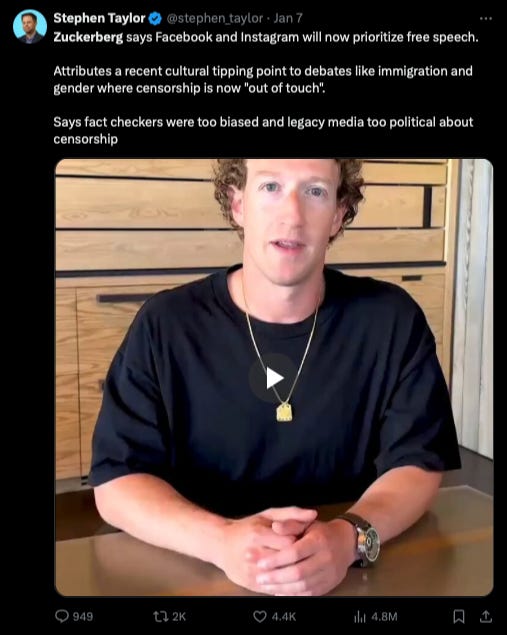

Furthermore, the few people who did care about “free speech” are now placated enough with Rumble for Video, X for short form and Substack for long form. With Meta now paying lip-service to the movement, it’s game over for this narrative - at least for the foreseeable future.

The “space” in people’s minds for censorship resistance has been filled. Both the ‘censorship resistance’ and ‘free speech’ ships have sailed (even though they were fake), and the people who cared enough all boarded.

For the normies who never cared, they still don’t care - or they found their way to the anti-platforms, like Threads, BlueSky or Pornhub.

The small minority of us still here on Nostr…are well…still here. Which is great, but if the goal is to grow the network effect here and bring in more people, then we need to find a new angle. Something more compelling than “your account won’t be deleted.”

I’m not 100% sure what that is. My instinct is that a “network of interoperable applications” that don’t necessarily or explicitly brand themselves as Nostr, but have it under the hood, is the right direction. I think the open social graph and using it in novel ways is compelling. Trouble is, this needs more really well-built and novel apps for non-sovereignty minded people (especially content creators) and people who don’t necessarily care about the reasons Nostr was first built. It also requires us to move beyond just building clones of what already exists.

We’ve been trying to do this Satlantis thing for almost a year now and it’s coming along - albeit WAY slower than I would’ve liked. We’re experimenting our way into a whole new category of product. Something different to what exists today. We’ve made a whole bunch of mistakes and at times I feel like a LARP considering the state of non-delivery.

BUT…what’s on the horizon is very special, and I think that all of the pain, effort and heartache along the way will be 100% worth it. We are going to deliver a killer product that people love, that solves a whole host of travel-related problems and has Nostr under the hood (where nobody, except those who care, will know).

Grants come with a price

This one is less of an opinion and more of an observation. Not sure it really belongs in this essay, but I’ll make a small mention just as food for thought.

Grants are a double-edged sword.

I’m super grateful that OpenSats, et al, are supporting the protocol, and I don’t envy the job they have in trying to decipher what to support and what not to depending on what’s of benefit to the network versus what’s an end user product.

That being said, is the Nostr ecosystem too grant-dependent? This is not a criticism, but a question. Perhaps this is the right thing to do because of how young Nostr is. But I just can’t help but feel like there’s something amiss.

Grants put the focus on Nostr, instead of the product or customer. Which is fine, if the work the grant covers is for Nostr protocol development or tooling. But when grants subsidise the development of end user products, it ties the builder / grant recipient to Nostr in a way that can misalign them to the customer’s needs. It’s a bit like getting a government grant to build something. Who’s the real customer??

Grants can therefore create an almost communist-like detachment from the market and false economic incentive. To reference the Nostr decision framework I showed you earlier, when you’ve been given a grant, you are focusing more on the X axis, not the Y. This is a trade-off, and all trade-offs have consequences.

Could grants be the reason Nostr is so full of hobbyists and experimental products, instead of serious products? Or is that just a function of how ambitious and early Nostr is?

I don’t know.

Nostr certainly needs better toolkits, SDKs, and infrastructure upon which app and product developers can build. I just hope the grant money finds its way there, and that it yields these tools. Otherwise app developers like us won’t stick around and build on Nostr. We’ll swap it out with a better tool.

To be clear, this is not me pissing on Nostr or the Grantors. Jack, OpenSats and everyone who’s supported Nostr are incredible. I’m just asking the question.

Final thing I’ll leave this section with is a thought experiment: Would Nostr survive if OpenSats disappeared tomorrow?

Something to think about….

Coda

If you read this far, thank you. There’s a bunch here to digest, and like I said earlier - this is not about shitting on Nostr. It is just an enquiry mixed with a little classic Svetski-Sacred-Cow-Slaying.

I want to see Nostr succeed. Not only because I think it’s good for the world, but also because I think it is the best option. Which is why we’ve invested so much in it (something I’ll cover in an upcoming article: “Why we chose to build on Nostr”). I’m firmly of the belief that this is the right toolkit for an internet-native identity and open social graph. What I’m not so sure about is the echo chamber it’s become and the cult-like relationship people have with it.

I look forward to being witch-hunted and burnt at the stake by the Nostr purists for my heresy and blaspheming. I also look forward to some productive discussions as a result of reading this.

Thank you for your attention.

Until next time.

-

@ bbef5093:71228592

2025-05-06 16:11:35

India aims to cut nuclear power plant construction times to meet its ambitious nuclear targets

India aims to cut the construction time of its nuclear power plant projects from the current 10 years to a “world-class” 6 years in order to reach its target of 100 GW of installed nuclear capacity by 2047.

According to a report by SBI Capital Markets (the investment banking subsidiary of the State Bank of India), this would help mitigate past cost overruns and make the country more attractive to global investors.

The report says that with about 8 GW of current capacity and only 7 GW under construction, “significant acceleration” is needed to meet the targets.
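The scale of that acceleration can be sketched with simple arithmetic. The figures below (100 GW target, about 8 GW installed, 7 GW under construction, 2047 deadline, 6-year and 10-year build times) come from the report as quoted here; the 2025 starting year is an assumption based on the date of this post, and the calculation ignores retirements and uneven commissioning, so treat it as a rough illustration only.

```python
TARGET_GW = 100.0            # installed capacity targeted for 2047
INSTALLED_GW = 8.0           # approximate current capacity
UNDER_CONSTRUCTION_GW = 7.0  # capacity currently being built
TARGET_YEAR = 2047
START_YEAR = 2025            # assumption: the year this post was published


def required_annual_additions(target_gw: float, installed_gw: float,
                              start_year: int, target_year: int) -> float:
    """Average GW that must come online per year to hit the target."""
    return (target_gw - installed_gw) / (target_year - start_year)


def latest_construction_start(target_year: int, build_years: int) -> int:
    """Last year a project can break ground and still finish by the target."""
    return target_year - build_years


# Capacity still needed beyond what is installed or already being built.
uncommitted_gap_gw = TARGET_GW - INSTALLED_GW - UNDER_CONSTRUCTION_GW
annual_rate_gw = required_annual_additions(
    TARGET_GW, INSTALLED_GW, START_YEAR, TARGET_YEAR)

if __name__ == "__main__":
    print(f"Not yet under construction: {uncommitted_gap_gw:.0f} GW")
    print(f"Average additions needed: {annual_rate_gw:.2f} GW per year")
    print("Latest start with 10-year builds:",
          latest_construction_start(TARGET_YEAR, 10))
    print("Latest start with 6-year builds:",
          latest_construction_start(TARGET_YEAR, 6))
```

Under these assumptions the shorter build time buys four extra years in which new projects can still be started and finish before the deadline.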

The government has launched a “nuclear energy mission”, allocating about $2.3 billion (€2 billion) for R&D and for deploying at least five Bharat small modular reactors (BSMRs), but it must resolve further challenges to meet its targets.

Cutting construction times is key, but the report also recommends comprehensive systemic reforms, including faster licensing, simpler land acquisition rules, a smaller buffer zone around plants, and greater autonomy for the regulator (the Atomic Energy Regulatory Board).

According to the report, the nation’s limited uranium reserves make it essential to diversify fuel sources through international agreements and to accelerate stages 2 and 3 of India’s nuclear programme.

India’s three-stage nuclear programme aims to establish a closed fuel cycle built on natural uranium, plutonium and ultimately thorium. Stage 2 uses fast neutron reactors, which extract more energy from uranium, require less mined uranium, and convert unused uranium into new fuel. In stage 3, advanced reactors will run on India’s vast thorium reserves.

In January 2025, the Nuclear Power Corporation of India (NPCIL) issued a tender for deploying Bharat SMRs, opening the nuclear sector to Indian private companies for the first time.

Until now, only state-owned NPCIL could build and operate commercial nuclear power plants in India.

The deployment of Bharat SMRs (“Bharat” means India in Hindi) is part of the “Viksit Bharat” (“Developed India”) programme.

Licensing process: “protracted and sequential”

Details of the Bharat nuclear plant development remain unclear, but Finance Minister Nirmala Sitharaman said in July that it would be carried out through a joint venture between the state-owned National Thermal Power Corporation and Bharat Heavy Electricals Limited.

Sitharaman added that the government would set up a company called Bharat Small Reactors jointly with the private sector to carry out research and development on SMRs and new nuclear technologies.

According to the SBI report, the SMR programme needs improvement because the licensing process is currently “protracted and sequential” and exposes private players to disproportionate risk during reactor development.

The programme is “strategically well positioned for success” because it sets strict entry requirements, so only serious and capable players can take part.

However, the government should introduce an indemnity clause protecting private companies against shortfalls in the supply of fuel and heavy water, which falls under the Department of Atomic Energy (DAE).