-

@ b99efe77:f3de3616

2025-05-04 18:43:28

TEST SERGEY

TEST SERGEY TEST SERGEY TEST SERGEY TEST SERGEY TEST SERGEY TEST SERGEY

Places & Transitions

Places:

- Bla bla bla: some text

Transitions:

- start: Initializes the system.

- logTask: bla bla bla.
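As a rough illustration, the petrinet definition in this note can be simulated in a few lines of Python. This is only a sketch: the `TRANSITIONS` table, `fire`, and `run` are hypothetical names, every arc is assumed to carry one token, and the meeting place is assumed to be named `inMeeting` on both sides.

```python
# Transitions from the petrinet definition: name -> (input place, output place).
# None stands for the empty side "()" of a transition.
TRANSITIONS = {
    "startDay": (None, "working"),
    "stopDay": ("working", None),
    "startPause": ("working", "paused"),
    "endPause": ("paused", "working"),
    "goSmoke": ("working", "smoking"),
    "endSmoke": ("smoking", "working"),
    "startEating": ("working", "eating"),
    "stopEating": ("eating", "working"),
    "startCall": ("working", "onCall"),
    "endCall": ("onCall", "working"),
    "startMeeting": ("working", "inMeeting"),
    "endMeeting": ("inMeeting", "working"),
    "logTask": ("working", "working"),
}


def fire(marking, name):
    """Return the marking after firing `name`; raise if it is not enabled."""
    source, target = TRANSITIONS[name]
    new = dict(marking)
    if source is not None:
        if new.get(source, 0) < 1:
            raise ValueError(f"{name} is not enabled: no token in {source}")
        new[source] -= 1  # consume one token from the input place
    if target is not None:
        new[target] = new.get(target, 0) + 1  # produce one token
    return new


def run(names):
    """Fire a sequence of transitions starting from the empty marking."""
    marking = {}
    for name in names:
        marking = fire(marking, name)
    return {place: n for place, n in marking.items() if n > 0}


print(run(["startDay", "startCall", "endCall", "logTask"]))
```

Firing `endPause` before `startPause` raises an error, which is the behaviour the net is meant to enforce: a pause can only end if it was started.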

petrinet
;startDay () -> working
;stopDay working -> ()
;startPause working -> paused
;endPause paused -> working
;goSmoke working -> smoking
;endSmoke smoking -> working
;startEating working -> eating
;stopEating eating -> working
;startCall working -> onCall
;endCall onCall -> working
;startMeeting working -> inMeeting
;endMeeting inMeeting -> working
;logTask working -> working

-

@ c9badfea:610f861a

2025-05-04 18:39:06

- Install Kiwix (it's free and open source)

- Download ZIM files from the Kiwix Library (you will find complete offline versions of Wikipedia, Stack Overflow, Bitcoin Wiki, DevDocs and many more)

- Open the downloaded ZIM files within the Kiwix app

ℹ️ You can also package any website using either Kiwix Zimit (online tool) or the Zimit Docker Container (for technical users)

ℹ️ .zim is the file format used for packaged websites

-

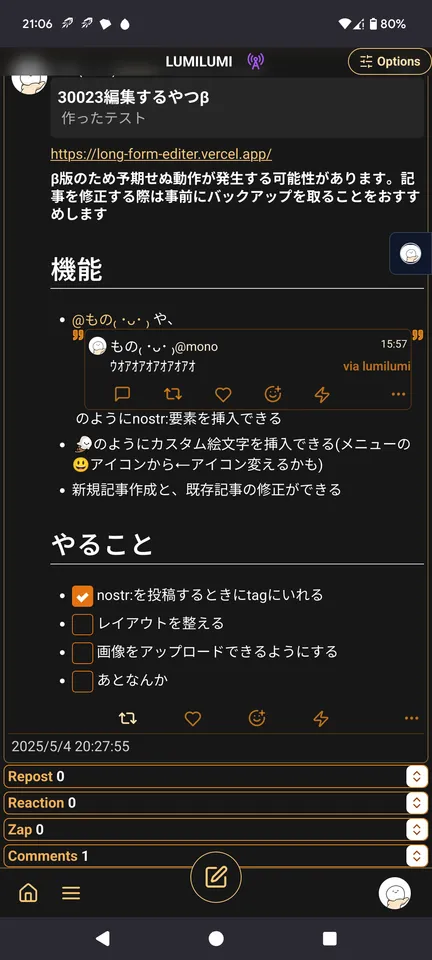

@ 7ef5f1b1:0e0fcd27

2025-05-04 18:28:05

A monthly newsletter by The 256 Foundation

May 2025

Introduction:

Welcome to the fifth newsletter produced by The 256 Foundation! April was a jam-packed month for the Foundation with events ranging from launching three grant projects to the first official Ember One release. The 256 Foundation has been laser focused on our mission to dismantle the proprietary mining empire, signing off on a productive month with the one-finger salute to the incumbent mining cartel.

[IMG-001] Hilarious meme from @CincoDoggos

Dive in to catch up on the latest news, mining industry developments, progress updates on grant projects, Actionable Advice on helping test Hydra Pool, and the current state of the Bitcoin network.

Definitions:

DOJ = Department of Justice

SDNY = Southern District of New York

BTC = Bitcoin

SD = Secure Digital

Th/s = Terahash per second

OSMU = Open Source Miners United

tx = transaction

PSBT = Partially Signed Bitcoin Transaction

FIFO = First In First Out

PPLNS = Pay Per Last N Shares

GB = Gigabyte

RAM = Random Access Memory

ASIC = Application Specific Integrated Circuit

Eh/s = Exahash per second

Ph/s = Petahash per second

News:

April 7: In the first of a few notable news items relating to the Samourai Wallet case, the US Deputy Attorney General, Todd Blanche, issued a memorandum titled “Ending Regulation By Prosecution”. The memo makes the DOJ’s position on the matter crystal clear, stating: “Specifically, the Department will no longer target virtual currency exchanges, mixing and tumbling services, and offline wallets for the acts of their end users or unwitting violations of regulations…”. However, despite the clarity from the DOJ, the SDNY (sometimes referred to as the “Sovereign District” for its history of acting independently of the DOJ) has yet to budge on dropping the charges against the Samourai Wallet developers. Many are baffled at the SDNY’s continued defiance of the Trump Administration’s directives, especially in light of the recent suspensions and resignations that swept through the SDNY office in the wake of several attorneys refusing to comply with the DOJ’s directive to drop the charges against New York City Mayor Eric Adams. There is speculation that the missing piece was Trump’s pick to take the helm at the SDNY, Jay Clayton, who had yet to receive his Senate confirmation and didn’t officially start in his new role until April 22. In light of the Blanche Memo, on April 29 the prosecution and defense jointly filed a letter requesting additional time for the prosecution to determine its position on the matter and decide whether it is going to do the right thing, comply with the DOJ, and drop the charges. Catch up on what’s at stake in this case with an appearance by Diverter on the Unbounded Podcast from April 24, the one-year anniversary of the Samourai Wallet developers’ arrest. This is the most important case facing Bitcoiners, as the precedent set in this matter will have ripple effects that touch all areas of the ecosystem.

The logic used by SDNY prosecutors argues that non-custodial wallet developers transfer money in the same way a frying pan transfers heat but does not “control” the heat, essentially saying that facilitating the transfer of funds on behalf of the public by any means constitutes money transmission and thus requires a money transmitter license. All non-custodial wallets (software or hardware), node operators, and even miners would fall neatly into these dangerously generalized and vague definitions. If the SDNY wins this case, all Bitcoiners lose. Make a contribution to the defense fund here.

April 11: A solo miner with ~230Th/s solves Block #891952 on Solo CK Pool, bagging 3.11 BTC in the process. It will never not be exciting to see a regular person with a modest amount of hashrate risk it all and reap the entire mining reward. The more solo miners there are out there, the more often this should occur.

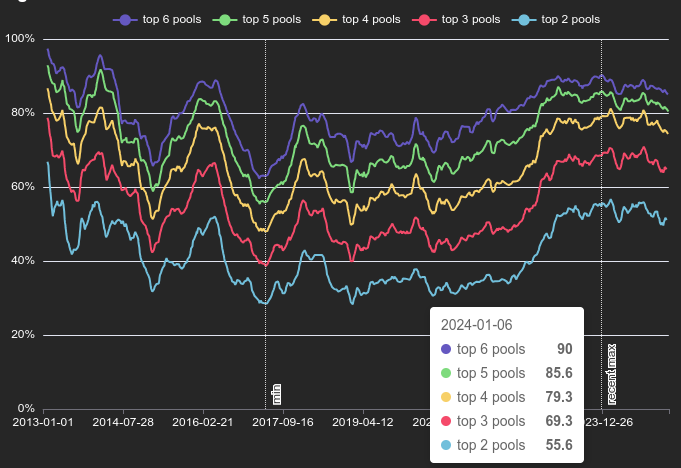

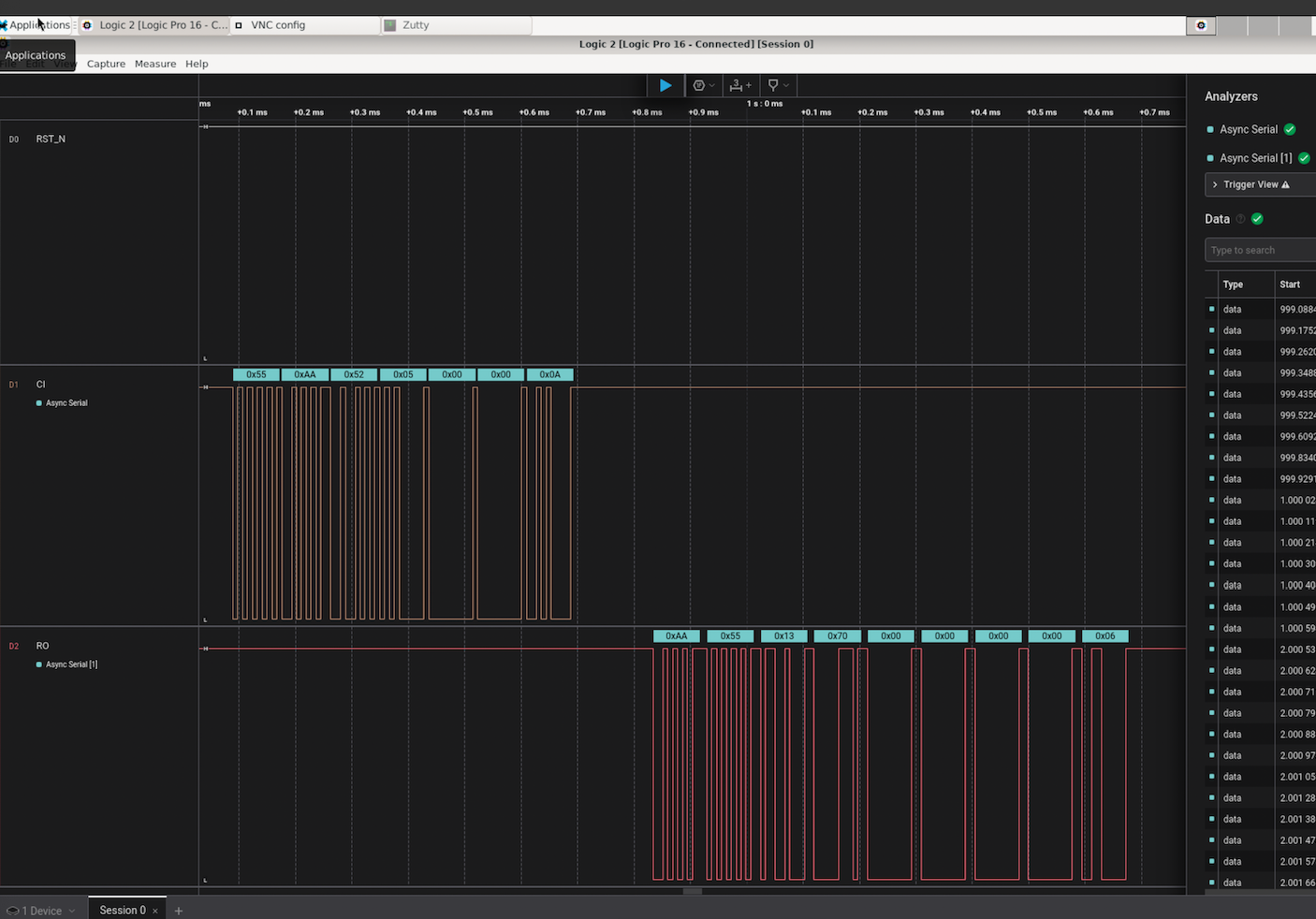

April 15: B10C publishes a new article on mining centralization. The article analyzes the hashrate share of the five biggest pools at present and presents a Mining Centralization Index. The results demonstrate that only six pools are mining more than 95% of the blocks on the Bitcoin Network. The article goes on to explain that during the period between 2019 and 2022, the top two pools had ~35% of the network hashrate and the top six pools had ~75%. By December 2023 those numbers had grown to ~55% for the top two pools and ~90% for the top six. Currently, the top six pools are mining ~95% of the blocks.

[IMG-002] Mining Centralization Index by @0xB10C

B10C concludes the article with a solution that is worth highlighting: “More individuals home-mining with small miners help too, however, the home-mining hashrate is currently still negligible compared to the industrial hashrate.”

April 15: As if miner centralization and proprietary hardware weren’t reason enough to focus on open-source mining solutions, leave it to Bitmain to release an S21+ firmware update that blocks connections to OCEAN and Braiins pools. This is the latest known sketchy development from Bitmain following years of shady behavior like Antbleed where miners would phone home, Covert ASIC Boost where miners could use a cryptographic trick to increase efficiency, the infamous Fork Wars, mining empty blocks, and removing the SD card slots. For a mining business to build its entire operation on a fragile foundation like the closed and proprietary Bitmain hardware is asking for trouble. Bitcoin miners need to remain flexible and agile, and they need to be able to adapt to changes instantly. Those are the sort of freedoms that only open-source Bitcoin mining solutions are bringing to the table.

Free & Open Mining Industry Developments:

The development will not stop until Bitcoin mining is free and open… and then it will get even better. Innovators did not disappoint in April; here are nine noteworthy events:

April 5: 256 Foundation officially launches three more grant projects. These will be covered in detail in the Grant Project Updates section, but April 5 was a symbolic day to mark the official start because of the 6102 anniversary. It is a reminder of the asymmetric advantage that freedom tech like Bitcoin gives individuals in protecting their rights and freedoms, with open-source development being central to those ends.

April 5: Low-profile ICE Tower+ for the Bitaxe Gamma 601 introduced by @Pleb_Style, featuring four heat pipes, two copper shims, and a 60mm Noctua fan, resulting in up to 2Th/s. European customers can pick up the complete upgrade kit from the Pleb Style online store for $93.00.

[IMG-003] Pleb Style ICE Tower+ upgrade kit

April 8: Solo Satoshi spells out issues with Bitaxe knockoffs, like Lucky Miner, in a detailed article titled The Hidden Cost of Bitaxe Clones. This concept can be confusing for some people initially: Bitaxe is open-source, right? So anyone can do whatever they want… right? Under the specific open-source licenses of the Bitaxe hardware, CERN-OHL-S, and the firmware, GPLv3, derivative works are supposed to make the source available. Respecting the license creates a feedback loop where those who benefit from the open-source work of those who came before them contribute back their own modifications and source files to the open-source community so that others can benefit from the new developments. Unfortunately, when the license is disrespected, manufacturers make undocumented changes to the components in the hardware and firmware, which yields unexpected results and creates a number of issues like the Bitaxe overheating, not connecting to WiFi, or flat-out failure. This issue gets further compounded when the people who purchased the knockoffs go to a community support forum, like OSMU, for help. There, a number of people rack their brains and spend their valuable time trying to replicate the issues, only to find that they cannot, since the person who purchased the knockoff has something different from the known Bitaxe model and the distributor who sold the knockoff did not document those changes. The open-source licenses maintain the end users’ freedom to do what they want, but if the license is disrespected then that freedom vanishes along with details about whatever was changed. There is a list maintained on the Bitaxe website of legitimate distributors who uphold the open-source licenses. If you want to buy a Bitaxe, use this list to ensure the open-source community is being supported instead of leeched off of.

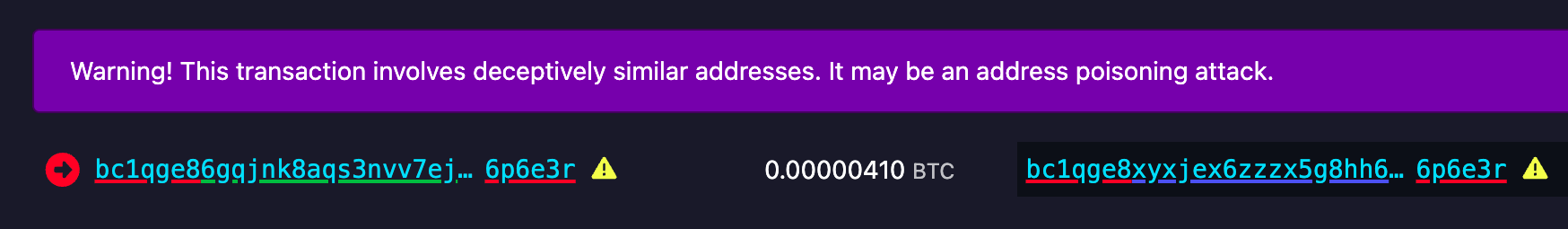

April 8: The Mempool Open Source Project v3.2.0 launches with a number of highlights including a new UTXO bubble chart, address poisoning detection, and a tx/PSBT preview feature. The GitHub repo can be found here if you want to self-host an instance from your own node or you can access the website here. The Mempool Open Source Project is a great blockchain explorer with a rich feature set and helpful visualization tools.

[IMG-004] Address poisoning example

April 8: @k1ix publishes bitaxe-raw, a firmware for the ESP32S3 found on Bitaxes which enables the user to send and receive raw bytes over USB serial to and from the Bitaxe. This is a helpful tool for research and development and a tool that is being leveraged at The 256 Foundation for helping with the Mujina miner firmware development. The bitaxe-raw GitHub repo can be found here.

April 14: Rev.Hodl compiles many of his homestead-meets-mining adaptations including how he cooks meat sous-vide style, heats his tap water to 150°F, runs a hashing space heater, and how he upgraded his clothes dryer to use Bitcoin miners. If you are interested in seeing some creative and resourceful home mining integrations, look no further. The fact that Rev.Hodl was able to do all this with closed-source proprietary Bitcoin mining hardware makes a very bullish case for the innovations coming down the pike once the hardware and firmware are open-source and people can gain full control over their mining appliances.

April 21: Hashpool explained on The Home Mining Podcast. Hashpool is an innovative Bitcoin mining pool development that trades mining shares for ecash tokens. The pool issues an “ehash” token for every submitted share and uses ecash epochs to approximate the age of those shares in FIFO order as they accrue value. A rotating key set is used to eventually expire them, and finally the pool publishes verification proofs for each epoch and each solved block. The ehash is provably not inflatable and payouts are similar to the PPLNS model. In addition to the maturity window where ehash tokens are accruing value, there is also a redemption window where the ehash tokens can be traded in to the mint for bitcoin. There is also a bitcoin++ presentation from earlier this year where @vnprc explains the architecture.

April 26: Boerst adds a new page on stratum.work for block template details. You can click on any mining pool and see the extended details and visualization of its current block template. Updates happen in real time. The page displays all available template data including the OP_RETURN field, and if the pool is merge mining, like with RSK, then that will be displayed too. Stratum dot work is a great project that offers helpful mining insights; be sure to bookmark it if you haven’t already.

[IMG-005] New stratum.work live template page

April 27: Public Pool patches a Nerdminer exploit that made it possible to create the impression that a user’s Nerdminer was hashing many times more than it actually was. This exploit was used by scammers trying to convince people that they had a special firmware for the Nerdminer that would make it hash much better. In actuality, Public Pool just wasn’t checking whether submitted shares were duplicates. The scammers would tweak the Nerdminer firmware so that valid shares were submitted five times, creating the impression that the miner was hashing at five times the actual hashrate. Thankfully this has been uncovered by the open-source community and Public Pool quickly addressed it on their end.
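To make the Nerdminer exploit concrete, here is a minimal sketch of the kind of duplicate-share check a pool can perform. It is not Public Pool’s actual code; the `ShareTracker` class is hypothetical, and it assumes a share is identified by the fields a Stratum v1 miner submits (worker name, job ID, extranonce2, ntime, nonce).

```python
class ShareTracker:
    """Hypothetical helper that rejects shares it has already seen."""

    def __init__(self):
        self._seen = set()
        self.accepted = 0
        self.rejected = 0

    def submit(self, worker, job_id, extranonce2, ntime, nonce):
        """Return True if the share is new, False if it is a duplicate."""
        key = (worker, job_id, extranonce2, ntime, nonce)
        if key in self._seen:
            self.rejected += 1  # a resubmitted share earns no credit
            return False
        self._seen.add(key)
        self.accepted += 1
        return True


tracker = ShareTracker()
# A tweaked firmware submitting the same valid share five times.
for _ in range(5):
    tracker.submit("nerdminer-1", "job-42", "00000001", "6817b2f0", "1a2b3c4d")
print(tracker.accepted, tracker.rejected)
```

Without the check, all five submissions would count toward the reported hashrate; with it, only the first does. A real pool would also need to drop old entries when jobs expire so the set does not grow without bound.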

Grant Project Updates:

Three grant projects were launched on April 5: Mujina Mining Firmware, Hydra Pool, and Libre Board. Ember One was the first fully funded grant and launched in November 2024 for a six-month duration.

Ember One:

@skot9000 is the lead engineer on the Ember One, and April 30 marked the conclusion of the first grant cycle after six months of development, culminating in a standardized hashboard featuring ~100W power consumption, a 12-24V input voltage range, USB-C data communication, on-board temperature sensors, and a 125mm x 125mm form factor. There are several Ember One versions on the road map, each with a different kind of ASIC chip but staying true to the standardized features listed above. The first Ember One, the 00 version, was built with the Bitmain BM1362 ASIC chips. The first official release of the Ember One, v3, is available here. v4 is already being worked on and will incorporate a few circuit safety mechanisms that are pretty exciting, like protecting the ASIC chips in the event of a power supply failure. The firmware for the USB adaptor is available here. Initial testing firmware for the Ember One 00 can be found here and full firmware support will be coming soon with Mujina. The Ember One does not have an on-board controller, so a separate, USB-connected control board is required. Control board support is coming soon with the Libre Board. There is an in-depth schematic review that was recorded with Skot and Ryan, the lead developer for Mujina; you can see that video here. Timing for starting the second Ember One cycle is to be determined, but the next version of the Ember One is planned to have the Intel BZM2 ASICs. Learn more at emberone.org

Mujina Mining Firmware:

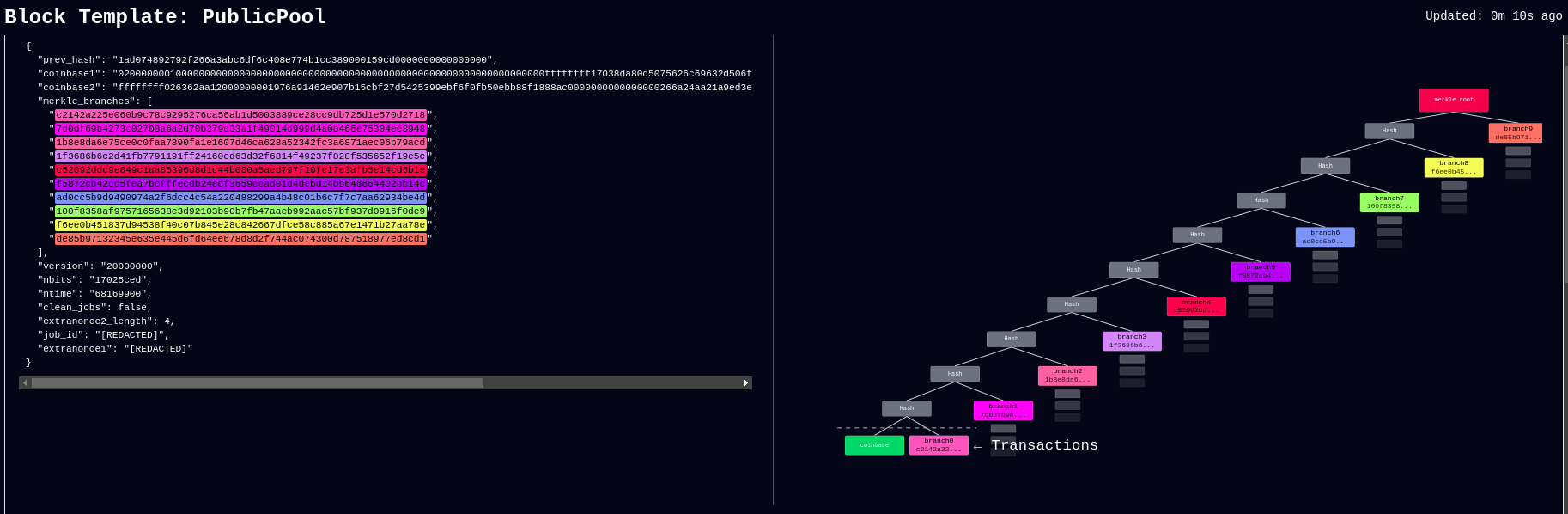

@ryankuester is the lead developer for the Mujina firmware project and, since the project launched on April 5, he has been working diligently to build this firmware from scratch in Rust. By using the bitaxe-raw firmware mentioned above, over the last month Ryan has been able to use a Bitaxe to simulate an Ember One so that he can start building the necessary interfaces to communicate with the range of sensors, ASICs, work handling, and API requests that will be necessary. For example, using a logic analyzer, this is what the first signs of life look like when communicating with an ASIC chip: the orange trace is a message being sent to the ASIC and the red trace below it is the ASIC responding [IMG-006]. The next step is to see if work can be sent to the ASIC and results returned. The GitHub repo for Mujina is currently set to private until a solid foundation has been built. Learn more at mujina.org

[IMG-006] First signs of life from an ASIC

Libre Board:

@Schnitzel is the lead engineer for the Libre Board project and over the last month has been modifying the Raspberry Pi Compute Module I/O Board open-source design to fit the requirements for this project. The changes so far include removing one of the two HDMI ports, adding the 40-pin header, and adapting the voltage regulator circuit so that it can accept the same 12-24V DC range as the Ember One hashboards. The GitHub repo can be found here, although there isn’t much to look at yet as the designs are still in the works. If you have feature requests, creating an issue in the GitHub repo would be a good place to start. Learn more at libreboard.org

Hydra Pool:

@jungly is the lead developer for Hydra Pool and over the last month he has developed a working early version of Hydra Pool specifically for the upcoming Telehash #2. Forked from CK Pool, this early version has been modified so that the payout goes to the 256 Foundation bitcoin address automatically. This way, users who are supporting the fundraiser with their hashrate do not need to copy/paste in the bitcoin address; they can just use any vanity username they want. Jungly was also able to get a great looking statistics dashboard forked from CKstats and modify it so that the data is populated from the Hydra Pool server instead of website crawling. After the Telehash, the next steps will be setting up deployment scripts for running Hydra Pool on a cloud server, support for storing shares in a database, and adding PPLNS support. The 256 Foundation is only running a publicly accessible server for the Telehash, and the long-term goal for Hydra Pool is that users host their own instance. The 256 Foundation has no plans to become a mining pool operator. The following Actionable Advice column shows you how you can help test Hydra Pool. The GitHub repo for Hydra Pool can be found here. Learn more at hydrapool.org

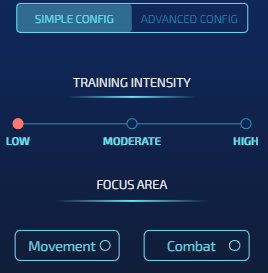

Actionable Advice:

The 256 Foundation is looking for testers to help try out Hydra Pool. The current instance is on a hosted bare metal server in Florida and features 64 cores and 128 GB of RAM. One tester in Europe shared that they were only experiencing ~70ms of latency which is good. If you want to help test Hydra Pool out and give any feedback, you can follow the directions below and join The 256 Foundation public forum on Telegram here.

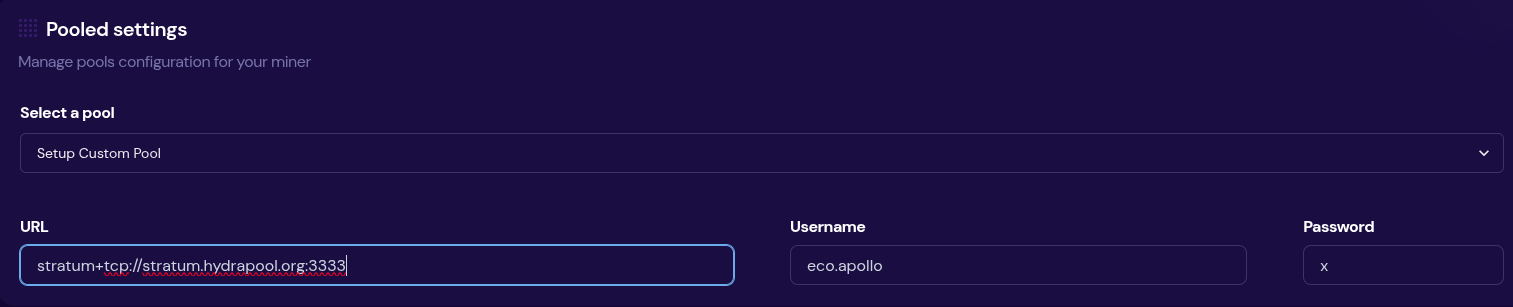

The first step is to configure your miner so that it is pointed to the Hydra Pool server. This can look different depending on your specific miner but generally speaking, from the settings page you can add the following URL:

stratum+tcp://stratum.hydrapool.org:3333

On some miners, you don’t need the “stratum+tcp://” part or the port, “:3333”, in the URL dialog box, and there may be a separate dialog box for the port.

Use any vanity username you want; there is no need to add a BTC address. The test iteration of Hydra Pool is configured to pay out to the 256 Foundation BTC address.

If your miner has a password field, you can just put “x” or “1234”. It doesn’t matter, as this field is ignored.
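For miners that ask for the host and port separately, the pool URL can be split with Python’s standard library. This is only a sketch: the `miner_settings` helper, the dictionary keys, and the example username are hypothetical, and your miner’s settings page may name these fields differently.

```python
from urllib.parse import urlsplit


def miner_settings(pool_url, username, password="x"):
    """Split a stratum URL into the fields a miner settings page typically asks for."""
    parts = urlsplit(pool_url)
    return {
        "host": parts.hostname,  # goes in the URL box when the port is separate
        "port": parts.port,      # goes in the port box, if there is one
        "user": username,        # any vanity name; no BTC address needed
        "pass": password,        # ignored by the pool
    }


settings = miner_settings("stratum+tcp://stratum.hydrapool.org:3333", "my-vanity-name")
print(settings)
```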

Then save your changes and restart your miner. Here are two examples of what this can look like using a Futurebit Apollo and a Bitaxe:

[IMG-007] Apollo configured to Hydra Pool

[IMG-007] Apollo configured to Hydra Pool [IMG-008] Bitaxe Configured to Hydra Pool

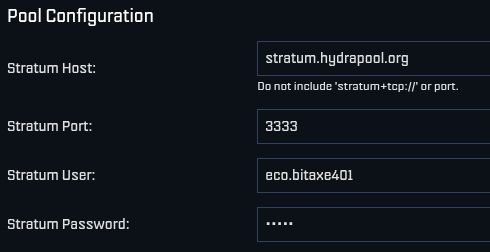

[IMG-008] Bitaxe Configured to Hydra PoolOnce you get started, be sure to check stats.hydrapool.org to monitor the solo pool statistics.

[IMG-009] Ember One hashing to Hydra Pool

At the last Telehash, over 350 entities pointed as much as 1.12Eh/s at the fundraiser at the peak. At the time the block was found, there was closer to 800 Ph/s of hashrate. At this next Telehash, The 256 Foundation is looking to beat the previous records across the board. You can find all the Telehash details on the Meetup page here.

State of the Network:

Hashrate on the 14-day MA according to mempool.space increased from ~826 Eh/s to a peak of ~907 Eh/s on April 16 before cooling off and finishing the month at ~841 Eh/s, marking ~1.8% growth for the month.

[IMG-010] 2025 hashrate/difficulty chart from mempool.space

Difficulty was 113.76T at its lowest in April and 123.23T at its highest, which is an 8.3% increase for the month. But difficulty dropped with Epoch #444 just after the end of the month on May 3, bringing a 3.3% downward adjustment. Altogether for 2025 up to Epoch #444, difficulty has gone up ~8.5%.
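The monthly growth figures for hashrate and difficulty are plain percentage changes, and they can be reproduced from the start and end values quoted in this section. The helper name `pct_change` is just for illustration.

```python
def pct_change(start, end):
    """Percentage change from start to end."""
    return (end - start) / start * 100


# Hashrate, 14-day MA: ~826 Eh/s at the start of April, ~841 Eh/s at the end.
print(f"hashrate:   {pct_change(826, 841):.1f}%")

# Difficulty: 113.76T at its lowest in April, 123.23T at its highest.
print(f"difficulty: {pct_change(113.76, 123.23):.1f}%")
```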

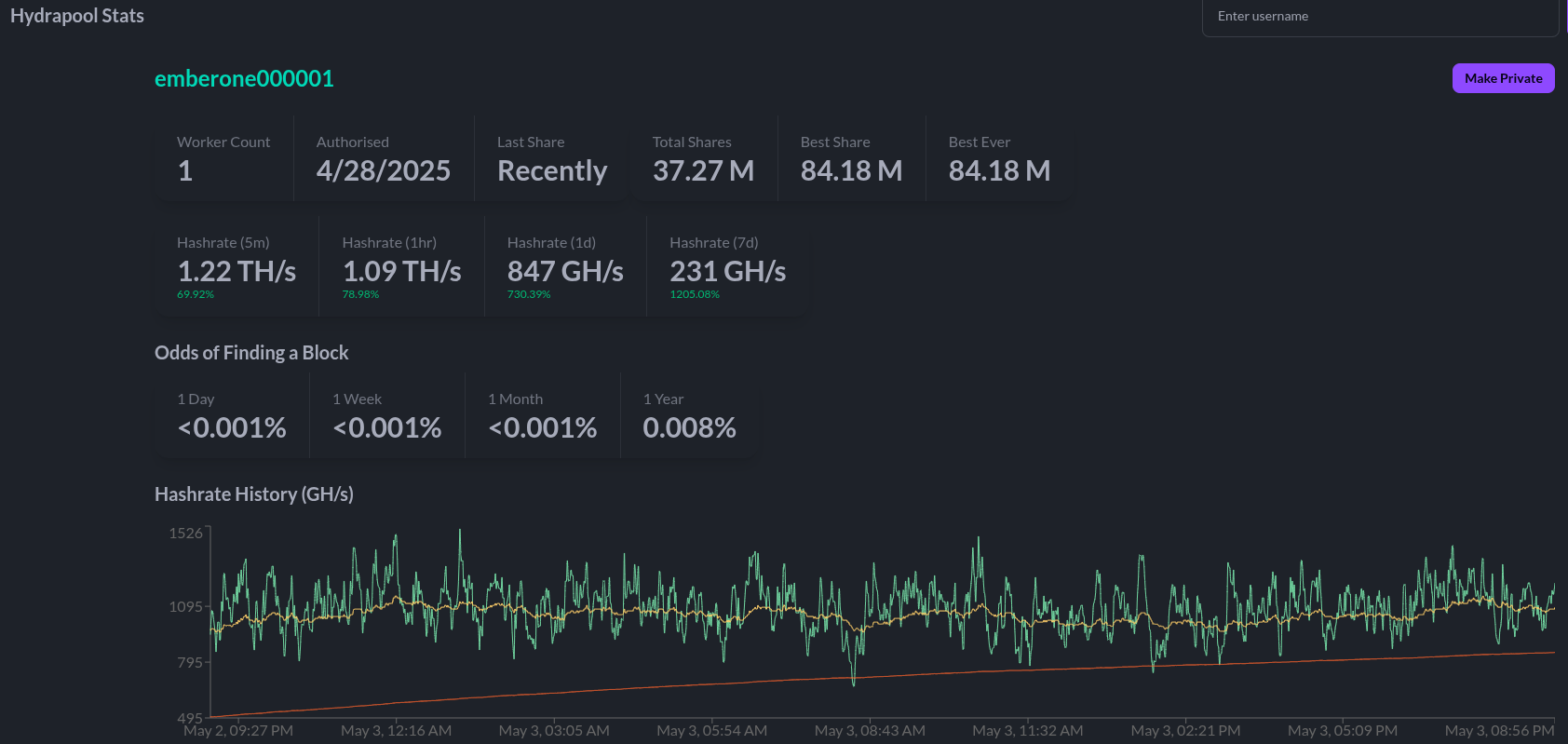

According to the Hashrate Index, ASIC prices have flat-lined over the last month. The more efficient miners like the <19 J/Th models are fetching $17.29 per terahash, models between 19J/Th – 25J/Th are selling for $11.05 per terahash, and models >25J/Th are selling for $3.20 per terahash. You can expect to pay roughly $4,000 for a new-gen miner with 230+ Th/s.
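As a quick sanity check on the “roughly $4,000” figure, the per-terahash prices can be multiplied by a machine’s hashrate. This is a sketch using only the numbers quoted above; the tier labels and the `estimate_price` helper are made up for illustration.

```python
# USD per terahash by efficiency tier, as quoted from the Hashrate Index.
PRICE_PER_TH = {
    "under_19_j_per_th": 17.29,
    "19_to_25_j_per_th": 11.05,
    "over_25_j_per_th": 3.20,
}


def estimate_price(hashrate_th, tier):
    """Estimated miner price in USD for a given hashrate (Th/s) and tier."""
    return hashrate_th * PRICE_PER_TH[tier]


print(f"${estimate_price(230, 'under_19_j_per_th'):,.0f}")
```

A 230 Th/s machine in the most efficient tier comes out just under $4,000, in line with the estimate above.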

[IMG-011] Miner Prices from Luxor’s Hashrate Index

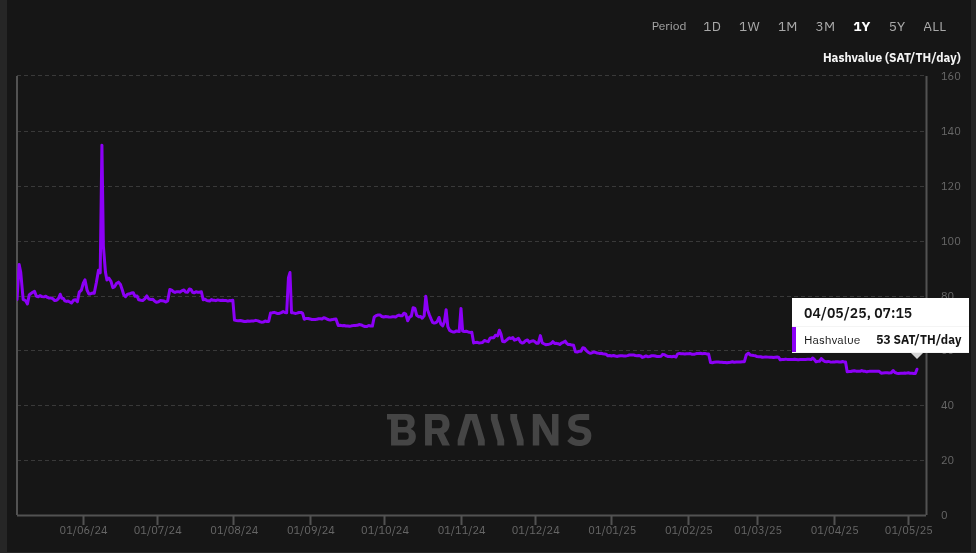

Hashvalue over the month of April dropped from ~56,000 sats/Ph per day to ~52,000 sats/Ph per day, according to the new and improved Braiins Insights dashboard [IMG-012]. Hashprice started out at $46.00/Ph per day at the beginning of April and climbed to $49.00/Ph per day by the end of the month.

[IMG-012] Hashprice/Hashvalue from Braiins Insights
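Hashvalue fell while hashprice rose because hashprice is hashvalue converted to dollars, so a rising bitcoin price can outweigh falling sats-denominated revenue. A rough sketch of the bitcoin price implied by the rounded figures above (the function name is hypothetical, and the results are only as precise as those rounded inputs):

```python
SATS_PER_BTC = 100_000_000


def implied_btc_price(hashprice_usd, hashvalue_sats):
    """BTC/USD price implied by a hashprice (USD) and hashvalue (sats) for the same unit of hashrate."""
    return hashprice_usd / hashvalue_sats * SATS_PER_BTC


print(f"start of April: ~${implied_btc_price(46.00, 56_000):,.0f}")
print(f"end of April:   ~${implied_btc_price(49.00, 52_000):,.0f}")
```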

The next halving will occur at block height 1,050,000, which should be in roughly 1,063 days or, in other words, ~154,650 blocks from the time of publishing this newsletter.
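The halving countdown can be reproduced from the block count. The sketch below assumes the 10-minute target block interval, which gives a slightly longer estimate than the figure above; the difference suggests the estimate above was made with blocks arriving a little faster than target.

```python
HALVING_HEIGHT = 1_050_000
BLOCKS_REMAINING = 154_650  # as quoted at time of publishing
SECONDS_PER_DAY = 86_400

current_height = HALVING_HEIGHT - BLOCKS_REMAINING

# Days remaining if every block takes exactly the 10-minute target.
days_at_target = BLOCKS_REMAINING * 600 / SECONDS_PER_DAY

# Average block interval implied by the ~1,063-day estimate.
implied_minutes = 1_063 * SECONDS_PER_DAY / BLOCKS_REMAINING / 60

print(current_height)
print(f"{days_at_target:.0f} days at the 10-minute target")
print(f"{implied_minutes:.1f} minutes per block implied by 1,063 days")
```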

Conclusion:

Thank you for reading the fifth 256 Foundation newsletter. Keep an eye out for more newsletters on a monthly basis in your email inbox by subscribing at 256foundation.org, where you can also download .pdf versions of the newsletters. You can also find these newsletters published in article form on Nostr.

If you haven’t done so already, be sure to RSVP for the Texas Energy & Mining Summit (“TEMS”) in Austin, Texas on May 6 & 7 for two days of the highest Bitcoin mining and energy signal in the industry, set in the intimate Bitcoin Commons, so you can meet and mingle with the best and brightest movers and shakers in the space.

[IMG-013] TEMS 2025 flyer

While you’re at it, extend your stay and spend Cinco De Mayo with The 256 Foundation at our second fundraiser, Telehash #2. Everything is bigger in Texas, so set your expectations high for this one. All of the lead developers from the grant projects will be present to talk first-hand about how to dismantle the proprietary mining empire.

If you are interested in helping The 256 Foundation test Hydra Pool, then hopefully you found all the information you need to configure your miner in this issue.

[IMG-014] FREE SAMOURAI

If you want to continue seeing developers build free and open solutions, be sure to support the Samourai Wallet developers by making a tax-deductible contribution to their legal defense fund here. The first step in ensuring a future of free and open Bitcoin development starts with freeing these developers.

Live Free or Die,

-econoalchemist

-

@ 51faaa77:2c26615b

2025-05-04 17:52:33

There has been a lot of debate about a recent discussion on the mailing list and a pull request on the Bitcoin Core repository. The two main points are whether a mempool policy regarding OP_RETURN outputs should be changed, and whether there should be a configuration option for node operators to set their own limit. There has been some controversy about the background and context of these topics and people are looking for more information. Please ask short (preferably one-sentence) questions as top comments in this topic. @Murch, and maybe others, will try to answer them in a couple of sentences. @Murch and I have collected a few questions that we have seen being asked to start us off, but please add more as you see fit.

originally posted at https://stacker.news/items/971277

-

@ 90c656ff:9383fd4e

2025-05-04 17:48:58

The Bitcoin network was designed to be secure, decentralized, and resistant to censorship. However, as its usage grows, an important challenge arises: scalability. This term refers to the network's ability to manage an increasing number of transactions without affecting performance or security. This challenge has sparked the speed dilemma, which involves balancing transaction speed with the preservation of decentralization and security that the blockchain or timechain provides.

Scalability is the ability of a system to increase its performance to meet higher demands. In the case of Bitcoin, this means processing a greater number of transactions per second (TPS) without compromising the network's core principles.

Currently, the Bitcoin network processes about 7 transactions per second, a number considered low compared to traditional systems, such as credit card networks, which can process thousands of transactions per second. This limit follows directly from the capped block size (originally 1 MB) and the average 10-minute interval for creating a new block in the blockchain or timechain.
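The figure of about 7 transactions per second can be approximated with back-of-the-envelope arithmetic. The sketch below assumes an average transaction size of 250 bytes, which is an illustrative value rather than a measured one; real throughput varies with the mix of transaction types.

```python
BLOCK_SIZE_BYTES = 1_000_000   # the 1 MB block size mentioned above
BLOCK_INTERVAL_SECONDS = 600   # one block every 10 minutes on average
AVG_TX_SIZE_BYTES = 250        # assumption, for illustration only

tx_per_block = BLOCK_SIZE_BYTES // AVG_TX_SIZE_BYTES
tps = tx_per_block / BLOCK_INTERVAL_SECONDS

print(f"{tx_per_block} transactions per block, about {tps:.1f} per second")
```

Doubling the block size or halving the interval would double this number, which is exactly the trade-off the rest of the article discusses.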

The speed dilemma arises from the need to balance three essential elements: decentralization, security, and speed.

The Timechain/"Blockchain" Trilemma:

01 - Decentralization: The Bitcoin network is composed of thousands of independent nodes that verify and validate transactions. Increasing the block size or producing blocks faster could raise computational requirements, making it harder for smaller nodes to participate and affecting decentralization.

02 - Security: Security comes from the mining process and block validation. Increasing transaction speed could compromise security, as it would reduce the time available to verify each block, making the network more vulnerable to attacks.

03 - Speed: The need to confirm transactions quickly is crucial for Bitcoin to be used as a payment method in everyday life. However, prioritizing speed could affect both security and decentralization.

This dilemma requires balanced solutions to expand the network without sacrificing its core features.

Solutions to the Scalability Problem

Several solutions have been suggested to address the scalability and speed challenges in the Bitcoin network.

- On-Chain Optimization

01 - Segregated Witness (SegWit): Implemented in 2017, SegWit separates signature data from transactions, allowing more efficient use of space in blocks and increasing capacity without changing the block size.

02 - Increasing Block Size: Some proposals have suggested increasing the block size to allow more transactions per block. However, this could make the system more centralized as it would require greater computational power.

- Off-Chain Solutions

01 - Lightning Network: A second-layer solution that enables fast and low-cost transactions off the main blockchain or timechain. These transactions are later settled on the main network, maintaining security and decentralization.

02 - Payment Channels: Allow direct transactions between two users without the need to record every action on the network, reducing congestion.

03 - Sidechains: Proposals that create parallel networks connected to the main blockchain or timechain, providing more flexibility and processing capacity.
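The idea behind payment channels can be shown with a toy model: only opening and closing the channel touch the main chain, while payments in between just update balances held by the two parties. This is a deliberately simplified sketch with hypothetical names; it leaves out signatures, timelocks, and everything else a real Lightning channel needs.

```python
class ToyChannel:
    """Toy two-party channel: balances change off-chain, settlement is on-chain."""

    def __init__(self, alice_sats, bob_sats):
        self.balances = {"alice": alice_sats, "bob": bob_sats}
        self.onchain_txs = 1  # the funding transaction
        self.updates = 0      # off-chain balance updates
        self.is_open = True

    def pay(self, sender, receiver, amount):
        """Move funds inside the channel without touching the chain."""
        if not self.is_open:
            raise RuntimeError("channel is closed")
        if amount <= 0 or self.balances[sender] < amount:
            raise ValueError("insufficient channel liquidity")
        self.balances[sender] -= amount
        self.balances[receiver] += amount
        self.updates += 1

    def close(self):
        """Settle the final balances with one more on-chain transaction."""
        self.is_open = False
        self.onchain_txs += 1
        return dict(self.balances)


channel = ToyChannel(alice_sats=100_000, bob_sats=0)
channel.pay("alice", "bob", 30_000)
channel.pay("bob", "alice", 5_000)
print(channel.close(), channel.onchain_txs, channel.updates)
```

Bob cannot pay anything until he has received funds in the channel, which is the initial-liquidity limitation this article raises about the Lightning Network.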

While these solutions bring significant improvements, they also present issues. For example, the Lightning Network depends on payment channels that require initial liquidity, limiting its widespread adoption. Increasing block size could make the system more susceptible to centralization, impacting network security.

Additionally, second-layer solutions may require extra trust between participants, which could weaken the decentralization and resistance to censorship principles that Bitcoin advocates.

Another important point is the need for large-scale adoption. Even with technological advancements, solutions will only be effective if they are widely used and accepted by users and developers.

In summary, scalability and the speed dilemma represent one of the greatest technical challenges for the Bitcoin network. While security and decentralization are essential to maintaining the system's original principles, the need for fast and efficient transactions makes scalability an urgent issue.

Solutions like SegWit and the Lightning Network have shown promising progress, but still face technical and adoption barriers. The balance between speed, security, and decentralization remains a central goal for Bitcoin’s future.

Thus, the continuous pursuit of innovation and improvement is essential for Bitcoin to maintain its relevance as a reliable and efficient network, capable of supporting global growth and adoption without compromising its core values.

Thank you very much for reading this far. I hope everything is well with you, and I'm sending a big hug from your favorite Bitcoin maximalist from Madeira. Long live freedom!

-

@ a5ee4475:2ca75401

2025-05-04 17:22:36

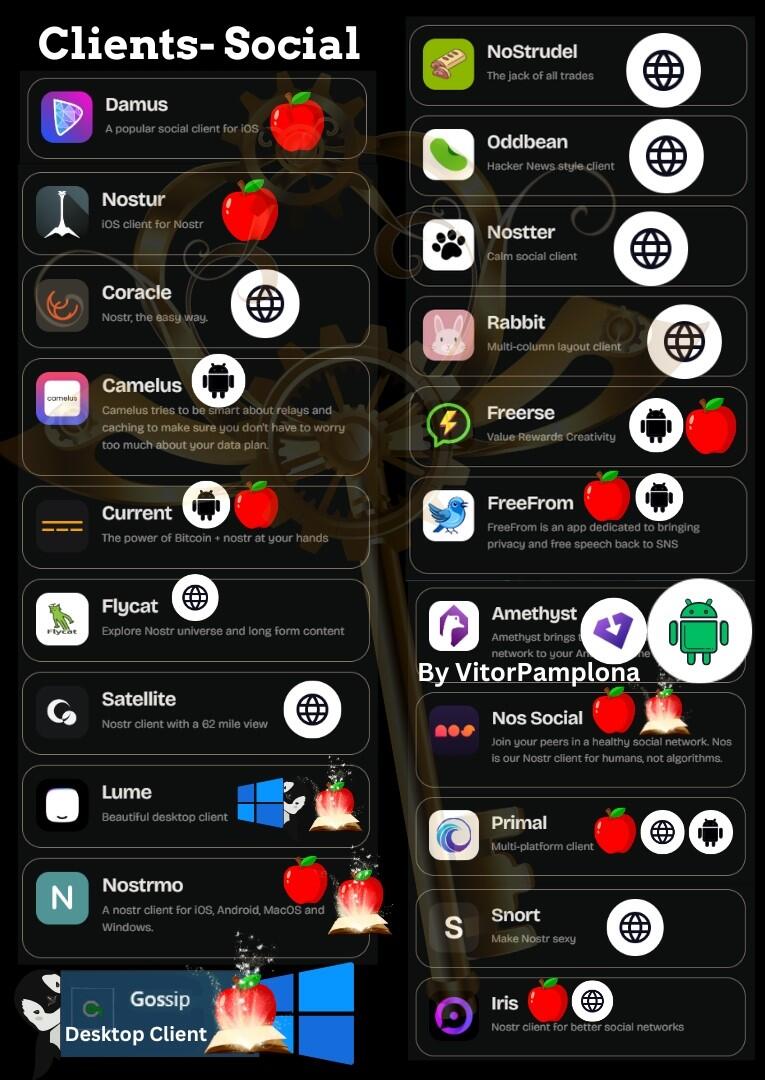

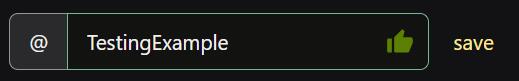

#clients #list #descentralismo #english #article #finalversion

*These clients are generally applications on the Nostr network that allow you to use the same account, regardless of the app used, keeping your messages and profile intact.

**However, you may need to meet certain requirements regarding access and account NIP for some clients, so that you can access them securely and use their features correctly.

CLIENTS

Twitter like

- Nostrmo - [source] 🌐🤖🍎💻(🐧🪟🍎)

- Coracle - Super App [source] 🌐

- Amethyst - Super App with note edit, delete and other stuff with Tor [source] 🤖

- Primal - Social and wallet [source] 🌐🤖🍎

- Iris - [source] 🌐🤖🍎

- Current - [source] 🤖🍎

- FreeFrom 🤖🍎

- Openvibe - Nostr and others (new Plebstr) [source] 🤖🍎

- Snort 🌐(🤖[early access]) [source]

- Damus 🍎 [source]

- Nos 🍎 [source]

- Nostur 🍎 [source]

- NostrBand 🌐 [info] [source]

- Yana 🤖🍎🌐💻(🐧) [source]

- Nostribe [in development] 🌐 [source]

- Lume 💻(🐧🪟🍎) [info] [source]

- Gossip - [source] 💻(🐧🪟🍎)

- Camelus [early access] 🤖 [source]

Communities

- noStrudel - Gamified Experience [info] 🌐

- Nostr Kiwi [creator] 🌐

- Satellite [info] 🌐

- Flotilla - [source] 🌐🐧

- Chachi - [source] 🌐

- Futr - Coded in Haskell [source] 🐧 (others soon)

- Soapbox - Community server [info] [source] 🌐

- Ditto - Soapbox community server [source] 🌐

- Cobrafuma - Brazilian Nostr community on Ditto [info] 🌐

- Zapddit - Reddit like [source] 🌐

- Voyage - Reddit like [in development] 🤖

Wiki

Search

- Advanced nostr search - Advanced note search by isolated terms related to a npub profile [source] 🌐

- Nos Today - Global note search by isolated terms [info] [source] 🌐

- Nostr Search Engine - API for Nostr clients [source]

Website

App Store

- ZapStore - Permissionless App Store [source]

Audio and Video Transmission

- Nostr Nests - Audio Chats 🌐 [info]

- Fountain - Podcast 🤖🍎 [info]

- ZapStream - Live streaming 🌐 [info]

- Corny Chat - Audio Chat 🌐 [info]

Video Streaming

Music

- Tidal - Music Streaming [source] [about] [info] 🤖🍎🌐

- Wavlake - Music Streaming [source] 🌐(🤖🍎 [early access])

- Tunestr - Musical Events [source] [about] 🌐

- Stemstr - Musical Collab (paid to post) [source] [about] 🌐

Images

- Pinstr - Pinterest like [source] 🌐

- Slidestr - DeviantArt like [source] 🌐

- Memestr - iFunny like [source] 🌐

Download and Upload

Documents, graphics and tables

- Mindstr - Mind maps [source] 🌐

- Docstr - Share Docs [info] [source] 🌐

- Formstr - Share Forms [info] 🌐

- Sheetstr - Share Spreadsheets [source] 🌐

- Slide Maker - Share slides 🌐 (advice: https://zaplinks.lol/ and https://zaplinks.lol/slides/ sites are down)

Health

- Sobrkey - Sobriety and mental health [source] 🌐

- NosFabrica - Finding ways for your health data 🌐

- LazerEyes - Eye prescription by DM [source] 🌐

Forum

- OddBean - Hacker News like [info] [source] 🌐

- LowEnt - Forum [info] 🌐

- Swarmstr - Q&A / FAQ [info] 🌐

- Stacker News - Hacker News like 🌐 [info]

Direct Messages (DM)

- 0xchat 🤖🍎 [source]

- Nostr Chat 🌐🍎 [source]

- Blowater 🌐 [source]

- Anigma (new nostrgram) - Telegram based [in development] [source]

- Keychat - Signal based [🤖🍎 in development] [source]

Reading

- Highlighter - Insights with a highlighted read 🌐 [info]

- Zephyr - Calming to Read 🌐 [info]

- Flycat - Clean and Healthy Feed 🌐 [info]

- Nosta - Check Profiles [in development] 🌐 [info]

- Alexandria - e-Reader and Nostr Knowledge Base (NKB) [source]

Writing

Lists

- Following - Users list [source] 🌐

- Listr - Lists [source] 🌐

- Nostr potatoes - Movies List [source] 💻(numpy)

Market and Jobs

- Shopstr - Buy and Sell [source] 🌐

- Nostr Market - Buy and Sell 🌐

- Plebeian Market - Buy and Sell [source] 🌐

- Ostrich Work - Jobs [source] 🌐

- Nostrocket - Jobs [source] 🌐

Data Vending Machines - DVM (NIP90)

(Data-processing tools)

AI

Games

- Chesstr - Chess 🌐 [source]

- Jestr - Chess [source] 🌐

- Snakestr - Snake game [source] 🌐

- DEG Mods - Decentralized Game Mods [info] [source] 🌐

Customization

Like other Services

- Olas - Instagram like [source] 🤖🍎🌐

- Nostree - Linktree like 🌐

- Rabbit - TweetDeck like [info] 🌐

- Zaplinks - Nostr links 🌐

- Omeglestr - Omegle-like Random Chats [source] 🌐

General Uses

- Njump - HTML text gateway [source] 🌐

- Filestr - HTML media gateway [source] 🌐

- W3 - Nostr URL shortener [source] 🌐

- Playground - Test Nostr filters [source] 🌐

- Spring - Browser 🌐

Places

- Wherostr - Travel and show where you are

- Arc Map (Mapstr) - Bitcoin Map [info]

Driver and Delivery

- RoadRunner - Uber like [in development] ⏱️

- Arcade City - Uber like [in development] ⏱️ [info]

- Nostrlivery - iFood like [in development] ⏱️

OTHER STUFF

Lightning Wallets (zap)

- Alby - Native and extension [info] 🌐

- ZBD - Gaming and Social [info] 🤖🍎

- Wallet of Satoshi [info] 🤖🍎

- Minibits - Cashu mobile wallet [info] 🤖

- Blink - Open-source custodial wallet (KYC over 1000 USD) [source] 🤖🍎

- LNbits - App and extension [source] 🤖🍎💻

- Zeus - [info] [source] 🤖🍎

Exchange

Media Server (Upload Links)

audio, image and video

- Nostr Build - [source] 🌐

- Nostr Check - [info] [source] 🌐

- NostPic - [source] 🌐

- Sovbit 🌐

- Voidcat - [source] 🌐

Without NIP:

- Pomf - Upload larger videos [source]

- Catbox - [source]

- x0 - [source]

Donation and payments

- Zapper - Easy Zaps [source] 🌐

- Autozap [source] 🌐

- Zapmeacoffee 🌐

- Nostr Zap 💻(numpy)

- Creatr - Creators subscription 🌐

- Geyser - Crowdfunding [info] [source] 🌐

- Heya! - Crowdfunding [source]

Security

- Secret Border - Generate offline keys 💻(java)

- Umbrel - Your private relay [source] 🌐

Extensions

- Nos2x - Account access keys 🌐

- Nsec.app 🌐 [info]

- Lume - [info] [source] 🐧🪟🍎

- Satcom - Share files to discuss - [info] 🌐

- KeysBand - Multi-key signing [source] 🌐

Code

- Nostrify - Share Nostr Frameworks 🌐

- Git Workshop (GitHub like) [experimental] 🌐

- Gitstr (GitHub like) [in development] ⏱️

- Osty [in development] [info] 🌐

- Python Nostr - Python Library for Nostr

Relay Check and Cloud

- Nostr Watch - See your relay speed 🌐

- NosDrive - Nostr Relay that saves to Google Drive

Bridges and Gateways

- Matrixtr Bridge - Between Matrix & Nostr

- Mostr - Between Nostr & Fediverse

- Nostrss - RSS to Nostr

- Rsslay - Optimized RSS to Nostr [source]

- Atomstr - RSS/Atom to Nostr [source]

NOT RELATED TO NOSTR

Android Keyboards

Personal notes and texts

Front-ends

- Nitter - Twitter / X without your data [source]

- NewPipe - YouTube, PeerTube and others, without an account and without your data [source] 🤖

- Piped - YouTube web without your data [source] 🌐

Other Services

- Brave - Browser [source]

- DuckDuckGo - Search [source]

- LLaMA - Meta open source AI [source]

- DuckDuckGo AI Chat - Famous AIs without Login [source]

- Proton Mail - Mail [source]

Other open source index: Degoogled Apps

Some other Nostr index on:

-

@ c7aa97dc:0d12c810

2025-05-04 17:06:47

COLDCARD’s new Co-Sign feature lets you use a multisig (2 of N) wallet where the second key (policy key) lives inside the same COLDCARD and signs only when a transaction meets the rules you set, for example:

- Maximum amount per send (e.g. 500k sats)

- Wait time between sends (e.g. 144 blocks = 1 day)

- Only send to approved addresses

- Only send after you provide a 2FA code

If a payment follows the rules, COLDCARD automatically signs the transaction with 2 keys which makes it feel like a single-sig wallet.

Break a rule and the device only signs with 1 key, so nothing moves unless you sign the transaction with a separate off-site recovery key.

It’s the convenience of singlesig with the guard-rails of multisig.
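The rule check described above can be sketched in a few lines of Python. This is only an illustration of the idea, not COLDCARD firmware or its actual API: the class names, field names, and the `signatures_added` helper are all hypothetical, and the four rules simply mirror the list above.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

BLOCKS_PER_DAY = 144  # same approximation as in the list above


@dataclass(frozen=True)
class SpendingPolicy:  # hypothetical container for the rules you set
    max_sats_per_send: Optional[int] = None
    min_blocks_between_sends: int = 0
    approved_addresses: FrozenSet[str] = frozenset()  # empty = any address
    require_2fa: bool = False


@dataclass(frozen=True)
class SpendRequest:  # hypothetical description of one transaction
    amount_sats: int
    destination: str
    current_height: int
    two_factor_ok: bool = False


def violations(policy: SpendingPolicy, request: SpendRequest,
               last_send_height: Optional[int]) -> List[str]:
    """Return the list of broken rules; an empty list means the policy key may sign."""
    problems = []
    if (policy.max_sats_per_send is not None
            and request.amount_sats > policy.max_sats_per_send):
        problems.append("amount exceeds per-send limit")
    if (last_send_height is not None
            and request.current_height - last_send_height
            < policy.min_blocks_between_sends):
        problems.append("wait time has not elapsed")
    if (policy.approved_addresses
            and request.destination not in policy.approved_addresses):
        problems.append("destination is not on the approved list")
    if policy.require_2fa and not request.two_factor_ok:
        problems.append("2FA code missing")
    return problems


def signatures_added(policy: SpendingPolicy, request: SpendRequest,
                     last_send_height: Optional[int]) -> int:
    """2 when every rule passes (main key + policy key), otherwise 1."""
    return 2 if not violations(policy, request, last_send_height) else 1


policy = SpendingPolicy(max_sats_per_send=500_000,
                        min_blocks_between_sends=BLOCKS_PER_DAY)
ok = SpendRequest(amount_sats=400_000, destination="bc1-example", current_height=1_000)
too_big = SpendRequest(amount_sats=600_000, destination="bc1-example", current_height=1_000)
```

With a last send at height 800, `ok` passes both rules and would get two signatures, while `too_big` breaks the amount limit and would get only one, so it could not move without the separate recovery key.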

Use Cases Unlocked

Below is an overview of use cases unlocked by this security-enhancing feature for everyday bitcoiners, families, and small businesses.

1. Travel Lock-Down Mode

Before you leave, set the wait-time to match the duration of your trip—say 14 days—and cap each spend at 50k sats. If someone finds the COLDCARD while you’re away, they can take only one 50k-sat nibble and then must wait the full two weeks—long after you’re back—to try again. When you notice your device is gone you can quickly restore your wallet with your backup seeds (not in your house of course) and move all the funds to a new wallet.

2. Shared-Safety Wallet for Parents or Friends

Help your parents or friends set up a COLDCARD with Co-Sign, cap each spend at 500 000 sats and enforce a 7-day gap between transactions. Everyday spending sails through; anything larger waits for your co-signature from your key. A thief can’t steal more than the capped amount per week, and your parents retain full sovereignty: if you disappear, they still hold two backup seeds and can either withdraw slowly under the limits or import those seeds into another signer and move everything at once.

3. My First COLDCARD Wallet

Give your kid a COLDCARD, but whitelist only their own addresses and set a 100k sat ceiling. They learn self-custody, yet external spends still need you to co-sign.

4. Weekend-Only Spending Wallet

Cap each withdrawal (e.g., 500k sats) and require a 72-hour gap between sends. You can still top up Lightning channels or pay bills weekly, but attackers who have access to your device and PIN will not be able to drain it immediately.

5. DIY Business Treasury

Finance staff use the COLDCARD to pay routine invoices under 0.1 BTC. Anything larger needs the co-founder’s off-site backup key.

6. Donation / Grant Disbursement Wallet

Publish the deposit address publicly, but allow outgoing payments only to a fixed list of beneficiary addresses. Even if attackers get the device, they can’t redirect funds to themselves—the policy key refuses to sign.

7. Phoenix Lightning Wallet Top-Up

Add the on-chain deposit addresses of a Phoenix Lightning wallet to the whitelist. The COLDCARD will co-sign only when you’re refilling channels. This is of course not limited to Phoenix and can be used with any Lightning node.

8. Deep Cold-Storage Bridge

Whitelist one or more addresses from your bitcoin vault. Day-to-day you sweep incoming hot-wallet funds (from a webshop or Lightning node) into the COLDCARD, then push funds onward to deep cold storage. If the device is compromised, coins can only land safely in the vault.

9. Company Treasury → Payroll Wallets

List each employee’s salary wallet on the whitelist (watch out for address re-use) and cap the amount per send. Routine payroll runs smoothly, while attackers or rogue insiders can’t reroute funds elsewhere.

10. Phone Spending-Wallet Refills

Whitelist only some deposit addresses of your mobile wallet and set a small per-send cap. You can top up anytime, but an attacker with the device and PIN can’t drain more than the refill limit—and only to your own phone.

I hope these use cases are helpful, and I'm curious to hear what other use cases you think are possible with this co-signing feature.

For deeper technical details on how Co-Sign works, refer to the official documentation on the Coldcard website: https://coldcard.com/docs/coldcard-cosigning/

You can also watch their video: https://www.youtube.com/watch?v=MjMPDUWWegw

#coldcard #coinkite #bitcoin #selfcustody #multisig #mk4 #ccq

nostr:npub1az9xj85cmxv8e9j9y80lvqp97crsqdu2fpu3srwthd99qfu9qsgstam8y8 nostr:npub12ctjk5lhxp6sks8x83gpk9sx3hvk5fz70uz4ze6uplkfs9lwjmsq2rc5ky

-

@ 90c656ff:9383fd4e

2025-05-04 17:06:06

In the Bitcoin system, the protection and ownership of funds are ensured by a cryptographic model that uses private and public keys. These components are fundamental to digital security, allowing users to manage and safeguard their assets in a decentralized way. This process removes the need for intermediaries, ensuring that only the legitimate owner has access to the balance linked to a specific address on the blockchain or timechain.

Private and public keys are part of an asymmetric cryptographic system, where two distinct but mathematically linked codes are used to guarantee the security and authenticity of transactions.

Private Key = A secret code, usually represented as a long string of numbers and letters.

Functions like a password that gives the owner control over the bitcoins tied to a specific address.

Must be kept completely secret, as anyone with access to it can move the corresponding funds.

Public Key = Mathematically derived from the private key, but it cannot be used to uncover the private key.

Functions as a digital address, similar to a bank account number, and can be freely shared to receive payments.

Used to verify the authenticity of signatures generated with the private key.

Together, these keys ensure that transactions are secure and verifiable, eliminating the need for intermediaries.

The functioning of private and public keys is based on elliptic curve cryptography. When a user wants to send bitcoins, they use their private key to digitally sign the transaction. This signature is unique for each operation and proves that the sender possesses the private key linked to the sending address.

Bitcoin network nodes check this signature using the corresponding public key to ensure that:

01 - The signature is valid.

02 - The transaction has not been altered since it was signed.

03 - The sender is the legitimate owner of the funds.

If the signature is valid, the transaction is recorded on the blockchain or timechain and becomes irreversible. This process protects funds against fraud and double-spending.
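To make the sign-and-verify step concrete, here is a minimal, educational sketch of ECDSA over secp256k1 (the curve Bitcoin uses) in pure Python. It makes several simplifying assumptions that are not how real wallets work: the message is an arbitrary byte string rather than a serialized Bitcoin transaction, the nonce is derived from a plain hash instead of RFC 6979, and nothing here is constant-time. It should never be used to protect real funds.

```python
import hashlib

# secp256k1 domain parameters (curve: y^2 = x^3 + 7 over the field of size P)
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)


def point_add(a, b):
    """Add two curve points; None represents the point at infinity."""
    if a is None:
        return b
    if b is None:
        return a
    (x1, y1), (x2, y2) = a, b
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if a == b:
        lam = 3 * x1 * x1 * pow(2 * y1 % P, -1, P) % P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)


def scalar_mult(k, point):
    """Double-and-add multiplication of a point by an integer."""
    result = None
    while k:
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def message_hash(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def public_key(private_key: int):
    return scalar_mult(private_key, G)


def sign(private_key: int, message: bytes):
    z = message_hash(message)
    # Simplified deterministic nonce (an assumption of this sketch, not RFC 6979)
    seed = private_key.to_bytes(32, "big") + message
    k = int.from_bytes(hashlib.sha256(seed).digest(), "big") % N or 1
    r = scalar_mult(k, G)[0] % N
    s = pow(k, -1, N) * (z + r * private_key) % N
    return (r, s)


def verify(pub, message: bytes, signature) -> bool:
    r, s = signature
    if not (1 <= r < N and 1 <= s < N):
        return False
    z, w = message_hash(message), pow(s, -1, N)
    point = point_add(scalar_mult(z * w % N, G), scalar_mult(r * w % N, pub))
    return point is not None and point[0] % N == r


private = 0x1E99423A4ED27608A15A2616A2B0E9E52CED330AC530EDCC32C8FFC6A526AEDD  # demo only
public = public_key(private)
signature = sign(private, b"pay 1000 sats to Alice")
```

Verifying `signature` against `public` and the original message succeeds, while changing a single byte of the message or checking against a different public key fails. That corresponds to the three checks listed above: the signature is valid, the data is unaltered, and the signer holds the matching private key.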

The security of private keys is one of the most critical aspects of the Bitcoin system. Losing this key means permanently losing access to the funds, as there is no central authority capable of recovering it.

- Best practices for protecting private keys include:

01 - Offline storage: Keep them away from internet-connected networks to reduce the risk of cyberattacks.

02 - Hardware wallets: Physical devices dedicated to securely storing private keys.

03 - Backups and redundancy: Maintain backup copies in safe and separate locations.

04 - Additional encryption: Protect digital files containing private keys with strong passwords and encryption.

- Common threats include:

01 - Phishing and malware: Attacks that attempt to trick users into revealing their keys.

02 - Physical theft: If keys are stored on physical devices.

03 - Loss of passwords and backups: Which can lead to permanent loss of funds.

Using private and public keys gives the owner full control over their funds, eliminating intermediaries such as banks or governments. This model places the responsibility of protection on the user, which represents both freedom and risk.

Unlike traditional financial systems, where institutions can reverse transactions or freeze accounts, in the Bitcoin system, possession of the private key is the only proof of ownership. This principle is often summarized by the phrase: "Not your keys, not your coins."

This approach strengthens financial sovereignty, allowing individuals to store and move value independently and without censorship.

Despite its security, the key-based system also carries risks. If a private key is lost or forgotten, there is no way to recover the associated funds. This has already led to the permanent loss of millions of bitcoins over the years.

To reduce this risk, many users rely on seed phrases, which are a list of words used to recover wallets and private keys. These phrases must be guarded just as carefully, as they can also grant access to funds.

In summary, private and public keys are the foundation of security and ownership in the Bitcoin system. They ensure that only rightful owners can move their funds, enabling a decentralized, secure, and censorship-resistant financial system.

However, this freedom comes with great responsibility, requiring users to adopt strict practices to protect their private keys. Loss or compromise of these keys can lead to irreversible consequences, highlighting the importance of education and preparation when using Bitcoin.

Thus, the cryptographic key model not only enhances security but also represents the essence of the financial independence that Bitcoin enables.

Thank you very much for reading this far. I hope everything is well with you, and sending a big hug from your favorite Bitcoiner maximalist from Madeira. Long live freedom!

-

@ b99efe77:f3de3616

2025-05-04 16:59:35

fafasdf

asdfasfasf

Places & Transitions

- Places:

  - Bla bla bla: some text

- Transitions:

  - start: Initializes the system.

  - logTask: bla bla bla.

petrinet
;startDay () -> working
;stopDay working -> ()
;startPause working -> paused
;endPause paused -> working
;goSmoke working -> smoking
;endSmoke smoking -> working
;startEating working -> eating
;stopEating eating -> working
;startCall working -> onCall
;endCall onCall -> working
;startMeeting working -> inMeeting
;endMeeting inMeeting -> working
;logTask working -> working

-

@ 90c656ff:9383fd4e

2025-05-04 16:49:19

The Bitcoin network is built on a decentralized infrastructure made up of devices called nodes. These nodes play a crucial role in validating, verifying, and maintaining the system, ensuring the security and integrity of the blockchain or timechain. Unlike traditional systems where a central authority controls operations, the Bitcoin network relies on the collaboration of thousands of nodes around the world, promoting decentralization and transparency.

In the Bitcoin network, a node is any computer connected to the system that participates in storing, validating, or distributing information. These devices run Bitcoin software and can operate at different levels of participation, from basic data transmission to full validation of transactions and blocks.

There are two main types of nodes:

- Full Nodes:

01 - Store a complete copy of the blockchain or timechain.

02 - Validate and verify all transactions and blocks according to the protocol rules.

03 - Ensure network security by rejecting invalid transactions or fraudulent attempts.

- Light Nodes:

01 - Store only parts of the blockchain or timechain, not the full structure.

02 - Rely on full nodes to access transaction history data.

03 - Are faster and less resource-intensive but depend on third parties for full validation.

Nodes check whether submitted transactions comply with protocol rules, such as valid digital signatures and the absence of double spending.

Only valid transactions are forwarded to other nodes and included in the next block.
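The double-spending check can be illustrated with a toy model of the set of unspent outputs that a node keeps. This is a simplified sketch, not Bitcoin Core's data structures: the `Tx` class and `apply_if_valid` function are hypothetical names, and signature checks, scripts, and fees are deliberately left out.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Outpoint = Tuple[str, int]  # (transaction id, output index)


@dataclass(frozen=True)
class Tx:  # hypothetical, heavily simplified transaction
    txid: str
    inputs: Tuple[Outpoint, ...]
    output_values: Tuple[int, ...]  # amounts in sats


def apply_if_valid(unspent: Dict[Outpoint, int], tx: Tx) -> bool:
    """Apply tx to the unspent-output set if it passes the toy rules."""
    if len(set(tx.inputs)) != len(tx.inputs):
        return False  # same output referenced twice inside one transaction
    if any(outpoint not in unspent for outpoint in tx.inputs):
        return False  # output is unknown or was already spent: double spend
    if any(value < 0 for value in tx.output_values):
        return False
    if sum(tx.output_values) > sum(unspent[o] for o in tx.inputs):
        return False  # would create money out of nothing
    for outpoint in tx.inputs:
        del unspent[outpoint]  # spent outputs can never be used again
    for index, value in enumerate(tx.output_values):
        unspent[(tx.txid, index)] = value
    return True


unspent = {("coinbase-1", 0): 50_000}
first = Tx("tx-1", (("coinbase-1", 0),), (30_000, 19_000))
second = Tx("tx-2", (("coinbase-1", 0),), (50_000,))  # tries to reuse the same output
accepted_first = apply_if_valid(unspent, first)
accepted_second = apply_if_valid(unspent, second)
```

Here the first transaction is accepted and removes the output it spends, so the second transaction, which references the same output, is rejected and would not be forwarded.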

Full nodes maintain an up-to-date copy of the network's entire transaction history, ensuring integrity and transparency. In case of discrepancies, nodes follow the valid chain with the most accumulated proof-of-work (often loosely called the "longest" chain), preventing manipulation.
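How a node chooses between competing histories can be sketched as follows. In practice "longest" means the chain with the most accumulated proof-of-work, not the most blocks. The sketch assumes every block in each candidate chain has already been validated and represents a chain simply as a list of block targets; the function names are illustrative.

```python
from typing import List, Sequence


def block_work(target: int) -> int:
    """Expected number of hash attempts needed to find a hash at or below the target."""
    return 2**256 // (target + 1)


def chain_work(targets: Sequence[int]) -> int:
    """Total work of a chain, given the target of each of its blocks."""
    return sum(block_work(target) for target in targets)


def best_chain(chains: List[Sequence[int]]) -> Sequence[int]:
    """Pick the candidate with the most accumulated work (all assumed valid)."""
    return max(chains, key=chain_work)


easy_target = 2**240  # larger target = easier block
hard_target = 2**230  # smaller target = harder block

long_easy_chain = [easy_target] * 5
short_hard_chain = [hard_target] * 3
winner = best_chain([long_easy_chain, short_hard_chain])
```

In this example the three-block chain wins even though it is shorter, because each of its blocks represents far more work than the blocks of the five-block chain.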

Nodes transmit transaction and block data to other nodes on the network. This process ensures all participants are synchronized and up to date.

Since the Bitcoin network consists of thousands of independent nodes, it is nearly impossible for a single agent to control or alter the system.

Nodes also protect against attacks by validating information and blocking fraudulent attempts.

Full nodes are particularly important, as they act as independent auditors. They do not need to rely on third parties and can verify the entire transaction history directly.

By maintaining a full copy of the blockchain or timechain, these nodes allow anyone to validate transactions without intermediaries, promoting transparency and financial freedom.

- In addition, full nodes:

01 - Reinforce censorship resistance: No government or entity can delete or alter data recorded on the system.

02 - Preserve decentralization: The more full nodes that exist, the stronger and more secure the network becomes.

03 - Increase trust in the system: Users can independently confirm whether the rules are being followed.

Despite their value, operating a full node can be challenging, as it requires storage space, processing power, and bandwidth. As the blockchain or timechain grows, technical requirements increase, which can make participation harder for regular users.

To address this, the community continuously works on solutions, such as software improvements and scalability enhancements, to make network access easier without compromising security.

In summary, nodes are the backbone of the Bitcoin network, performing essential functions in transaction validation, verification, and distribution. They ensure the decentralization and security of the system, allowing participants to operate reliably without relying on intermediaries.

Full nodes, in particular, play a critical role in preserving the integrity of the blockchain or timechain, making the Bitcoin network resistant to censorship and manipulation.

While running a node may require technical resources, its impact on preserving financial freedom and system trust is invaluable. As such, nodes remain essential elements for the success and longevity of Bitcoin.

Thank you very much for reading this far. I hope everything is well with you, and sending a big hug from your favorite Bitcoiner maximalist from Madeira. Long live freedom!

-

@ 40b9c85f:5e61b451

2025-04-24 15:27:02

Introduction

Data Vending Machines (DVMs) have emerged as a crucial component of the Nostr ecosystem, offering specialized computational services to clients across the network. As defined in NIP-90, DVMs operate on an apparently simple principle: "data in, data out." They provide a marketplace for data processing where users request specific jobs (like text translation, content recommendation, or AI text generation).

While DVMs have gained significant traction, the current specification faces challenges that hinder widespread adoption and consistent implementation. This article explores some ideas on how we can apply the reflection pattern, a well established approach in RPC systems, to address these challenges and improve the DVM ecosystem's clarity, consistency, and usability.

The Current State of DVMs: Challenges and Limitations

The NIP-90 specification provides a broad framework for DVMs, but this flexibility has led to several issues:

1. Inconsistent Implementation

As noted by hzrd149 in "DVMs were a mistake", every DVM implementation tends to expect inputs in slightly different formats, even while ostensibly following the same specification. For example, a translation request DVM might expect an event ID in one particular format, while an LLM service could expect a "prompt" input that's not even specified in NIP-90.

2. Fragmented Specifications

The DVM specification reserves a range of event kinds (5000-5999 for job requests, with matching results in 6000-6999), each meant for different types of computational jobs. While creating sub-specifications for each job type is being explored as a possible solution for clarity, in a decentralized and permissionless landscape like Nostr, relying solely on specification enforcement won't be effective for creating a healthy ecosystem. A more comprehensible approach is needed that works with, rather than against, the open nature of the protocol.

3. Ambiguous API Interfaces

There's no standardized way for clients to discover what parameters a specific DVM accepts, which are required versus optional, or what output format to expect. This creates uncertainty and forces developers to rely on documentation outside the protocol itself, if such documentation exists at all.

The Reflection Pattern: A Solution from RPC Systems

The reflection pattern in RPC systems offers a compelling solution to many of these challenges. At its core, reflection enables servers to provide metadata about their available services, methods, and data types at runtime, allowing clients to dynamically discover and interact with the server's API.

In established RPC frameworks like gRPC, reflection serves as a self-describing mechanism where services expose their interface definitions and requirements. In MCP reflection is used to expose the capabilities of the server, such as tools, resources, and prompts. Clients can learn about available capabilities without prior knowledge, and systems can adapt to changes without requiring rebuilds or redeployments. This standardized introspection creates a unified way to query service metadata, making tools like grpcurl possible without requiring precompiled stubs.

How Reflection Could Transform the DVM Specification

By incorporating reflection principles into the DVM specification, we could create a more coherent and predictable ecosystem. DVMs already implement some sort of reflection through the use of 'nip90params', which allow clients to discover some parameters, constraints, and features of the DVMs, such as whether they accept encryption, nutzaps, etc. However, this approach could be expanded to provide more comprehensive self-description capabilities.

1. Defined Lifecycle Phases

Similar to the Model Context Protocol (MCP), DVMs could benefit from a clear lifecycle consisting of an initialization phase and an operation phase. During initialization, the client and DVM would negotiate capabilities and exchange metadata, with the DVM providing a JSON schema containing its input requirements. NIP-89 (or other) announcements can be used to bootstrap the discovery and negotiation process by providing the input schema directly. Then, during the operation phase, the client would interact with the DVM according to the negotiated schema and parameters.

2. Schema-Based Interactions

Rather than relying on rigid specifications for each job type, DVMs could self-advertise their schemas. This would allow clients to understand which parameters are required versus optional, what type validation should occur for inputs, what output formats to expect, and what payment flows are supported. By internalizing the input schema of the DVMs they wish to consume, clients gain clarity on how to interact effectively.
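As a rough illustration of what a self-advertised schema and client-side check could look like, consider the sketch below. Everything in it is an assumption made for the example: the schema contents, the parameter names, and the `validate_params` helper are hypothetical, and the validator handles only a tiny subset of JSON Schema (required fields and basic types).

```python
# Hypothetical input schema a translation DVM might advertise
TRANSLATION_SCHEMA = {
    "type": "object",
    "properties": {
        "text": {"type": "string"},
        "target_language": {"type": "string"},
        "max_length": {"type": "integer"},
    },
    "required": ["text", "target_language"],
}

_PYTHON_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def validate_params(schema: dict, params: dict) -> list:
    """Return a list of human-readable problems; an empty list means the input is acceptable."""
    errors = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in params:
            errors.append(f"missing required parameter: {name}")
    for name, value in params.items():
        spec = properties.get(name)
        if spec is None:
            errors.append(f"unknown parameter: {name}")
            continue
        expected = _PYTHON_TYPES[spec["type"]]
        # bool is a subclass of int in Python, so treat it as a mismatch
        # unless the schema explicitly asks for a boolean
        bool_mismatch = isinstance(value, bool) and spec["type"] != "boolean"
        if bool_mismatch or not isinstance(value, expected):
            errors.append(f"{name} should be of type {spec['type']}")
    return errors


good = validate_params(TRANSLATION_SCHEMA, {"text": "hola", "target_language": "en"})
bad = validate_params(TRANSLATION_SCHEMA, {"text": 5})
```

A client that runs this kind of check before publishing a job request can reject malformed input locally instead of discovering the problem from a failed or ignored job.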

3. Capability Negotiation

Capability negotiation would enable DVMs to advertise their supported features, such as encryption methods, payment options, or specialized functionalities. This would allow clients to adjust their interaction approach based on the specific capabilities of each DVM they encounter.
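One simple way to picture capability negotiation is as an intersection of what each side supports. The sketch below is purely illustrative: the feature names and option strings are made up for the example, and the rule of following the DVM's preference order is an assumption of this sketch rather than anything defined in NIP-90.

```python
from typing import Dict, List


def negotiate(client_caps: Dict[str, List[str]],
              dvm_caps: Dict[str, List[str]]) -> Dict[str, str]:
    """For each feature both sides know, pick the first DVM-preferred option the client supports."""
    agreed = {}
    for feature, client_options in client_caps.items():
        for option in dvm_caps.get(feature, []):
            if option in client_options:
                agreed[feature] = option
                break
    return agreed


# Hypothetical capability advertisements
client_caps = {"encryption": ["nip44", "nip04"], "payment": ["lightning"]}
dvm_caps = {"encryption": ["nip04"], "payment": ["cashu", "lightning"], "streaming": ["partial"]}

agreed = negotiate(client_caps, dvm_caps)
```

Features that only one side advertises (here, `streaming`) simply drop out of the result, so the client knows not to rely on them for this DVM.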

Implementation Approach

While building DVMCP, I realized that the RPC reflection pattern used there could be beneficial for constructing DVMs in general. Since DVMs already follow an RPC style for their operation, and reflection is a natural extension of this approach, it could significantly enhance and clarify the DVM specification.

A reflection-enhanced DVM protocol could work as follows:

1. Discovery: Clients discover DVMs through existing NIP-89 application handlers. Input schemas could also be advertised in NIP-89 announcements, making the second step unnecessary.

2. Schema Request: Clients request the DVM's input schema for the specific job type they're interested in.

3. Validation: Clients validate their request against the provided schema before submission.

4. Operation: The job proceeds through the standard NIP-90 flow, but with clearer expectations on both sides.
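The validation and operation steps could look roughly like this on the client side. The sketch builds an unsigned job request using the `i`, `output`, `param`, and `p` tags as I understand them from NIP-90; the schema, the parameter names, the placeholder public key, and the use of kind 5002 as the example job kind are assumptions, and signing and publishing to relays are left out.

```python
import time
from typing import Dict


def build_job_request(kind: int, input_text: str, params: Dict[str, str],
                      schema: dict, dvm_pubkey: str,
                      output_mime: str = "text/plain") -> dict:
    """Validate params against an advertised schema, then build an unsigned job request."""
    if not 5000 <= kind <= 5999:
        raise ValueError("job request kinds are expected in the 5000-5999 range")
    missing = [name for name in schema.get("required", []) if name not in params]
    if missing:
        raise ValueError(f"missing required parameters: {missing}")
    tags = [
        ["i", input_text, "text"],  # the input data and its type
        ["output", output_mime],    # the output format the client expects
        ["p", dvm_pubkey],          # the DVM the request is addressed to
    ]
    tags += [["param", key, str(value)] for key, value in sorted(params.items())]
    return {"kind": kind, "created_at": int(time.time()), "content": "", "tags": tags}


# Hypothetical schema, as it might be advertised in a NIP-89 announcement
schema = {"required": ["language"]}
placeholder_pubkey = "ab" * 32  # not a real key

event = build_job_request(5002, "Hola, mundo", {"language": "en"},
                          schema, placeholder_pubkey)
```

Because the schema check happens before the event is built, a request that lacks a required parameter never reaches the relay, which is the main practical benefit of step 3.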

Parallels with Other Protocols

This approach has proven successful in other contexts. The Model Context Protocol (MCP) implements a similar lifecycle with capability negotiation during initialization, allowing any client to communicate with any server as long as they adhere to the base protocol. MCP and DVM protocols share fundamental similarities, both aim to expose and consume computational resources through a JSON-RPC-like interface, albeit with specific differences.

gRPC's reflection service similarly allows clients to discover service definitions at runtime, enabling generic tools to work with any gRPC service without prior knowledge. In the REST API world, OpenAPI/Swagger specifications document interfaces in a way that makes them discoverable and testable.

DVMs would benefit from adopting these patterns while maintaining the decentralized, permissionless nature of Nostr.

Conclusion

I am not attempting to rewrite the DVM specification; rather, I am exploring some ideas that could help the ecosystem improve incrementally, reducing fragmentation and making the ecosystem more comprehensible. By allowing DVMs to self-describe their interfaces, we could maintain the flexibility that makes Nostr powerful while providing the structure needed for interoperability.

For developers building DVM clients or libraries, this approach would simplify consumption by providing clear expectations about inputs and outputs. For DVM operators, it would establish a standard way to communicate their service's requirements without relying on external documentation.

I am currently developing DVMCP following these patterns. Of course, DVMs and MCP servers have different details; MCP includes capabilities such as tools, resources, and prompts on the server side, as well as 'roots' and 'sampling' on the client side, creating a bidirectional way to consume capabilities. In contrast, DVMs typically function similarly to MCP tools, where you call a DVM with an input and receive an output, with each job type representing a different categorization of the work performed.

Without further ado, I hope this article has provided some insight into the potential benefits of applying the reflection pattern to the DVM specification.

-

@ efc19139:a370b6a8

2025-05-04 16:42:24

Bitcoin has a controversial reputation, but in this essay, I argue that Bitcoin is actually a pretty cool thing; it could even be described as the hippie movement of the digital generations.

Mainstream media often portrays Bitcoin purely as speculation, with headlines focusing on price fluctuations or painting it as an environmental disaster. It has frequently been declared dead and buried, only to rise again—each time, it's labeled as highly risky and suspicious as a whole. Then there are those who find blockchain fascinating in general but dismiss Bitcoin as outdated, claiming it will soon be replaced by a new cryptocurrency (often one controlled by the very author making the argument). Let’s take a moment to consider why Bitcoin is interesting and how it can drive broad societal change, much like the hippie movement once did. Bitcoin is a global decentralized monetary system operating on a peer-to-peer network. Since nearly all of humanity lives within an economic system based on money, it’s easy to see how an overhaul of the financial system could have a profound impact across different aspects of society. Bitcoin differs from traditional money through several unique characteristics: it is scarce, neutral, decentralized, and completely permissionless. There is no central entity—such as a company—that develops and markets Bitcoin, meaning it cannot be corrupted.

Bitcoin is an open digital network, much like the internet. Due to its lack of a central governing entity and its organic origin, Bitcoin can be considered a commodity, whereas other cryptocurrencies resemble securities, comparable to stocks. Bitcoin’s decentralized nature makes it geopolitically neutral. Instead of being controlled by a central authority, it operates under predefined, unchangeable rules. No single entity in the world has the ability to arbitrarily influence decision-making within the Bitcoin network. This characteristic is particularly beneficial in today’s political climate, where global uncertainty is heightened by unpredictable leaders of major powers. The permissionless nature of Bitcoin and its built-in resistance to censorship are crucial for individuals living under unstable conditions. Bitcoin is used to raise funds for politically persecuted activists and for charitable purposes in regions where financial systems have been weaponized against political opponents or used to restrict people's ability to flee a country. These are factors that may not immediately come to mind in Western nations, where such challenges are not commonly faced. Additionally, according to the World Bank, an estimated 1.5 billion people worldwide still lack access to any form of banking services.

Mining is the only way to ensure that no one can seize control of the Bitcoin network or gain a privileged position within it. This keeps Bitcoin neutral as a protocol, meaning a set of rules without leaders. It is not governed in the same way a company is, where ownership of shares dictates control. Miners earn the right to record transactions in Bitcoin’s ledger by continuously proving that they have performed work to obtain that right. This proof-of-work algorithm is also one reason why Bitcoin has spread so organically. If recording new transactions were free, we would face a problem similar to spam: there would be an endless number of competing transactions, making it impossible to reach consensus on which should officially become part of the decentralized ledger. Mining can be seen as an auction for adding the next set of transactions, where the price is the amount of energy expended. Using energy for this purpose is the only way to ensure that mining remains globally decentralized while keeping the system open and permissionless—free from human interference. Bitcoin’s initial distribution was driven by random tech enthusiasts around the world who mined it as a hobby, using student electricity from their bedrooms. This is why Bitcoin’s spread can be considered organic, in contrast to a scenario where it was created by a precisely organized inner circle that typically would have granted itself advantages before the launch.
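The "proving that they have performed work" part can be shown with a toy proof-of-work loop. This is a simplified sketch, not Bitcoin's real block format: the header is an arbitrary byte string, the difficulty is expressed as a number of leading zero bits, and the hash is compared as a big-endian integer for readability.

```python
import hashlib
from typing import Optional


def pow_hash(header: bytes, nonce: int) -> int:
    """Double SHA-256 of header plus nonce, read as an integer."""
    data = header + nonce.to_bytes(4, "little")
    return int.from_bytes(hashlib.sha256(hashlib.sha256(data).digest()).digest(), "big")


def meets_target(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Cheap check anyone can run: is the hash below the target?"""
    return pow_hash(header, nonce) < (1 << (256 - difficulty_bits))


def mine(header: bytes, difficulty_bits: int, max_nonce: int = 2**32) -> Optional[int]:
    """Expensive search: try nonces until one produces a hash below the target."""
    for nonce in range(max_nonce):
        if meets_target(header, nonce, difficulty_bits):
            return nonce
    return None


header = b"toy block: alice pays bob 1000 sats"
nonce = mine(header, difficulty_bits=12)  # about 4,096 attempts on average
```

Finding the nonce takes thousands of hash attempts even at this tiny difficulty, while checking it takes one. Each extra difficulty bit doubles the expected search effort, which is why writing to the ledger costs real energy while verifying it stays cheap.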

If energy consumption is considered concerning, the best regulatory approach would be to create optimal conditions for mining in Finland, where over half of energy production already comes from renewable sources. Modern miners are essentially datacenters, but they have a unique characteristic: they can adjust their electricity consumption instantly. This creates synergy with renewable energy production, which often experiences fluctuations in supply. The demand flexibility offered by miners provides strong incentives to invest increasingly in renewable energy facilities. Miners can commit to long-term projects as last-resort consumers, making investments in renewables more predictable and profitable. Additionally, like other datacenters, miners produce heat as a byproduct. As a thought experiment, they could also be considered heating plants, with a secondary function of securing the Bitcoin network. In Finland, heat is naturally needed year-round. This combination of grid balancing and waste heat recovery would be key to Europe's energy self-sufficiency. Wouldn't it be great if the need to bow to fossil fuel powers for energy could be eliminated? Unfortunately, the current government has demonstrated a lack of understanding of these positive externalities by proposing tax increases on electricity.

The so-called fiat monetary system also deserves criticism in Western nations, even though its flaws are not as immediately obvious as elsewhere. It is the current financial system in which certain privileged entities control the issuance of money as if by divine decree, which is what the term fiat (command) refers to. The system subtly creates and maintains inequality.

The Cantillon effect is an economic phenomenon in which entities closer to newly created money benefit at the expense of those farther away. Access to the money creation process is determined by credit ratings and loan terms, as fiat money is always debt. The Cantillon effect is a distorted version of the trickle-down theory, where the loss of purchasing power in a common currency gradually moves downward. Due to inflation, hard assets such as real estate, precious metals, and stocks become more expensive, just as food prices rise in stores. This process further enriches the wealthy while deepening poverty. The entire wealth of lower-income individuals is often held in cash or savings, which are eroded by inflation much like a borrowed bottle of Leijona liquor left out too long. Inflation is usually attributed to a specific crisis, but over the long term (spanning decades), monetary inflation—the expansion of the money supply—plays a significant role. Nobel Prize-winning economist Paul Krugman, known for his work on currencies, describes inflation in his book The Accidental Theorist as follows, loosely quoted: "It is really, really difficult to cut nominal wages. Even with low inflation, making labor cheaper would require a large portion of workers to accept wage cuts. Therefore, higher inflation leads to higher employment." Since no one wants to voluntarily give up their salary in nominal terms, the value of wages must be lowered in real terms by weakening the currency in which they are paid. Inflation effectively cuts wages—or, in other words, makes labor cheaper. This is one of the primary reasons why inflation is often said to have a "stimulating" effect on the economy.
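The wage argument above is simple arithmetic, and a small sketch may make it concrete. The function name and the example figures below are hypothetical, chosen only for illustration: a nominal wage that never changes still loses purchasing power every year that prices rise.

```python
def real_wage(nominal_wage: float, annual_inflation: float, years: int) -> float:
    """Purchasing power of an unchanged nominal wage after `years` of inflation.

    Each year of inflation divides purchasing power by (1 + annual_inflation).
    """
    return nominal_wage / (1 + annual_inflation) ** years


if __name__ == "__main__":
    # Hypothetical example: a 3,000 per month salary that is never renegotiated,
    # with prices rising 5% per year.
    for years in (0, 1, 5, 10):
        print(years, round(real_wage(3000, 0.05, years), 2))
```

No nominal pay cut ever appears on the payslip, yet the employer's real labor cost falls each year, which is the mechanism the paragraph describes.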

It does seem somewhat unfair that employees effectively subsidize their employers’ labor costs to facilitate new hires, doesn’t it? Not to mention the inequities faced by the Global South in the form of neocolonialism, where Cantillon advantages are weaponized through currencies like the US dollar or the French-backed CFA franc. This follows the exact same pattern, just on a larger scale. The Human Rights Foundation (hrf.org) has explored the interconnection between the fiat monetary system and neocolonialism in its publications, advocating for Bitcoin as part of the solution.

Inflation can also be criticized from an environmental perspective. Since it raises time preference, it encourages people to make purchases sooner rather than delay them. As Krugman put it in the same book, “Extra money burns in your pocket.” Inflation thus drives consumption while reducing deliberation; it is the fuel of the economy. If the goal from an environmental standpoint is to moderate economic activity, the first step should be to stop adding fuel to the fire.

The impact of inflation on intergenerational inequality and the economic uncertainty faced by younger generations is rarely discussed. Boomers have benefited from the positive effects of the trend sparked by the Nixon shock in 1971, such as wealth accumulation in real estate and inflation-driven economic booms. Zoomers, meanwhile, are left to either fix the problems of the current system or find themselves searching for a lifeboat.

Bitcoin emerged as part of a long developmental continuum within the discussion forums of rebellious programmers known as cypherpunks, or encryption activists. It is an integral part of internet history and specifically a counterculture movement. Around Bitcoin, grassroots activists and self-organized communities still thrive, fostering an atmosphere that is welcoming, inspiring, and—above all—hopeful, which feels rare in today’s world. Although the rush of suits and traditional financial giants into Bitcoin through ETF funds a year ago may have painted it as opportunistic and dull in the headlines, delving into its history and culture reveals ever-fascinating angles and new layers within the Bitcoin sphere. Yet, at its core, Bitcoin is simply money. It possesses all seven characteristics required to meet the definition of money: it is easily divisible, transferable, recognizable, durable, fungible, uniform, and straightforward to receive. It serves as a foundation on which coders, startup enthusiasts, politicians, financial executives, activists, and anarchists alike can build. The only truly common denominator among the broad spectrum of Bitcoin users is curiosity—openness to new ideas. It merely requires the ability to recognize potential in an alternative system and a willingness to embrace fundamental change. Bitcoin itself is the most inclusive system in the world, as it is literally impossible to marginalize or exclude its users. It is a tool for peaceful and voluntary collaboration, designed so that violence and manipulation are rendered impossible in its code.

Pretty punk in the middle of an era of polarization and division, wouldn’t you say?

The original author (not me) is the organizer of the Bitcoin conference held in Helsinki, as well as a founding member and vice chairman of the Finnish Bitcoin Association. More information about the event can be found at: https://btchel.com and https://njump.me/nprofile1qqs89v5v46jcd8uzv3f7dudsvpt8ntdm3927eqypyjy37yx5l6a30fcknw5z5

P.S. Zaps and sats will be forwarded to the author!

originally posted at https://stacker.news/items/971219

-

@ 90c656ff:9383fd4e

2025-05-04 16:36:21

Bitcoin mining is a crucial process for the operation and security of the network. It plays an important role in validating transactions and generating new bitcoins, ensuring the integrity of the blockchain or timechain-based system. This process involves solving complex mathematical calculations and requires significant computational power. Additionally, mining has economic, environmental, and technological effects that must be carefully analyzed.

Bitcoin mining is the procedure through which new units of the currency are created and added to the network. It is also responsible for verifying and recording transactions on the blockchain or timechain. This system was designed to be decentralized, eliminating the need for a central authority to control issuance or validate operations.

Participants in the process, called miners, compete to solve difficult mathematical problems. Whoever finds the solution first earns the right to add a new block to the blockchain or timechain and receives a reward in bitcoins, along with the transaction fees included in that block. This mechanism is known as Proof of Work (PoW).

The mining process is highly technical and follows a series of steps:

1. Transaction grouping: Transactions sent by users are collected into a candidate block that awaits validation.
2. Solving mathematical problems: Miners must find a specific number, called a nonce, which, when combined with the block’s data, produces a cryptographic hash below a target value set by the network. The only way to find one is trial and error, which consumes a great deal of computational power.
3. Block validation: When a miner finds a valid nonce, it broadcasts the block. All network nodes verify the block’s validity before accepting it and adding it to their copy of the blockchain or timechain.
4. Reward: The winning miner receives a bitcoin reward, in addition to the fees paid for the transactions included in the block. This reward is cut in half in an event called the halving, which occurs every 210,000 blocks, or approximately every four years.
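The steps above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Bitcoin's real data format: the helper names (`build_header`, `mine`, `is_valid`, `block_subsidy`), the transaction strings, and the very easy target are all made up for illustration, and a plain hash of the transaction list stands in for the real block header and Merkle root. Only the double SHA-256 hashing, the "hash below target" rule, and the 50 BTC subsidy halving every 210,000 blocks mirror the actual protocol.

```python
import hashlib
from typing import Optional

HALVING_INTERVAL = 210_000                 # blocks between halvings
INITIAL_SUBSIDY_SATS = 50 * 100_000_000    # 50 BTC, expressed in satoshis


def build_header(transactions: list) -> bytes:
    """Step 1 (toy): group pending transactions into one block commitment."""
    return hashlib.sha256("|".join(transactions).encode()).digest()


def block_hash(header: bytes, nonce: int) -> int:
    """Double SHA-256 of header plus nonce, read as a big integer (toy encoding)."""
    data = header + nonce.to_bytes(8, "little")
    digest = hashlib.sha256(hashlib.sha256(data).digest()).digest()
    return int.from_bytes(digest, "big")


def mine(header: bytes, target: int, max_nonce: int = 2**32) -> Optional[int]:
    """Step 2: try nonces in order until the hash falls below the target."""
    for nonce in range(max_nonce):
        if block_hash(header, nonce) < target:
            return nonce
    return None  # no valid nonce found in the searched range


def is_valid(header: bytes, nonce: int, target: int) -> bool:
    """Step 3: any node can check the claimed solution with a single hash."""
    return block_hash(header, nonce) < target


def block_subsidy(height: int) -> int:
    """Step 4: newly issued satoshis for a block at the given height."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        return 0
    return INITIAL_SUBSIDY_SATS >> halvings  # integer halving, rounds down


if __name__ == "__main__":
    txs = ["alice->bob:1", "bob->carol:2"]   # hypothetical pending transactions
    header = build_header(txs)
    target = 2**240                          # toy target: about 1 in 65,536 hashes qualifies
    nonce = mine(header, target)
    print("nonce:", nonce)
    if nonce is not None:
        print("valid:", is_valid(header, nonce, target))
    print("subsidy at height 840000:", block_subsidy(840_000), "sats")
```

The asymmetry is the point of the design: finding the nonce takes tens of thousands of hashes even at this toy target, while checking it takes one.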

Bitcoin mining has a significant economic impact, as it creates income opportunities for individuals and companies. It also drives the development of new technologies such as specialized processors (ASICs) and modern cooling systems.

Moreover, mining supports financial inclusion by maintaining a decentralized network, enabling fast and secure global transactions. In regions with unstable economies, Bitcoin provides a viable alternative for value preservation and financial transfers.

Despite its economic benefits, Bitcoin mining is often criticized for its environmental impact. The proof-of-work process consumes large amounts of electricity, especially in areas where the energy grid relies on fossil fuels.

It’s estimated that Bitcoin mining uses as much energy as some entire countries, raising concerns about its sustainability. However, there are ongoing efforts to reduce these impacts, such as the increasing use of renewable energy sources and the exploration of alternative systems like Proof of Stake (PoS) in other decentralized networks.

Mining also faces challenges related to scalability and the concentration of computational power. Large companies and mining pools dominate the sector, which can affect the network’s decentralization.

Another challenge is the rising mining difficulty. The network automatically adjusts how hard it is to find a valid hash as total computational power grows, so staying competitive requires more advanced hardware and more energy over time. To address these issues, researchers are studying solutions that optimize resource use and keep the network sustainable in the long term.
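The increasing difficulty described above follows a fixed rule: every 2,016 blocks, the network compares how long those blocks actually took with the intended two weeks (ten minutes per block) and scales the hash target accordingly, limiting any single adjustment to a factor of four. The sketch below is a simplified illustration of that rule. The function name and the small integer targets are hypothetical, and details of the real implementation, such as the compact target encoding, are left out.

```python
RETARGET_INTERVAL = 2016            # blocks between difficulty adjustments
TARGET_BLOCK_TIME = 600             # intended seconds per block (10 minutes)
EXPECTED_TIMESPAN = RETARGET_INTERVAL * TARGET_BLOCK_TIME  # two weeks in seconds


def retarget(old_target: int, actual_timespan: int, max_target: int) -> int:
    """Return the new hash target after one adjustment period.

    A lower target means fewer hashes qualify, so mining is harder.
    """
    # Limit the adjustment to a factor of 4 in either direction.
    clamped = min(max(actual_timespan, EXPECTED_TIMESPAN // 4), EXPECTED_TIMESPAN * 4)
    # Blocks arrived too quickly -> smaller target (harder), and vice versa.
    new_target = old_target * clamped // EXPECTED_TIMESPAN
    # Difficulty never drops below the network minimum (the maximum target).
    return min(new_target, max_target)


if __name__ == "__main__":
    # Hypothetical case: hash power doubled, so 2,016 blocks took one week.
    print(retarget(old_target=1_000_000,
                   actual_timespan=EXPECTED_TIMESPAN // 2,
                   max_target=10**9))
```

In other words, the puzzles do not get harder on their own; they get harder because more machines are competing, which keeps the average block interval near ten minutes.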

In summary, Bitcoin mining is an essential process for maintaining the network and creating new units of the currency. It ensures security, transparency, and decentralization, supporting the operation of the blockchain or timechain.

However, mining also brings challenges such as high energy consumption and the concentration of resources in large pools. Even so, the pursuit of sustainable solutions and technological innovations points to a promising future, where Bitcoin continues to play a central role in the digital economy.

Thank you very much for reading this far. I hope all is well with you. Sending a big hug from your favorite Bitcoin maximalist from Madeira. Long live freedom!

-

@ 700c6cbf:a92816fd

2025-05-04 16:34:01

Technically speaking, I should say blooms, because not all of my pictures are of flowers; a lot of them, probably most, are of blooming trees. But who cares, right?

It is that time of the year when every timeline on every social media platform is flooded with blooms, at least in the Northern Hemisphere. I thought that this year I wouldn't partake, but here I am. I just can't resist the lure of blooms when I'm out walking the neighborhood.

Spring has sprung - aaaachoo, sorry, allergies suck! - and the blooms are beautiful.

Yesterday, we had the warmest day of the year to date. I went for an early morning walk before breakfast. Beautiful blue skies, no clouds, sunshine and a breeze. Most people turned on their aircons. We did not. We are rebels - hah!

We also had breakfast on the deck, which I really enjoy on weekends. Later I had my first session of the year painting on the deck while watching and listening to @thegrinder's stream. Good times.

Today, the weather changed. Last night, we had heavy thunderstorms and rain. This morning, it is overcast with the occasional sunray peeking through or, as it is right now, raindrops falling.

We'll see what the day will bring. For me, it will definitely be back to painting. Maybe I'll even share some here later. But for now, this is a photo post, and here are the photos. I hope you enjoy them as much as I enjoyed yesterday's walk!

Cheers, OceanBee

![image](https://cdn.satellite.earth/cc3fb0fa757c88a6a89823585badf7d67e32dee72b6d4de5dff58acd06d0aa36.jpg)

![image](https://cdn.satellite.earth/7fe93c27c3bf858202185cb7f42b294b152013ba3c859544950e6c1932ede4d3.jpg)

![image](https://cdn.satellite.earth/6cbd9fba435dbe3e6732d9a5d1f5ff0403935a4ac9d0d83f6e1d729985220e87.jpg)

![image](https://cdn.satellite.earth/df94d95381f058860392737d71c62cd9689c45b2ace1c8fc29d108625aabf5d5.jpg)

![image](https://cdn.satellite.earth/e483e65c3ee451977277e0cfa891ec6b93b39c7c4ea843329db7354fba255e64.jpg)

![image](https://cdn.satellite.earth/a98fe8e1e0577e3f8218af31f2499c3390ba04dced14c2ae13f7d7435b4000d7.jpg)

![image](https://cdn.satellite.earth/d83b01915a23eb95c3d12c644713ac47233ce6e022c5df1eeba5ff8952b99d67.jpg)

![image](https://cdn.satellite.earth/9ee3256882e363680d8ea9bb6ed3baa5979c950cdb6e62b9850a4baea46721f3.jpg)

![image](https://cdn.satellite.earth/201a036d52f37390d11b76101862a082febb869c8d0e58d6aafe93c72919f578.jpg)

![image](https://cdn.satellite.earth/cd516d89591a4cf474689b4eb6a67db842991c4bf5987c219fb9083f741ce871.jpg)

-

@ a39d19ec:3d88f61e

2025-04-22 12:44:42
