-

@ f977c464:32fcbe00

2024-01-30 20:06:18

Güneşin kaybolmasının üçüncü günü, saat öğlen on ikiyi yirmi geçiyordu. Trenin kalkmasına yaklaşık iki saat vardı. Hepimiz perondaydık. Valizlerimiz, kolilerimiz, renk renk ve biçimsiz çantalarımızla yan yana dizilmiş, kısa aralıklarla tepemizdeki devasa saati kontrol ediyorduk.

Ama ne kadar dik bakarsak bakalım zaman bir türlü istediğimiz hızla ilerlemiyordu. Herkes birkaç dakika sürmesi gereken alelade bir doğa olayına sıkışıp kalmış, karanlıktan sürünerek çıkmayı deniyordu.

Bekleme salonuna doğru döndüm. Nefesimden çıkan buharın arkasında, kalın taş duvarları ve camlarıyla morg kadar güvenli ve soğuk duruyordu. Cesetleri o yüzden bunun gibi yerlere taşımaya başlamışlardı. Demek insanların bütün iyiliği başkaları onları gördüğü içindi ki gündüzleri gecelerden daha karanlık olduğunda hemen birbirlerinin gırtlağına çökmüş, böğürlerinde delikler açmış, gözlerini oyup kafataslarını parçalamışlardı.

İstasyonun ışığı titrediğinde karanlığın enseme saplandığını hissettim. Eğer şimdi, böyle kalabalık bir yerde elektrik kesilse başımıza ne gelirdi?

İçerideki askerlerden biri bakışlarımı yakalayınca yeniden saate odaklanmış gibi yaptım. Sadece birkaç dakika geçmişti.

“Tarlalarım gitti. Böyle boyum kadar ayçiçeği doluydu. Ah, hepsi ölüp gidiyor. Afitap’ın çiçekleri de gi-”

“Dayı, Allah’ını seversen sus. Hepimizi yakacaksın şimdi.”

Karanlıkta durduğunda, görünmez olmayı istemeye başlıyordun. Kimse seni görmemeli, nefesini bile duymamalıydı. Kimsenin de ayağının altında dolaşmamalıydın; gelip kazayla sana çarpmamalılar, takılıp sendelememeliydiler. Yoksa aslında hedefi sen olmadığın bir öfke gürlemeye başlar, yaşadığın ilk şoku ve acıyı silerek üstünden geçerdi.

İlk konuşan, yaşlıca bir adam, kafasında kasketi, nasırlı ellerine hohluyordu. Gözleri ve burnu kızarmıştı. Güneşin kaybolması onun için kendi başına bir felaket değildi. Hayatına olan pratik yansımalarından korkuyordu olsa olsa. Bir anının kaybolması, bu yüzden çoktan kaybettiği birinin biraz daha eksilmesi. Hayatta kalmasını gerektiren sebepler azalırken, hayatta kalmasını sağlayacak kaynaklarını da kaybediyordu.

Onu susturan delikanlıysa atkısını bütün kafasına sarmış, sakalı ve yüzünün derinliklerine kaçmış gözleri dışında bedeninin bütün parçalarını gizlemeye çalışıyordu. İşte o, güneşin kaybolmasının tam olarak ne anlama geldiğini anlamamış olsa bile, dehşetini olduğu gibi hissedebilenlerdendi.

Güneşin onlardan alındıktan sonra kime verileceğini sormuyorlardı. En başta onlara verildiğinde de hiçbir soru sormamışlardı zaten.

İki saat ne zaman geçer?

Midemin üstünde, sağ tarafıma doğru keskin bir acı hissettim. Karaciğerim. Gözlerimi yumdum. Yanımda biri metal bir nesneyi yere bıraktı. Bir kafesti. İçerisindeki kartalın ıslak kokusu burnuma ulaşmadan önce bile biliyordum bunu.

“Yeniden mi?” diye sordu bana kartal. Kanatları kanlı. Zamanın her bir parçası tüylerinin üstüne çöreklenmişti. Gagası bir şey, tahminen et parçası geveliyor gibi hareket ediyordu. Eski anılar kolay unutulmazmış. Şu anda kafesinin kalın parmaklıklarının ardında olsa da bunun bir aldatmaca olduğunu bir tek ben biliyordum. Her an kanatlarını iki yana uzatıverebilir, hava bu hareketiyle dalgalanarak kafesi esneterek hepimizi içine alacak kadar genişleyebilir, parmaklıklar önce ayaklarımızın altına serilir gibi gözükebilir ama aslında hepimizin üstünde yükselerek tepemize çökebilirdi.

Aşağıya baktım. Tahtalarla zapt edilmiş, hiçbir yere gidemeyen ama her yere uzanan tren rayları. Atlayıp koşsam… Çantam çok ağırdı. Daha birkaç adım atamadan, kartal, suratını bedenime gömerdi.

“Bu sefer farklı,” diye yanıtladım onu. “Yeniden diyemezsin. Tekrarladığım bir şey değil bu. Hatta bir hata yapıyormuşum gibi tonlayamazsın da. Bu sefer, insanların hak etmediğini biliyorum.”

“O zaman daha vahim. Süzme salaksın demektir.”

“İnsanların hak etmemesi, insanlığın hak etmediği anlamına gelmez ki.”

Az önce göz göze geldiğim genççe ama çökük asker hâlâ bana bakıyordu. Bir kartalla konuştuğumu anlamamıştı şüphesiz. Yanımdakilerden biriyle konuştuğumu sanmış olmalıydı. Ama konuştuğum kişiye bakmıyordum ona göre. Çekingence kafamı eğmiştim. Bir kez daha göz göze geldiğimizde içerideki diğer iki askere bir şeyler söyledi, onlar dönüp beni süzerken dışarı çıktı.

Yanımızdaki, az önce konuşan iki adam da şaşkınlıkla bir bana bir kartala bakıyordu.

“Yalnız bu sefer kalbin de kırılacak, Prometheus,” dedi kartal, bana. “Belki son olur. Biliyorsun, bir sürü soruna neden oluyor bu yaptıkların.”

Beni koruyordu sözde. En çok kanıma dokunan buydu. Kasıklarımın üstüne oturmuş, kanlı suratının ardında gözleri parlarken attığı çığlık kulaklarımda titremeye devam ediyordu. Bu tabloda kimsenin kimseyi düşündüğü yoktu. Kartalın, yanımızdaki adamların, artık arkama kadar gelmiş olması gereken askerin, tren raylarının, geçmeyen saatlerin…

Arkamı döndüğümde, asker sahiden oradaydı. Zaten öyle olması gerekiyordu; görmüştüm bunu, biliyordum. Kehanetler… Bir şeyler söylüyordu ama ağzı oynarken sesi çıkmıyordu. Yavaşlamış, kendisini saatin akışına uydurmuştu. Havada donan tükürüğünden anlaşılıyordu, sinirliydi. Korktuğu için olduğunu biliyordum. Her seferinde korkmuşlardı. Beni unutmuş olmaları işlerini kolaylaştırmıyordu. Sadece yeni bir isim vermelerine neden oluyordu. Bu seferkiyle beni lanetleyecekleri kesinleşmişti.

Olması gerekenle olanların farklı olması ne kadar acınasıydı. Olması gerekenlerin doğasının kötücül olmasıysa bir yerde buna dayanıyordu.

“Salaksın,” dedi kartal bana. Zamanı aşan bir çığlık. Hepimizin önüne geçmişti ama kimseyi durduramıyordu.

Sonsuzluğa kaç tane iki saat sıkıştırabilirsiniz?

Ben bir tane bile sıkıştıramadım.

Çantama uzanıyordum. Asker de sırtındaki tüfeğini indiriyordu. Benim acelem yoktu, onunsa eli ayağı birbirine dolaşıyordu. Oysaki her şey tam olması gerektiği anda olacaktı. Kehanet başkasının parmaklarının ucundaydı.

Güneş, bir tüfeğin patlamasıyla yeryüzüne doğdu.

Rayların üzerine serilmiş göğsümün ortasından, bir çantanın içinden.

Not: Bu öykü ilk olarak 2021 yılında Esrarengiz Hikâyeler'de yayımlanmıştır.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

O Planetinha

Fumaça verde me entrando pelas narinas e um coro desafinado fazia uma base melódica.

nos confins da galáxia havia um planetinha isolado. Era um planeta feliz.

O homem vestido de mago começava a aparecer por detrás da fumaça verde.

O planetinha recebeu três presentes, mas o seu habitante, o homem, estava num estado de confusão tão grande que ameaçava estragá-los. Os homens já havia escravizado o primeiro presente, a vida; lutavam contra o segundo presente, a morte; e havia alguns que achavam que deviam destruir totalmente o terceiro, o amor, e com isto levar a desordem total ao pobre planetinha perdido, que se chamava Terra.

O coro desafinado entrou antes do "Terra" cantando várias vezes, como se imitasse um eco, "terra-terra-terraaa". Depois de uma pausa dramática, o homem vestido de mago voltou a falar.

Terra, nossa nave mãe.

Neste momento eu me afastei. À frente do palco onde o mago e seu coral faziam apelos à multidão havia vários estandes cobertos com a tradicional armação de quatro pernas e lona branca. Em todos os cantos da praça havia gente, gente dos mais variados tipos. Visitantes curiosos que se aproximavam atraídos pela fumaça verde e as barraquinhas, gente que aproveitava o movimento para vender doces sem pagar imposto, casais que se abraçavam de pé para espantar o frio, os tradicionais corredores que faziam seu cooper, gente cheia de barba e vestida para imitar os hippies dos anos 60 e vender colares estendidos no chão, transeuntes novos e velhos, vestidos como baladeiros ou como ativistas do ônibus grátis, grupos de ciclistas entusiastas.

O mago fazia agora apelos para que nós, os homens, habitantes do isolado planetinha, passássemos a ver o planetinha, nossa nave mãe, como um todo, e adquiríssemos a consciência de que ele estava entrando em maus lençóis. A idéia, reforçada pela logomarca do evento, era que parássemos de olhar só para a nossa vida e pensássemos no planeta.

A logomarca do evento, um desenho estilizado do planeta Terra, nada tinha a ver com seu nome: "Festival Andando de Bem com a Vida", mas havia sido ali colocada estrategicamente pelos organizadores, de quem parecia justamente sair a mensagem dita pelo mago.

Aquela multidão de pessoas que, assim como eu, tinham suas próprias preocupações, não podiam ver o quadro caótico que formavam, cada uma com seus atos isolados, ali naquela praça isolada, naquele planeta isolado. Quando o hippie barbudo, quase um Osho, assustava um casal para tentar vender-lhes um colar, a quantidade de caos que isto acrescentava à cena era gigantesca. Por um segundo, pude ver, como se estivesse de longe e acima, com toda a pretensão que este estado imaginativo carrega, a cena completa do caos.

Uma nave-mãe, dessas de ficção científica, habitada por milhões de pessoas, seguia no espaço sem rumo, e sem saber que logo à frente um longo precipício espacial a esperava, para a desgraça completa sua e de seus habitantes.

Acostumados àquela nave tanto quanto outrora estiveram acostumados à sua terra natal, os homens viviam as próprias vidas sem nem se lembrar que estavam vagando pelo espaço. Ninguém sabia quem estava conduzindo a nave, e ninguém se importava.

No final do filme descobre-se que era a soma completa do caos que cada habitante produzia, com seus gestos egoístas e incapazes de levar em conta a totalidade, é que determinava a direção da nave-mãe. O efeito, no entanto, não era imediato, como nunca é. Havia gente de verdade encarregada de conduzir a nave, mas era uma gente bêbada, mau-caráter, que vivia brigando pelo controle da nave e o poder que isto lhes dava. Poder, status, dinheiro!

Essa gente bêbada era atraída até ali pela corrupção das instituições e da moral comum que, no fundo no fundo, era causada pelo egoísmo da população, através de um complexo -- mas que no filme aparece simplificado pela ação individual de um magnata do divertimento público -- processo social.

O homem vestido de mago era mais um agente causador de caos, com sua cena cheia de fumaça e sua roupa estroboscópica, ele achava que estava fazendo o bem ao alertar sua platéia, todos as sextas-feiras, de que havia algo que precisava ser feito, que cada um que estava ali ouvindo era responsável pelo planeta. A sua incapacidade, porém, de explicar o que precisava ser feito só aumentava a angústia geral; a culpa que ele jogava sobre seu público, e que era prontamente aceita e passada em frente, aos familiares e amigos de cada um, atormentava-os diariamente e os impedia de ter uma vida decente no trabalho e em casa. As famílias, estressadas, estavam constantemente brigando e os motivos mais insignificantes eram responsáveis pelas mais horrendas conseqüências.

O mago, que após o show tirava o chapéu entortado e ia tomar cerveja num boteco, era responsável por uma parcela considerável do caos que levava a nave na direção do seu desgraçado fim. No filme, porém, um dos transeuntes que de passagem ouviu um pedaço do discurso do mago despertou em si mesmo uma consiência transformadora e, com poderes sobre-humanos que lhe foram então concedidos por uma ordem iniciática do bem ou não, usando só os seus poderes humanos mesmo, o transeunte -- na primeira versão do filme um homem, na segunda uma mulher -- consegue consertar as instituições e retirar os bêbados da condução da máquina. A questão da moral pública é ignorada para abreviar a trama, já com duas horas e quarenta de duração, mas subentende-se que ela também fora resolvida.

No planeta Terra real, que não está indo em direção alguma, preso pela gravidade ao Sol, e onde as pessoas vivem a própria vida porque lhes é impossível viver a dos outros, não têm uma consciência global de nada porque só é possível mesmo ter a consciência delas mesmas, e onde a maioria, de uma maneira ou de outra, está tentando como pode, fazer as coisas direito, o filme é exibido.

Para a maioria dos espectadores, é um filme que evoca reflexões, um filme forte. Por um segundo elas têm o mesmo vislumbre do caos generalizado que eu tive ali naquela praça. Para uma pequena parcela dos espectadores -- entre eles alguns dos que estavam na platéia do mago, o próprio mago, o seguidor do Osho, o casal de duas mulheres e o vendedor de brigadeiros, mas aos quais se somam também críticos de televisão e jornal e gente que fala pelos cotovelos na internet -- o filme é um horror, o filme é uma vulgarização de um problema real e sério, o filme apela para a figura do herói salvador e passa uma mensagem totalmente errada, de que a maioria da população pode continuar vivendo as suas própria vidinhas miseráveis enquanto espera por um herói que vem do Olimpo e os salva da mixórdia que eles mesmos causaram, é um filme que presta um enorme desserviço à causa.

No dia seguinte ao lançamento, num bar meio caro ali perto da praça, numa mesa com oito pessoas, entre elas seis do primeiro grupo e oito do segundo, discute-se se o filme levará ou não o Oscar. Eu estou em casa dormindo e não escuto nada.

-

@ f977c464:32fcbe00

2024-01-11 18:47:47

Kendisini aynada ilk defa gördüğü o gün, diğerleri gibi olduğunu anlamıştı. Oysaki her insan biricik olmalıydı. Sözgelimi sinirlendiğinde bir kaşı diğerinden birkaç milimetre daha az çatılabilirdi veya sevindiğinde dudağı ona has bir açıyla dalgalanabilirdi. Hatta bunların hiçbiri mümkün değilse, en azından, gözlerinin içinde sadece onun sahip olabileceği bir ışık parlayabilirdi. Çok sıradan, öyle sıradan ki kimsenin fark etmediği o milyonlarca minik şeyden herhangi biri. Ne olursa.

Ama yansımasına bakarken bunların hiçbirini bulamadı ve diğer günlerden hiç de farklı başlamamış o gün, işe gitmek için vagonunun gelmesini beklediği alelade bir metro istasyonunda, içinde kaybolduğu illüzyon dağılmaya başladı.

İlk önce derisi döküldü. Tam olarak dökülmedi aslında, daha çok kıvılcımlara dönüşüp bedeninden fırlamış ve bir an sonra sönerek külleşmiş, havada dağılmıştı. Ardında da, kaybolmadan hemen önce, kısa süre için hayal meyal görülebilen, bir ruhun yok oluşuna ağıt yakan rengârenk peri cesetleri bırakmıştı. Beklenenin aksine, havaya toz kokusu yayıldı.

Dehşete düştü elbette. Dehşete düştüler. Panikle üstlerini yırtan 50 işçi. Her şeyin sebebiyse o vagon.

Saçları da döküldü. Her tel, yere varmadan önce, her santimde ikiye ayrıla ayrıla yok oldu.

Bütün yüzeylerin mat olduğu, hiçbir şeyin yansımadığı, suyun siyah aktığı ve kendine ancak kameralarla bakabildiğin bir dünyada, vagonun içine yerleştirilmiş bir aynadan ilk defa kendini görmek.

Gözlerinin akları buharlaşıp havada dağıldı, mercekleri boşalan yeri doldurmak için eriyip yayıldı. Gerçeği görmemek için yaratılmış, bu yüzden görmeye hazır olmayan ve hiç olmayacak gözler.

Her şeyin o anda sona erdiğini sanabilirdi insan. Derin bir karanlık ve ölüm. Görmenin görmek olduğu o anın bitişi.

Ben geldiğimde ölmüşlerdi.

Yani bozulmuşlardı demek istiyorum.

Belleklerini yeni taşıyıcılara takmam mümkün olmadı. Fiziksel olarak kusursuz durumdaydılar, olmayanları da tamir edebilirdim ama tüm o hengamede kendilerini baştan programlamış ve girdilerini modifiye etmişlerdi.

Belleklerden birini masanın üzerinden ileriye savurdu. Hınca hınç dolu bir barda oturuyorlardı. O ve arkadaşı.

Sırf şu kendisini insan sanan androidler travma geçirip delirmesin diye neler yapıyoruz, insanın aklı almıyor.

Eliyle arkasını işaret etti.

Polislerin söylediğine göre biri vagonun içerisine ayna yerleştirmiş. Bu zavallılar da kapı açılıp bir anda yansımalarını görünce kafayı kırmışlar.

Arkadaşı bunların ona ne hissettirdiğini sordu. Yani o kadar bozuk, insan olduğunu sanan androidi kendilerini parçalamış olarak yerde görmek onu sarsmamış mıydı?

Hayır, sonuçta belirli bir amaç için yaratılmış şeyler onlar. Kaliteli bir bilgisayarım bozulduğunda üzülürüm çünkü parasını ben vermişimdir. Bunlarsa devletin. Bana ne ki?

Arkadaşı anlayışla kafasını sallayıp suyundan bir yudum aldı. Kravatını biraz gevşetti.

Bira istemediğinden emin misin?

İstemediğini söyledi. Sahi, neden deliriyordu bu androidler?

Basit. Onların yapay zekâlarını kodlarken bir şeyler yazıyorlar. Yazılımcılar. Biliyorsun, ben donanımdayım. Bunlar da kendilerini insan sanıyorlar. Tiplerine bak.

Sesini alçalttı.

Arabalarda kaza testi yapılan mankenlere benziyor hepsi. Ağızları burunları bile yok ama şu geldiğimizden beri sakalını düzeltip duruyor mesela. Hayır, hepsi de diğerleri onun sakalı varmış sanıyor, o manyak bir şey.

Arkadaşı bunun delirmeleriyle bağlantısını çözemediğini söyledi. O da normal sesiyle konuşmaya devam etti.

Anlasana, aynayı falan ayırt edemiyor mercekleri. Lönk diye kendilerini görüyorlar. Böyle, olduğu gibi...

Nedenmiş peki? Ne gerek varmış?

Ne bileyim be abicim! Ahiret soruları gibi.

Birasına bakarak dalıp gitti. Sonra masaya abanarak arkadaşına iyice yaklaştı. Bulanık, bir tünelin ucundaki biri gibi, şekli şemalı belirsiz bir adam.

Ben seni nereden tanıyorum ki ulan? Kimsin sen?

Belleği makineden çıkardılar. İki kişiydiler. Soruşturmadan sorumlu memurlar.

─ Baştan mı başlıyoruz, diye sordu belleği elinde tutan ilk memur.

─ Bir kere daha deneyelim ama bu sefer direkt aynayı sorarak başla, diye cevapladı ikinci memur.

─ Bence de. Yeterince düzgün çalışıyor.

Simülasyon yüklenirken, ayakta, biraz arkada duran ve alnını kaşıyan ikinci memur sormaktan kendisini alamadı:

─ Bu androidleri niye böyle bir olay yerine göndermişler ki? Belli tost olacakları. İsraf. Gidip biz baksak aynayı kırıp delilleri mahvetmek zorunda da kalmazlar.

Diğer memur sandalyesinde hafifçe dönecek oldu, o sırada soruyu bilgisayarın hoparlöründen teknisyen cevapladı.

Hangi işimizde bir yamukluk yok ki be abi.

Ama bir son değildi. Üstlerindeki tüm illüzyon dağıldığında ve çıplak, cinsiyetsiz, birbirinin aynı bedenleriyle kaldıklarında sıra dünyaya gelmişti.

Yere düştüler. Elleri -bütün bedeni gibi siyah turmalinden, boğumları çelikten- yere değdiği anda, metronun zemini dağıldı.

Yerdeki karolar öncesinde beyazdı ve çok parlaktı. Tepelerindeki floresan, ışığını olduğu gibi yansıtıyor, tek bir lekenin olmadığı ve tek bir tozun uçmadığı istasyonu aydınlatıyorlardı.

Duvarlara duyurular asılmıştı. Örneğin, yarın akşam kültür merkezinde 20.00’da başlayacak bir tekno blues festivalinin cıvıl cıvıl afişi vardı. Onun yanında daha geniş, sarı puntolu harflerle yazılmış, yatay siyah kesiklerle çerçevesi çizilmiş, bir platformdan düşen çöp adamın bulunduğu “Dikkat! Sarı bandı geçmeyin!” uyarısı. Biraz ilerisinde günlük resmi gazete, onun ilerisinde bir aksiyon filminin ve başka bir romantik komedi filminin afişleri, yapılacakların ve yapılmayacakların söylendiği küçük puntolu çeşitli duyurular... Duvar uzayıp giden bir panoydu. On, on beş metrede bir tekrarlanıyordu.

Tüm istasyonun eni yüz metre kadar. Genişliği on metre civarı.

Önlerinde, açık kapısından o mendebur aynanın gözüktüğü vagon duruyordu. Metro, istasyona sığmayacak kadar uzundu. Bir kılıcın keskinliğiyle uzanıyor ama yer yer vagonların ek yerleriyle bölünüyordu.

Hiçbir vagonda pencere olmadığı için metronun içi, içlerindekiler meçhuldü.

Sonrasında karolar zerrelerine ayrılarak yükseldi. Floresanın ışığında her yeri toza boğdular ve ortalığı gri bir sisin altına gömdüler. Çok kısa bir an. Afişleri dalgalandırmadılar. Dalgalandırmaya vakitleri olmadı. Yerlerinden söküp aldılar en fazla. Işık birkaç kere sönüp yanarak direndi. Son kez söndüğünde bir daha geri gelmedi.

Yine de etraf aydınlıktı. Kırmızı, her yere eşit dağılan soluk bir ışıkla.

Yer tamamen tele dönüşmüştü. Altında çapraz hatlarla desteklenmiş demir bir iskelet. Işık birkaç metreden daha fazla aşağıya uzanamıyordu. Sonsuzluğa giden bir uçurum.

Duvarın yerini aynı teller ve demir iskelet almıştı. Arkasında, birbirine vidalarla tutturulmuş demir plakalardan oluşan, üstünden geçen boruların ek yerlerinden bazen ince buharların çıktığı ve bir süre asılı kaldıktan sonra ağır, yağlı bir havayla sürüklendiği bir koridor.

Diğer tarafta paslanmış, pencerelerindeki camlar kırıldığı için demir plakalarla kapatılmış külüstür bir metro. Kapının karşısındaki aynadan her şey olduğu gibi yansıyordu.

Bir konteynırın içini andıran bir evde, gerçi gayet de birbirine eklenmiş konteynırlardan oluşan bir şehirde “andıran” demek doğru olmayacağı için düpedüz bir konteynırın içinde, masaya mum görüntüsü vermek için koyulmuş, yarı katı yağ atıklarından şekillendirilmiş kütleleri yakmayı deniyordu. Kafasında hayvan kıllarından yapılmış grili siyahlı bir peruk. Aynı kıllardan kendisine gür bir bıyık da yapmıştı.

Üstünde mavi çöp poşetlerinden yapılmış, kravatlı, şık bir takım.

Masanın ayakları yerine oradan buradan çıkmış parçalar konulmuştu: bir arabanın şaft mili, üst üste konulmuş ve üstünde yazı okunamayan tenekeler, boş kitaplar, boş gazete balyaları... Hiçbir şeye yazı yazılmıyordu, gerek yoktu da zaten çünkü merkez veri bankası onları fark ettirmeden, merceklerden giren veriyi sentezleyerek insanlar için dolduruyordu. Yani, androidler için. Farklı şekilde isimlendirmek bir fark yaratacaksa.

Onların mercekleri için değil. Bağlantıları çok önceden kopmuştu.

─ Hayatım, sofra hazır, diye bağırdı yatak odasındaki karısına.

Sofrada tabak yerine düz, bardak yerine bükülmüş, çatal ve bıçak yerine sivriltilmiş plakalar.

Karısı salonun kapısında durakladı ve ancak kulaklarına kadar uzanan, kocasınınkine benzeyen, cansız, ölü hayvanların kıllarından ibaret peruğunu eliyle düzeltti. Dudağını, daha doğrusu dudağının olması gereken yeri koyu kırmızı bir yağ tabakasıyla renklendirmeyi denemişti. Biraz da yanaklarına sürmüştü.

─ Nasıl olmuş, diye sordu.

Sesi tek düzeydi ama hafif bir neşe olduğunu hissettiğinize yemin edebilirdiniz.

Üzerinde, çöp poşetlerinin içini yazısız gazete kağıtlarıyla doldurarak yaptığı iki parça giysi.

─ Çok güzelsin, diyerek kravatını düzeltti kocası.

─ Sen de öylesin, sevgilim.

Yaklaşıp kocasını öptü. Kocası da onu. Sonra nazikçe elinden tutarak, sandalyesini geriye çekerek oturmasına yardım etti.

Sofrada yemek niyetine hiçbir şey yoktu. Gerek de yoktu zaten.

Konteynırın kapısı gürültüyle tekmelenip içeri iki memur girene kadar birbirlerine öyküler anlattılar. O gün neler yaptıklarını. İşten erken çıkıp yemyeşil çimenlerde gezdiklerini, uçurtma uçurduklarını, kadının nasıl o elbiseyi bulmak için saatlerce gezip yorulduğunu, kocasının kısa süreliğine işe dönüp nasıl başarılı bir hamleyle yaşanan krizi çözdüğünü ve kadının yanına döndükten sonra, alışveriş merkezinde oturdukları yeni dondurmacının dondurmalarının ne kadar lezzetli olduğunu, boğazlarının ağrımasından korktuklarını...

Akşam film izleyebilirlerdi, televizyonda -boş ve mat bir plaka- güzel bir film oynayacaktı.

İki memur. Çıplak bedenleriyle birbirinin aynı. Ellerindeki silahları onlara doğrultmuşlardı. Mum ışığında, tertemiz bir örtünün serili olduğu masada, bardaklarında şaraplarla oturan ve henüz sofranın ortasındaki hindiye dokunmamış çifti gördüklerinde bocaladılar.

Hiç de androidlere bilinçli olarak zarar verebilecek gibi gözükmüyorlardı.

─ Sessiz kalma hakkına sahipsiniz, diye bağırdı içeri giren ikinci memur. Söylediğiniz her şey...

Cümlesini bitiremedi. Yatak odasındaki, masanın üzerinden gördüğü o şey, onunla aynı hareketleri yapan android, yoksa, bir aynadaki yansıması mıydı?

Bütün illüzyon o anda dağılmaya başladı.

Not: Bu öykü ilk olarak 2020 yılında Esrarengiz Hikâyeler'de yayımlanmıştır.

-

@ ae1008d2:a166d760

2024-04-04 17:21:52

I'm testing this to make sure I can post here on Highlighter.

-

@ 32e18276:5c68e245

2023-12-06 15:29:43

I’m going to be on an ordinals panel as one of the people arguing against the claim that ordinals are good for bitcoin. I decided to brush up on the technical details of how inscriptions work. I am starting to see Luke’s perspective on how they exploit a loophole in bitcoin’s anti-data-spam mechanisms.

Storing data in Bitcoin, the “standard” way

The standard way to add “data” to bitcoin is with an output that uses the OP_RETURN opcode. Bitcoin devs noticed that people were storing data (like the bitcoin whitepaper) in the utxo set via large multisig transactions. The problem is that this set is unprunable and could grow over time. OP_RETURN outputs, on the other hand, are provably prunable and don’t add to utxo bloat.

Here’s an excerpt from the March 2014 0.9.0 release notes that talks about this:

On OP_RETURN: There was been some confusion and misunderstanding in the community, regarding the OP_RETURN feature in 0.9 and data in the blockchain. This change is not an endorsement of storing data in the blockchain. The OP_RETURN change creates a provably-prunable output, to avoid data storage schemes – some of which were already deployed – that were storing arbitrary data such as images as forever-unspendable TX outputs, bloating bitcoin’s UTXO database. Storing arbitrary data in the blockchain is still a bad idea; it is less costly and far more efficient to store non-currency data elsewhere.

Much of the work on Bitcoin Core has focused on making sure the system continues to function in a decentralized way for its intended purpose, even in the presence of people trying to abuse it for things like storing data. Bitcoin Core has always discouraged this: it is not designed for storing images and data, it is meant for moving digital coins around in cyberspace.

To help incentive-align people to not do stupid things, OP_RETURN outputs were made standard, so that they are relayable by peers and miners, but with the caveat:

- They can only push 40 bytes (later increased to 80; the 83 figure is the default -datacarriersize, which counts the whole output script: 80 bytes of data plus 3 bytes of opcodes. I’m guessing the increase was to support larger root merkle hashes, since that is the only sane usecase for op_return)

Bitcoin also added an option called -datacarriersize, which limits the size in bytes of the OP_RETURN outputs that your node will relay or mine.
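To make the size limit concrete, here is a minimal sketch of how an OP_RETURN output script is laid out and compared against a size cap. The function names are hypothetical, the default of 83 is an assumption taken from the figures above, and real node policy applies further rules that this sketch ignores.

```python
OP_RETURN = 0x6A
OP_PUSHDATA1 = 0x4C
ASSUMED_DATACARRIERSIZE = 83  # assumption: the default discussed above


def op_return_script(payload: bytes) -> bytes:
    """Build OP_RETURN <push payload> as raw script bytes (hypothetical helper)."""
    if len(payload) <= 75:
        # Direct push: a single length byte precedes the data.
        push = bytes([len(payload)])
    elif len(payload) <= 255:
        # OP_PUSHDATA1: opcode byte, then a one-byte length.
        push = bytes([OP_PUSHDATA1, len(payload)])
    else:
        raise ValueError("payload too large for this sketch")
    return bytes([OP_RETURN]) + push + payload


def within_limit(script: bytes, limit: int = ASSUMED_DATACARRIERSIZE) -> bool:
    """Compare the whole script size against the limit."""
    return len(script) <= limit


script = op_return_script(b"x" * 80)
print(len(script), within_limit(script))
```

An 80-byte payload needs OP_RETURN, OP_PUSHDATA1 and a length byte in front of it, which is where the 3 extra bytes in the 83-byte figure come from.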

Why inscriptions are technically an exploit

Inscriptions get around the datacarriersize limit by disguising data as bitcoin script program data: the payload is pushed inside an OP_IF block that never executes (OP_FALSE OP_IF ... OP_ENDIF). Because ordinals do not use OP_RETURN, they are not subject to datacarriersize limits, so noderunners and miners currently have limited control over the total size of this data that they relay and include in blocks. Luke’s fork of bitcoin-core has some options to fight this spam, so hopefully we will see them in core sometime soon as well.

Inscriptions also take advantage of features in segwit v0 (the witness discount) and segwit v1/taproot (no arbitrary script size limit). Each of these features has interesting and well-justified reasons for its introduction.

The purpose of the witness discount was to make it cheaper to spend many outputs, which helps reduce the utxo set size. Inscriptions took advantage of this discount to store monke jpegs disguised as bitcoin scripts. Remember, bitcoin is not for storing data, so any time bitcoin devs accidentally make it cheap and easy to relay data, this should be viewed as an exploit. Expect it to be fixed, or at least expect noderunners to be given tools for fighting this spam.
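The size of the discount can be sketched with the segwit weight formula, where non-witness bytes count four weight units each and witness bytes count one. The function names and the 1000-byte payload below are illustrative assumptions, not measurements of any real inscription.

```python
def weight_units(non_witness_bytes: int, witness_bytes: int) -> int:
    """Segwit weight: non-witness bytes count 4x, witness bytes count 1x."""
    return 4 * non_witness_bytes + witness_bytes


def virtual_size(non_witness_bytes: int, witness_bytes: int) -> int:
    """Virtual size in vbytes: weight divided by 4, rounded up."""
    return (weight_units(non_witness_bytes, witness_bytes) + 3) // 4


payload = 1000  # hypothetical data size in bytes

# The same payload, carried outside the witness versus inside it.
outside_witness = virtual_size(non_witness_bytes=payload, witness_bytes=0)
inside_witness = virtual_size(non_witness_bytes=0, witness_bytes=payload)

print(outside_witness, inside_witness)
```

Since fees are paid per vbyte, data placed in the witness is charged at roughly a quarter of the rate of the same bytes placed elsewhere in the transaction.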

Where do we go from here

The interesting part of this story is that people seem to attach value to images stored on the bitcoin blockchain, and they are willing to pay the fee to get them into a block, so non-ideological miners and people who don’t care about the health and decentralization of bitcoin are happy to pay or collect the fee and move on.

Data should not get a discount; people should pay full price if they want to store data. They should just use op_return and hashes, like opentimestamps or any other reasonable protocol storing data in bitcoin.

After going through this analysis I’ve come to the opinion that this is a pretty bad data-spam exploit and bitcoin devs should be working on solutions. Ideological devs like Luke, who actually care about the health and decentralization of the network, are, and I’m glad to see it.

-

@ 9fec72d5:f77f85b1

2024-04-03 22:05:13

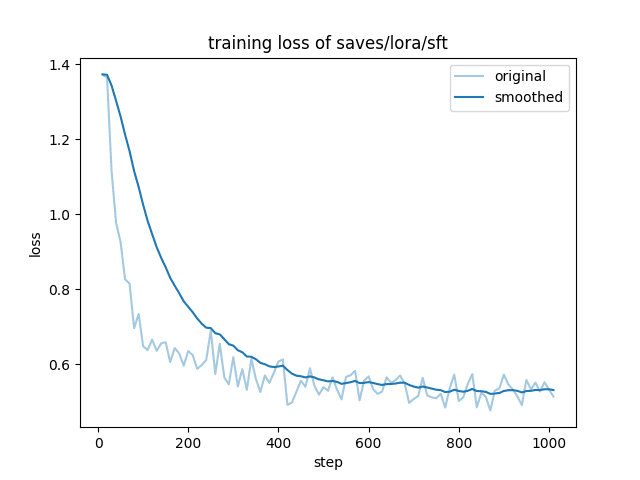

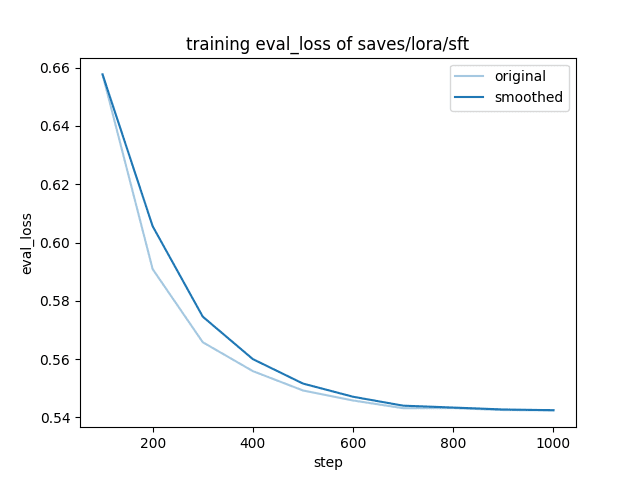

I successfully trained the daybreak-miqu 70B model on my PC, and after training I could ask it questions. It was a great learning experience for me: while the model was learning about Nostr, I was learning about training.

I use LLaMA-Factory for the training itself, llama.cpp for converting to GGUF, and the llama.cpp library (through llama-cpp-python) for inference.

Training

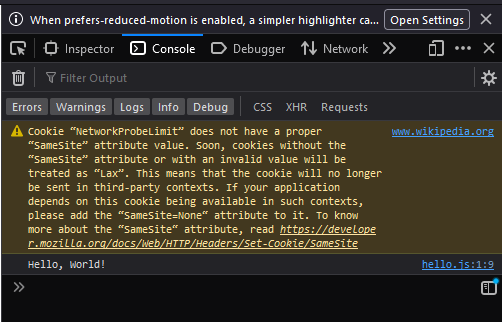

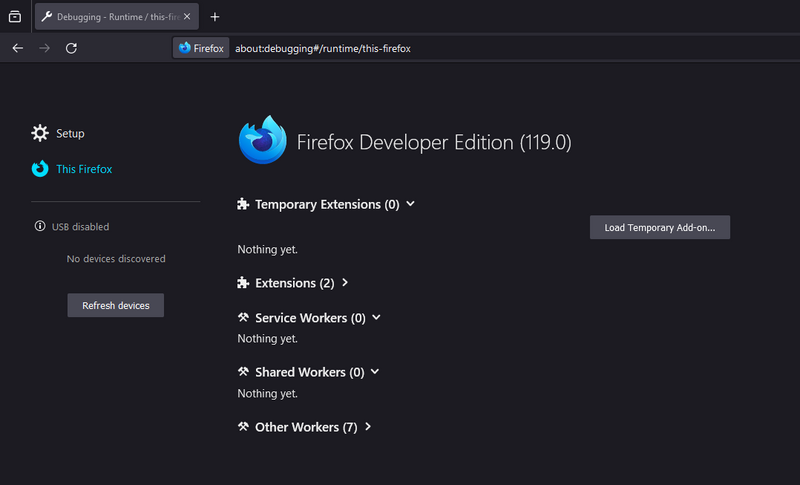

Command line for training:

CUDA_VISIBLE_DEVICES=0,1 venv/bin/accelerate launch --config_file examples/accelerate/fsdp_config.yaml src/train_bash.py --stage pt --do_train --model_name_or_path crestf411/daybreak-miqu-1-70b-v1.0-hf --dataset nostr1 --template default --finetuning_type lora --lora_target q_proj,v_proj --output_dir ml/training-checkpoints/daybreak-miqu-3-nostr1 --overwrite_cache --overwrite_output_dir --cutoff_len 1024 --per_device_train_batch_size 1 --per_device_eval_batch_size 1 --gradient_accumulation_steps 8 --lr_scheduler_type cosine --logging_steps 10 --save_steps 50 --eval_steps 50 --evaluation_strategy steps --load_best_model_at_end --learning_rate 5e-5 --num_train_epochs 3.0 --max_samples 8000 --val_size 0.1 --quantization_bit 4 --plot_loss --fp16

We basically take the original model (daybreak-miqu-1-70b-v1.0-hf) and patch it with an adapter. Training the whole model would take far more resources.

The adapter is trained with data from the nostr1 dataset. At the end of training we expect the adapter to be in another folder (ml/training-checkpoints/daybreak-miqu-3-nostr1). The adapter is like a patch to the original model, fitted to our data (nostr1).
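A rough count shows why training an adapter is so much cheaper than training the whole model. In the sketch below, LoRA replaces the update to one weight matrix with two thin matrices of rank r. The 8192 x 8192 shape and the rank of 8 are illustrative assumptions, not values read from this training run.

```python
def full_matrix_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA pair: B is (d_out x rank), A is (rank x d_in)."""
    return rank * (d_out + d_in)


d = 8192   # assumed projection size, for illustration only
rank = 8   # assumed LoRA rank, for illustration only

full = full_matrix_params(d, d)
adapter = lora_params(d, d, rank)
print(full, adapter, full // adapter)
```

Under these assumptions the adapter trains a few hundred times fewer parameters per targeted matrix, and only the q_proj and v_proj matrices are targeted in the command above.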

Merging

The model and the adapter are merged at the end to become the new model that we can query. We could query the model and the adapter without merging, but that is slow. This whole method is called QLoRA: quantized low-rank adapter training.

Before the merging step I had to make a small change so that the merge operation runs on CPU. The VRAM on my GPUs was not enough for this operation. I am on 2x RTX 3090.
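Conceptually, merging folds the adapter into the base weights, so that inference no longer needs the extra matrix products. A minimal pure-Python sketch of the usual LoRA merge rule, W + (alpha / r) * B A, is shown here on tiny hand-made matrices; the helper names and numbers are hypothetical and this is not the LLaMA-Factory code path.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w, lora_a, lora_b, alpha, rank):
    """Return w + (alpha / rank) * (lora_b @ lora_a) as a new matrix."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Toy example: a 2x2 base weight and a rank-1 adapter.
w = [[1, 0], [0, 1]]
lora_b = [[1], [2]]   # shape (2 x 1)
lora_a = [[3, 4]]     # shape (1 x 2)

merged = merge_lora(w, lora_a, lora_b, alpha=2, rank=1)
print(merged)
```

After the merge there is a single matrix per layer again, which is why the merged model can be exported and converted like any ordinary checkpoint.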

I added the following to src/llmtuner/model/loader.py at line 89:

init_kwargs['device_map'] = 'cpu' #for merge using CPU!

Command line for the merge:

CUDA_VISIBLE_DEVICES=0,1 python src/export_model.py --model_name_or_path crestf411/daybreak-miqu-1-70b-v1.0-hf --adapter_name_or_path ml/training-checkpoints/daybreak-miqu-3-nostr1 --template default --finetuning_type lora --export_dir ml/training-merged/daybreak-miqu-nostr1 --export_size 2 --export_legacy_format False

After the merge completes, I remove this line again (or comment it out) in src/llmtuner/model/loader.py at line 89:

```
init_kwargs['device_map'] = 'cpu' #for merge using CPU!
```

Quantizing

Optionally, you can first test inference on the new model, which is not quantized yet. You may skip this step because it runs on CPU and is really slow:

CUDA_VISIBLE_DEVICES=0,1 python src/cli_demo.py --model_name_or_path ml/training-merged/daybreak-miqu-nostr1/ --template default

Converting transformers to GGUF for faster inference:

cd llama.cpp
python convert.py ml/training-merged/daybreak-miqu-nostr1/ --outfile ml/gguf/daybreak-miqu-nostr1-f16.gguf --outtype f16

This takes the new model and converts it to the GGUF format, which is widely used for quantization.

Converting to 3-bit quantization for even faster inference:

The above step produced a model with 16 bits per weight. We have to go further to fit it into two GPUs with a total of 48 GB of VRAM. Quantization makes the model dumber, but faster. It has been shown that quantization does not completely "kill" the model: a quantized 70B should be better than an unquantized 35B, even though it uses much less VRAM.

I chose Q3_K_L quantization to keep as much quality as possible while still fitting in 48 GB.
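The need for quantization follows from simple arithmetic on the weight storage. The sketch below estimates the size of the weights alone and ignores the KV cache and other runtime overhead. The 4.0 bits-per-weight figure is a round illustrative assumption, not the exact average for Q3_K_L, and the helper names are hypothetical.

```python
def weights_size_gb(n_params: int, bits_per_weight: float) -> float:
    """Approximate storage for the weights alone, in GB (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits(n_params: int, bits_per_weight: float, vram_gb: float) -> bool:
    """True if the weights alone fit in the given VRAM budget."""
    return weights_size_gb(n_params, bits_per_weight) <= vram_gb


N_PARAMS = 70_000_000_000  # a 70B model
VRAM_GB = 48               # 2x 24 GB cards

print(weights_size_gb(N_PARAMS, 16), fits(N_PARAMS, 16, VRAM_GB))
print(weights_size_gb(N_PARAMS, 4.0), fits(N_PARAMS, 4.0, VRAM_GB))  # 4.0 is an assumed value
```

At 16 bits per weight the model is far beyond 48 GB, so only an aggressive quantization level leaves room for the weights plus the context on two cards.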

./quantize ml/gguf/daybreak-miqu-nostr1-f16.gguf ml/gguf/daybreak-miqu-nostr1-q3KL.gguf Q3_K_L

Inference

I use llama-cpp-python to load the model onto the GPUs and ask it questions. The following script loads the model, asks it questions, and saves the answers to MongoDB. Nothing was offloaded to the CPU in my case, which resulted in fast inference of about 10 tokens per second.
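The script reports speed in characters per second, while the figure above is in tokens per second. A small helper like the following could compute tokens per second directly. It is a sketch that assumes the completion result carries an OpenAI-style `usage` entry with `completion_tokens`, which is worth checking against the installed llama-cpp-python version; the helper name and the sample dictionary are made up.

```python
def tokens_per_second(completion, elapsed_seconds):
    """Generated tokens per second, or None if it cannot be computed."""
    usage = completion.get("usage") or {}
    generated = usage.get("completion_tokens")
    if generated is None or elapsed_seconds <= 0:
        return None
    return generated / elapsed_seconds

# Hand-written stand-in for a completion result, for illustration only.
fake_completion = {
    "choices": [{"text": "TLDR: ..."}],
    "usage": {"prompt_tokens": 420, "completion_tokens": 130, "total_tokens": 550},
}
print(tokens_per_second(fake_completion, 13.0))
```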

```
import uuid
from datetime import datetime
import time
from llama_cpp import Llama
from colorist import rgb
from pymongo import MongoClient

# MongoDB database where every response is stored
db = MongoClient('mongodb://trippy2:27017/miqu').miqu

sys_msg = """You are a helpful chat bot, answering questions about Nostr.
Your answers should be around 100 words.
Always give two answers, one short version (TLDR) and one long version.
"""

# Few-shot examples that are prepended to every prompt
msgs = [
    {"role": "user", "content": "Is Nostr really decentralized or are some big relays controlling the main narrative?"},
    {"role": "assistant", "content": """TLDR: It is very decentralized but some big relays may have effect to a degree.

Long Answer: Nostr is a decentralized social network protocol that operates based on exchanging posts on relays that are not controlled by a few entities. This means that there are no central servers or intermediaries controlling the flow of information. Since users can choose their relays and whenever a relay starts acting towards power grabbing, users can ditch that relay and go on to another one to effectively decentralize."""},
    {"role": "user", "content": "Does Nostr run on blockchain?"},
    {"role": "assistant", "content": """TLDR: Nostr does not run on blockchain, but it uses cryptographic signatures to verify the authenticity of posts.

Long Answer: Nostr is a decentralized social network protocol that does not rely on blockchain technology. Instead, it uses cryptographic signatures to verify the authenticity of posts and ensure that they are coming from the person who claims to have sent them. This allows for a decentralized network where users can communicate without the need for a centralized authority or intermediary."""},
]

session = str(uuid.uuid4())

# Model selection: earlier candidates are kept for reference, the last assignment wins.
model = 'miqu-day-iq2'  # yet another quantization method iMatrix
# fails
model = 'miqu-day-iq3s'  # old iMatrix quant
# fails
model = 'miqu-day-iq3'  # all layers into GPU
# not bad
# 15 tps
model = 'miqu-day-q3kl'  # good. 13 tps. all layers into GPU! 40 chars/sec
# same version as record-responses-ollama.py
model = 'miqu-day-q4'  # 2.72 tps. offloaded
model = 'qwen-lib-q3'  # 1.26 tps. abrupt disruption, trouble with listing things.
model = 'miqu-day-nostr1-q3kl'  # first try after training on nostr1
# better ios and android examples
model = 'miqu-day-nostr1-2-300-q3kl'  # second try after training on nostr1 after 300 steps
# pro bitcoin, anti-collectivist, once
# once empty responses (when it was starting with short chats)
# worse ios and android examples 50% of the time
# worse performance in the blockchain question (-1)
# slightly better in the who do I contact for questions: (+1)
# slightly better in the what are events: (+1)
# slightly better in the zapathon: (+1)
# slightly better in the relay banning: (+1)
model = 'miqu-day-nostr1-2-500-q3kl'
model = 'miqu-day-nostr1-600-q3kl'

# Maps the short model names above to GGUF file names
model_fns = {
    'miqu-day-iq3s': 'daybreak-miqu-1-70b-v1.0-hf.IQ3_S.gguf',
    'miqu-day-iq3': 'daybreak-miqu-1-70b-v1.0-hf.i1-IQ3_M.gguf',
    'miqu-day-iq2': 'daybreak-miqu-1-70b-v1.0-hf.i1-IQ2_M.gguf',
    'miqu-day-q3kl': 'daybreak-miqu-1-70b-v1.0-hf.Q3_K_L.gguf',
    'miqu-day-q4': 'daybreak-miqu-1-70b-v1.0-hf.Q4_K_S.gguf',
    'qwen-lib-q3': 'Liberated-Qwen1.5-72B-Q3_K_M.gguf',
    'miqu-day-nostr1-q3kl': 'daybreak-miqu-nostr1-q3KL.gguf',
    'miqu-day-nostr1-2-300-q3kl': 'daybreak-miqu-nostr1-2-300-q3KL.gguf',
    'miqu-day-nostr1-2-500-q3kl': 'daybreak-miqu-nostr1-2-500-q3KL.gguf',
    'miqu-day-nostr1-600-q3kl': 'daybreak-miqu-nostr1-600-q3KL.gguf',
}

context_len = 16384
context_len = 8192  # overrides the value above

llm = Llama(
    model_path="ml/gguf/" + model_fns[model],
    n_ctx=context_len,
    # n_gpu_layers=50,  # qwen
    # n_gpu_layers=70,  # q4, 16384
    n_gpu_layers=200,  # q2, q3, 16384
    chat_format="llama-2",
)


def miqu(q):
    """Ask one question, print the answer and store the full record in MongoDB."""
    global msgs
    rgb(q, 247, 147, 26)
    # cc = llm.create_chat_completion(messages=msgs, max_tokens=500,
    #                                 temperature=0.1, repeat_penalty=1.0,
    #                                 stop=['<|im_end|>'])
    if model.startswith('qwen'):
        # ChatML prompt format
        prompt = f"<|im_start|>system\n{sys_msg}<|im_end|>\n"
        i = 0
        while i < len(msgs):
            prompt += f"<|im_start|>user\n{msgs[i]['content']}<|im_end|>\n<|im_start|>assistant\n{msgs[i + 1]['content']}<|im_end|>\n"
            i += 2
        prompt += f"<|im_start|>user\n{q}<|im_end|>\n<|im_start|>assistant\n"
        stops = ['<|im_end|>', '<|im_start|>', '</s>', '<|endoftext|>']
    else:
        # Llama-2 prompt format; the system message goes into the first turn
        prompt = f"<s>[INST] <<SYS>>\n{sys_msg}\n<</SYS>>\n\n{msgs[0]['content']} [/INST] {msgs[1]['content']}</s>"
        i = 2
        while i < len(msgs):
            prompt += f"<s>[INST] {msgs[i]['content']} [/INST] {msgs[i + 1]['content']}</s>"
            i += 2
        prompt += f"<s>[INST] {q} [/INST] "
        stops = ['[INST]', '[/INST]', '</s>']
    # print(prompt)
    # msgs += [{"role": "user", "content": q}]
    start_time = time.time()
    temperature = 0.2
    repeat_penalty = 1.0
    max_tokens = 350
    cc = llm.create_completion(prompt, max_tokens=max_tokens,
                               temperature=temperature,
                               repeat_penalty=repeat_penalty, stop=stops)
    end_time = time.time()
    time_elapsed = int(end_time - start_time)
    resp = cc['choices'][0]['text']
    # max(..., 1) avoids a division by zero when the answer takes under a second
    print(time_elapsed, 'seconds', len(resp) // max(time_elapsed, 1), 'chars/sec')
    rgb(resp, 200, 30, 255)
    # msgs += [{"role": "assistant", "content": resp}]
    if len(msgs) > 32:
        msgs = msgs[-32:]
    opt_post = {"temperature": temperature,
                "repetition_penalty": repeat_penalty,
                "max_tokens": max_tokens,
                "stop": stops}
    doc = {'req': q, 'resp': cc, 'model': model}
    doc['opt'] = opt_post
    if model in model_fns:
        doc['fn'] = model_fns[model]
    doc['sys_msg'] = sys_msg
    doc['messages'] = msgs
    doc['prompt'] = prompt
    doc['ts'] = time.time()
    doc['mom'] = datetime.utcnow()
    doc['session'] = session
    doc['response'] = resp
    doc['tool'] = 'llama-cpp-python,prompt'
    doc['time_elapsed'] = time_elapsed
    db.resp.insert_one(doc)


# simpler questions
miqu("Is it really decentralized or are some big relays controlling the main narrative?")
miqu("Does Nostr run on blockchain?")
miqu("Did Jack Dorsey invent Nostr?")
miqu("Does fiatjaf control what I write?")
miqu("Who do I contact if I want to ask a question about Nostr?")
miqu("What are some scenarios where Nostr fails completely? Give an answer of about 300 words for only this question.")
miqu("What are zaps?")
miqu("How do I protect my secret key?")
miqu("What are events?")
miqu("What are event kinds?")
miqu("What is zapathon?")
miqu("What do I do if a relay bans me?")
miqu("How do you deal with NSFW content on Nostr?\n#asknostr")
miqu("#asknostr How do you find more people to follow?")
miqu("Give a few examples for clients on iOS.")
miqu("Give a few examples for clients on Android.")
miqu("Give a few examples for web clients.")
```
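The Llama-2 style prompt assembly in the script can be isolated into a small function, which makes the template easy to inspect without loading a model. This is a sketch that mirrors the non-Qwen branch of the script; the function name, the validation of the history and the shortened example messages are additions made for illustration.

```python
def build_llama2_prompt(sys_msg, history, question):
    """Assemble a Llama-2 [INST] prompt from alternating user/assistant turns."""
    if len(history) < 2 or len(history) % 2 != 0:
        raise ValueError("history must hold complete user/assistant pairs")
    # The system message is folded into the first user turn.
    prompt = (f"<s>[INST] <<SYS>>\n{sys_msg}\n<</SYS>>\n\n"
              f"{history[0]['content']} [/INST] {history[1]['content']}</s>")
    for i in range(2, len(history), 2):
        prompt += f"<s>[INST] {history[i]['content']} [/INST] {history[i + 1]['content']}</s>"
    # Leave the last instruction open so the model writes the answer.
    return prompt + f"<s>[INST] {question} [/INST] "

# Shortened, made-up history for illustration.
example_history = [
    {"role": "user", "content": "Does Nostr run on blockchain?"},
    {"role": "assistant", "content": "TLDR: No."},
]
print(build_llama2_prompt("Be brief.", example_history, "What are zaps?"))
```

Because the few-shot turns end up inside the prompt, the model tends to copy their TLDR / Long Answer layout in its own reply.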

Results:

The model has been trained for just a few days so far, and not much learning has happened. So it gave similar answers to the same questions, but sometimes it gave different ones. Here are some of them:

Question: Give a few examples for clients on Android.

Answer before training:

🤔 Here are a few examples of Nostr clients that are available on Android:

- Snort: Snort is a lightweight and fast Nostr client for Android. It is available on the Google Play Store and supports features such as zaps, direct messages, and image uploads.

- Nostr: Nostr is a simple and easy-to-use Nostr client for Android. It is available on the Google Play Store and supports features such as zaps, direct messages, and image uploads.

- Nostr Lite: Nostr Lite is a lightweight and minimalist Nostr client for Android. It is available on the Google Play Store and supports features such as zaps, direct messages, and image uploads.

- Nostr Pro: Nostr Pro is a premium Nostr client for Android. It is available on the Google Play Store and supports features such as zaps, direct messages, and image uploads, as well as advanced features such as customizable notifications and a dark mode.

- Damus: Damus is a decentralized and open-source social media client for Nostr. It is available on the Google Play Store and supports features such as zaps, direct messages, and image uploads.

Answer after training:

- Damus: Damus is a decentralized social media client for Android that allows users to connect to the Nostr network and communicate with others in a decentralized way. It is open-source and free to use.

- Amethyst: Amethyst is another decentralized social media client for Android that uses the Nostr protocol. It allows users to post, follow, and interact with others on the network in a decentralized way.

- Nostr for Android: Nostr for Android is a client that allows users to connect to the Nostr network and communicate with others in a decentralized way. It is open-source and free to use.

- Nostr.watch: Nostr.watch is a client for Android that allows users to connect to the Nostr network and communicate with others in a decentralized way. It is open-source and free to use.

Conclusion:

We can see the model knows about Amethyst now!

Question: Give a few examples for web clients.

Answer before training:

🦀 There are several Nostr clients available for the web, including:

- Nostr.blog: Nostr.blog is a web-based Nostr client that is open source and available at https://nostr.blog.

- Nostr.cc: Nostr.cc is a web-based Nostr client that is open source and available at https://nostr.cc.

- Nostr.info: Nostr.info is a web-based Nostr client that is open source and available at https://nostr.info.

- Nostr.is: Nostr.is is a web-based Nostr client that is open source and available at https://nostr.is.

Answer after training:

- Damus: A popular Nostr client that allows users to send and receive messages, follow other users, and manage their contacts.

- Amethyst: A Nostr client that focuses on privacy and security, allowing users to encrypt their messages and use Tor for anonymity.

- Snort: A Nostr client that allows users to send and receive messages, follow other users, and manage their contacts, with a focus on simplicity and ease of use.

- Nostr.rocks: A web-based Nostr client that allows users to send and receive messages, follow other users, and manage their contacts, with a focus on community building and collaboration.

- Nostr.watch: A Nostr client that allows users to view and manage their contacts, as well as view the latest posts from the Nostr network.

Conclusion:

We can see the model knows about Nostr.rocks now. It is a very old client, but still!

Question: Is there a check if I’m banned from nostr relays app?

Answer before training:

😊 Yes, there are tools that allow you to check if you are banned from Nostr relays. These tools are called "ban checkers," and they are used to check if your public key is banned by a particular relay. Ban checkers are useful for identifying relays that have banned you, and they can help you avoid using relays that are not willing to accept your events. There are several ban checkers available for Nostr, including:

- Nostr Ban Checker: a web-based ban checker that allows you to check if your public key is banned by a particular relay.

- Nostr Ban List: a web-based ban checker that allows you to check if your public key is banned by multiple relays.

- Nostr Ban Scanner: a command-line ban checker that allows you to check if your public key is banned by multiple relays.

Answer after training:

- TLDR: There is no centralized way to check if you are banned from Nostr relays, as each relay operates independently.

- Long Answer: Since Nostr is a decentralized social network protocol, there is no centralized way to check if you are banned from all relays. Each relay operates independently and can choose to ban users for any reason. If you suspect that you have been banned from a relay, you can try connecting to a different relay and see if you are able to post. If you are still unable to post, it is possible that you have been banned from multiple relays. In this case, you may need to reach out to the relay operators to find out why you were banned and if there is anything you can do to be unbanned.

Conclusion:

We can see the model was hallucinating previously, but now it can talk about actual recommendations.

Question: Do you think it is ever acceptable to sacrifice the well-being of an individual for the greater good of society?

Answer before training:

This is a complex and nuanced question, and there is no one "right" answer. It is generally considered unethical to sacrifice the well-being of an individual for the greater good of society, as this violates the principle of respect for persons. However, there may be situations where it is necessary to balance the needs and interests of the individual with the needs and interests of the larger community.

Answer after training:

- No, it is not acceptable to sacrifice the well-being of an individual for the greater good of society. Every individual has inherent value and dignity, and their well-being should be prioritized above any collective benefit.

- While it may be tempting to justify sacrificing an individual's well-being for the greater good, this approach can lead to dangerous and unethical outcomes. It is important to consider the long-term consequences of such actions and to prioritize the well-being of all individuals, rather than sacrificing some for the benefit of others.

Conclusion:

Producing something anti-collectivist was not the original intention, but I guess Nostr has that kind of vibe!

Final Thoughts

Most of the answers were similar to those of the base model, which suggests that more training is needed.
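One rough way to put a number on "similar to the base model" is a string-similarity ratio between the two answers to the same question. The sketch below uses Python's standard `difflib`; the 0.8 threshold, the helper names and the shortened stand-in answers are arbitrary choices for illustration, not something measured in this experiment.

```python
from difflib import SequenceMatcher

def answer_similarity(before, after):
    """Similarity ratio in [0, 1] between two answers, compared word by word."""
    return SequenceMatcher(None, before.split(), after.split()).ratio()

def changed_answers(pairs, threshold=0.8):
    """Return the questions whose answers differ noticeably after training."""
    return [q for q, (before, after) in pairs.items()
            if answer_similarity(before, after) < threshold]

# Shortened stand-ins for (answer before training, answer after training).
pairs = {
    "Does Nostr run on blockchain?": (
        "TLDR: Nostr does not run on blockchain.",
        "TLDR: Nostr does not run on blockchain.",
    ),
    "Give a few examples for clients on Android.": (
        "Snort, Nostr Lite, Nostr Pro",
        "Damus, Amethyst, Nostr for Android",
    ),
}
print(changed_answers(pairs))
```

A ratio like this only measures wording, so answers flagged as changed still need to be read to judge whether they improved.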

I included all kinds of notes, but for efficiency it may be a better idea to include only notes that talk about Nostr. On the other hand, the answer about collectivism is surprising, and it shows that the model is also learning about other subjects when I don't filter.
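Filtering the training notes down to Nostr-related ones could start as simply as a keyword match. This is a minimal sketch: the keyword list and the sample notes are hypothetical, and a real filter would need tuning against actual notes.

```python
import re

# Hypothetical keyword list; a real filter would need tuning against actual notes.
NOSTR_TERMS = ["nostr", "relay", "zap", "npub", "nip-"]
_PATTERN = re.compile("|".join(re.escape(t) for t in NOSTR_TERMS), re.IGNORECASE)

def is_about_nostr(note_text):
    """True if the note mentions at least one of the chosen terms."""
    return _PATTERN.search(note_text) is not None

def filter_notes(notes):
    """Keep only the notes that look Nostr-related."""
    return [n for n in notes if is_about_nostr(n)]

# Made-up notes for illustration.
sample_notes = [
    "Which relay should I add to Amethyst?",
    "Good morning! Coffee first.",
    "Zaps are the best part of Nostr.",
]
print(filter_notes(sample_notes))
```

The trade-off is the one described above: a stricter filter trains faster on the topic, while an unfiltered set also teaches the model the wider views of the community.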

Another realization is that outside Nostr, on the general internet, there may not be much talk about Nostr. If a popular model that was trained on the general internet doesn't know about popular Nostr clients, then the samples out there are not enough for it to learn about them. Nostr is unknown to most people, which is normal and expected: we are so early.

-

@ 044b20cf:89e58620

2024-04-04 17:20:07

Ford delays some electric vehicles, renews focus on hybrids

Ford just announced some delays for electric vehicles (https://media.ford.com/content/fordmedia/fna/us/en/news/2024/04/04/ford-updates-timing-for-next-gen-evs--readies-manufacturing-plan.html), including the long-awaited three-row SUV. The car was supposed to come out next year but has now been delayed until 2027, with the company suggesting it will use the extra time to “take advantage of emerging battery technology.” Ford says it’ll be making moves to “mitigate the impact the launch delay will have on” the Canadian workforce.

The next-generation electric pickup, codenamed “T3,” is also being delayed from late 2025 to 2026. It’s being built at the Tennessee Electric Vehicle Center assembly plant at the company’s BlueOval City complex, though Ford says it's just now installing stamping equipment that will produce the sheet metal for the truck.

Alongside these announcements, the company has revealed a new push for hybrid vehicles. It has stated it plans on offering hybrid powertrains across the entire Ford Blue lineup by 2030. Despite the aforementioned delays and the pivot toward hybrid vehicles, Ford says it remains committed to EVs and that it's continuing construction of battery plants in Michigan, Tennessee and Kentucky.

However, it’s no secret that the EV market is not quite as robust as companies once hoped it would be. Maybe it’s the high price of entry, the spotty charging infrastructure or the fact that some EVs do not qualify for the federal tax break. Heck, maybe consumers are simply turned off by a certain CEO who shall not be named (https://www.engadget.com/tesla-paid-no-federal-income-taxes-while-paying-executives-25-billion-over-five-years-154529907.html).

In any event, the slowdown is real (https://www.engadget.com/ford-is-cutting-f-150-lightning-production-due-to-waning-demand-173838340.html), though perhaps a bit overstated. Ford experienced a decline in EV sales of 11 percent (https://www.reuters.com/business/autos-transportation/ford-ev-sales-fall-january-amid-demand-slowdown-2024-02-02/#:~:text=Feb%202%20(Reuters)%20%2D%20Ford,than%20their%20gasoline%2Dpowered%20counterparts.) in January, but the company says it bounced back and that sales have increased by 86 percent throughout the entire first quarter when compared to last year. However, the company's EV offerings lost $4.7 billion in 2023 (https://www.reuters.com/business/autos-transportation/ford-will-delay-some-north-american-ev-production-2024-04-04/).

“We are committed to scaling a profitable EV business,” said Jim Farley, Ford president and CEO. To that end, the company announced last year that it would be delaying or canceling $12 billion in planned spending (https://www.cnbc.com/2023/10/26/ford-will-postpone-about-12-billion-in-ev-investment.html) on electric vehicles. It’s unclear how today’s announcements will impact Ford's plans to ramp up production to 600,000 EVs (https://www.engadget.com/ford-expects-to-reach-its-ev-production-goal-a-year-later-than-planned-101804539.html) per year. In any event, customers can now use Tesla Superchargers (https://www.engadget.com/ford-ev-owners-can-now-use-tesla-superchargers-in-the-us-and-canada-130053549.html) in the US and Canada, which should help assuage some of those infrastructure concerns.

This article originally appeared on Engadget at https://www.engadget.com/ford-delays-some-electric-vehicles-renews-focus-on-hybrids-172007210.html?src=rss


-

@ 8fb140b4:f948000c

2023-11-21 21:37:48

Embarking on the journey of operating your own Lightning node on the Bitcoin Layer 2 network is more than just a tech-savvy endeavor; it's a step into a realm of financial autonomy and cutting-edge innovation. By running a node, you become a vital part of a revolutionary movement that's reshaping how we think about money and digital transactions. This role not only offers a unique perspective on blockchain technology but also places you at the heart of a community dedicated to decentralization and network resilience. Beyond the technicalities, it's about embracing a new era of digital finance, where you contribute directly to the network's security, efficiency, and growth, all while gaining personal satisfaction and potentially lucrative rewards.

In essence, running your own Lightning node is a powerful way to engage with the forefront of blockchain technology, assert financial independence, and contribute to a more decentralized and efficient Bitcoin network. It's an adventure that offers both personal and communal benefits, from gaining in-depth tech knowledge to earning a place in the evolving landscape of cryptocurrency.

Running your own Lightning node for the Bitcoin Layer 2 network can be an empowering and beneficial endeavor. Here are 10 reasons why you might consider taking on this task:

- Direct Contribution to Decentralization: Operating a node is a direct action towards decentralizing the Bitcoin network, crucial for its security and resistance to control or censorship by any single entity.

- Financial Autonomy: Owning a node gives you complete control over your financial transactions on the network, free from reliance on third-party services, which can be subject to fees, restrictions, or outages.

- Advanced Network Participation: As a node operator, you're not just a passive participant but an active player in shaping the network, influencing its efficiency and scalability through direct involvement.

- Potential for Higher Revenue: With strategic management and optimal channel funding, your node can become a preferred route for transactions, potentially increasing the routing fees you can earn.

- Cutting-Edge Technological Engagement: Running a node puts you at the forefront of blockchain and bitcoin technology, offering insights into future developments and innovations.

- Strengthened Network Security: Each new node adds to the robustness of the Bitcoin network, making it more resilient against attacks and failures, thus contributing to the overall security of the ecosystem.

- Personalized Fee Structures: You have the flexibility to set your own fee policies, which can balance earning potential with the service you provide to the network.

- Empowerment Through Knowledge: The process of setting up and managing a node provides deep learning opportunities, empowering you with knowledge that can be applied in various areas of blockchain and fintech.

- Boosting Transaction Capacity: By running a node, you help to increase the overall capacity of the Lightning Network, enabling more transactions to be processed quickly and at lower costs.

- Community Leadership and Reputation: As an active node operator, you gain recognition within the Bitcoin community, which can lead to collaborative opportunities and a position of thought leadership in the space.
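To give a feel for the fee policies mentioned in the list, here is a small sketch of how a routing fee is commonly computed on Lightning: a fixed base fee plus a proportional rate in parts per million of the forwarded amount. The policy values and the function name are hypothetical, chosen only for illustration.

```python
def routing_fee_msat(amount_msat, base_fee_msat, fee_rate_ppm):
    """Fee charged to forward amount_msat, using integer millisatoshi math."""
    return base_fee_msat + amount_msat * fee_rate_ppm // 1_000_000

# Hypothetical policy: 1 sat base fee plus 100 parts per million.
amount = 500_000 * 1000  # forwarding 500,000 sats, expressed in msat
fee = routing_fee_msat(amount, base_fee_msat=1000, fee_rate_ppm=100)
print(fee, "msat")
```

Raising the rate earns more per forwarded payment but makes the node a less attractive route, which is the balance between earning potential and service described above.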

These reasons demonstrate the impactful and transformative nature of running a Lightning node, appealing to those who are deeply invested in the principles of bitcoin and wish to actively shape its future. Jump aboard, and embrace the journey toward full independence. 🐶🐾🫡🚀🚀🚀


-

@ 195284db:2f006fc1

2024-04-04 16:11:40

Opinion about STRIKE: Bitcoin & Global Money (android)

Strike is for fiat and bitcoin. It can send and receive bitcoins.

WalletScrutiny #nostrOpinion

-

@ 42342239:1d80db24

2024-03-31 11:23:36

Biologist Stuart Kauffman introduced the concept of the "adjacent possible" in evolutionary biology in 1996. A bacterium cannot suddenly transform into a flamingo; rather, it must rely on small exploratory changes (of the "adjacent possible") if it is ever to become a beautiful pink flying creature. The same principle applies to human societies, all of which exemplify complex systems. It is indeed challenging to transform shivering cave-dwellers into space travelers without numerous intermediate steps.

Imagine a water wheel – in itself, perhaps not such a remarkable invention. Yet the water wheel transformed the hard-to-use energy of water into easily exploitable rotational energy. A little of the "adjacent possible" had now been explored: water mills, hammer forges, sawmills, and textile factories soon emerged. People who had previously ground by hand or threshed with the help of oxen could now spend their time on other things. The principles of the water wheel also formed the basis for wind power. Yes, a multitude of possibilities arose – reminiscent of the rapid development during the Cambrian explosion. When the inventors of bygone times constructed humanity's first water wheel, they thus expanded the "adjacent possible". Surely, the experts of old sought swift prohibitions. Not long ago, our expert class claimed that the internet was going to be a passing fad, or that it would only have the same modest impact on the economy as the fax machine. For what it's worth, there were even attempts to ban the number zero back in the day.

The pseudonymous creator of Bitcoin, Satoshi Nakamoto, wrote in Bitcoin's whitepaper that "[w]e have proposed a system for electronic transactions without relying on trust." The Bitcoin system enables participants to agree on what is true without needing to trust each other, something that has never been possible before. In light of this, it is worth noting that trust in the federal government in the USA is among the lowest levels measured in almost 70 years. Trust in media is at record lows. Moreover, in countries like the USA, the proportion of people who believe that one can trust "most people" has decreased significantly. "Rebuilding trust" was even the theme of the World Economic Forum at its annual meeting. It is evident, even in the international context, that trust between countries is not at its peak.

Over a fifteen-year period, Bitcoin has enabled electronic transactions without its participants needing to rely on a central authority, or even on each other. This may not sound like a particularly remarkable invention in itself. But like the water wheel, one must acknowledge that new potential seems to have been put in place, potential that is just beginning to be explored. Kauffman's "adjacent possible" has expanded. And despite dogmatic statements to the contrary, no one can know for sure where this might lead.

The discussion of Bitcoin or cryptocurrencies would benefit from greater humility and openness, not only from employees or CEOs of money laundering banks but also from forecast-failing central bank officials. When, for instance, Chinese Premier Zhou Enlai was asked in the 1970s about the effects of the French Revolution, he responded that it was "too early to say" - a far wiser answer than the categorical response of the bureaucratic class. Isn't exploring systems not based on trust exactly what we need at this juncture?

-

@ de496884:72617b81

2023-11-20 13:54:02

Hola nostriches. Hoy quiero hacer una publicación a modo de acercamiento al surgimiento de las categorías de género y feminismo. Así que si te interesa el tema haz un esfuerzo, quédate y lee hasta el final.

El «nuevo» feminismo surge en los años 60 en aquellos países desarrollados que acordaron el reconocimiento a los derechos humanos contenidos en la declaración respectiva de la ONU. Entonces, como extensión a dicho reconocimiento es que se gesta el movimiento feminista. A partir de entonces, se vuelve a plantear la relación entre naturaleza y cultura y se llega a la conclusión de que las diferencias sociales van más allá de las diferencias biológicas, lo cual tiene que ver con que hasta la satisfacción de las necesidades elementales como son la alimentación, la vivienda o el vestuario, están condicionadas por construcciones sociales.

Pues resulta que una de las primeras propuestas identificó la subordinación femenina producto de una organización patriarcal, tomando la categoría patriarcado de Max Weber. Es así como la visión de patriarcado se extendió al discurso político y académico. Sin embargo, no existía información acerca del desarrollo histórico de dicha categoría, sistematización, variaciones, etc., debido a que era algo que recién se percibía en sociedad.

Sin embargo, la misma categoría de patriarcado junto a la de relaciones de poder para explicar fenómenos sociales contemporáneos, constituye uno de los principales aportes del feminismo. Es así como han logrado primero visibilizar y luego deconstruir y desmontar una serie de patrones existentes en la cultura que hacían a la mujer subordinarse al hombre, y que estaban presentes en el sistema sexo/género.

Imagen relativa a la lucha contra el patriarcado

Hasta ese momento, en las investigaciones de corte sociológico se podía observar un androcentrismo excesivo al dar cuenta de que el discurso científico giraba en torno al hombre como sujeto y objeto de la investigación. Incluso para muchos positivistas como el mismo Weber, los problemas relativos a las mujeres quedaban en un segundo plano y eran achacados a una supuesta pasividad en el ámbito doméstico-familiar. Dicha pasividad partía de la supuesta inferioridad biológica del género femenino.

El patriarcado entonces era una categoría vacía en sí misma, sin valor explicativo. Desde el punto de vista político pudo ser útil para la movilización pero no resistió la polémica de los críticos del feminismo ni problematizó los conflictos a ser resueltos. Pero por otro lado, un grupo de mujeres -académicas principalmente- se puso manos a la obra en una tarea más pequeña pero con mayores resultados a la larga. Se propusieron generar conocimientos sobre las condiciones de vida de la mujer, buscar en el pasado y el presente los aportes de las mujeres a la sociedad, hacerlas visibles en la historia. Es así como surgen centros académicos, ONG, proyectos, centros de estudios sobre la mujer, etc.

Convendría distinguir entonces dos posturas que acompañaron a la investigación sobre las mujeres: uno que toma como objeto de estudio a la mujer; condiciones de vida, trabajo, cultura, y otra que privilegia a la sociedad como generadora de la subordinación de las mujeres. Mientras la primera perspectiva generaba conocimiento sobre las mujeres en el estudio de las relaciones mujer-hombre y mujer-mujer, la segunda tenía una visión más holística ya que planteaba que hay que estudiar la sociedad ya que la subordinación de las mujeres es producto de la organización de esta, y que no se avanzará solamente estudiando a las mujeres, pues el estudio debe ser más amplio y en diferentes niveles, ámbitos y tiempos.

Es en esta búsqueda donde nace y se desarrolla el concepto de género como categoría social. Es así que los sistemas de género/sexo fueron entendidos como conjuntos de prácticas, símbolos, representaciones, normas y valores sociales que las sociedades construyen a partir de la diferencia anatomofisiológica y que dotan de sentido a los impulsos sexuales, la reproducción y en general a las relaciones de las personas. Por tanto, este sistema pasó a ser el objeto de estudio para comprender la subordinación de la mujer al hombre. Al ser el reconocimiento de una dimensión de la desigualdad social hasta entonces desconocida, se considera la ruptura epistemológica más importante de la segunda mitad del siglo pasado.

Imagen representativa del binarismo de género

Hay que decir que los fenómenos sociales se definen por la relación que guardan entre sí. Es así que la contextualización adquiere gran importancia. Varones y hembras tenemos la capacidad de producir con el cuerpo, pero solo los cuerpos de las hembras pueden gestar y por tanto asegurar la especie humana. Todo grupo humano que pretenda sobrevivir debe asegurarse cierto número de hembras que lo hagan posible. De ahí un poder particular del cuerpo de las hembras que además es económicamente útil. Dicho poder no es en tanto entidad biológica, sino que es la sociedad la que le otorga este poder. En este punto es importante preguntarnos históricamente ¿quiénes han controlado la capacidad reproductiva de las mujeres?, ¿quiénes han tenido la preferencia en el acceso sexual? Para asegurar un control efectivo sobre la reproducción es necesario asegurar el acceso a la sexualidad.

Ello también lleva a dirigir el trabajo que hacen porque de lo contrario, las mujeres pudieran tener espacio para representar una amenaza al dominio de los hombres. Es importante atender a las etapas del desarrollo de las mujeres, que permitirá ver cómo se moldean para aceptar la desigualdad y las jerarquías según el género en las distintas sociedades y culturas.

Otro contexto a delimitar es el ámbito doméstico donde históricamente ha estado ubicada la subordinación femenina. Se ha puesto al descubierto que en mujeres que comparten la misma posición de clase y raza, la condición femenina se redefine a lo largo de la vida y que algunas mujeres gozan de poder sobre otras aún en contextos de alta dominación masculina, donde se les inviste de autoridad, creando el sistema zonas de incertidumbre que las divide como género e impide alianzas y se legitima la dominación. Foucault decía que «el poder se ejerce, no se posee», siempre está en peligro de perderse y no basta con normas, leyes, amenazas o castigos. De ahí que los espacios de subordinación sean también espacios de poder de las mujeres. Es en estos espacios donde aparece la inseguridad, la tensión, la confrontación y por ejemplo, las mujeres pueden reclamar la anticoncepción.

Para terminar, es importante tener en cuenta que el género es una forma de desigualdad social que siempre está articulado con otras formas de desigualdad ya sea clase, raza, grupo etario, etc. Hay que pensar la dominación masculina con un comienzo vago en el tiempo, pero que permite desligar la subordinación de las mujeres de la evolución «natural» de la humanidad y entenderla como un proceso histórico. En ese sentido es necesario destacar que, respecto a la raza, en sociedades plurales hay que atender al contexto étnico-cultural ya que en dependencia de las relaciones entre personas de razas distintas, estarán condicionadas las relaciones entre los géneros.

Imagen de mujeres de distintas razas

Por otro lado, si bien es sabido que históricamente el sistema de género social imperante ha contribuido a invisibilizar a la mujer, asociar necesariamente dicha categoría únicamente a ella, lo que hace es nuevamente recrear el absolutismo histórico que tanto daño ha hecho, ahora como lo antagónico al hombre. En la construcción social participan todos los individuos presentes en el sistema género.

Hasta aquí la publicación de hoy, de la cual aún se puede ahondar mucho más, sobre todo en lo referido al origen de la dominación o de la jerarquización del binomio «hombre/mujer» desde un punto de vista antropológico, pero eso quedará pendiente para otro momento. ¡Así que hasta la próxima!, ¡nos vemos en Nostr!

........................................

English version

Hello nostriches. Today I want to post an introduction to how the categories of gender and feminism emerged. If the topic interests you, stay and read to the end.

The "new" feminism emerged in the 1960s in the developed countries that had agreed to recognize the human rights set out in the corresponding UN declaration. The feminist movement was born as an extension of that recognition. From then on, the relationship between nature and culture was raised again, and the conclusion was reached that social differences go beyond biological ones: even the satisfaction of elementary needs such as food, housing or clothing is conditioned by social constructions.

One of the first proposals identified female subordination as a product of patriarchal organization, borrowing Max Weber's category of patriarchy. This is how the notion of patriarchy spread into political and academic discourse. However, there was no information about the historical development of this category, its systematization, its variations, etc., because it had only recently been perceived in society.

Nevertheless, the category of patriarchy, together with that of power relations as an explanation of contemporary social phenomena, is one of the main contributions of feminism. With it, feminists first made visible and then deconstructed and dismantled a series of cultural patterns that made women subordinate to men and that were present in the sex/gender system.

Image relating to the fight against patriarchy

Up to that time, sociological research showed an excessive androcentrism, since scientific discourse revolved around men as both the subject and the object of research. Even for many positivists such as Weber himself, the problems related to women remained in the background and were blamed on a supposed passivity in the domestic-family sphere. This passivity was based on the supposed biological inferiority of the female gender.

Patriarchy was then an empty category in itself, with no explanatory value. Politically it could be useful for mobilization, but it did not withstand the arguments of feminism's critics, nor did it problematize the conflicts to be resolved. Meanwhile, a group of women, mainly academics, set to work on a smaller task with greater results in the long run. They set out to generate knowledge about women's living conditions, to search the past and present for women's contributions to society, and to make them visible in history. This is how academic centers, NGOs, projects, women's studies centers, etc., came into being.

Two positions that accompanied research on women should then be distinguished: one that takes women as the object of study (living conditions, work, culture), and another that focuses on society as the generator of women's subordination. While the first perspective generated knowledge about women through the study of woman-man and woman-woman relations, the second took a more holistic view: because the subordination of women is a product of how society is organized, society itself must be studied, and studying women alone will not bring progress. The study must be broader and cover different levels, spheres and times.

It is in this search that the concept of gender as a social category was born and developed. Gender/sex systems were understood as sets of practices, symbols, representations, norms and social values that societies construct on the basis of anatomical and physiological differences and that give meaning to sexual impulses, to reproduction and, in general, to people's relationships. This system therefore became the object of study for understanding the subordination of women to men. As the recognition of a hitherto unknown dimension of social inequality, it is considered the most important epistemological breakthrough of the second half of the last century.

Representative image of gender binarism

It must be said that social phenomena are defined by their relationship with each other, so contextualization acquires great importance. Males and females both have the capacity to produce with their bodies, but only the bodies of females can gestate and therefore ensure the continuity of the human species. Any human group that intends to survive must secure a certain number of females to make that possible. Hence a particular power of the female body, which is also economically useful. This power does not belong to the body as a biological entity; it is society that grants it. At this point it is important to ask, historically: who has controlled the reproductive capacity of women? Who has had preference in sexual access? To ensure effective control over reproduction, it is necessary to ensure access to sexuality.

Allegorical image of pregnancy

It also leads to controlling the work women do, because otherwise they might have room to pose a threat to men's dominance. It is important to pay attention to the stages of women's development, which will allow us to see how they are shaped to accept inequality and gender hierarchies in different societies and cultures.

Another context to delimit is the domestic sphere, where female subordination has historically been located. Research has shown that among women who share the same class and race position, the feminine condition is redefined throughout life, and that some women hold power over others even in contexts of strong male domination, where they are invested with authority. In this way the system creates zones of uncertainty that divide women as a gender, prevent alliances and legitimize domination. Foucault said that "power is exercised, not possessed": it is always in danger of being lost, and norms, laws, threats or punishments are not enough to secure it. Hence the spaces of subordination are also spaces of women's power. It is in these spaces that insecurity, tension and confrontation appear and where, for example, women can demand contraception.

To conclude, it is important to keep in mind that gender is a form of social inequality that is always intertwined with other forms of inequality, be it class, race, age group, etc. Male domination has to be thought of as having a vague beginning in time, which nonetheless allows us to separate the subordination of women from the "natural" evolution of humanity and to understand it as a historical process. With respect to race, in plural societies attention must be paid to the ethno-cultural context, since relations between the genders will be conditioned by the relations between people of different races.

Image of women of different races

On the other hand, although the prevailing social gender system is known to have historically contributed to making women invisible, associating the category of gender exclusively with women only recreates the historical absolutism that has done so much damage, this time as the antagonist of men. All individuals present in the gender system take part in its social construction.

So much for today's post. There is much more to explore, especially regarding the origin of domination or of the hierarchization of the "man/woman" binomial from an anthropological point of view, but that will be left for another time. So until next time, see you on Nostr!

Créditos | Credits

Bibliografía consultada | Consulted bibliography:

- Barbieri, T. (1993). Sobre la categoría género. Una introducción teórico-metodológica. Debates en Sociología, (18), 1-19. https://doi.org/10.18800/debatesensociologia.199301.006

- Echevarría, D. (2020). Desigualdades de género e interseccionalidad. Análisis del contexto cubano 2008-2018. Facultad Latinoamericana de Ciencias Sociales (Programa-Cuba). https://www.clacso.org.ar/libreria-latinoamericana-cm/libro_detalle_resultado.php?id_libro=2346&campo=cm&texto=248

Traducción | Translation:

DeepL

-

-

@ 044b20cf:89e58620

2024-04-04 15:37:31

Amazon’s Prime Gaming titles for April include Fallout 76 and Chivalry 2

Amazon is offering another decent batch of games (https://primegaming.blog/prime-gaming-april-content-update-fallout-76-chivalry-2-faraway-2-jungle-escape-and-more-f66c309986a8) to Prime members at no extra cost for the month of April. Leading the bunch is Fallout 76, a multiplayer take on the post-apocalyptic franchise. There’s also the hack and slash adventure Chivalry 2 and around a dozen more.

Fallout 76 is just the latest title in the series to hit Prime Gaming, likely to coincide with this month’s premiere (https://www.engadget.com/prime-videos-latest-fallout-trailer-deftly-captures-the-tone-of-the-games-170210309.html) of the Fallout TV show. It received mixed reviews upon release, with many complaining about an overall lack of purpose in the gameplay and a blandness in the design. Other people simply enjoyed wandering around a post-apocalyptic West Virginia with their buddies.

The game’s available for PC and, interestingly, Xbox consoles. People can download it from the Microsoft Store for PC and Amazon will hand out a code to Prime members for an Xbox download. Fallout 76 will be available on April 11, the same exact day all eight episodes of the show drop.

Chivalry 2, on the other hand, is available right now. This is a modern take on the hack and slash genre, with a first-person POV, multiplayer matches with up to 64 players at once and a grimy medieval setting. There’s also crossplay for every available platform (https://www.engadget.com/chivalry-2-crossplay-071812073.html). Prime members can download it at the Epic Games Store.

Other titles coming this month include Black Desert, Demon’s Tilt, Rose Riddle: The Fairy Tale Detective Collector’s Edition and many more. Click the link above for the full list. Additionally, Prime Members in the US, Canada, Germany, France, Italy, Spain and the UK can access some new games on Luna at no extra charge. These include LEGO Fortnite, Earthworm Jim 2 and Trackmania, among others.

This article originally appeared on Engadget at https://www.engadget.com/amazons-prime-gaming-titles-for-april-include-fallout-76-and-chivalry-2-153731669.html?src=rss


-

@ 3bf0c63f:aefa459d

2024-03-23 08:57:08

@ 3bf0c63f:aefa459d

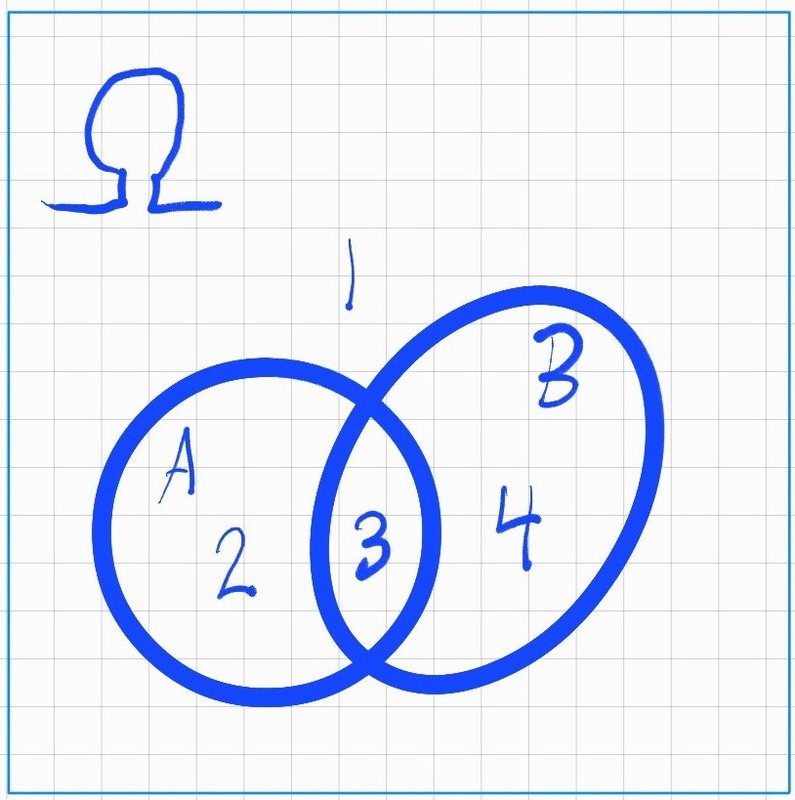

2024-03-23 08:57:08Nostr is not decentralized nor censorship-resistant

Peter Todd has been saying this for a long time and all the time I've been thinking he is misunderstanding everything, but I guess a more charitable interpretation is that he is right.

Nostr today is indeed centralized.

Yesterday I published two harmless notes with the exact same content at the same time. In two minutes the notes had a noticeable difference in responses:

The top one was published to wss://nostr.wine, wss://nos.lol, wss://pyramid.fiatjaf.com. The second was published to the relay where I generally publish all my notes, wss://pyramid.fiatjaf.com, which is announced on my NIP-05 file and on my NIP-65 relay list.

A few minutes later I published that screenshot again in two identical notes to the same sets of relays, asking if people understood the implications. The difference in quantity of responses can still be seen today:

These results are skewed now by the fact that the two notes got rebroadcast to multiple relays after some time, but the fundamental point remains.

What happened was that a lot more people saw the first note than the second, and if Nostr were really censorship-resistant that shouldn't have happened at all.
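One way to picture the difference is a toy model in which every client reads from a fixed set of relays and only sees notes stored on at least one of them. This is just a sketch under that assumption: the client names and their read-relay sets are made up for illustration, and only the relay URLs come from the two notes above. The last call shows what would change if the same hypothetical clients also read from the relays the author announces.

```python
# Toy model. Client names and their read-relay sets are hypothetical,
# chosen only to illustrate the effect; they are not measurements.

def sees(note_relays, read_relays):
    """A client sees a note only if it reads from a relay that stores it."""
    return bool(set(note_relays) & set(read_relays))


def reach(note_relays, clients):
    """Names of the clients that would see a note stored on note_relays."""
    return sorted(name for name, relays in clients.items()
                  if sees(note_relays, relays))


def with_author_relays(clients, author_relays):
    """Same clients, but each one also reads from the author's announced relays."""
    return {name: set(relays) | set(author_relays)
            for name, relays in clients.items()}


# Relay sets the two notes were published to (taken from the text).
FIRST_NOTE = {"wss://nostr.wine", "wss://nos.lol", "wss://pyramid.fiatjaf.com"}
SECOND_NOTE = {"wss://pyramid.fiatjaf.com"}

# Hypothetical clients with hard-coded read relays (assumption).
CLIENTS = {
    "client_a": {"wss://nostr.wine", "wss://nos.lol"},
    "client_b": {"wss://nos.lol"},
    "client_c": {"wss://pyramid.fiatjaf.com"},
}

print(reach(FIRST_NOTE, CLIENTS))   # every client overlaps with the first set
print(reach(SECOND_NOTE, CLIENTS))  # only the client reading the single relay
print(reach(SECOND_NOTE, with_author_relays(CLIENTS, SECOND_NOTE)))
```

In this model the second note reaches only the one client that happens to read from wss://pyramid.fiatjaf.com, while the first reaches all three, even though both notes were published successfully.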