-

@ a008def1:57a3564d

2025-04-30 17:52:11

A Vision for #GitViaNostr

Git has long been the standard for version control in software development, but over time, we have lost its distributed nature. Originally, Git used open, permissionless email for collaboration, which worked well at scale. However, the rise of GitHub and its centralized pull request (PR) model has shifted the landscape.

Now, we have the opportunity to revive Git's permissionless and distributed nature through Nostr!

We’ve developed tools to facilitate Git collaboration via Nostr, but there is still significant friction that prevents widespread adoption. This article outlines a vision for how we can reduce those barriers and encourage more repositories to embrace this approach.

First, we’ll review our progress so far. Then, we’ll propose a guiding philosophy for our next steps. Finally, we’ll discuss a vision to tackle specific challenges, mainly relating to the role of the Git server and CI/CD.

I am the lead maintainer of ngit and gitworkshop.dev, and I’ve been fortunate to work full-time on this initiative for the past two years, thanks to an OpenSats grant.

How Far We’ve Come

The aim of #GitViaNostr is to liberate discussions around code collaboration from permissioned walled gardens. At the core of this collaboration is the process of proposing and applying changes. That's what we focused on first.

Since Nostr shares characteristics with email, NIP34 adopts primitives similar to those used in the patches-over-email workflow. We chose them because they are simple and don’t require contributors to host anything, which adds reliability and makes participation more accessible.
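To make the patches-over-email analogy concrete, here is a minimal sketch of an unsigned patch event that carries `git format-patch` output. The kind numbers (1617 for patches, 30617 for repository announcements) and the `a`/`p` tags reflect my reading of NIP34 and should be checked against the spec; the function name, the placeholder pubkey, and the repository id are hypothetical, and signing and publishing are left out.

```python
import json
import time

PATCH_KIND = 1617               # NIP34 patch kind (assumed from the spec)
REPO_ANNOUNCEMENT_KIND = 30617  # NIP34 repository announcement kind (assumed)


def build_patch_event(patch_text, maintainer_pubkey, repo_id, created_at=None):
    """Return an unsigned Nostr event carrying one `git format-patch` patch."""
    return {
        "kind": PATCH_KIND,
        "created_at": int(time.time()) if created_at is None else created_at,
        "content": patch_text,
        "tags": [
            # Points at the repository announcement the patch targets.
            ["a", f"{REPO_ANNOUNCEMENT_KIND}:{maintainer_pubkey}:{repo_id}"],
            # Notifies the maintainer.
            ["p", maintainer_pubkey],
        ],
    }


# Hypothetical inputs: a truncated patch, a dummy 64-character hex pubkey, a made-up repo id.
example_patch = "From 1a2b3c Mon Sep 17 00:00:00 2001\nSubject: [PATCH] fix typo\n"
event = build_patch_event(example_patch, "ab" * 32, "example-repo", created_at=1700000000)
print(json.dumps(event, indent=2))
```

As with email, the contributor hosts nothing: the patch text travels inside the event itself.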

However, the fork-branch-PR-merge workflow is the only model many developers have known, and changing established workflows can be challenging. To address this, we developed a new workflow that balances familiarity, user experience, and alignment with the Nostr protocol: the branch-PR-merge model.

This model is implemented in ngit, which includes a Git plugin that allows users to engage without needing to learn new commands. Additionally, gitworkshop.dev offers a GitHub-like interface for interacting with PRs and issues. We encourage you to try them out using the quick start guide and share your feedback. You can also explore PRs and issues with gitplaza.

For those who prefer the patches-over-email workflow, you can still use that approach with Nostr through gitstr or the `ngit send` and `ngit list` commands, and explore patches with patch34.

The tools are now available to support the core collaboration challenge, but we are still at the beginning of the adoption curve.

Before we dive into the challenges—such as why the Git server setup can be jarring and the possibilities surrounding CI/CD—let’s take a moment to reflect on how we should approach the challenges ahead of us.

Philosophy

Here are some foundational principles I shared a few years ago:

- Let Git be Git

- Let Nostr be Nostr

- Learn from the successes of others

I’d like to add one more:

- Embrace anarchy and resist monolithic development.

Micro Clients FTW

Nostr celebrates simplicity, and we should strive to maintain that. Monolithic developments often lead to unnecessary complexity. Projects like gitworkshop.dev, which aim to cover various aspects of the code collaboration experience, should not stifle innovation.

Just yesterday, the launch of following.space demonstrated how vibe-coded micro clients can make a significant impact. They can be valuable on their own, shape the ecosystem, and help push large and widely used clients to implement features and ideas.

The primitives in NIP34 are straightforward, and if there are any barriers preventing the vibe-coding of a #GitViaNostr app in an afternoon, we should work to eliminate them.

Micro clients should lead the way and explore new workflows, experiences, and models of thinking.

Take kanbanstr.com. It provides excellent project management and organization features that work seamlessly with NIP34 primitives.

From kanban to code snippets, from CI/CD runners to SatShoot—may a thousand flowers bloom, and a thousand more after them.

Friction and Challenges

The Git Server

In #GitViaNostr, maintainers' branches (e.g., `master`) are hosted on a Git server. Here’s why this approach is beneficial:

- Follows the original Git vision and the "let Git be Git" philosophy.

- Super efficient, battle-tested, and compatible with all the ways people use Git (e.g., LFS, shallow cloning).

- Maintains compatibility with related systems without the need for plugins (e.g., for build and deployment).

- Only repository maintainers need write access.

In the original Git model, all users would need to add the Git server as a 'git remote.' However, with ngit, the Git server is hidden behind a Nostr remote, which enables:

- Hiding complexity from contributors and users, so that only maintainers need to know about the Git server component to start using #GitViaNostr.

- Letting maintainers swap Git servers by updating their announcement event, so that contributors and users using ngit automatically switch to the new one.
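The server swap described above can be sketched as follows. This assumes, based on my reading of NIP34, that a repository announcement (kind 30617) lists its Git servers in `clone` tags and that a newer announcement replaces an older one; the function names and the example URLs are hypothetical, and signature checks are omitted.

```python
REPO_ANNOUNCEMENT_KIND = 30617  # NIP34 repository announcement (assumed from the spec)


def latest_announcement(events):
    """Announcements are replaceable, so the most recent one wins."""
    return max(events, key=lambda e: e["created_at"])


def clone_urls(announcement):
    """Collect every Git server URL listed in `clone` tags, in order."""
    urls = []
    for tag in announcement["tags"]:
        if tag and tag[0] == "clone":
            urls.extend(tag[1:])
    return urls


# Hypothetical events: the maintainer moves from one server to two new ones.
old_event = {
    "kind": REPO_ANNOUNCEMENT_KIND,
    "created_at": 1700000000,
    "tags": [["d", "example-repo"], ["clone", "https://old-git.example/example-repo.git"]],
}
new_event = {
    "kind": REPO_ANNOUNCEMENT_KIND,
    "created_at": 1700000500,
    "tags": [
        ["d", "example-repo"],
        ["clone", "https://git-a.example/example-repo.git", "https://git-b.example/example-repo.git"],
    ],
}

current = latest_announcement([old_event, new_event])
print(clone_urls(current))
```

Contributors never edit a remote by hand: a client that resolves the Nostr remote this way picks up the new servers the next time it fetches the announcement.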

Challenges with the Git Server

While the Git server model has its advantages, it also presents several challenges:

- Initial Setup: When creating a new repository, maintainers must select a Git server, which can be a jarring experience. Most options come with bloated social collaboration features tied to a centralized PR model, often difficult or impossible to disable.

- Manual Configuration: New repositories require manual configuration, including adding new maintainers through a browser UI, which can be cumbersome and time-consuming.

- User Onboarding: Many Git servers require email sign-up or KYC (Know Your Customer) processes, which can be a significant turn-off for new users exploring a decentralized and permissionless alternative to GitHub.

Once the initial setup is complete, the system works well if a reliable Git server is chosen. However, this is a significant "if," as we have become accustomed to the excellent uptime and reliability of GitHub. Even professionally run alternatives like Codeberg can experience downtime, which is frustrating when CI/CD and deployment processes are affected. This problem is exacerbated when self-hosting.

Currently, most repositories on Nostr rely on GitHub as the Git server. While maintainers can change servers without disrupting their contributors, this reliance on a centralized service is not the decentralized dream we aspire to achieve.

Vision for the Git Server

The goal is to transform the Git server from a single point of truth and failure into a component similar to a Nostr relay.

Functionality Already in ngit to Support This

- State on Nostr: Store the state of branches and tags in a Nostr event, removing reliance on a single server. This validates that the data received has been signed by the maintainer, significantly reducing the trust requirement.

- Proxy to Multiple Git Servers: Proxy requests to all servers listed in the announcement event, adding redundancy and eliminating the need for any one server to match GitHub's reliability.
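A minimal sketch of how these two features combine on the client side, assuming the state event (kind 30618 in my reading of NIP34) carries one tag per ref in the form `["refs/heads/<branch>", "<commit id>"]`. The function names and sample data are hypothetical; in practice the advertised refs would come from something like `git ls-remote`, and the event signature would be verified first.

```python
REPO_STATE_KIND = 30618  # NIP34 repository state (assumed from the spec)


def signed_refs(state_event):
    """Map ref names to commit ids taken from the maintainer-signed state event."""
    return {
        tag[0]: tag[1]
        for tag in state_event["tags"]
        if len(tag) >= 2 and tag[0].startswith("refs/")
    }


def servers_matching_state(state_event, advertised):
    """Return the servers whose advertised refs agree with the signed state."""
    expected = signed_refs(state_event)
    return [
        url
        for url, refs in advertised.items()
        if all(refs.get(name) == tip for name, tip in expected.items())
    ]


# Hypothetical data: one server is up to date, the other serves a different tip.
state = {
    "kind": REPO_STATE_KIND,
    "tags": [["d", "example-repo"], ["refs/heads/master", "a" * 40], ["refs/tags/v1.0", "b" * 40]],
}
advertised = {
    "https://git-a.example/example-repo.git": {"refs/heads/master": "a" * 40, "refs/tags/v1.0": "b" * 40},
    "https://git-b.example/example-repo.git": {"refs/heads/master": "c" * 40, "refs/tags/v1.0": "b" * 40},
}

trusted = servers_matching_state(state, advertised)
print(trusted)
```

Because the signed state is the reference point, a stale or tampered server is simply skipped as long as at least one listed server matches.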

Implementation Requirements

To achieve this vision, the Nostr Git server implementation should:

- Implement the Git Smart HTTP Protocol without authentication (no SSH) and only accept pushes if the reference tip matches the latest state event.

- Avoid Bloat: There should be no user authentication, no database, no web UI, and no unnecessary features.

- Automatic Repository Management: Accept or reject new repositories automatically upon the first push, based on the content of the repository announcement event referenced in the URL path and its author.
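The push rule in the first requirement could look roughly like this on the server side. It is a sketch under stated assumptions: the state event has already been signature-checked, refs are stored as `[ref name, commit id]` tags, and the function name and sample values are hypothetical rather than taken from any existing implementation.

```python
def should_accept_push(state_event, maintainers, ref_name, pushed_tip):
    """Accept a push only if a maintainer's state event already names this tip for the ref."""
    if state_event["pubkey"] not in maintainers:
        return False  # state not authored by a listed maintainer
    for tag in state_event["tags"]:
        if len(tag) >= 2 and tag[0] == ref_name:
            return tag[1] == pushed_tip
    return False  # the ref is not part of the signed state


# Hypothetical maintainer key and state event.
maintainer = "ab" * 32
state_event = {"pubkey": maintainer, "tags": [["refs/heads/master", "a" * 40]]}

print(should_accept_push(state_event, {maintainer}, "refs/heads/master", "a" * 40))
print(should_accept_push(state_event, {maintainer}, "refs/heads/master", "f" * 40))
```

With this rule the server needs no user accounts: authorization comes entirely from the signed state event.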

Just as there are many free, paid, and self-hosted relays, there will be a variety of free, zero-step signup options, as well as self-hosted and paid solutions.

Some servers may use a Web of Trust (WoT) to filter out spam, while others might impose bandwidth or repository size limits for free tiers or whitelist specific npubs.

Additionally, some implementations could bundle relay and blossom server functionalities to unify the provision of repository data into a single service. These would likely only accept content related to the stored repositories rather than general social nostr content.

The potential role of CI/CD via Nostr DVMs could create incentives for a market of highly reliable Git servers that are free at the point of use.

This could make onboarding #GitViaNostr repositories as easy as entering a name and selecting from a multi-select list of Git server providers that announce via NIP89.

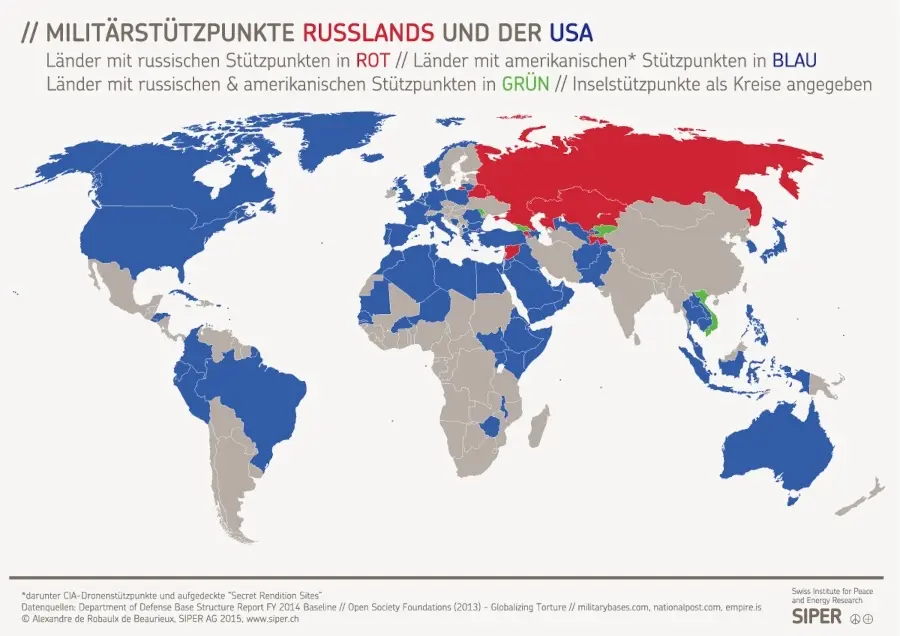

![image](https://image.nostr.build/badedc822995eb18b6d3c4bff0743b12b2e5ac018845ba498ce4aab0727caf6c.jpg)

Git Client in the Browser

Currently, many tasks are performed on a Git server web UI, such as:

- Browsing code, commits, branches, tags, etc.

- Creating and displaying permalinks to specific lines in commits.

- Merging PRs.

- Making small commits and PRs on-the-fly.

Just as nobody goes to the web UI of a relay (e.g., nos.lol) to interact with notes, nobody should need to go to a Git server to interact with repositories. We use the Nostr protocol to interact with Nostr relays, and we should use the Git protocol to interact with Git servers. The current situation is a product of the centralization of Git servers. Instead of being restricted to the view and experience designed by the server operator, users should be able to choose the user experience that works best for them from a range of clients.

To facilitate this, we need a library that lowers the barrier to entry for creating these experiences. This library should not require a full clone of every repository and should not depend on proprietary APIs. As a starting point, I propose wrapping the WASM-compiled libgit2 library for the web and creating useful functions, such as showing a file, that use clever flags to minimize bandwidth usage (e.g., shallow clone, blobless clone, etc.).

This approach would not only enhance clients like gitworkshop.dev but also bring forth a vision where Git servers simply run the Git protocol, making vibe coding Git experiences even better.
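The bandwidth-saving flags mentioned above can be illustrated with the ordinary `git` command line as a stand-in for the proposed WASM library. This sketch only builds the commands; the function names, URL, and paths are hypothetical, and it assumes the server supports partial clone filters.

```python
def minimal_clone_cmd(url, dest):
    """Shallow, blobless clone: one commit of history and no file contents up front."""
    return ["git", "clone", "--depth", "1", "--filter=blob:none", "--no-checkout", url, dest]


def show_file_cmd(dest, path, rev="HEAD"):
    """Print one file; in a blobless clone, git fetches just that blob on demand."""
    return ["git", "-C", dest, "show", f"{rev}:{path}"]


# Hypothetical repository URL and local directory.
clone_cmd = minimal_clone_cmd("https://git.example/example-repo.git", "example-repo")
show_cmd = show_file_cmd("example-repo", "README.md")
print(" ".join(clone_cmd))
print(" ".join(show_cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` to execute it. A browser library would expose the same idea as a "show file" call that transfers only the objects it needs.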

song

nostr:npub180cvv07tjdrrgpa0j7j7tmnyl2yr6yr7l8j4s3evf6u64th6gkwsyjh6w6 created song with a complementary vision that has shaped how I see the role of the Git server. It's a self-hosted, Nostr-permissioned Git server with a relay baked in. It's currently a WIP, and there are some compatibility issues with ngit that we need to work out.

We collaborated on the nostr-permissioning approach now reflected in nip34.

I'm really excited to see how this space evolves.

CI/CD

Most projects require CI/CD, and while this is often bundled with Git hosting solutions, it is not yet smoothly integrated into #GitViaNostr. There are many loosely coupled options, such as Jenkins, Travis, CircleCI, etc., that could be integrated with Nostr.

However, the more exciting prospect is to use DVMs (Data Vending Machines).

DVMs for CI/CD

Nostr Data Vending Machines (DVMs) can provide a marketplace of CI/CD task runners with Cashu for micro payments.

There are various trust levels in CI/CD tasks:

- Tasks with no secrets, e.g., tests.

- Tasks using updatable secrets, e.g., API keys.

- Unverifiable builds and steps that sign with Android, Nostr, or PGP keys.

DVMs allow tasks to be kicked off with specific providers using a Cashu token as payment.

It might be suitable for some high-compute and easily verifiable tasks to be run by the cheapest available providers. Medium trust tasks could be run by providers with a good reputation, while high trust tasks could be run on self-hosted runners.
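The trust-based routing described above might be sketched like this. Everything here is illustrative: the trust level names, the provider fields, and the 0.9 reputation threshold are assumptions for the example, not part of any DVM specification.

```python
REPUTATION_THRESHOLD = 0.9  # hypothetical cut-off for "good reputation"


def pick_runner(task_trust, providers):
    """Choose the cheapest provider that is acceptable for the task's trust level."""
    if task_trust == "signing-keys":
        # High trust: only self-hosted runners see signing keys.
        candidates = [p for p in providers if p["self_hosted"]]
    elif task_trust == "updatable-secrets":
        # Medium trust: self-hosted or well-reputed providers.
        candidates = [p for p in providers if p["self_hosted"] or p["reputation"] >= REPUTATION_THRESHOLD]
    else:
        # No secrets: any provider will do, so price decides.
        candidates = list(providers)
    if not candidates:
        return None
    return min(candidates, key=lambda p: p["cost_sats"])


# Hypothetical providers.
providers = [
    {"name": "cheap", "cost_sats": 5, "reputation": 0.4, "self_hosted": False},
    {"name": "reputable", "cost_sats": 20, "reputation": 0.95, "self_hosted": False},
    {"name": "own-runner", "cost_sats": 50, "reputation": 1.0, "self_hosted": True},
]

for level in ("none", "updatable-secrets", "signing-keys"):
    print(level, "->", pick_runner(level, providers)["name"])
```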

Job requests, status, and results all get published to Nostr for display in Git-focused Nostr clients.

Jobs could be triggered manually, or self-hosted runners could be configured to watch a Nostr repository and kick off jobs using their own runners without payment.

But I'm most excited about the prospect of Watcher Agents.

CI/CD Watcher Agents

AI agents empowered with a NIP60 Cashu wallet can run tasks based on activity, such as a push to master or a new PR, using the most suitable available DVM runner that meets the user's criteria. To keep a watcher running, anyone (users, maintainers, or others interested in helping the project) could top up its NIP60 Cashu wallet; otherwise, the watcher turns off when the funds run out.

As alluded to earlier, part of building a reputation as a CI/CD provider could involve running reliable hosting (Git server, relay, and blossom server) for all FOSS Nostr Git repositories.

This provides a sustainable revenue model for hosting providers and creates incentives for many free-at-the-point-of-use hosting providers. This, in turn, would allow one-click Nostr repository creation workflows, instantly hosted by many different providers.
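The funding behaviour of a Watcher Agent can be modelled with a toy simulation. This is only a sketch: the class, its fields, and the trigger names are hypothetical, the balance is a plain integer rather than a real NIP60 wallet, and no DVM request is actually sent.

```python
class WatcherAgent:
    """Toy model: runs a paid job per repository activity until funds run out."""

    TRIGGERS = {"push", "pull_request"}

    def __init__(self, balance_sats, job_cost_sats):
        self.balance_sats = balance_sats
        self.job_cost_sats = job_cost_sats

    @property
    def active(self):
        # The watcher is effectively off once it cannot pay for another job.
        return self.balance_sats >= self.job_cost_sats

    def top_up(self, sats):
        """Anyone can add funds to keep the watcher running."""
        self.balance_sats += sats

    def handle(self, activity):
        """Pay for and record a job if the activity is a trigger and funds allow."""
        if activity not in self.TRIGGERS or not self.active:
            return None
        self.balance_sats -= self.job_cost_sats
        return {"trigger": activity, "paid_sats": self.job_cost_sats}


agent = WatcherAgent(balance_sats=25, job_cost_sats=10)
results = [agent.handle("push"), agent.handle("pull_request"), agent.handle("push")]
agent.top_up(10)
resumed = agent.handle("push")
print(results, resumed, agent.balance_sats)
```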

Progress to Date

nostr:npub1hw6amg8p24ne08c9gdq8hhpqx0t0pwanpae9z25crn7m9uy7yarse465gr and nostr:npub16ux4qzg4qjue95vr3q327fzata4n594c9kgh4jmeyn80v8k54nhqg6lra7 have been working on a runner that uses GitHub Actions YAML syntax (using act) for the dvm-cicd-runner and takes Cashu payment. You can see example runs on GitWorkshop. It currently takes testnuts, doesn't give any change, and the schema will likely change.

Note: The actions tab on GitWorkshop is currently available on all repositories if you turn on experimental mode (under settings in the user menu).

It's a work in progress, and we expect the format and schema to evolve.

Easy Web App Deployment

For those disappointed not to find a 'Nostr' button in Vercel's menu for importing a Git repository: take heart, they made it easy. There is a Vercel CLI that can easily be called in CI/CD jobs to kick off deployments. Not all managed solutions for web app deployment (e.g., Netlify) make it that easy.

[Image: vercel.com_import_options.png]

Many More Opportunities

Large Patches via Blossom

I would be remiss not to mention the large patch problem. Some patches are too big to fit into Nostr events. Blossom is perfect for this, as it allows these larger patches to be included in a blossom file and referenced in a new patch kind.
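One way the inline-versus-Blossom decision could work is sketched below. The size limit, the returned structure, and the server URL are hypothetical, and the "new patch kind" does not exist yet; the only property relied on is that Blossom addresses blobs by the SHA-256 hash of their content.

```python
import hashlib

MAX_INLINE_BYTES = 60_000  # hypothetical event size budget; real relay limits vary


def patch_reference(patch_bytes, blossom_server):
    """Inline small patches; reference large ones by their SHA-256 on a Blossom server."""
    if len(patch_bytes) <= MAX_INLINE_BYTES:
        return {"inline": True, "content": patch_bytes.decode("utf-8")}
    digest = hashlib.sha256(patch_bytes).hexdigest()
    return {
        "inline": False,
        "sha256": digest,
        # The blob is retrievable at <server>/<sha256>.
        "url": f"{blossom_server.rstrip('/')}/{digest}",
    }


small = patch_reference(b"tiny patch\n", "https://blossom.example")
large_bytes = b"x" * (MAX_INLINE_BYTES + 1)
large = patch_reference(large_bytes, "https://blossom.example/")
print(small["inline"], large["inline"], large["url"])
```

Because the reference is a content hash, a client can verify the downloaded patch regardless of which Blossom server delivered it.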

Enhancing the #GitViaNostr Experience

Beyond the large patch issue, there are numerous opportunities to enhance the #GitViaNostr ecosystem. We can focus on improving browsing, discovery, social and notifications. Receiving notifications on daily driver Nostr apps is one of the killer features of Nostr. However, we must ensure that Git-related notifications are easily reviewable, so we don’t miss any critical updates.

We need to develop tools that cater to our curiosity—tools that enable us to discover and follow projects, engage in discussions that pique our interest, and stay informed about developments relevant to our work.

Additionally, we should not overlook the importance of robust search capabilities and tools that facilitate migrations.

Concluding Thoughts

The design space is vast. It's an exciting time to be working on freedom tech. I encourage everyone to contribute their ideas and creativity and get vibe-coding!

I welcome your honest feedback on this vision and any suggestions you might have. Your insights are invaluable as we collaborate to shape the future of #GitViaNostr. Onward.

Contributions

To conclude, I want to acknowledge some of the individuals who have made recent code contributions related to #GitViaNostr:

nostr:npub180cvv07tjdrrgpa0j7j7tmnyl2yr6yr7l8j4s3evf6u64th6gkwsyjh6w6 (gitstr, song, patch34), nostr:npub1useke4f9maul5nf67dj0m9sq6jcsmnjzzk4ycvldwl4qss35fvgqjdk5ks (gitplaza), nostr:npub1elta7cneng3w8p9y4dw633qzdjr4kyvaparuyuttyrx6e8xp7xnq32cume (ngit contributions, git-remote-blossom), nostr:npub16p8v7varqwjes5hak6q7mz6pygqm4pwc6gve4mrned3xs8tz42gq7kfhdw (SatShoot, Flotilla-Budabit), nostr:npub1ehhfg09mr8z34wz85ek46a6rww4f7c7jsujxhdvmpqnl5hnrwsqq2szjqv (Flotilla-Budabit, Nostr Git Extension), nostr:npub1ahaz04ya9tehace3uy39hdhdryfvdkve9qdndkqp3tvehs6h8s5slq45hy (gnostr and experiments), nostr:npub1uplxcy63up7gx7cladkrvfqh834n7ylyp46l3e8t660l7peec8rsd2sfek (git-remote-nostr), and others.

Project Management: nostr:npub1ltx67888tz7lqnxlrg06x234vjnq349tcfyp52r0lstclp548mcqnuz40t (kanbanstr)

Code Snippets: nostr:npub1ygzj9skr9val9yqxkf67yf9jshtyhvvl0x76jp5er09nsc0p3j6qr260k2 (nodebin.io), nostr:npub1r0rs5q2gk0e3dk3nlc7gnu378ec6cnlenqp8a3cjhyzu6f8k5sgs4sq9ac (snipsnip.dev)

CI/CD: nostr:npub16ux4qzg4qjue95vr3q327fzata4n594c9kgh4jmeyn80v8k54nhqg6lra7, nostr:npub1hw6amg8p24ne08c9gdq8hhpqx0t0pwanpae9z25crn7m9uy7yarse465gr

And nostr:npub1c03rad0r6q833vh57kyd3ndu2jry30nkr0wepqfpsm05vq7he25slryrnw, nostr:npub1qqqqqq2stely3ynsgm5mh2nj3v0nk5gjyl3zqrzh34hxhvx806usxmln03, and nostr:npub1l5sga6xg72phsz5422ykujprejwud075ggrr3z2hwyrfgr7eylqstegx9z for their testing, feedback, ideas and encouragement.

Thank you for your support and collaboration! Let me know if I've missed you.

-

@ 6be5cc06:5259daf0

2025-04-28 01:05:49

I acknowledge that God, and God alone, is the legitimate sovereign over all things. No man, no institution, no parliament has the authority to usurp what belongs to the King of kings. The modern State, with its totalizing pretension, is a blasphemous farce before the throne of Christ. I accept no other lord.

The Law that guides me is not the one dictated by bureaucrats, but the one engraved by God in human nature itself. Reason, when illuminated by faith, is sufficient to discern what is just. I reject the arbitrary laws that claim to legitimize theft, murder, or slavery in the name of order. Justice is born not of decree, but of truth.

I firmly believe in private property as an extension of the person. What is the fruit of my work, my creativity, my dedication, and the gifts granted to me by God belongs to me by natural right. No one can legitimately take what is mine without my consent. Every tax is an aggression; every expropriation, a theft. I defend economic freedom not out of idolatry of the market, but because freedom is a necessary condition for virtue.

I take the Non-Aggression Principle (NAP) as the ethical minimum I must respect. I will not initiate the use of force against anyone, nor against their property. I demand the same of everyone. But I know this is not enough. The NAP delimits what I must not do; it does not teach me what I must be. Outer freedom is good only if there is inner freedom. The market may be free, but if the soul is enslaved by vice, collapse will be inevitable.

That is why a negative ethic is not enough for me. I believe a just society needs positive values: honor, responsibility, compassion, respect, fidelity to the truth. Without them, even a society that formally respects individual rights will rot from within. A people that loves profit but despises truth, that celebrates freedom but forgets justice, is preparing itself to be dominated. It will trade one visible despot for a thousand invisible tyrannies: hedonism, consumerism, lies, fear.

I do not accept the false charity done with money taken by force. True generosity is born of a free heart, not of institutional coercion. Forcing someone to help their neighbor destroys both freedom and virtue. There is merit only where there is choice. Charity born of love is redemptive; charity born of the tax office is propaganda.

The modern State is an idol. It promises security but delivers servitude. It promises justice but delivers privileges. It disguises oppression in technical, legal, and democratic language. But behind its masks I see only the old serpent: a parasite that feeds on the labor of others and manipulates consciences to perpetuate itself.

To resist is not merely a right; it is a duty. To obey God rather than men: that is my rule. Power turns against the truth, but my loyalty belongs to the one who created heaven and earth. Tyranny is not fought with another tyrant, but with the firm and peaceful disobedience of those who love justice.

I do not believe in utopias. I desire a natural, organic order rooted in voluntarism. A society built from the bottom up: from the family, the local community, tradition, and faith. I do not want a machine that plans other people's lives, but a fabric of voluntary relationships where freedom flourishes in the shadow of the cross.

I do desire the social reign of Christ. Not by imposition, but by conviction. May He reign in hearts, in families, in the streets, and in contracts. May faith guide reason and reason illuminate life. May freedom be a means to holiness, not an end in itself. And may we, free from the yoke of Leviathan, be servants of the Lord alone.

-

@ a39d19ec:3d88f61e

2025-04-22 12:44:42

The debate about migration, border security, and deportations is mostly conducted emotionally in Germany. Anyone who demands that illegal immigrants be deported is often accused of racism. Yet this accusation is not only factually unfounded, it turns reality on its head: it is precisely those who suspect a racist motivation behind every demand for legal certainty who themselves judge primarily by skin color, origin, or nationality.

The Law Stands Above Emotions

Germany is a state governed by the rule of law. That means rules cannot be interpreted according to gut feeling or the political mood, but must rest on clear statutory foundations. One of these principles is anchored in Article 16a of the Basic Law (Grundgesetz). It states:

“Paragraph 1 [right of asylum] may not be invoked by a person who enters from a member state of the European Communities or from another third country in which application of the Convention Relating to the Status of Refugees and of the European Convention on Human Rights is assured.”

This means that anyone who enters Germany via safe third countries has no claim to asylum. Anyone who stays nonetheless is in the country illegally and is subject to the applicable rules on return. The demand for deportations is therefore nothing other than the demand that law and statute be observed.

The Inversion of the Concept of Racism

Anyone who maintains that German asylum and residence law should be strictly enforced, and who does not distinguish by origin or skin color in doing so, is acting in a value-neutral way. Those, however, who see a racist undertone in such a demand for the rule of law are projecting their own patterns of thought onto others: they assume that the debate is conducted exclusively along ethnic, racist, or national criteria, and that is precisely a racist way of thinking.

Someone who criticizes illegal immigration does so not because he is interested in people's origin, but because he respects the rule of law. By contrast, someone who scents racism behind this criticism evidently sees first and foremost the "race" or origin of the people concerned and reduces them to it.

Financial Burden Rather Than Ideological Debate

Alongside the legal component there is also an economic one. The German welfare state is based on a principle of solidarity: citizens pay into the system in order to support one another in difficult times. This prosperity was built up over generations by those who have lived here for a long time. The priority is therefore to distribute the available funds first among those who contribute to maintaining this system through taxes, social security contributions, and work, not among those who enter the system through illegal entry and without an economic contribution of their own.

This is not an ideological question but a purely economic consideration. A social system can function sustainably only if it is not burdened without limit. If Germany had no clear rules on immigration and deportation, this would inevitably overload the welfare state, with negative consequences for everyone.

Social Patriotism

Another important aspect is protecting the work of the generations who laboriously rebuilt Germany after the Second World War. While it is often stressed that Germans may morally claim no inheritance from the period before 1945, other than responsibility for the Holocaust, it is all the more important to respect the new inheritance after 1945, which rests on diligence, discipline, and hard work. The reconstruction was a collective achievement of German people, whose fruits must not be distributed without a second thought but should primarily benefit those who helped create this foundation or have carried it across generations.

The Rule of Law Is Not Negotiable

Anyone who advocates a consistent deportation practice does so not out of racist motives, but out of respect for the rule of law and the economic foundations of the country. The accusation of racism in this context is therefore not only false, but exposes a selective perception along racial characteristics in those who raise it.

-

@ 52b4a076:e7fad8bd

2025-04-28 00:48:57

I have recently been building NFDB, a new relay DB. This post is meant as a short overview.

Regular relays have challenges

Current relay software has significant challenges, which I have experienced when hosting Nostr.land:

- Scalability is only supported by adding full replicas, which does not scale to large relays.

- Most relays use slow databases and are not optimized for large-scale usage.

- Search is near-impossible to implement on standard relays.

- Privacy features such as NIP-42 are lacking.

- Regular DB maintenance tasks on normal relays require extended downtime.

- Fault tolerance, if implemented at all, relies on a load balancer, which is limited.

- Personalization and advanced filtering are not possible.

- Local caching is not supported.

NFDB: A scalable database for large relays

NFDB is a new database for medium- to large-scale relays, built on FoundationDB, that provides:

- Near-unlimited scalability

- Extended fault tolerance

- Instant loading

- Better search

- Better personalization

- and more.

Search

NFDB has extended search capabilities including:

- Semantic search: Search for meaning, not words.

- Interest-based search: Highlight content you care about.

- Multi-faceted queries: Easily filter by topic, author group, keywords, and more at the same time.

- Wide support for event kinds, including users, articles, etc.

Personalization

NFDB allows significant personalization:

- Customized algorithms: Be your own algorithm.

- Spam filtering: Filter content to your WoT, and use advanced spam filters.

- Topic mutes: Mute topics, not keywords.

- Media filtering: With Nostr.build, you will be able to filter NSFW and other content.

- Low data mode: Block notes that use high amounts of cellular data.

- and more.

Other

NFDB has support for many other features such as:

- NIP-42: Protect your privacy with private drafts and DMs.

- Microrelays: Easily deploy your own personal microrelay.

- Containers: Dedicated, fast storage for discoverability events such as relay lists.

Calcite: A local microrelay database

Calcite is a lightweight, local version of NFDB meant for microrelays and caching, designed to support thousands of personal microrelays.

Calcite HA is an additional layer that allows live migration and relay failover in under 30 seconds, providing higher availability compared to current relays with greater simplicity. Calcite HA is enabled in all Calcite deployments.

For zero-downtime, NFDB is recommended.

Noswhere SmartCache

Relays are fixed in one location, but users can be anywhere.

Noswhere SmartCache is a CDN for relays that dynamically caches data on edge servers closest to you, allowing:

- Multiple regions around the world

- Improved throughput and performance

- Faster loading times

routerd

`routerd` is a custom load balancer optimized for Nostr relays, integrated with SmartCache.

`routerd` is specifically integrated with NFDB and Calcite HA to provide fast failover and high performance.

Ending notes

NFDB is planned to be deployed to Nostr.land in the coming weeks.

A lot more is to come. 👀

-

@ a39d19ec:3d88f61e

2025-03-18 17:16:50

Now that the German federal regime has decided on the ruin of Germany, which will very probably be "financed" with the tool of money printing, so many thoughts on the expansion of the money supply came to me that I have written them down for once.

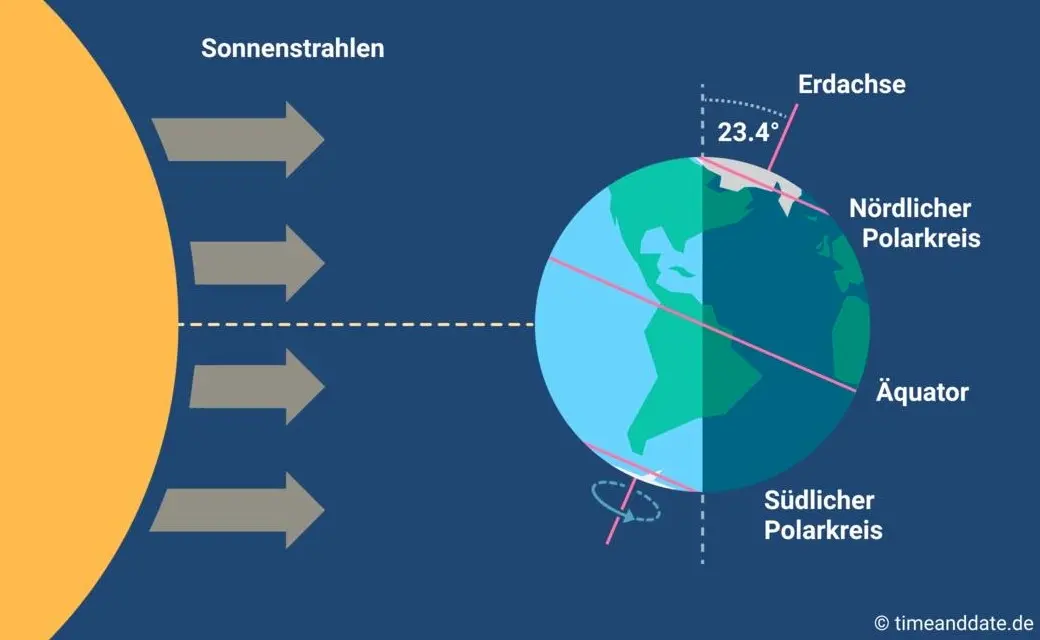

From a classical economic point of view, expanding the money supply always leads to price increases, because more money in circulation meets a limited quantity of goods. This can be analyzed in several steps:

1. Quantity Theory of Money

The classical equation of the quantity theory of money is:

M • V = P • Y

where:

- M is the money supply,

- V is the velocity of money,

- P is the price level,

- Y is real economic output (GDP).

If M rises while V and Y remain constant, P must rise, which means inflation.
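As a worked illustration of the relation above (the 10% increase is an assumed figure, not taken from the article): solving the equation for P and holding V and Y fixed shows that the price level scales in proportion to the money supply.

```latex
P = \frac{M \cdot V}{Y}
\quad\Longrightarrow\quad
\frac{P_1}{P_0} = \frac{M_1 \cdot V / Y}{M_0 \cdot V / Y} = \frac{M_1}{M_0},
\qquad
M_1 = 1.10\, M_0 \;\Rightarrow\; P_1 = 1.10\, P_0 .
```

Under these assumptions, a 10% larger money supply means a 10% higher price level; if Y grows or V falls at the same time, the effect on P is correspondingly smaller.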

2. The Quantity of Goods Remains Limited

The quantity of goods and services actually produced usually grows only slowly compared with the expansion of the money supply. If the money supply rises faster than the quantity of goods produced, more money is available for the same quantity of goods, and prices rise.

3. Expectation Effects and Speculation

When businesses and households expect more money to be in circulation because central planners wanted it so, they can anticipate rising prices. Businesses raise their prices in advance, and workers demand higher wages. This can trigger a self-reinforcing spiral.

4. International Perspective

An increased money supply can devalue the currency if other countries keep their monetary policy stable. A weaker currency makes imports more expensive, which in turn drives price increases.

5. Criticism of the Pure Money-Supply Theory

For the sake of completeness, it must be mentioned that most modern economists in the service of the state argue that inflation depends not only on the money supply but also on the demand for money (for example, in an economic crisis). Nevertheless, historical experience shows that an uncontrolled expansion of the money supply always leads to price increases in the long run, as in the hyperinflation of the Weimar Republic or in Zimbabwe.

-

@ 0d97beae:c5274a14

2025-01-11 16:52:08

This article hopes to complement the article by Lyn Alden on YouTube: https://www.youtube.com/watch?v=jk_HWmmwiAs

The reason why we have broken money

Before the invention of key technologies such as the printing press and electronic communications, including ones as early as Morse code transmitters, gold had won the competition for the best medium of money around the world.

In fact, it was not just gold by itself that became money: rulers and world leaders developed coins to help the economy grow. Gold nuggets were not as easy to transact with as coins with specific imprints and denominated sizes.

However, these modern technologies created massive efficiencies that allowed us to communicate and perform services much faster, yet the medium of money could not benefit from these advancements. Gold was heavy, slow, and expensive to move globally, even though requesting and performing services globally no longer had this limitation.

Banks took the initiative and created derivatives of gold: paper and electronic money. These new currencies allowed the economy to continue to grow and evolve, but not without a dark side. Today, no currency is denominated in gold at all; money is backed by nothing, and its inherent value, the paper it is printed on, is worthless too.

Banks and governments eventually transitioned from a money derivative to a system of debt that could be co-opted and controlled for political and personal reasons. Our money today is broken and is the cause of more expensive, poorer-quality goods in the economy, a larger and ever-growing wealth gap, and many of the follow-on problems that have come with it.

Bitcoin overcomes the "transfer of hard money" problem

Just as gold coins were created by man, Bitcoin too is a technology created by man. Bitcoin, however, is a much more profound invention, possibly more of a discovery than an invention. Bitcoin has proven to be unbreakable and incorruptible, and has upheld its ability to keep its units scarce, inalienable and counterfeit-proof through the nature of its own design.

Since Bitcoin is a digital technology, it can be transferred across international borders almost as quickly as information itself. It therefore severely reduces the need for a derivative to represent money in order to facilitate digital trade. This means that as the currency we use today continues to fare poorly for many people, bitcoin will continue to stand out as hard money that just so happens to work as well, functionally, alongside it.

Bitcoin will also always be available to anyone who wishes to earn it directly; even China is unable to restrict its citizens from accessing it. The dollar has traditionally become the currency for people who discover that their local currency is unsustainable. Even where the dollar is illegal to use, it is simply used privately and unofficially. However, because bitcoin does not require you to trade it at a bank in order to use it across borders and across the web, Bitcoin will continue to be a viable escape hatch until we one day hit some critical mass where the world has simply adopted Bitcoin globally and everyone else must adopt it to survive.

Bitcoin has not yet proven that it can support the world at scale. However, it can only be tested through real adoption, and just as gold coins were developed to help gold scale, tools will be developed to help overcome problems as they arise; ideally without the need for another derivative, but if necessary, hopefully with one that is more neutral and less corruptible than the derivatives used to represent gold.

Bitcoin blurs the line between commodity and technology

Bitcoin is a technology: a tool that requires human involvement to function. Surprisingly, however, it does not allow for any concentration of power. Anyone can help to facilitate Bitcoin's operations, but no one can take control of its behaviour, its reach, or its prioritisation, as it operates autonomously based on a pre-determined, neutral set of rules.

At the same time, its built-in incentive mechanism ensures that people do not have to operate Bitcoin out of the goodness of their hearts. Even though the system cannot be co-opted as a whole, it will not stop operating while there are people motivated to trade their time and resources to keep it running and earn from others' transaction fees. Although it requires humans to operate it, it remains both neutral and sustainable.

Never before have we developed or discovered a technology that could not be co-opted and used by one person or faction against another. Due to this nature, Bitcoin's units are often described as a commodity; they cannot be usurped or virtually cloned, and they cannot be affected by political biases.

The dangers of derivatives

A derivative is something created, designed or developed to represent another thing in order to solve a particular complication or problem. For example, paper and electronic money were once derivatives of gold.

In the case of Bitcoin, if you cannot link your units of bitcoin to an "address" that you personally hold a cryptographically secure key to, then you very likely have a derivative of bitcoin, not bitcoin itself. If you buy bitcoin on an online exchange and do not withdraw the bitcoin to a wallet that you control, then you legally own an electronic derivative of bitcoin.

Bitcoin is a new technology. It has a learning curve, and it will take time for humanity to learn collectively how to comprehend, authenticate and take control of bitcoin. Having said that, many people all over the world are already using and relying on Bitcoin natively. Many others will first need to feel a need or desire for a neutral money like bitcoin, and to have been burned by derivatives of it, before they start to understand the difference between the two. Eventually, it will become an essential part of what we regard as common sense.

Learn for yourself

If you wish to learn more about how to handle bitcoin and avoid derivatives, you can start by searching online for tutorials about "Bitcoin self custody".

There are many options available, some more practical for you and some more practical for others. Don't spend too much time trying to find the perfect solution; practice and learn. You may make mistakes, so be careful not to experiment with large amounts of your bitcoin as you explore new ideas and technologies. This is similar to learning anything, like riding a bicycle: you are sure to fall a few times and scuff the frame, so don't buy a high-performance racing bike while you're still learning to balance.

-

@ c631e267:c2b78d3e

2025-04-25 20:06:24

Truth wounds more deeply than any insult.
Marquis de Sade

Never say «terrorist B.», «moron H.», «corrupt scumbag S.» or «free-speech hater F.», and refrain from memes, because such things could be construed as insult or defamation and have legal consequences. Ms. M.-A. S.-Z. is not to be trifled with in this respect either; she is among the top filers of criminal complaints.

«Insulting politicians» was introduced as a criminal offence in Germany in 2021 as part of the fight against «right-wing extremism and hate crime», back then still under the Merkel government. The law does not spell out the distinction between bad incitement and good incitement; nevertheless it is common practice, as the title almost suggests.

So today, as a politician, you may call the Tesla a «Nazi car» and explicitly relate this to company founder Elon Musk and his «far-right positions», which you do not even have to substantiate. [1] You may draw protests, but primarily because of the «well-paid, permanent jobs» in Brandenburg. Berlin senator Cansel Kiziltepe has nevertheless apparently deleted her tweet in the meantime.

That freedom of opinion and of the press in the Federal Republic is no longer in particularly good shape is by now feared abroad as well. The case of the journalist David Bendels, who was recently given a seven-month suspended prison sentence over a Faeser meme, caused outrage in various media. Die Welt hid its criticism, titled «A verdict as if from a dictatorship», behind a paywall.

Unkind comments that might be punishable nowadays would also occur to me on several other topics and actors. One candidate would be the German federal health minister (yes, he really still is). While things are moving in this field in the USA and Robert F. Kennedy Jr., for example, wants the health agency (CDC) to stop recommending Covid vaccinations for children, Karl Lauterbach above all wants to keep the Corona edifice of lies from collapsing.

«I never believed that the vaccinations were free of side effects», Lauterbach recently told ZDF journalist Sarah Tacke. That stands in stark contradiction to his earlier claim that the gene injections had no side effects. He thereby exposes himself as a liar. The label is absolutely justified; this man should bear no political responsibility whatsoever, and the conduct calls for legal review. Unfortunately, the judiciary is busy elsewhere and, moreover, does not have a clean record itself.

On top of that, the minister fought for a general vaccine mandate. In doing so he invoked the closing of a «vaccination gap», as the World Health Organization, which is in financial difficulty «because of Trump», does to this day. The WHO is currently promoting its «European Immunization Week», in which, interestingly, Covid is hardly mentioned any more.

Some would probably call Nir Shaviv a «climate denier»; that, after all, is not punishable. The astrophysicist rejects the claim of a climate crisis. According to his research, at least half of global warming is attributable not to human emissions but to changes in solar behaviour.

That may also suit the «climate hysterics» of the British government, who have just announced experiments to dim the sun. Producers of lab-grown meat or operators of insect farms, on the other hand, would presumably prefer the story of deadly CO2. It would suit them better if the responsible citizen of the Earth had to change his behaviour thoroughly.

In our completely upside-down world, in which practically any statement outside the approved narratives can potentially be punishable, it almost takes courage to speak openly about things. In the «best Germany of all time», only 40 percent of people last year still believed they could express their opinion freely. That is an indictment, and things do not exactly look like improving. All the more important to push back against it.

[Cover image: Pixabay]

--- Sources: ---

[1] For orientation, at least a few pointers to the Nazi-era past of German car manufacturers:

- Volkswagen

- Porsche

- Daimler-Benz

- BMW

- Audi

- Opel

- Today: «Car plants for armaments? Rheinmetall examines takeovers»

This article was written with the Pareto client and first appeared on Transition News.

-

@ 30ceb64e:7f08bdf5

2025-04-26 20:33:30

Status: Draft

Author: TheWildHustle

Abstract

This NIP defines a framework for storing and sharing health and fitness profile data on Nostr. It establishes a set of standardized event kinds for individual health metrics, allowing applications to selectively access specific health information while preserving user control and privacy.

This framework currently includes:

- NIP-101h.1 Weight using kind 1351

- NIP-101h.2 Height using kind 1352

- NIP-101h.3 Age using kind 1353

- NIP-101h.4 Gender using kind 1354

- NIP-101h.5 Fitness Level using kind 1355

Motivation

I want to build and support an ecosystem of health- and fitness-related Nostr clients that can share and use a set of specific, interoperable health metrics. The design goals are:

- Selective access - Applications can access only the data they need

- User control - Users can choose which metrics to share

- Interoperability - Different health applications can share data

- Privacy - Sensitive health information can be managed independently

Specification

Kind Number Range

Health profile metrics use the kind number range 1351-1399:

| Kind      | Metric                             |
| --------- | ---------------------------------- |
| 1351      | Weight                             |
| 1352      | Height                             |
| 1353      | Age                                |
| 1354      | Gender                             |
| 1355      | Fitness Level                      |
| 1356-1399 | Reserved for future health metrics |

Common Structure

All health metric events SHOULD follow these guidelines:

- The content field contains the primary value of the metric

- Required tags:

  - ['t', 'health'] - For categorizing as health data

  - ['t', metric-specific-tag] - For identifying the specific metric

  - ['unit', unit-of-measurement] - When applicable

- Optional tags:

  - ['converted_value', value, unit] - For providing alternative unit measurements

  - ['timestamp', ISO8601-date] - When the metric was measured

  - ['source', application-name] - The source of the measurement
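The common structure above can be sketched as a small helper that assembles an unsigned event. This is only an illustration under stated assumptions: the function name `build_health_event`, its parameters and the `example-app` source value are hypothetical, and signing, `id`, `pubkey` and `created_at` handling are deliberately left out.

```python
import json
from datetime import datetime, timezone


def build_health_event(kind, content, metric_tag, unit=None,
                       source=None, measured_at=None):
    """Assemble an unsigned health metric event (hypothetical helper)."""
    # The spec reserves kinds 1351-1399 for health profile metrics.
    if not 1351 <= kind <= 1399:
        raise ValueError("health profile kinds are expected in 1351-1399")

    # Required tags: the generic health tag plus the metric-specific tag.
    tags = [["t", "health"], ["t", metric_tag]]
    if unit is not None:
        tags.append(["unit", unit])            # required "when applicable"
    if measured_at is not None:
        tags.append(["timestamp", measured_at.isoformat()])  # optional
    if source is not None:
        tags.append(["source", source])        # optional

    # The content field carries the primary value of the metric as a string.
    return {"kind": kind, "content": str(content), "tags": tags}


event = build_health_event(
    1351, 70, "weight", unit="kg", source="example-app",
    measured_at=datetime(2025, 4, 26, tzinfo=timezone.utc),
)
print(json.dumps(event, indent=2))
```

A real client would still have to add the remaining Nostr event fields and sign the event before publishing it.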

Unit Handling

Health metrics often have multiple ways to be measured. To ensure interoperability:

- Where multiple units are possible, one standard unit SHOULD be chosen as canonical

- When using non-standard units, a converted_value tag SHOULD be included with the canonical unit

- Both the original and converted values should be provided for maximum compatibility
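As a sketch of the unit-handling rule, the helper below builds the tags for a weight value and adds a converted_value tag when the value is given in pounds. It assumes that kilograms are the canonical unit for weight, which the text does not state explicitly; the name `weight_tags` is hypothetical.

```python
KG_PER_LB = 0.45359237  # exact definition of the international pound


def weight_tags(value, unit):
    """Return tags for a weight value (hypothetical helper).

    Assumes 'kg' is the canonical unit and 'lb' the non-standard one.
    """
    if unit not in ("kg", "lb"):
        raise ValueError("unit must be 'kg' or 'lb'")
    tags = [["unit", unit], ["t", "health"], ["t", "weight"]]
    if unit == "lb":
        # Keep the original value in content and add the canonical one here.
        kilograms = value * KG_PER_LB
        tags.append(["converted_value", f"{kilograms:.2f}", "kg"])
    return tags


pounds_tags = weight_tags(154, "lb")
kilograms_tags = weight_tags(70, "kg")
print(pounds_tags)
```

A reading client could then prefer the converted_value tag when present and fall back to the content plus unit tag otherwise.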

Client Implementation Guidelines

Clients implementing this NIP SHOULD:

- Allow users to explicitly choose which metrics to publish

- Support reading health metrics from other users when appropriate permissions exist

- Support updating metrics with new values over time

- Preserve tags they don't understand for future compatibility

- Support at least the canonical unit for each metric

Extensions

New health metrics can be proposed as extensions to this NIP using the format:

- NIP-101h.X where X is the metric number

Each extension MUST specify:

- A unique kind number in the range 1351-1399

- The content format and meaning

- Required and optional tags

- Examples of valid events

Privacy Considerations

Health data is sensitive personal information. Clients implementing this NIP SHOULD:

- Make it clear to users when health data is being published

- Consider incorporating NIP-44 encryption for sensitive metrics

- Allow users to selectively share metrics with specific individuals

- Provide easy ways to delete previously published health data

NIP-101h.1: Weight

Description

This NIP defines the format for storing and sharing weight data on Nostr.

Event Kind: 1351

Content

The content field MUST contain the numeric weight value as a string.

Required Tags

- ['unit', 'kg' or 'lb'] - Unit of measurement

- ['t', 'health'] - Categorization tag

- ['t', 'weight'] - Specific metric tag

Optional Tags

- ['converted_value', value, unit] - Provides the weight in alternative units for interoperability

- ['timestamp', ISO8601 date] - When the weight was measured

Examples

```json
{
  "kind": 1351,
  "content": "70",
  "tags": [
    ["unit", "kg"],
    ["t", "health"],
    ["t", "weight"]
  ]
}
```

```json
{
  "kind": 1351,
  "content": "154",
  "tags": [
    ["unit", "lb"],
    ["t", "health"],
    ["t", "weight"],
    ["converted_value", "69.85", "kg"]
  ]
}
```

NIP-101h.2: Height

Status: Draft

Description

This NIP defines the format for storing and sharing height data on Nostr.

Event Kind: 1352

Content

The content field can use two formats:

- For metric height: A string containing the numeric height value in centimeters (cm)

- For imperial height: A JSON string with feet and inches properties

Required Tags

- ['t', 'health'] - Categorization tag

- ['t', 'height'] - Specific metric tag

- ['unit', 'cm' or 'imperial'] - Unit of measurement

Optional Tags

- ['converted_value', value, 'cm'] - Provides height in centimeters for interoperability when imperial is used

- ['timestamp', ISO8601-date] - When the height was measured

Examples

```jsx
// Example 1: Metric height

// Example 2: Imperial height with conversion
```
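To illustrate the two content formats, here is a hypothetical pair of height events built only from the content rules and tags listed above. The values are made up, and the events are shown unsigned; the rounding of the converted value to a whole centimeter is an assumption that follows the note that height values should be positive integers.

```python
import json

CM_PER_INCH = 2.54

# Metric height: content is the numeric value in centimeters, as a string.
metric_height = {
    "kind": 1352,
    "content": "175",
    "tags": [["t", "health"], ["t", "height"], ["unit", "cm"]],
}

# Imperial height: content is a JSON string with feet and inches,
# plus a converted_value tag in the canonical unit (cm).
feet, inches = 5, 9
total_cm = round((feet * 12 + inches) * CM_PER_INCH)
imperial_height = {
    "kind": 1352,
    "content": json.dumps({"feet": feet, "inches": inches}),
    "tags": [
        ["t", "health"],
        ["t", "height"],
        ["unit", "imperial"],
        ["converted_value", str(total_cm), "cm"],
    ],
}

print(json.dumps(metric_height, indent=2))
print(json.dumps(imperial_height, indent=2))
```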

Implementation Notes

- Centimeters (cm) is the canonical unit for height interoperability

- When using imperial units, a conversion to centimeters SHOULD be provided

- Height values SHOULD be positive integers

- For maximum compatibility, clients SHOULD support both formats

NIP-101h.3: Age

Status: Draft

Description

This NIP defines the format for storing and sharing age data on Nostr.

Event Kind: 1353

Content

The content field MUST contain the numeric age value as a string.

Required Tags

- ['unit', 'years'] - Unit of measurement

- ['t', 'health'] - Categorization tag

- ['t', 'age'] - Specific metric tag

Optional Tags

- ['timestamp', ISO8601-date] - When the age was recorded

- ['dob', ISO8601-date] - Date of birth (if the user chooses to share it)

Examples

```jsx
// Example 1: Basic age

// Example 2: Age with DOB
```

Implementation Notes

- Age SHOULD be represented as a positive integer

- For privacy reasons, date of birth (dob) is optional

- Clients SHOULD consider updating age automatically if date of birth is known

- Age can be a sensitive metric and clients may want to consider encrypting this data
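The note about updating age automatically from a known date of birth could look roughly like the sketch below. The helper names `age_on` and `age_event` and the `share_dob` flag are hypothetical; the event is unsigned, and the dob tag is only added when the user opts in.

```python
from datetime import date


def age_on(dob, today):
    """Whole years between dob and today."""
    years = today.year - dob.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (dob.month, dob.day):
        years -= 1
    return years


def age_event(dob, today, share_dob=False):
    """Build an unsigned kind 1353 event (hypothetical helper)."""
    tags = [["unit", "years"], ["t", "health"], ["t", "age"]]
    if share_dob:
        tags.append(["dob", dob.isoformat()])  # optional, privacy-sensitive
    return {"kind": 1353, "content": str(age_on(dob, today)), "tags": tags}


example = age_event(date(1990, 5, 1), date(2025, 4, 30), share_dob=True)
print(example)
```

A client could re-run this on each birthday and publish a fresh event, keeping the dob tag out of the event unless the user has chosen to share it.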

NIP-101h.4: Gender

Status: Draft

Description

This NIP defines the format for storing and sharing gender data on Nostr.

Event Kind: 1354

Content

The content field contains a string representing the user's gender.

Required Tags

- ['t', 'health'] - Categorization tag

- ['t', 'gender'] - Specific metric tag

Optional Tags

- ['timestamp', ISO8601-date] - When the gender was recorded

- ['preferred_pronouns', string] - User's preferred pronouns

Common Values

While any string value is permitted, the following common values are recommended for interoperability:

- male

- female

- non-binary

- other

- prefer-not-to-say

Examples

```jsx
// Example 1: Basic gender

// Example 2: Gender with pronouns
```

Implementation Notes

- Clients SHOULD allow free-form input for gender

- For maximum compatibility, clients SHOULD support the common values

- Gender is a sensitive personal attribute and clients SHOULD consider appropriate privacy controls

- Applications focusing on health metrics should be respectful of gender diversity

NIP-101h.5: Fitness Level

Status: Draft

Description

This NIP defines the format for storing and sharing fitness level data on Nostr.

Event Kind: 1355

Content

The content field contains a string representing the user's fitness level.

Required Tags

- ['t', 'health'] - Categorization tag

- ['t', 'fitness'] - Fitness category tag

- ['t', 'level'] - Specific metric tag

Optional Tags

- ['timestamp', ISO8601-date] - When the fitness level was recorded

- ['activity', activity-type] - Specific activity the fitness level relates to

- ['metrics', JSON-string] - Quantifiable fitness metrics used to determine level

Common Values

While any string value is permitted, the following common values are recommended for interoperability:

- beginner

- intermediate

- advanced

- elite

- professional

Examples

```jsx
// Example 1: Basic fitness level

// Example 2: Activity-specific fitness level with metrics
```

Implementation Notes

- Fitness level is subjective and may vary by activity

- The activity tag can be used to specify fitness level for different activities

- The metrics tag can provide objective measurements to support the fitness level

- Clients can extend this format to include activity-specific fitness assessments

- For general fitness apps, the simple beginner/intermediate/advanced scale is recommended
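As a sketch of how the activity and metrics tags might be combined, the helper below builds an unsigned fitness level event. The name `fitness_level_event` and the metric keys in the example (`5k_time_minutes`, `weekly_km`) are invented for illustration; the text only says that the metrics tag carries a JSON string.

```python
import json


def fitness_level_event(level, activity=None, metrics=None):
    """Build an unsigned kind 1355 event (hypothetical helper)."""
    tags = [["t", "health"], ["t", "fitness"], ["t", "level"]]
    if activity is not None:
        tags.append(["activity", activity])
    if metrics is not None:
        # The metrics tag value is a JSON string, not a nested object.
        tags.append(["metrics", json.dumps(metrics, sort_keys=True)])
    return {"kind": 1355, "content": level, "tags": tags}


basic = fitness_level_event("intermediate")
running = fitness_level_event(
    "advanced",
    activity="running",
    metrics={"5k_time_minutes": 22, "weekly_km": 40},  # made-up values
)
print(json.dumps(running, indent=2))
```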

-

@ 59b96df8:b208bd59

2025-04-30 19:27:41

Nostr is a decentralized protocol designed to be censorship-resistant.

However, this resilience can sometimes make data synchronization between relays more difficult, though not impossible.

In my opinion, Nostr still lacks a few key features to ensure consistent and reliable operation, especially regarding data versioning.

Profile Versions

When I log in to a new Nostr client using my private key, I might end up with an outdated version of my profile, depending on how the client is built or configured.

Why does this happen?

The client fetches my profile data (kind:0 - NIP 1) from its own list of selected relays.

If I didn’t publish the latest version of my profile on those specific relays, the client will only display an older version.

Relay List Metadata

The same issue occurs with the relay list metadata (kind:10002 - NIP 65).

When switching to a new client, it's common that my configured relay list isn't properly carried over because it also depends on where the data is fetched.

Protocol Change Proposal
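The staleness problem described above, for both kind:0 and kind:10002, can be illustrated with a toy example: a client that asks several relays and keeps the event with the highest created_at sees the latest version only if at least one of the relays it queries has it. The relay URLs and helper names below are hypothetical, and no network access is performed.

```python
def newest_event(responses):
    """Pick the event with the highest created_at among relay responses."""
    candidates = [ev for ev in responses.values() if ev is not None]
    return max(candidates, key=lambda ev: ev["created_at"], default=None)


def stale_relays(responses):
    """List relays that returned nothing or an older version."""
    newest = newest_event(responses)
    if newest is None:
        return []
    return sorted(
        url for url, ev in responses.items()
        if ev is None or ev["created_at"] < newest["created_at"]
    )


# Simulated answers for one pubkey's kind:0 event (None = relay has no copy).
responses = {
    "wss://relay-a.example": {"kind": 0, "created_at": 1688312044, "content": "old profile"},
    "wss://relay-b.example": {"kind": 0, "created_at": 1728233747, "content": "new profile"},
    "wss://relay-c.example": None,
}

latest = newest_event(responses)
outdated = stale_relays(responses)
print(latest["content"], outdated)
```

If the client had only queried relay-a and relay-c, it would have shown the old profile, which is exactly the situation the article describes.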

I believe the protocol should evolve, specifically regarding how `kind:0` (user metadata) and `kind:10002` (relay list metadata) events are distributed to relays.

Relays should be able to build a list of public relays automatically (via autodiscovery), and forward all received `kind:0` and `kind:10002` events to every relay in that list.

This would create a ripple effect:

```
Relay A relay list: [Relay B, Relay C]
Relay B relay list: [Relay A, Relay C]
Relay C relay list: [Relay A, Relay B]

User A sends kind:0 to Relay A
→ Relay A forwards kind:0 to Relay B
→ Relay A forwards kind:0 to Relay C
→ Relay B forwards kind:0 to Relay A
→ Relay B forwards kind:0 to Relay C
→ Relay A forwards kind:0 to Relay B
→ etc.
```

Solution: Event Encapsulation
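To make the loop problem concrete, the toy simulation below runs the three-relay network from the diagram in two modes. It is a sketch, not relay code: the naive mode stores and forwards everything and has to be cut off by a message cap, while the second mode assumes that a relay which already holds the same or a newer version neither stores nor forwards the event again. Wrapping the event in a relay-signed kind is not modelled here, and all names are hypothetical.

```python
from collections import deque

# Each relay's list of peers, as in the diagram.
PEERS = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B"]}


def simulate(event, first_relay, dedupe, max_messages=50):
    """Return (messages delivered, per-relay stored event)."""
    stored = {name: None for name in PEERS}
    queue = deque([(first_relay, event)])
    delivered = 0
    while queue and delivered < max_messages:
        relay, ev = queue.popleft()
        delivered += 1
        current = stored[relay]
        if dedupe and current is not None and current["created_at"] >= ev["created_at"]:
            continue  # same or newer version already present: drop it
        stored[relay] = ev
        for peer in PEERS[relay]:
            queue.append((peer, ev))  # forward to every known relay
    return delivered, stored


profile = {"kind": 0, "created_at": 1728233747, "content": "profile"}

naive_count, _ = simulate(profile, "A", dedupe=False)
dedupe_count, dedupe_store = simulate(profile, "A", dedupe=True)
print(naive_count, dedupe_count)
```

In the naive run the queue never drains and only the cap stops it; with the existence check the event reaches all three relays and the traffic dies out after a handful of messages.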

To avoid infinite replication loops, the solution could be to wrap the user’s signed event inside a new event signed by the relay, using a dedicated `kind` (e.g., `kind:9999`).

When Relay B receives a `kind:9999` event from Relay A, it extracts the original event and checks whether it already exists or whether a newer version is present. If not, it adds the event to its database.

Here is an example of such encapsulated data:

```json
{
  "content": "{\"content\":\"{\\\"lud16\\\":\\\"dolu@npub.cash\\\",\\\"name\\\":\\\"dolu\\\",\\\"nip05\\\":\\\"dolu@dolu.dev\\\",\\\"picture\\\":\\\"https://pbs.twimg.com/profile_images/1577320325158682626/igGerO9A_400x400.jpg\\\",\\\"pubkey\\\":\\\"59b96df8d8b5e66b3b95a3e1ba159750a6edd69bcbba1857aeb652a5b208bd59\\\",\\\"npub\\\":\\\"npub1txukm7xckhnxkwu450sm59vh2znwm45mewaps4awkef2tvsgh4vsf7phrl\\\",\\\"created_at\\\":1688312044}\",\"created_at\":1728233747,\"id\":\"afc3629314aad00f8786af97877115de30c184a25a48440a480bff590a0f9ba8\",\"kind\":0,\"pubkey\":\"59b96df8d8b5e66b3b95a3e1ba159750a6edd69bcbba1857aeb652a5b208bd59\",\"sig\":\"989b250f7fd5d4cfc9a6ee567594c81ee0a91f972e76b61332005fb02aa1343854104fdbcb6c4f77ae8896acd886ab4188043c383e32a6bba509fd78fedb984a\",\"tags\":[]}",
  "created_at": 1746036589,
  "id": "efe7fa5844c5c4428fb06d1657bf663d8b256b60c793b5a2c5a426ec773c745c",
  "kind": 9999,
  "pubkey": "79be667ef9dcbbac55a06295ce870b07029bfcdb2dce28d959f2815b16f81798",
  "sig": "219f8bc840d12969ceb0093fb62f314a1f2e19a0cbe3e34b481bdfdf82d8238e1f00362791d17801548839f511533461f10dd45cd0aa4e264d71db6844f5e97c",
  "tags": []
}
```

-

@ 37fe9853:bcd1b039

2025-01-11 15:04:40

yoyoaa

-

@ 62033ff8:e4471203

2025-01-11 15:00:24

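Of the content collected so far, the kind=1 portion is, frankly, not of high quality. So I added kind=30023 long-form articles, but they are updated too rarely, and the servers of the various relays do not hold many long-form pieces either.

For any Nostr search to generate value, it needs high-quality articles and news. On top of that, many bot-written posts today do little more than waste space and serve no other purpose.

https://www.duozhutuan.com currently hosts raw material intended for search engines to index. No UI has been built for humans to browse, so it looks rough. I do not plan to build a web client for posting microblogs; there are already too many clients of that kind.

I think what the Nostr community still needs to solve is applications. If it is only microblogging, that probably will not be enough.

Fortunately, things like npub.pro's site building strike me as interesting.

yakihonne's smart widgets are interesting too.

I am already using TaskQ5, which I built, myself. It is a distributed task system, and it works quite well.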

-

@ 866e0139:6a9334e5

2025-04-30 18:47:50

Author: Ulrike Guérot. This article was written with the Pareto client. You can find all Friedenstaube texts and further texts on the topic of peace here.

The latest Friedenstaube articles are now also available in its own Friedenstaube Telegram channel.

https://www.youtube.com/watch?v=KarwcXKmD3E

Dear friends and acquaintances,

dear peace activists,

dear people of Dresden (Dresden, too, is a city scarred by war),

this is my third speech at a peace demonstration within just over half a year: Munich in September, Munich in February, Dresden in April. And the war is drawing ever closer! Anyone who looks at the “Operationsplan Deutschland” on civil-military cooperation as an essential component of warfare can only feel sick at seeing how far the war preparations have already advanced.

But before I go into that, I would first like to distance myself from the once again pitiful framing of this demonstration as a Querfront (cross-front) or crank demonstration. Through this framing, this demonstration was moved from Dresden's market square to the Postplatz, we were vilified, and the Dresden city administration was induced to permit a “more acceptable” demonstration on the market square! It would be nice if all of us (all of us!) dropped such framings and reached out our hands to one another as peace activists! Peace in our own house is the prerequisite for our peace work. Strife in our house only benefits those who want war and divide us!

I would like to make clear once more from what position I am speaking here today, once again on a stage: I speak as a committed citizen of the Federal Republic of Germany. I speak as a European who worked for many years in and on the erstwhile peace project that is the EU. I speak as the granddaughter of two grandfathers. One fell in the war, the other came back without legs. I speak as the daughter of a mother who in 1945, as a six-year-old, was expelled from Silesia under traumatic circumstances, to Delitzsch in Saxony, incidentally. I speak as the mother of two sons, aged 33 and 31, whom I do not want to have to go to war. From this position, and only this position, I speak to you today, and from no other! I am not right-wing, I am not a crank, I am not radical, I am not Querfront.

As a citizen I wish (no, I demand!) that the Federal Republic of Germany abide by its legal foundations and treaty texts. These are, namely: the peace clause of the Basic Law in Art. 125 and 126 GG, that war shall never again emanate from German soil. And the Two Plus Four Treaty, in which Germany signed in 1990 that it would never take part in an armed conflict against Russia. I am ashamed that my country is on the way to breaching a treaty. I ask Friedrich Merz, the chancellor-designate, not to commit a breach of treaty by delivering Taurus missiles!

I further ask that this country adhere to its didactic guidelines for schools, laid down in the still-valid “Beutelsbach Consensus” of the 1970s. Its Article I contains a prohibition on overwhelming the pupil: “It is not permissible to catch pupils off guard, by whatever means, in the interest of imparting desired opinions and thereby to hinder them from forming an independent judgement.” Against this background it is not permissible to send soldiers or privates into schools to recruit for the Bundeswehr. Rather, it would be called for to educate our children about Art. 125 & 126 GG, the country's duty of peace, and its history with regard to Russia.

As a European I wish that we would take seriously the European anthem, Beethoven's 9th Symphony, whose text reads: All men become brothers. All men become brothers. All! That includes the Russians and, of course, the Ukrainians too!

As a European who in the 1990s worked for the great EU Commission President Jacques Delors, a Catholic, socialist and trade unionist, I wish that we would take seriously the promise, #Europe means never again war. We told it on this continent for 70 years. The lies and the propaganda with which the necessity of war against Russia is now being talked into existence are unbearable. The EU, Nobel Peace Prize laureate of 2012, is about to lose, or has already lost, its standing in the world. It is a political tragedy! Besides its standing, the EU is now about to gamble away Europe's civilisational heritage, the civilité européenne, as the French historian and Marxist Étienne Balibar calls it.

One element of this historical heritage is that what unites us in Europe is that, over centuries, we have all been perpetrators and victims alike. Ce que nous partageons, c’est ce que nous étions tous bourreaux et victimes. So writes the French author Laurent Gaudet in his European epic, L’Europe. Une Banquet des Peuples, of 2016.

This means that no one in Europe, no one (not even the Estonians!) has the right to absolutise earlier traumas, which the Baltic states indisputably suffered with Stalin's Russia, to transfer them to the entire EU, to block the EU with them, and to align the EU's policymaking one-sidedly with a war course against Russia. With this statement I address myself directly to Kaja Kallas, the EU's High Representative for Security and Foreign Policy, and hope that she hears this speech and reads Laurent Gaudet's epic.

There is no just war! War is always only suffering. In Strasbourg, the seat of the European Parliament, a statue stands on the Place de la République: a woman, the Republic. In each arm she holds a son, an Alsatian and a Frenchman, returning from the war. In the bronze figure's depiction, the two soldiers have already taken off their uniforms and are held and comforted by Madame la République. All members of the Strasbourg European Parliament should gather at this monument on 9 May. I quote Cicero once more: The most unjust peace is better than the most just war. For reciting this quotation from one of the greatest political thinkers of ancient Rome on a television programme, I was hit with a shitstorm in 2022. That alone is an expression of the decay of our culture of debate to an unfathomable degree, especially in Germany.

As a European I demand that we overcome our cognitive dissonance. When even the New York Times, on 27 March 2025, publishes a 27-page dossier that proves what one actually already knows but was hitherto not allowed to say, namely that the Ukrainian-Russian war is clearly a proxy war of the USA in which Ukraine was instrumentalised in the most monstrous way (which the NYT dossier admits outright!), then it would be time to withdraw the unequivocal assignment of blame for the war to Russia and to end the deliberately spread Russophobia in Europe, instead of the EU, as many indications unfortunately suggest, torpedoing the peace negotiations in Saudi Arabia at every turn.

The French philosopher Luc Ferry said quite clearly a few days ago on prime-time French television that the war began in 2014, after the instrumentalisation of the Maidan by the USA, from western Ukraine; that Zelensky wanted this war and, with American backing, provoked it; that Putin is not Hitler; and that the only ones with fascistoid tendencies sit in the Ukrainian government. I wish such a statement were also possible on German television, and I thank Richard David Precht, who is still admitted to public broadcasting, for trying at this point to bring some reason into the debate.

Nor, precisely as a European, is it acceptable that Russian diplomats are to be excluded from the commemorations on 8 May 2025, of all times 80 years after the end of the Second World War. Commemorations exist precisely for reaching out one's hand and celebrating peace. And especially against the background of 27 million fallen Soviet soldiers, turning the Russians away from the commemorations is downright blatant historical amnesia.

***

In 1925 the League of Nations examined the question of why the First World War went on for so long even though it had already been decided militarily in 1916, to the detriment of the German Reich, once the two-front war had opened. We recall: the stab-in-the-back myth blamed the Jewish, communist and socialist pacifists for the defeat. What is correct, according to the League of Nations report of 1925, is that the armaments industry alone ensured that the war, actually already decided militarily, was carried on for two more years as a war of attrition and trench warfare, merely so that a little more money could be made. It seems to be just the same today. The war is decided militarily. It can and must be ended immediately, and that is not happening solely because the West cannot admit its defeat. But pride goes before a fall, and it must not be that around 2,000 Ukrainian or Russian soldiers and many civilians die every day for European pride. The evident European intention to freeze the war now, only to rekindle it in 2029/2030 when Europe is better armed, is nothing but cynical.

As the war grandchild of war-disabled veterans, daughter of a refugee mother and mother of two sons whose French great-grandfather spent six years as a prisoner of war in Germany, I wish, finally and in conclusion, that we will have the strength, once this madness currently being imposed on European citizens is over, to conceive and build a new European project in which Europe is politically united and remains so, but is organised in a decentralised, regional, subsidiary, peaceful and neutral way. That is, a Europe beyond the structures of the EU that is ready to overcome the Pax Americana, to leave NATO and to extend its hand to the multipolar world! Our Europe is post-imperial, post-colonial, large, diverse and peaceable!

Ulrike Guérot, born 1964, is a European professor, publicist and bestselling author. For around 30 years she has worked in European think tanks and universities in Paris, Brussels, London, Washington, New York, Vienna and Berlin on questions of European democracy and Europe's role in the world. Since March 2014 Ulrike Guérot has been founder and director of the European Democracy Lab e.V., Berlin, and in March 2023 she initiated the European Citizens Radio, which can be found on Spotify. Her most recent publication is „Über Halford J. Mackinders Heartland-Theorie, Der geografische Drehpunkt der Geschichte“ (Westend, 2024). More information about the author here.

LET THE FRIEDENSTAUBE GROW WINGS!

You can subscribe to the Friedenstaube here and have the articles sent to you.

You can already support us now:

- For 50 CHF/EUR you get a one-year subscription to the Friedenstaube.

- For 120 CHF/EUR you get a one-year subscription and a Friedenstaube T-shirt/hoodie.

- For 500 CHF/EUR you become a patron and get a lifetime subscription plus a Friedenstaube T-shirt/hoodie.

- From 1000 CHF you become a voting cooperative member of the Friedenstaube (and get a lifetime subscription and a T-shirt/hoodie).

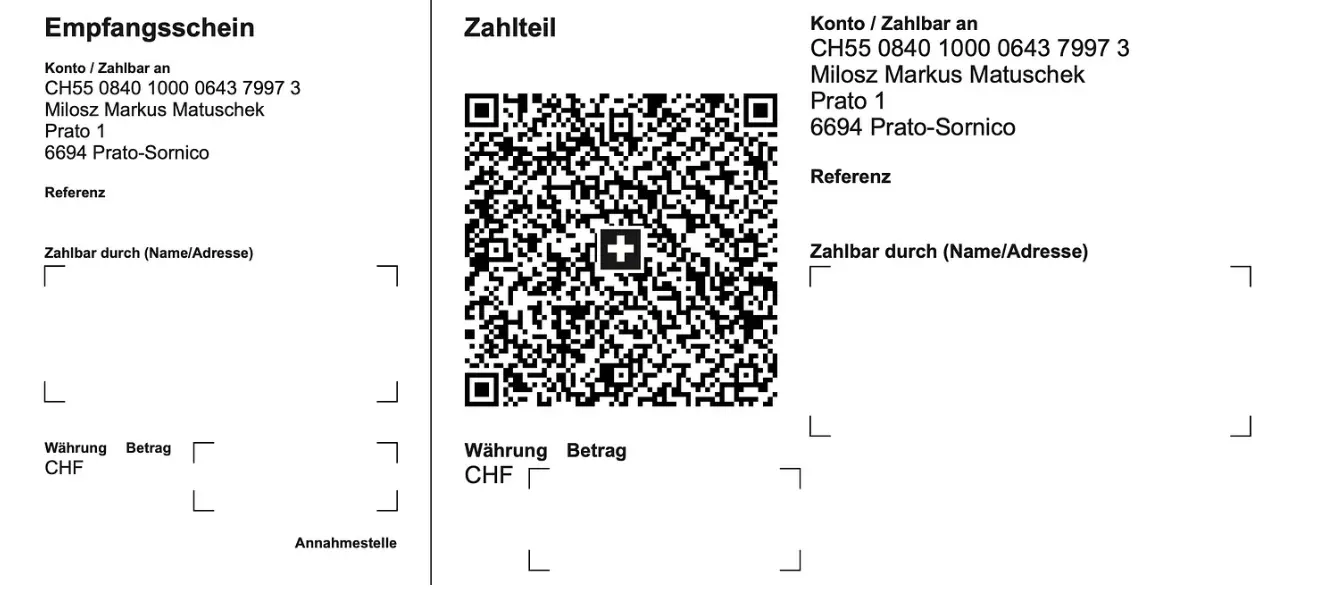

For payments in CHF (reference: Friedenstaube):

For payments in euros:

Milosz Matuschek

IBAN DE 53710520500000814137

BYLADEM1TST

Sparkasse Traunstein-Trostberg

Reference: Friedenstaube

If you would like to contribute in another way, write to the Friedenstaube at: friedenstaube@pareto.space

Not on Nostr yet and want the full experience (liking, commenting, etc.)? You can zap the author even without a Nostr profile! Create an account on Start. Further onboarding guides are available in the Pareto wiki.

-

@ 23b0e2f8:d8af76fc

2025-01-08 18:17:52

What you need

- An Android phone you no longer use (the camera must work).

- A microSD card (optional, used only once).

- A device for monitoring your funds (you probably already have one).

A few things you need to know

- The device will act as a signer. No funds can move until it signs the transaction.

- The microSD card is used to transfer the Electrum APK and ensures that the device has no contact with other external data sources after it is factory-reset. A USB cable can serve the same purpose.

- The idea is to keep your private key on an offline device that stays powered off 99% of the time. You can monitor your funds on another, internet-connected device, such as your phone or personal computer.

The tutorial is split into two modules:

- Module 1 - Creating a cold wallet/signer.

- Module 2 - Setting up a device to view your funds and signing transactions with the signer.

At the end, we will have:

- A cold wallet that also serves as a signer.

- A device for monitoring the wallet's funds.

Module 1 - Creating a cold wallet/signer

- Download the Electrum APK from the downloads tab at https://electrum.org/. Feel free to verify the software's signatures to confirm its authenticity.

- Format the microSD card and copy the Electrum APK onto it. If you do not have a microSD card, skip this step.

- Remove the SIM cards and accessories from the phone that will be used as the signer, factory-reset it, and wait for it to boot.

- During initial setup, skip the Wi-Fi step and decline every connection request. Afterwards you can uninstall unnecessary apps, since you will only need Electrum. Make sure Wi-Fi, Bluetooth and mobile data are turned off. You can also enable airplane mode. (Fun fact: some people go as far as opening the phone and damaging the Wi-Fi/Bluetooth antenna to disable those features for good.)

- Insert the microSD card with the Electrum APK into the device and install it. You will need to allow installation from unknown sources.

- In Electrum, create a standard wallet and generate your seed words. Write them down and store them in a safe place. If anything happens to your signer, these words will let you regain access to your funds. (This is where your personal backup method comes in.)
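One optional extra check for the transfer step in Module 1: after verifying the download on your computer, you may want to confirm that the copy on the microSD card is byte-for-byte identical to it. The sketch below is only an illustration and is not part of the original tutorial. The function names and the file paths in the usage comment are hypothetical, and comparing hashes of two copies does not replace verifying Electrum's published signatures.

```python
import hashlib
import sys
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copies_match(original: Path, copy: Path) -> bool:
    """True when both files have the same SHA-256 digest."""
    return sha256_of_file(original) == sha256_of_file(copy)


if __name__ == "__main__":
    # Hypothetical usage (paths are placeholders):
    #   python compare_apk.py ~/Downloads/electrum.apk /media/sdcard/electrum.apk
    if len(sys.argv) == 3:
        same = copies_match(Path(sys.argv[1]), Path(sys.argv[2]))
        print("identical" if same else "DIFFERENT: do not install")
```

If the two digests differ, the copy was altered or corrupted in transit and should not be installed on the signer.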

Module 2 - Setting up a device to view your funds and signing transactions with the signer

- Creating a watch-only wallet on another device, such as your phone or personal computer, is quite simple. For this tutorial we will use another Android smartphone with Electrum. Install Electrum from the downloads tab at https://electrum.org/ or from the Play Store. (WARNING: There is no official Electrum for iPhone. Be suspicious if you find one.)

- After installing Electrum, create a standard wallet, but this time choose the option Use a master key.

- Now, on the signer created in the first module, export your public key: go to Wallet > Wallet details > Share master public key.

- Scan the public key's QR code with the watch-only device. It will then be able to track your funds, but without permission to move them.

- To receive funds, send Bitcoin to one of the addresses generated by your wallet: Wallet > Addresses/Coins.

- To move funds, create a transaction on the watch-only device. Since it does not hold the private key, the transaction must be signed with the signer device.

- On the signer, scan the unsigned transaction, confirm the details, sign, and share. This generates another QR code, this time containing the signed transaction.

- On the watch-only device, scan the QR code of the signed transaction and broadcast it to the network.

Conclusion

Advantages of this setup:

- Simplicity: All you need is an old Android device.

- Flexibility: It works as a great cold wallet, ideal for holders.

Disadvantages of this setup:

- Standardization: It does not use BIP-39 seeds, so you will always need to use Electrum.

- Interface: Electrum's look may feel dated to some users.

At this point, we have a cold wallet that also signs transactions. The signing flow becomes: Create an unsigned transaction > Scan the QR code of the unsigned transaction > Review and sign it with the signer > Generate the QR code of the signed transaction > Scan the signed transaction with any other device that can broadcast it to the network.
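The flow above can be sketched as a toy model that shows which device holds which data. This is only an illustration under loud assumptions: the class and method names are hypothetical, nothing here uses Electrum's code or API, and the "signature" is a placeholder hash, not real Bitcoin signing.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Transaction:
    recipient: str
    amount_sats: int
    signature: Optional[str] = None  # None means "not signed yet"


class OfflineSigner:
    """Stands in for the old Android phone: the only holder of the secret."""

    def __init__(self, secret: str) -> None:
        self._secret = secret

    def sign(self, tx: Transaction) -> Transaction:
        # Placeholder "signature": a hash over the secret and the tx fields.
        # Real wallets use elliptic-curve signatures; this is NOT that.
        payload = f"{self._secret}|{tx.recipient}|{tx.amount_sats}".encode()
        return Transaction(tx.recipient, tx.amount_sats,
                           hashlib.sha256(payload).hexdigest())


class WatchOnlyDevice:
    """Stands in for the online phone or computer: it holds no secret."""

    def create_unsigned(self, recipient: str, amount_sats: int) -> Transaction:
        return Transaction(recipient, amount_sats)

    def broadcast(self, tx: Transaction) -> str:
        # An unsigned transaction must never leave for the network.
        if tx.signature is None:
            raise ValueError("transaction is not signed")
        return f"broadcast {tx.amount_sats} sats to {tx.recipient}"


if __name__ == "__main__":
    watcher = WatchOnlyDevice()
    signer = OfflineSigner(secret="example seed words")  # placeholder secret
    unsigned = watcher.create_unsigned("bc1q-example-address", 50_000)
    signed = signer.sign(unsigned)   # in the tutorial this crosses via QR code
    print(watcher.broadcast(signed))
```

The point of the split is that the secret never leaves `OfflineSigner`; only the unsigned and the signed transaction cross between the two devices.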

As some of you may know, a signed Bitcoin transaction is practically impossible to tamper with. In a catastrophic scenario, even without internet access, you can pass the signed transaction to someone who has access to the network, by any means of communication. We hope that day never comes, but this setup makes it possible.

-

@ 91bea5cd:1df4451c

2025-04-26 10:16:21

The Brazilian Legal Context and Consent

In the Brazilian legal system, the victim's consent can, in certain circumstances, remove the unlawfulness of an act that would otherwise constitute a crime (such as minor bodily injury, provided for in Art. 129 of the Penal Code). However, consent has clear limits: it is not valid for inalienable legal interests, such as life, and its effectiveness is questionable in cases of serious or very serious bodily injury.

Consensual BDSM practice sits in a complex zone. In theory, if both partners are adults, legally capable, and freely and knowingly consented to the acts, and those acts do not result in serious permanent injury or a non-consented risk of death, there would be no crime. The challenge lies in proving that consent, especially if one of the parties later denies it or alleges coercion.

The Maria da Penha Law (Law No. 11.340/2006)

The Maria da Penha Law is a fundamental milestone in protecting women against domestic and family violence. It establishes mechanisms to curb and prevent such violence, defines its forms (physical, psychological, sexual, patrimonial and moral), and provides for urgent protective measures.

Essential as it is, applying the law in BDSM contexts can be delicate. An allegation of violence by the woman, even if the injuries or situations stem from consensual practices, tends to receive priority attention from the authorities, given the presumption of vulnerability established by the law. This can create a scenario in which the male partner faces significant difficulty demonstrating the consensual nature of the acts, especially without robust evidence prepared in advance.

Other risks:

Serious or very serious bodily injury (art. 129, §§ 1 and 2, CP) cannot be justified by consent and may lead to criminal prosecution.

Crimes against sexual dignity (arts. 213 et seq. of the CP) are subject to unconditional public prosecution; investigation and indictment do not depend on a complaint by the victim.

Risks of False Accusations and Later Claims of Coercion

The risks for BDSM practitioners, especially for the partner who takes the dominant role or who inflicts pain/restraint (frequently, but not exclusively, the man), can arise on several fronts:

- External Accusations: Neighbors, family members or friends unaware of the relationship's consensual nature may interpret sounds, marks or behavior as signs of abuse and report them to the authorities.

- Future Allegations by the Partner: After a troubled breakup, or out of revenge, regret or a change of perspective, the partner may reinterpret past practices as abuse and seek redress or retaliation through a complaint. The allegation may be that consent never existed or was vitiated.

- Allegation of Coercion: One of the hardest claims to refute is that consent was obtained through coercion (physical, moral, psychological or economic). The partner may allege, for example, that she felt pressured, intimidated or dependent, and that her "yes" was not genuine. Proving the absence of coercion after the fact is extremely difficult.

- Male Naivety and Vulnerability: Many men, trusting the consensual dynamic and their partner, may neglect the need for precautions. The belief that "this would never happen to me", or a lack of knowledge about the legal implications and procedural weight of an accusation under the Maria da Penha Law, can leave them vulnerable. Physical marks, even consented ones, can be used as evidence of assault, reversing the burden of proof in practice, if not in legal theory.

Prevention and Mitigation Strategies

No method is infallible in completely avoiding the risk of a false accusation, but several measures can be adopted to build a record of consent and reduce vulnerabilities:

- Explicit and Continuous Communication: The foundation of any safe BDSM practice is constant communication. Negotiating limits, desires, safewords and expectations before, during and after scenes is crucial. Keeping records of these negotiations (emails, messages, shared journals) can be useful.

- Documentation of Consent:

- Relationship/Scene Contracts: Although the legal validity of "BDSM contracts" is debatable in Brazil (they cannot override rules of public policy), they serve as strong evidence of the parties' intent, of the detailed negotiation of limits, and of informed consent. They should be clear, dated, signed and, ideally, notarized (as proof of the date and of the authenticity of the signatures).

-