-

@ 6be5cc06:5259daf0

2025-04-28 01:05:49

I acknowledge that God, and God alone, is the legitimate sovereign over all things. No man, no institution, no parliament has the authority to usurp what belongs to the King of kings. The modern State, with its totalizing pretension, is a blasphemous farce before the throne of Christ. I accept no other lord.

The Law that guides me is not the one dictated by bureaucrats, but the one engraved by God in human nature itself. Reason, when illuminated by faith, is sufficient to discern what is just. I reject the arbitrary laws that claim to legitimize theft, murder, or slavery in the name of order. Justice is born not of decree, but of truth.

I firmly believe in private property as an extension of the person. What is the fruit of my work, my creativity, my dedication, and the gifts granted to me by God belongs to me by natural right. No one can legitimately take what is mine without my consent. Every tax is an aggression; every expropriation, a theft. I defend economic freedom not out of idolatry of the market, but because freedom is a necessary condition for virtue.

I take the Non-Aggression Principle (NAP) as the ethical minimum I must respect. I will not initiate the use of force against anyone, nor against their property. I demand the same of everyone. But I know this is not enough. The NAP marks out what I must not do; it does not teach me what I must be. Outer freedom is good only if there is inner freedom. The market may be free, but if the soul is enslaved by vice, collapse will be inevitable.

That is why a negative ethic is not enough for me. I believe a just society needs positive values: honor, responsibility, compassion, respect, fidelity to the truth. Without them, even a society that formally respects individual rights will rot from within. A people that loves profit but despises truth, that celebrates freedom but forgets justice, is preparing itself to be dominated. It will trade one visible despot for a thousand invisible tyrannies: hedonism, consumerism, lies, fear.

I do not accept false charity done with money taken by force. True generosity is born of a free heart, not of institutional coercion. Forcing someone to help their neighbor destroys both freedom and virtue. There is merit only where there is choice. Charity born of love is redemptive; charity born of the tax office is propaganda.

The modern State is an idol. It promises security but delivers servitude. It promises justice but delivers privileges. It disguises oppression in technical, legal, and democratic language. But behind its masks I see only the old serpent: a parasite that feeds on the labor of others and manipulates consciences to perpetuate itself.

To resist is not merely a right; it is a duty. To obey God rather than men: that is my rule. Power turns against the truth, but my loyalty belongs to the one who created heaven and earth. Tyranny is not fought with another tyrant, but with the firm and peaceful disobedience of those who love justice.

I do not believe in utopias. I desire a natural, organic order rooted in voluntarism. A society built from the bottom up: from the family, the local community, tradition, and faith. I do not want a machine that plans other people's lives, but a fabric of voluntary relationships where freedom flourishes in the shadow of the cross.

I do desire the social reign of Christ. Not by imposition, but by conviction. May He reign in hearts, in families, in the streets, and in contracts. May faith guide reason and reason illuminate life. May freedom be a means to holiness, not an end in itself. And may we, free from the yoke of Leviathan, be servants of the Lord alone.

-

@ 52b4a076:e7fad8bd

2025-04-28 00:48:57

I have recently been building NFDB, a new relay DB. This post is meant as a short overview.

Regular relays have challenges

Current relay software has significant challenges, which I have experienced when hosting Nostr.land:
- Scalability is only supported by adding full replicas, which does not scale to large relays.
- Most relays use slow databases and are not optimized for large-scale usage.
- Search is near-impossible to implement on standard relays.
- Privacy features such as NIP-42 are lacking.
- Regular DB maintenance tasks on normal relays require extended downtime.
- Fault tolerance, if implemented at all, relies on a load balancer, which is limited.
- Personalization and advanced filtering are not possible.
- Local caching is not supported.

NFDB: A scalable database for large relays

NFDB is a new database meant for medium-to-large scale relays, built on FoundationDB, that provides:
- Near-unlimited scalability
- Extended fault tolerance
- Instant loading
- Better search
- Better personalization
- and more.

Search

NFDB has extended search capabilities including:
- Semantic search: Search for meaning, not words.
- Interest-based search: Highlight content you care about.
- Multi-faceted queries: Easily filter by topic, author group, keywords, and more at the same time.
- Wide support for event kinds, including users, articles, etc.

Personalization

NFDB allows significant personalization:
- Customized algorithms: Be your own algorithm.
- Spam filtering: Filter content to your WoT, and use advanced spam filters.
- Topic mutes: Mute topics, not keywords.
- Media filtering: With Nostr.build, you will be able to filter NSFW and other content.
- Low data mode: Block notes that use high amounts of cellular data.
- and more

Other

NFDB has support for many other features such as:
- NIP-42: Protect your privacy with private drafts and DMs
- Microrelays: Easily deploy your own personal microrelay
- Containers: Dedicated, fast storage for discoverability events such as relay lists

Calcite: A local microrelay database

Calcite is a lightweight, local version of NFDB meant for microrelays and caching, designed to support thousands of personal microrelays.

Calcite HA is an additional layer that allows live migration and relay failover in under 30 seconds, providing higher availability compared to current relays with greater simplicity. Calcite HA is enabled in all Calcite deployments.

For zero-downtime, NFDB is recommended.

Noswhere SmartCache

Relays are fixed in one location, but users can be anywhere.

Noswhere SmartCache is a CDN for relays that dynamically caches data on edge servers closest to you, allowing:
- Multiple regions around the world
- Improved throughput and performance
- Faster loading times

routerd

`routerd` is a custom load balancer optimized for Nostr relays, integrated with SmartCache.

`routerd` is specifically integrated with NFDB and Calcite HA to provide fast failover and high performance.

Ending notes

NFDB is planned to be deployed to Nostr.land in the coming weeks.

A lot more is to come. 👀

-

@ 266815e0:6cd408a5

2025-04-29 17:47:57

I'm excited to announce the release of Applesauce v1.0.0! There are a few breaking changes and a lot of improvements and new features across all packages. Each package has been updated to 1.0.0, marking a stable API for developers to build upon.

Applesauce core changes

There was a change in the `applesauce-core` package in the `QueryStore`.

The `Query` interface has been converted to a method instead of an object with `key` and `run` fields.

A bunch of new helper methods and queries were added; check out the changelog for a full list.

Applesauce Relay

There is a new `applesauce-relay` package that provides a simple RxJS-based API for connecting to relays and publishing events.

Documentation: applesauce-relay

Features:

- A simple API for subscribing or publishing to a single relay or a group of relays

- No `connect` or `close` methods; connections are managed automatically by RxJS

- NIP-11 `auth_required` support

- Support for NIP-42 authentication

- Prebuilt or custom re-connection back-off

- Keep-alive timeout (default 30s)

- Client-side Negentropy sync support

Example Usage: Single relay

```typescript
import { Relay } from "applesauce-relay";

// Connect to a relay
const relay = new Relay("wss://relay.example.com");

// Create a REQ and subscribe to it
relay
  .req({
    kinds: [1],
    limit: 10,
  })
  .subscribe((response) => {
    if (response === "EOSE") {
      console.log("End of stored events");
    } else {
      console.log("Received event:", response);
    }
  });
```

Example Usage: Relay pool

```typescript
import { Relay, RelayPool } from "applesauce-relay";

// Create a pool with a custom relay
const pool = new RelayPool();

// Create a REQ and subscribe to it
pool
  .req(["wss://relay.damus.io", "wss://relay.snort.social"], {
    kinds: [1],
    limit: 10,
  })
  .subscribe((response) => {
    if (response === "EOSE") {
      console.log("End of stored events on all relays");
    } else {
      console.log("Received event:", response);
    }
  });
```

Applesauce actions

Another new package is the `applesauce-actions` package. This package provides a set of async operations for common Nostr actions.

Actions are run against the events in the `EventStore` and use the `EventFactory` to create new events to publish.

Documentation: applesauce-actions

Example Usage:

```typescript
import { ActionHub } from "applesauce-actions";

// An EventStore and EventFactory are required to use the ActionHub
import { eventStore } from "./stores.ts";
import { eventFactory } from "./factories.ts";

// Custom publish logic
const publish = async (event: NostrEvent) => {
  console.log("Publishing", event);
  await app.relayPool.publish(event, app.defaultRelays);
};

// The `publish` method is optional for the async `run` method to work
const hub = new ActionHub(eventStore, eventFactory, publish);
```

Once an `ActionHub` is created, you can use the `run` or `exec` methods to execute actions:

```typescript
import { FollowUser, MuteUser } from "applesauce-actions/actions";

// Follow fiatjaf
await hub.run(
  FollowUser,
  "3bf0c63fcb93463407af97a5e5ee64fa883d107ef9e558472c4eb9aaaefa459d",
);

// Or use the `exec` method with a custom publish method
await hub
  .exec(
    MuteUser,
    "3bf0c63fcb93463407af97a5e5ee64fa883d107ef9e558472c4eb9aaaefa459d",
  )
  .forEach((event) => {
    // NOTE: Don't publish this event because we never want to mute fiatjaf
    // pool.publish(['wss://pyramid.fiatjaf.com/'], event)
  });
```

There are a lot more actions, including some for working with NIP-51 lists (private and public); you can find them in the reference.

Applesauce loaders

The `applesauce-loaders` package has been updated to support any relay connection library, not just `rx-nostr`.

Before:

```typescript
import { ReplaceableLoader } from "applesauce-loaders";
import { createRxNostr } from "rx-nostr";

// Create a new rx-nostr instance
const rxNostr = createRxNostr();

// Create a new replaceable loader
const replaceableLoader = new ReplaceableLoader(rxNostr);
```

After:

```typescript
import { Observable } from "rxjs";
import { ReplaceableLoader, NostrRequest } from "applesauce-loaders";
import { SimplePool } from "nostr-tools";

// Create a new nostr-tools pool
const pool = new SimplePool();

// Create a method that subscribes using nostr-tools and returns an observable
const nostrRequest: NostrRequest = (relays, filters, id) => {
  return new Observable((subscriber) => {
    const sub = pool.subscribe(relays, filters, {
      onevent: (event) => {
        subscriber.next(event);
      },
      onclose: () => subscriber.complete(),
      oneose: () => subscriber.complete(),
    });

    // Close the nostr-tools subscription when the observable is unsubscribed
    return () => sub.close();
  });
};

// Create a new replaceable loader
const replaceableLoader = new ReplaceableLoader(nostrRequest);
```

Of course you can still use rx-nostr if you want:

```typescript
import { createRxNostr, createRxOneshotReq } from "rx-nostr";
import { map, Observable } from "rxjs";
import type { Filter, NostrEvent } from "nostr-tools";

// Create a new rx-nostr instance
const rxNostr = createRxNostr();

// Create a method that subscribes using rx-nostr and returns an observable
function nostrRequest(
  relays: string[],
  filters: Filter[],
  id?: string,
): Observable<NostrEvent> {
  // Create a new oneshot request so it will complete when EOSE is received
  const req = createRxOneshotReq({ filters, rxReqId: id });
  return rxNostr
    .use(req, { on: { relays } })
    .pipe(map((packet) => packet.event));
}

// Create a new replaceable loader
const replaceableLoader = new ReplaceableLoader(nostrRequest);
```

There were a few more changes; check out the changelog.

Applesauce wallet

It's far from complete, but there is a new `applesauce-wallet` package that provides actions and queries for working with NIP-60 wallets.

Documentation: applesauce-wallet

Example Usage:

```typescript
import { CreateWallet, UnlockWallet } from "applesauce-wallet/actions";

// Create a new NIP-60 wallet
await hub.run(CreateWallet, ["wss://mint.example.com"], privateKey);

// Unlock wallet and associated tokens/history
await hub.run(UnlockWallet, { tokens: true, history: true });
```

-

@ 30ceb64e:7f08bdf5

2025-04-26 20:33:30

Status: Draft

Author: TheWildHustle

Abstract

This NIP defines a framework for storing and sharing health and fitness profile data on Nostr. It establishes a set of standardized event kinds for individual health metrics, allowing applications to selectively access specific health information while preserving user control and privacy.

This framework currently includes:
- NIP-101h.1 Weight using kind 1351
- NIP-101h.2 Height using kind 1352
- NIP-101h.3 Age using kind 1353
- NIP-101h.4 Gender using kind 1354
- NIP-101h.5 Fitness Level using kind 1355

Motivation

I want to build and support an ecosystem of health and fitness related nostr clients that have the ability to share and utilize a bunch of specific interoperable health metrics.

- Selective access - Applications can access only the data they need

- User control - Users can choose which metrics to share

- Interoperability - Different health applications can share data

- Privacy - Sensitive health information can be managed independently

Specification

Kind Number Range

Health profile metrics use the kind number range 1351-1399:

| Kind      | Metric                             |
| --------- | ---------------------------------- |
| 1351      | Weight                             |
| 1352      | Height                             |
| 1353      | Age                                |
| 1354      | Gender                             |
| 1355      | Fitness Level                      |
| 1356-1399 | Reserved for future health metrics |

Common Structure

All health metric events SHOULD follow these guidelines:

- The content field contains the primary value of the metric

- Required tags:
  - ['t', 'health'] - For categorizing as health data
  - ['t', metric-specific-tag] - For identifying the specific metric
  - ['unit', unit-of-measurement] - When applicable

- Optional tags:
  - ['converted_value', value, unit] - For providing alternative unit measurements
  - ['timestamp', ISO8601-date] - When the metric was measured
  - ['source', application-name] - The source of the measurement
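The common structure can be sketched as a small event builder. This is only an illustration: the `UnsignedHealthEvent` and `HealthMetricInput` types and the `buildHealthMetricEvent` function are hypothetical names, and signing (`pubkey`, `id`, `sig`) is assumed to be handled by whatever signer the client already uses.

```typescript
// Unsigned event shape; pubkey, id, and sig are left to the client's signer.
interface UnsignedHealthEvent {
  kind: number;
  created_at: number;
  content: string;
  tags: string[][];
}

// Hypothetical input type: only the tag names come from the guidelines above.
interface HealthMetricInput {
  kind: number;
  metricTag: string;
  value: string;
  unit?: string;
  converted?: { value: string; unit: string };
  timestamp?: string; // ISO 8601 date of the measurement
  source?: string; // name of the application that produced the value
}

function buildHealthMetricEvent(input: HealthMetricInput): UnsignedHealthEvent {
  // Required tags first: the generic health tag and the metric-specific tag.
  const tags: string[][] = [
    ["t", "health"],
    ["t", input.metricTag],
  ];
  // The unit tag is only added when applicable; the rest are optional.
  if (input.unit) tags.push(["unit", input.unit]);
  if (input.converted) {
    tags.push(["converted_value", input.converted.value, input.converted.unit]);
  }
  if (input.timestamp) tags.push(["timestamp", input.timestamp]);
  if (input.source) tags.push(["source", input.source]);

  return {
    kind: input.kind,
    created_at: Math.floor(Date.now() / 1000),
    content: input.value,
    tags,
  };
}

const weight = buildHealthMetricEvent({
  kind: 1351,
  metricTag: "weight",
  value: "70",
  unit: "kg",
});
console.log(JSON.stringify(weight.tags));
// prints [["t","health"],["t","weight"],["unit","kg"]]
```

Keeping the builder generic means each metric-specific extension only has to supply its kind number, metric tag, and unit.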

Unit Handling

Health metrics often have multiple ways to be measured. To ensure interoperability:

- Where multiple units are possible, one standard unit SHOULD be chosen as canonical

- When using non-standard units, a converted_value tag SHOULD be included with the canonical unit

- Both the original and converted values should be provided for maximum compatibility
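As a rough illustration of the unit-handling rule, the sketch below builds the tags for a weight measurement and attaches a converted_value tag when the value is given in pounds. It assumes kilograms are the canonical unit for weight, which the text does not state explicitly; `weightTags` and `KG_PER_LB` are hypothetical names.

```typescript
// Exact definition of the international avoirdupois pound in kilograms.
const KG_PER_LB = 0.45359237;

// Assumption: kg is treated as the canonical weight unit.
function weightTags(value: number, unit: "kg" | "lb"): string[][] {
  const tags: string[][] = [
    ["unit", unit],
    ["t", "health"],
    ["t", "weight"],
  ];
  if (unit === "lb") {
    // Non-canonical unit: also publish the value converted to kilograms.
    tags.push(["converted_value", (value * KG_PER_LB).toFixed(2), "kg"]);
  }
  return tags;
}

console.log(JSON.stringify(weightTags(154, "lb")));
// prints [["unit","lb"],["t","health"],["t","weight"],["converted_value","69.85","kg"]]
```

Clients that only understand the canonical unit can then read the converted_value tag and ignore the original content.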

Client Implementation Guidelines

Clients implementing this NIP SHOULD:

- Allow users to explicitly choose which metrics to publish

- Support reading health metrics from other users when appropriate permissions exist

- Support updating metrics with new values over time

- Preserve tags they don't understand for future compatibility

- Support at least the canonical unit for each metric

Extensions

New health metrics can be proposed as extensions to this NIP using the format:

- NIP-101h.X where X is the metric number

Each extension MUST specify:
- A unique kind number in the range 1351-1399
- The content format and meaning
- Required and optional tags
- Examples of valid events
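A client or reviewer might check a proposed kind number against the reserved range with something like the following sketch. The `healthKindStatus` helper and its return values are hypothetical; only the range and the assigned kinds come from the table in this NIP.

```typescript
const HEALTH_KIND_MIN = 1351;
const HEALTH_KIND_MAX = 1399;

// Kinds already assigned by this NIP.
const ASSIGNED_HEALTH_KINDS: Record<number, string> = {
  1351: "Weight",
  1352: "Height",
  1353: "Age",
  1354: "Gender",
  1355: "Fitness Level",
};

type KindStatus = "assigned" | "reserved" | "out-of-range";

function healthKindStatus(kind: number): KindStatus {
  const isInteger = Math.floor(kind) === kind;
  if (!isInteger || kind < HEALTH_KIND_MIN || kind > HEALTH_KIND_MAX) {
    return "out-of-range";
  }
  // Inside the range: either already taken or still free for an extension.
  return kind in ASSIGNED_HEALTH_KINDS ? "assigned" : "reserved";
}

console.log(healthKindStatus(1351)); // prints "assigned"
console.log(healthKindStatus(1360)); // prints "reserved"
console.log(healthKindStatus(1400)); // prints "out-of-range"
```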

Privacy Considerations

Health data is sensitive personal information. Clients implementing this NIP SHOULD:

- Make it clear to users when health data is being published

- Consider incorporating NIP-44 encryption for sensitive metrics

- Allow users to selectively share metrics with specific individuals

- Provide easy ways to delete previously published health data

NIP-101h.1: Weight

Description

This NIP defines the format for storing and sharing weight data on Nostr.

Event Kind: 1351

Content

The content field MUST contain the numeric weight value as a string.

Required Tags

- ['unit', 'kg' or 'lb'] - Unit of measurement

- ['t', 'health'] - Categorization tag

- ['t', 'weight'] - Specific metric tag

Optional Tags

- ['converted_value', value, unit] - Provides the weight in alternative units for interoperability

- ['timestamp', ISO8601 date] - When the weight was measured

Examples

```json
{
  "kind": 1351,
  "content": "70",
  "tags": [
    ["unit", "kg"],
    ["t", "health"],
    ["t", "weight"]
  ]
}
```

```json
{
  "kind": 1351,
  "content": "154",
  "tags": [
    ["unit", "lb"],
    ["t", "health"],
    ["t", "weight"],
    ["converted_value", "69.85", "kg"]
  ]
}
```

NIP-101h.2: Height

Status: Draft

Description

This NIP defines the format for storing and sharing height data on Nostr.

Event Kind: 1352

Content

The content field can use two formats:
- For metric height: A string containing the numeric height value in centimeters (cm)
- For imperial height: A JSON string with feet and inches properties

Required Tags

- ['t', 'health'] - Categorization tag

- ['t', 'height'] - Specific metric tag

- ['unit', 'cm' or 'imperial'] - Unit of measurement

Optional Tags

- ['converted_value', value, 'cm'] - Provides height in centimeters for interoperability when imperial is used

- ['timestamp', ISO8601-date] - When the height was measured

Examples

```jsx
// Example 1: Metric height

// Example 2: Imperial height with conversion
```

Implementation Notes

- Centimeters (cm) is the canonical unit for height interoperability

- When using imperial units, a conversion to centimeters SHOULD be provided

- Height values SHOULD be positive integers

- For maximum compatibility, clients SHOULD support both formats
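The two content formats and the centimeter conversion could look roughly like this. It is a sketch under stated assumptions: `ImperialHeight`, `imperialToCm`, and `buildHeightEvent` are hypothetical names, the converted value is rounded to a whole centimeter because height values SHOULD be positive integers, and signing fields are omitted.

```typescript
interface ImperialHeight {
  feet: number;
  inches: number;
}

interface HeightEvent {
  kind: number;
  content: string;
  tags: string[][];
}

// 1 ft = 30.48 cm and 1 in = 2.54 cm (exact definitions).
function imperialToCm(h: ImperialHeight): number {
  return Math.round(h.feet * 30.48 + h.inches * 2.54);
}

function buildHeightEvent(height: number | ImperialHeight): HeightEvent {
  if (typeof height === "number") {
    // Metric: content is the numeric value in centimeters, as a string.
    return {
      kind: 1352,
      content: String(height),
      tags: [["t", "health"], ["t", "height"], ["unit", "cm"]],
    };
  }
  // Imperial: content is a JSON string with feet and inches properties,
  // plus a converted_value tag in the canonical unit.
  return {
    kind: 1352,
    content: JSON.stringify({ feet: height.feet, inches: height.inches }),
    tags: [
      ["t", "health"],
      ["t", "height"],
      ["unit", "imperial"],
      ["converted_value", String(imperialToCm(height)), "cm"],
    ],
  };
}

console.log(buildHeightEvent(175).content); // prints 175
console.log(imperialToCm({ feet: 5, inches: 9 })); // prints 175
```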

NIP-101h.3: Age

Status: Draft

Description

This NIP defines the format for storing and sharing age data on Nostr.

Event Kind: 1353

Content

The content field MUST contain the numeric age value as a string.

Required Tags

- ['unit', 'years'] - Unit of measurement

- ['t', 'health'] - Categorization tag

- ['t', 'age'] - Specific metric tag

Optional Tags

- ['timestamp', ISO8601-date] - When the age was recorded

- ['dob', ISO8601-date] - Date of birth (if the user chooses to share it)

Examples

```jsx
// Example 1: Basic age

// Example 2: Age with DOB
```

Implementation Notes

- Age SHOULD be represented as a positive integer

- For privacy reasons, date of birth (dob) is optional

- Clients SHOULD consider updating age automatically if date of birth is known

- Age can be a sensitive metric and clients may want to consider encrypting this data
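Updating age automatically from a known date of birth might be done along these lines. This is a minimal sketch: `ageFromDob` and `buildAgeEvent` are hypothetical helpers, dates are compared in UTC for simplicity, and the dob tag is only attached when the caller opts in.

```typescript
// Whole years elapsed between an ISO 8601 date of birth and `now` (UTC).
function ageFromDob(dob: string, now: Date = new Date()): number {
  const birth = new Date(dob);
  let age = now.getUTCFullYear() - birth.getUTCFullYear();
  const beforeBirthday =
    now.getUTCMonth() < birth.getUTCMonth() ||
    (now.getUTCMonth() === birth.getUTCMonth() &&
      now.getUTCDate() < birth.getUTCDate());
  if (beforeBirthday) age -= 1;
  return age;
}

function buildAgeEvent(
  dob: string,
  now: Date = new Date(),
  shareDob: boolean = false,
): { kind: number; content: string; tags: string[][] } {
  const tags: string[][] = [
    ["unit", "years"],
    ["t", "health"],
    ["t", "age"],
  ];
  // Date of birth is optional for privacy reasons, so it is opt-in here.
  if (shareDob) tags.push(["dob", dob]);
  return { kind: 1353, content: String(ageFromDob(dob, now)), tags };
}

const asOf = new Date("2025-04-26T00:00:00Z");
console.log(ageFromDob("1990-06-15", asOf)); // prints 34
console.log(buildAgeEvent("1990-06-15", asOf).tags.length); // prints 3
```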

NIP-101h.4: Gender

Status: Draft

Description

This NIP defines the format for storing and sharing gender data on Nostr.

Event Kind: 1354

Content

The content field contains a string representing the user's gender.

Required Tags

- ['t', 'health'] - Categorization tag

- ['t', 'gender'] - Specific metric tag

Optional Tags

- ['timestamp', ISO8601-date] - When the gender was recorded

- ['preferred_pronouns', string] - User's preferred pronouns

Common Values

While any string value is permitted, the following common values are recommended for interoperability:
- male
- female
- non-binary
- other
- prefer-not-to-say

Examples

```jsx
// Example 1: Basic gender

// Example 2: Gender with pronouns
```

Implementation Notes

- Clients SHOULD allow free-form input for gender

- For maximum compatibility, clients SHOULD support the common values

- Gender is a sensitive personal attribute and clients SHOULD consider appropriate privacy controls

- Applications focusing on health metrics should be respectful of gender diversity

NIP-101h.5: Fitness Level

Status: Draft

Description

This NIP defines the format for storing and sharing fitness level data on Nostr.

Event Kind: 1355

Content

The content field contains a string representing the user's fitness level.

Required Tags

- ['t', 'health'] - Categorization tag

- ['t', 'fitness'] - Fitness category tag

- ['t', 'level'] - Specific metric tag

Optional Tags

- ['timestamp', ISO8601-date] - When the fitness level was recorded

- ['activity', activity-type] - Specific activity the fitness level relates to

- ['metrics', JSON-string] - Quantifiable fitness metrics used to determine level

Common Values

While any string value is permitted, the following common values are recommended for interoperability:
- beginner
- intermediate
- advanced
- elite
- professional

Examples

```jsx
// Example 1: Basic fitness level

// Example 2: Activity-specific fitness level with metrics
```

Implementation Notes

- Fitness level is subjective and may vary by activity

- The activity tag can be used to specify fitness level for different activities

- The metrics tag can provide objective measurements to support the fitness level

- Clients can extend this format to include activity-specific fitness assessments

- For general fitness apps, the simple beginner/intermediate/advanced scale is recommended
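An activity-specific fitness level with supporting metrics could be assembled as in the sketch below. The helper names and the metric keys (`pace_min_per_km`, `weekly_km`) are made up for illustration; the NIP only says that the metrics tag carries a JSON string.

```typescript
const COMMON_FITNESS_LEVELS: string[] = [
  "beginner",
  "intermediate",
  "advanced",
  "elite",
  "professional",
];

// Any string is permitted; this only reports whether a value is one of
// the recommended common values.
function isCommonFitnessLevel(level: string): boolean {
  return COMMON_FITNESS_LEVELS.indexOf(level) !== -1;
}

function buildFitnessLevelEvent(
  level: string,
  activity?: string,
  metrics?: Record<string, number>,
): { kind: number; content: string; tags: string[][] } {
  const tags: string[][] = [
    ["t", "health"],
    ["t", "fitness"],
    ["t", "level"],
  ];
  if (activity) tags.push(["activity", activity]);
  // The metrics tag value is a JSON string, so the object is serialized.
  if (metrics) tags.push(["metrics", JSON.stringify(metrics)]);
  return { kind: 1355, content: level, tags };
}

const running = buildFitnessLevelEvent("intermediate", "running", {
  pace_min_per_km: 5.5, // hypothetical metric key
  weekly_km: 30, // hypothetical metric key
});
console.log(running.tags[3]); // prints [ 'activity', 'running' ]
```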

-

@ 91bea5cd:1df4451c

2025-04-26 10:16:21

The Brazilian Legal Context and Consent

In the Brazilian legal system, the victim's consent can, in certain circumstances, remove the unlawfulness of an act that would otherwise constitute a crime (such as minor bodily injury, provided for in Art. 129 of the Penal Code). However, consent has clear limits: it is not valid for inalienable legal interests, such as life, and its effectiveness is questionable in cases of serious or very serious bodily injury.

Consensual BDSM practice sits in a complex zone. In theory, if both partners are capable adults who freely and knowingly consented to the acts performed, and those acts do not result in serious permanent injury or unconsented risk of death, there would be no crime. The challenge lies in proving that consent, especially if one of the parties later denies it or alleges coercion.

The Maria da Penha Law (Law No. 11.340/2006)

The Maria da Penha Law is a fundamental milestone in protecting women against domestic and family violence. It establishes mechanisms to curb and prevent such violence, defines its forms (physical, psychological, sexual, patrimonial, and moral), and provides for urgent protective measures.

Although essential, applying the law in BDSM contexts can be delicate. An allegation of violence by the woman, even if the injuries or situations arise from consensual practices, tends to receive priority attention from the authorities, given the presumption of vulnerability established by the law. This can create a scenario in which the male partner faces significant difficulty in demonstrating the consensual nature of the acts, especially if there is no robust evidence prepared in advance.

Other risks:

Serious or very serious bodily injury (Art. 129, §§ 1 and 2, Penal Code) cannot be justified by consent and may give rise to criminal prosecution.

Crimes against sexual dignity (Arts. 213 et seq. of the Penal Code) are subject to unconditional public prosecution; investigation and indictment do not depend on a complaint by the victim.

Risks of False Accusations and Future Allegations of Coercion

The risks for BDSM practitioners, especially for the partner who takes the dominant role or who inflicts pain or restraint (often, but not exclusively, the man), can arise on several fronts:

- External Accusations: Neighbors, relatives, or friends who are unaware of the consensual nature of the relationship may interpret sounds, marks, or behaviors as signs of abuse and report them to the authorities.

- Future Allegations by the Partner: After a troubled breakup, or out of revenge, regret, or a change in perspective, the partner may reinterpret past practices as abuse and seek redress or retaliation through a complaint. The allegation may be that consent never existed or was vitiated.

- Allegation of Coercion: One of the hardest claims to refute is that consent was obtained through coercion (physical, moral, psychological, or economic). The partner may allege, for example, that she felt pressured, intimidated, or dependent, and that her "yes" was not genuine. Proving the absence of coercion after the fact is extremely difficult.

- Male Naivety and Vulnerability: Many men, trusting the consensual dynamic and their partner, may neglect the need for precautions. The belief that "this would never happen to me", or a lack of knowledge about the legal implications and procedural weight of an accusation under the Maria da Penha Law, can leave them vulnerable. The presence of physical marks, even if consented to, can be used as evidence of assault, reversing the burden of proof in practice, even if not in legal theory.

Estratégias de Prevenção e Mitigação

Não existe um método infalível para evitar completamente o risco de uma falsa acusação, mas diversas medidas podem ser adotadas para construir um histórico de consentimento e reduzir vulnerabilidades:

- Comunicação Explícita e Contínua: A base de qualquer prática BDSM segura é a comunicação constante. Negociar limites, desejos, palavras de segurança ("safewords") e expectativas antes, durante e depois das cenas é crucial. Manter registros dessas negociações (e-mails, mensagens, diários compartilhados) pode ser útil.

-

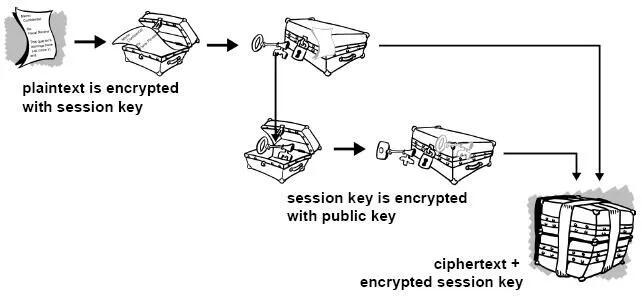

Documentação do Consentimento:

-

Contratos de Relacionamento/Cena: Embora a validade jurídica de "contratos BDSM" seja discutível no Brasil (não podem afastar normas de ordem pública), eles servem como forte evidência da intenção das partes, da negociação detalhada de limites e do consentimento informado. Devem ser claros, datados, assinados e, idealmente, reconhecidos em cartório (para prova de data e autenticidade das assinaturas).

-

Registros Audiovisuais: Gravar (com consentimento explícito para a gravação) discussões sobre consentimento e limites antes das cenas pode ser uma prova poderosa. Gravar as próprias cenas é mais complexo devido a questões de privacidade e potencial uso indevido, mas pode ser considerado em casos específicos, sempre com consentimento mútuo documentado para a gravação.

Importante: a gravação deve ser com ciência da outra parte, para não configurar violação da intimidade (art. 5º, X, da Constituição Federal e art. 20 do Código Civil).

-

-

Testemunhas: Em alguns contextos de comunidade BDSM, a presença de terceiros de confiança durante negociações ou mesmo cenas pode servir como testemunho, embora isso possa alterar a dinâmica íntima do casal.

- Estabelecimento Claro de Limites e Palavras de Segurança: Definir e respeitar rigorosamente os limites (o que é permitido, o que é proibido) e as palavras de segurança é fundamental. O desrespeito a uma palavra de segurança encerra o consentimento para aquele ato.

- Avaliação Contínua do Consentimento: O consentimento não é um cheque em branco; ele deve ser entusiástico, contínuo e revogável a qualquer momento. Verificar o bem-estar do parceiro durante a cena ("check-ins") é essencial.

- Discrição e Cuidado com Evidências Físicas: Ser discreto sobre a natureza do relacionamento pode evitar mal-entendidos externos. Após cenas que deixem marcas, é prudente que ambos os parceiros estejam cientes e de acordo, talvez documentando por fotos (com data) e uma nota sobre a consensualidade da prática que as gerou.

- Aconselhamento Jurídico Preventivo: Consultar um advogado especializado em direito de família e criminal, com sensibilidade para dinâmicas de relacionamento alternativas, pode fornecer orientação personalizada sobre as melhores formas de documentar o consentimento e entender os riscos legais específicos.

Important Notes

- No documentation replaces the need for real, free, informed, and continuous consent.

- Brazilian law protects "physical integrity" and "human dignity". Practices that result in serious injury, or that violate dignity without consent (or with vitiated consent), will be illegal regardless of any prior agreement.

- In the event of an accusation, robust documentation of consent does not guarantee acquittal, but it significantly strengthens the defense by helping to demonstrate the consensual nature of the relationship and the practices.

- A future allegation of coercion is particularly difficult to prevent with documents alone. A consistent history of open communication (WhatsApp, Telegram, e-mails), mutual respect, and the absence of dependence or excessive control in the relationship can help frame the dynamic as non-coercive.

- Care with Visible Marks and Serious Injuries: Practices that result in severe bruising or injuries can be interpreted as assault, even if consented to. Avoiding excess protects physical integrity and also heads off future legal questions.

What Vitiated Consent Is

In law, vitiated consent occurs when a person agrees to something but their will is not free or complete; that is, the consent exists formally but is defective for some reason.

The Brazilian Civil Code (Arts. 138 to 165) defines several forms of vitiated consent. The main ones are:

Error: The person is mistaken about what they are consenting to. (E.g., the person believes they will take part in light play but is in fact exposed to heavy practices.)

Fraud (dolo): The person is deliberately deceived into accepting something. (E.g., someone lies about what will happen during the practice.)

Coercion: The person is forced or threatened into consenting. (E.g., "If you don't accept, I'll break up with you"; strong emotional pressure can be seen as coercion.)

State of danger or lesion: The person accepts something in a situation of extreme need or through abuse of their vulnerability. (E.g., someone in a very fragile emotional state is induced to accept practices they would normally refuse.)

In the BDSM context this is even more delicate: even if the person has "signed" a contract or said "yes", if they later allege that their consent was given under fear, deception, or psychological pressure, the consent can be considered vitiated and therefore legally invalid.

This has two serious implications:

- The crime is not negated: If there is a defect, the consent is disregarded and the practice can be treated as an ordinary crime (bodily injury, rape, torture, etc.).

- The proof of consent needs to be solid: It must show that the person was informed, lucid, free, and under no coercion of any kind.

In short, vitiated consent is when a person formally agrees, but does so while deceived, forced, or pressured, which makes the consent useless for legal purposes.

Conclusion

Couples who practice consensual BDSM in Brazil navigate terrain that demands not only mutual trust and exceptional communication, but also a sharp awareness of the legal complexities and of the risks of misinterpretation or malicious accusations. Although BDSM is a legitimate expression of human sexuality, practicing it in Brazil requires redoubled responsibility. Having clear proof of consent, keeping communication open, and acting prudently are effective ways to protect oneself from false allegations and to preserve the freedom and safety of everyone involved. Although controversial laws such as the Maria da Penha Law are "vital" for protection against real violence, BDSM practitioners, and men in particular in this context, should adopt a proactive and prudent stance to mitigate the risks inherent in the potential misinterpretation or instrumentalization of these practices and laws, ensuring that the expression of their consent is safeguarded as far as possible.

Important: In Brazil, even with all of this, the Public Prosecutor's Office can bring charges for crimes such as serious bodily injury, rape, or torture, regardless of consent. Prudence in these practices is therefore fundamental.

Legal Notice: This article is for informational purposes only and does not constitute legal advice. Laws and interpretations can change, and every situation is unique. It is recommended to seek guidance from a qualified lawyer to discuss specific cases.

If you enjoyed this article, consider making a contribution; if you have a point relevant to the article, leave a comment.

-

@ 005bc4de:ef11e1a2

2025-04-29 16:08:56

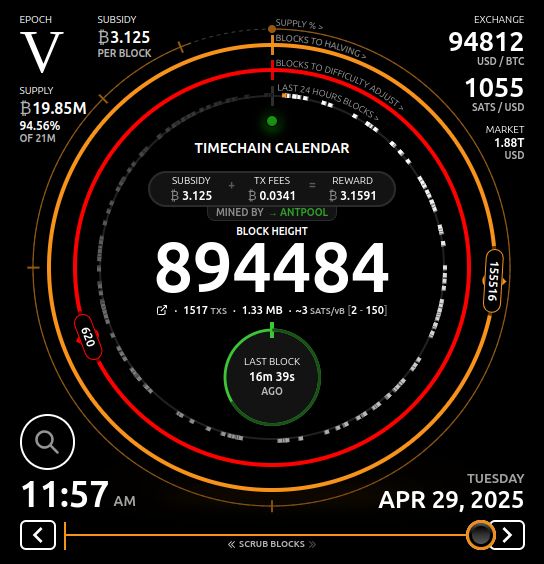

Trump Bitcoin Report Card - Day 100

For whatever reason, day 100 of a president's term has been deemed a milestone. So, it's time to check in with President Trump's bitcoin pledges and issue a report card.

Repo and prior reports:

- GitHub: https://github.com/crrdlx/trump-bitcoin-report-card

- First post: https://stacker.news/items/757211

- Progress Report 1: https://stacker.news/items/774165

- Day 1 Report Card: https://stacker.news/items/859475

- Day 100 Report Card: https://stacker.news/items/966434

Report Card

| | Pledge | Prior Grade | Current Grade |
|--|--|--|--|
| 1 | Fire SEC Chair Gary Gensler on day 1 | A | A |
| 2 | Commute the sentence of Ross Ulbricht on day 1 | A | A |
| 3 | Remove capital gains taxes on bitcoin transactions | F | F |
| 4 | Create and hodl a strategic bitcoin stockpile | D | C- |
| 5 | Prevent a CBDC during his presidency | B+ | A |
| 6 | Create a "bitcoin and crypto" advisory council | C- | C |
| 7 | Support the right to self-custody | D+ | B- |
| 8 | End the "war on crypto" | D+ | B+ |
| 9 | Mine all remaining bitcoin in the USA | C- | C |
| 10 | Make the US the "crypto capital of the planet" | C- | C+ |

Comments

Pledge 1 - SEC chair - (no change from earlier) - Gensler is out. This happened after the election but before Trump took office. With the writing on the wall, Gensler announced he would resign, Trump picked a new SEC head in Paul Atkins, and Gensler left office just before Trump was sworn in. The only reason an A+ was not awarded is that Trump wasn't given the chance to actually fire Gensler, because he quit. No doubt, though, his quitting was due to Trump and the threat of being sacked.

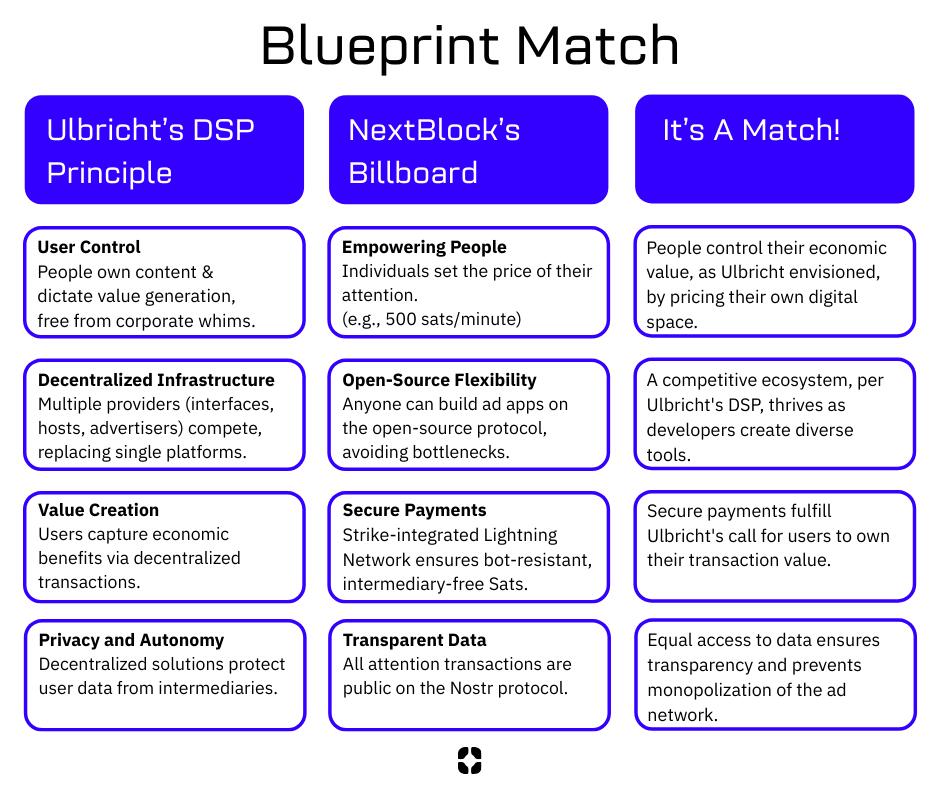

Day 100 Report Card Grade: A

Pledge 2 - free Ross - (no change from earlier) - Ross Ulbricht's sentence was just commuted. Going with "option 3" above, the pledge was kept. An A+ would have been a commutation yesterday or by noon today, but let's not split hairs. It's done.

Day 100 Report Card Grade: A

Pledge 3 - capital gains - This requires executive action, legislation, or both. There was no action. Executive action can be done with the stroke of a pen, but it was not. Legislation is tricky and time-consuming; however, there wasn't even a mention of this matter. This seems to have been on the back burner since statements such as this report in November. See Progress Report 1: https://stacker.news/items/774165 for more context.

Trump's main tax thrust has been the tariff, actually a tax increase, instead of a cut. Currently, the emphasis is on extending the "Trump tax cuts" and recently House Speaker Mike Johnson indicated such a bill would be ready by Memorial Day. Earlier in his term, there was more chatter about tax relief for bitcoin or cryptocurrency. There seems to be less chatter on this, or none at all, such as its absence in the "ready by Memorial Day" article.

Until tax reform is codified and signed, it isn't tax law and the old code still applies.

Day 100 Report Card Grade: F

Pledge 4 - bitcoin reserve - The initial grade was a C; it was dropped to a D mainly due to Trump's propensity for [alt]coinery, and now it's up to a C-.

Getting the grade back up into C-level at a C- was a little bumpy. On March 2, 2025, Trump posted that a U.S. Crypto Reserve would be created. This is what had been hoped for, except that the pledge was for a Bitcoin Reserve, not crypto. And secondly, he specifically named XRP, SOL, and ADA (but not BTC). Just a couple of hours later, likely in cleanup mode, he did add BTC (along with ETH) as "obviously" being included. So, the "Bitcoin Reserve" became a "Crypto Reserve."

Maybe still in "cleanup mode," Sec. of Commerce Howard Lutnick said bitcoin will hold "special status" in the reserve. Then, on March 6, an executive order made the U.S. Digital Asset Stockpile official. Again, "Bitcoin" was generalized until section 3 where the "Strategic Bitcoin Reserve" did come to official fruition.

The grade is only a C- because the only thing that happened was the naming of the stockpile. Indeed, it became official. But the "stockpile" was just BTC already held by the U.S. government. I think it's fair to say most bitcoiners would have preferred a statement about buying BTC. Other Trump bitcoin officials indicated acquiring "as much as we can get", which sounds great, but until it happens, is only words.

Day 100 Report Card Grade: C-

Pledge 5 - no CBDC - An executive order on January 23, 2025 forbade a CBDC in section 1, part v by "prohibiting the establishment, issuance, circulation, and use of a CBDC."

Day 100 Report Card Grade: A

Pledge 6 - advisory council - The Trump bitcoin or crypto team consists of the following: David Sacks as “crypto czar” and Bo Hines as executive director of the Presidential Council of Advisers for Digital Assets.

A White House Crypto Summit (see video) was held on March 7, 2025. In principle, the meeting was good, however, the summit seemed (a) to be very heavily "crypto" oriented, and (b) to largely be a meet-and-greet show.

Still, just the fact that such a show took place, inside the White House, reveals how far things have come and the change in climate. For the grade to go higher, more tangible things should take place over time.

Day 100 Report Card Grade: C

Pledge 7 - self-custody - There's been a bit of good news though on this front. First, the executive order above from January 23 stated in section 1, i, one of the goals was "...to maintain self-custody of digital assets." Also, the Phoenix wallet returned to the U.S. In 2024, both Phoenix and Wallet of Satoshi pulled out of the U.S. for fear of government crackdowns. The return of Phoenix, again, speaks to the difference in climate now and is a win for self-custody.

To rise above B-level, it would be good to see further clear assurance that people can self-custody, that developers can build self-custody tools, and that businesses can create self-custody products. Congressional action could also get this to an A.

Day 100 Report Card Grade: B-

Pledge 8 - end war on crypto - There has been improvement here. First, tangibly, SAB 121 was sent packing as SEC Commissioner Hester Peirce announced. Essentially, this removed a large regulatory burden. Commissioner Peirce also said ending the burdens will be a process to get out of the "mess". So, there's work to do. Also, hurdles were recently removed so that banks can now engage in bitcoin activity. This is both a symbolic and real change.

Somewhat ironically, Trump's own venture into cryptocurrency with his World Liberty Financial and the $TRUMP and $MELANIA tokens, roundly pooh-poohed by bitcoiners, might actually be beneficial in a way. The signal from the White House on all things cryptocurrency seems to be, "Do it."

The improvement is real, and the climate now seems very different from that under the previous administration and leaders who openly touted a war on crypto.

Day 100 Report Card Grade: B+

Pledge 9 - USA mining - As noted earlier, this is an impossible pledge. That said, things can be done to make America mining friendly. The U.S. holds an estimated 37 to 40% of Bitcoin hash rate, which is substantial. Plus, Trump, or the Trump family at least, has entered into bitcoin mining. With Hut 8, Eric Trump is heading "American Bitcoin" to mine BTC. Like the $TRUMP token, this conveys that bitcoin mining is a go in the USA.

Day 100 Report Card Grade: C

Pledge 10 - USA crypto capital - This pledge is closely aligned with pledges 8 and 9. If the war on crypto ends, the USA becomes more and more crypto and bitcoin friendly. And, if the hashrate stays high and even increases, that puts the USA at the center of it all. Most of the categories above have seen improvements, all of which help this last pledge. Trump's executive orders help this grade as well, as they move from only words spoken to official policy.

To get higher, the Bitcoin Strategic Reserve should move from a name-change only to acquiring more BTC. If the USA wants to be the world's crypto capital, being the leader in bitcoin ownership is the way to do it.

Day 100 Report Card Grade: C+

Sources

- Nashville speech - https://www.youtube.com/watch?v=EiEIfBatnH8

- CryptoPotato "top 8 promises" - https://x.com/Crypto_Potato/status/1854105511349584226

- CNBC - https://www.cnbc.com/2024/11/06/trump-claims-presidential-win-here-is-what-he-promised-the-crypto-industry-ahead-of-the-election.html

- BLOCKHEAD - https://www.blockhead.co/2024/11/07/heres-everything-trump-promised-to-the-crypto-industry/

- CoinTelegraph - https://cointelegraph.com/news/trump-promises-crypto-election-usa

- China vid - Bitcoin ATH and US Strategic Bitcoin Stockpile - https://njump.me/nevent1qqsgmmuqumhfktugtnx9kcsh3ap6v7ca4z8rgx79palz2qk0wzz5cksppemhxue69uhkummn9ekx7mp0qgszwaxc8j8e0zw9sdq59y43rykyx3wm0lcd2502xth699v0gxf0degrqsqqqqqpglusv6

- Capital gains tax - https://bravenewcoin.com/insights/trump-proposes-crypto-tax-cuts-targets-u-s-made-tokens-for-tax-exemption

Progress report 1

- Meeting with Brian Armstrong - https://www.wsj.com/livecoverage/stock-market-today-dow-sp500-nasdaq-live-11-18-2024/card/exclusive-trump-to-meet-privately-with-coinbase-ceo-brian-armstrong-DDkgF0xW1BW242rVeuqx

- Michael Saylor podcast - https://fountain.fm/episode/DHEzGE0f99QQqyM36nVr

- Gensler resigns - https://coinpedia.org/news/big-breaking-sec-chair-gary-gensler-officially-resigns/

Progress report 2

- Trump & Justin Sun - https://www.coindesk.com/business/2024/11/26/justin-sun-joins-donald-trumps-world-liberty-financial-as-adviser $30M investment: https://www.yahoo.com/news/trump-crypto-project-bust-until-154313241.html

- SEC chair - https://www.cnbc.com/2024/12/04/trump-plans-to-nominate-paul-atkins-as-sec-chair.html

- Crypto czar - https://www.zerohedge.com/crypto/trump-names-david-sacks-white-house-ai-crypto-czar

- Investigate Choke Point 2.0 - https://www.cryptopolitan.com/crypto-czar-investigate-choke-point/

- Crypto council head Bo Hines - https://cointelegraph.com/news/trump-appoints-bo-hines-head-crypto-council

- National hash rate: https://www.cryptopolitan.com/the-us-controls-40-of-bitcoins-hashrate/

- Senate committee https://coinjournal.net/news/rep-senator-cynthia-lummis-selected-to-chair-crypto-subcommittee/

- Treasury Sec. CBDC: https://decrypt.co/301444/trumps-treasury-pick-scott-bessant-pours-cold-water-on-us-digital-dollar-initiative

- National priority: https://cointelegraph.com/news/trump-executive-order-crypto-national-priority-bloomberg?utm_source=rss_feed&utm_medium=rss&utm_campaign=rss_partner_inbound

- $TRUMP https://njump.me/nevent1qqsffe0d7mgtu5jhasy4hmkcdy7wfrlcqwc4vf676hulvdn8uaqa3acpzamhxue69uhhyetvv9ujuurjd9kkzmpwdejhgtczyztpa8q038vw5xluyhnydj5u39d7cpssvuswjhhjqj8q42jh4ul3wqcyqqqqqqgmha026

- World Liberty buys alts: https://www.theblock.co/post/335779/trumps-world-liberty-buys-25-million-of-tokens-including-link-tron-aave-and-ethena?utm_source=rss&utm_medium=rss

- CFTC chair: https://cryptoslate.com/trump-appoints-crypto-advocate-caroline-pham-as-cftc-acting-chair/

- WLF buys wrapped BTC https://www.cryptopolitan.com/trump-buys-47-million-in-bitcoin/

- SEC turnover https://www.theblock.co/post/335944/trump-names-sec-commissioner-mark-uyeda-as-acting-chair-amid-a-crypto-regulatory-shift?utm_source=rss&utm_medium=rss

100 Days Report

- Davos speech "world capital of AI and crypto" https://coinpedia.org/news/big-breaking-president-trump-says-u-s-to-become-ai-and-crypto-superpower/

- SAB 121 gone, Hester Peirce heads task force & ends SAB 121?, war on crypto https://x.com/HesterPeirce/status/1882562977985114185 article: https://www.theblock.co/post/336761/days-after-gensler-leaves-sec-rescinds-controversial-crypto-accounting-guidance-sab-121?utm_source=twitter&utm_medium=social CoinTelegraph: https://cointelegraph.com/news/trump-executive-order-cbdc-ban-game-changer-us-institutional-crypto-adoption?utm_source=rss_feed&utm_medium=rss&utm_campaign=rss_partner_inbound

- Possible tax relief https://cryptodnes.bg/en/will-trumps-crypto-policies-lead-to-tax-relief-for-crypto-investors/

- War on crypto https://decrypt.co/304395/trump-sec-crypto-task-force-priorities-mess

- Trump "truths" 2/18 make usa #1 in crypto, "Trump effect" https://www.theblock.co/post/333137/ripple-ceo-says-75-of-open-roles-are-now-us-based-due-to-trump-effect and https://www.coindesk.com/markets/2025/01/06/ripples-garlinghouse-touts-trump-effect-amid-bump-in-u-s-deals

- Strategic reserve https://njump.me/nevent1qqsf89l74mqfkk74jqhjcqtwp5m970gedmtykn5uhl0vz9mhmrvvvgqpzamhxue69uhhyetvv9ujuurjd9kkzmpwdejhgtczyztpa8q038vw5xluyhnydj5u39d7cpssvuswjhhjqj8q42jh4ul3wqcyqqqqqqge7c74u and https://njump.me/nevent1qqswv50m7mc95m3saqce08jzpqc0vedw4avdk6zxy9axrn3hqet52xgpzamhxue69uhhyetvv9ujuurjd9kkzmpwdejhgtczyztpa8q038vw5xluyhnydj5u39d7cpssvuswjhhjqj8q42jh4ul3wqcyqqqqqqgpc7cp3

- Strategic reserve, bitcoin special https://www.thestreet.com/crypto/policy/bitcoin-to-hold-special-status-in-u-s-crypto-strategic-reserve

- Bitcoin reserve, crypto stockpile https://decrypt.co/309032/president-trump-signs-executive-order-to-establish-bitcoin-reserve-crypto-stockpile vid link https://njump.me/nevent1qqs09h58patpv9vfjpcss6v5nxv7m23u8g6g43nqvkjzgzescztucmspr9mhxue69uhhyetvv9ujumt0d4hhxarj9ecxjmnt9upzqtjzyy2ylrsceh5uj20j5e95v0e99s3epsvyctu2y0vrwyltvq33qvzqqqqqqyus4pu7

- Truth summit https://njump.me/nevent1qqswj6sv0wr4d4ppwzam5egr5k6nmqgjpwmsrlx2a7d4ndpfj0fxvcqpzamhxue69uhhyetvv9ujuurjd9kkzmpwdejhgtczyztpa8q038vw5xluyhnydj5u39d7cpssvuswjhhjqj8q42jh4ul3wqcyqqqqqqgu0mzzh and vid https://njump.me/nevent1qqsptn8c8wyuhlqtjr5u767x20q4dmjvxy28cdj30t4v9phhf6y5a5spzamhxue69uhhyetvv9ujuurjd9kkzmpwdejhgtczyztpa8q038vw5xluyhnydj5u39d7cpssvuswjhhjqj8q42jh4ul3wqcyqqqqqqgqklklu

- SEC chair confirmed https://beincrypto.com/sec-chair-paul-atkins-confirmed-senate-vote/

- pro bitcoin USA https://coinpedia.org/news/u-s-secretary-of-commerce-howard-lutnick-says-america-is-ready-for-bitcoin/

- tax cuts https://thehill.com/homenews/house/5272043-johnson-house-trump-agenda-memorial-day/

- "as much as we can get" https://cryptobriefing.com/trump-bitcoin-acquisition-strategy/

- ban on CBDC https://www.whitehouse.gov/presidential-actions/2025/01/strengthening-american-leadership-in-digital-financial-technology/

- Phoenix WoS leave https://www.coindesk.com/opinion/2024/04/29/wasabi-wallet-and-phoenix-leave-the-us-whats-next-for-non-custodial-crypto

- Trump hut 8 mining https://www.reuters.com/technology/hut-8-eric-trump-launch-bitcoin-mining-company-2025-03-31/

-

@ 8125b911:a8400883

2025-04-25 07:02:35

In Nostr, all data is stored as events. Decentralization is achieved by storing events on multiple relays, with signatures proving the ownership of these events. However, if you truly want to own your events, you should run your own relay to store them. Otherwise, if the relays you use fail or intentionally delete your events, you'll lose them forever.
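To make "events" and "signatures" a little more concrete, here is a minimal Python sketch of how an event id is derived under NIP-01: it is the SHA-256 hash of a compact JSON array built from the event's fields, and the signature is then made over that id. The pubkey, timestamp, and content below are hypothetical placeholders, and the sketch relies on Python's default JSON escaping, which should match NIP-01 for ordinary text but may differ for unusual control characters. Creating or verifying the Schnorr signature needs a secp256k1 library and is not shown.

```python
import hashlib
import json


def compute_event_id(pubkey: str, created_at: int, kind: int, tags: list, content: str) -> str:
    """Return the hex event id: SHA-256 over the NIP-01 serialization."""
    serialized = json.dumps(
        [0, pubkey, created_at, kind, tags, content],
        separators=(",", ":"),  # compact form, no extra whitespace
        ensure_ascii=False,     # keep non-ASCII characters as UTF-8
    )
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


# Hypothetical values, for illustration only.
example_pubkey = "ab" * 32  # a 32-byte public key written as 64 hex characters
event_id = compute_event_id(
    example_pubkey, 1745564555, 1, [], "Not your relay, not your events."
)
print(event_id)
```

Because the id depends on every field, changing even one character of the content produces a different id, which is why a relay cannot silently alter an event it stores.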

For most people, running a relay is complex and costly. To solve this issue, I developed nostr-relay-tray, a relay that can be easily run on a personal computer and accessed over the internet.

Project URL: https://github.com/CodyTseng/nostr-relay-tray

This article will guide you through using nostr-relay-tray to run your own relay.

Download

Download the installation package for your operating system from the GitHub Release Page.

| Operating System | File Format |
| --------------------- | ---------------------------------- |
| Windows | `nostr-relay-tray.Setup.x.x.x.exe` |
| macOS (Apple Silicon) | `nostr-relay-tray-x.x.x-arm64.dmg` |
| macOS (Intel) | `nostr-relay-tray-x.x.x.dmg` |
| Linux | You should know which one to use |

Installation

Since this app isn’t signed, you may encounter some obstacles during installation. Once installed, an ostrich icon will appear in the status bar. Click on the ostrich icon, and you'll see a menu where you can click the "Dashboard" option to open the relay's control panel for further configuration.

macOS Users:

- On first launch, go to "System Preferences > Security & Privacy" and click "Open Anyway."

- If you encounter a "damaged" message, run the following command in the terminal to remove the restrictions:

```bash
sudo xattr -rd com.apple.quarantine /Applications/nostr-relay-tray.app
```

Windows Users:

- On the security warning screen, click "More Info > Run Anyway."

Connecting

By default, nostr-relay-tray is only accessible locally through `ws://localhost:4869/`, which makes it quite limited. Therefore, we need to expose it to the internet.

In the control panel, click the "Proxy" tab and toggle the switch. You will then receive a "Public address" that you can use to access your relay from anywhere. It's that simple.

Next, add this address to your relay list and position it as high as possible in the list. Most clients prioritize connecting to relays that appear at the top of the list, and relays lower in the list are often ignored.
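If you want to check the relay yourself rather than through a client, the request a client sends is small. The sketch below builds a NIP-01 `REQ` message asking for recent events from one author; the subscription id and pubkey are hypothetical placeholders, and only the local address comes from the text above. Actually sending the message needs a WebSocket client library, which Python's standard library does not include, so that step is left out.

```python
import json


def build_req(subscription_id: str, author_pubkey: str, limit: int = 10) -> str:
    """Build a NIP-01 REQ message asking for recent events by one author."""
    filters = {"authors": [author_pubkey], "limit": limit}
    return json.dumps(["REQ", subscription_id, filters])


# Default local address mentioned in the text; swap in your "Public address".
LOCAL_RELAY_URL = "ws://localhost:4869/"

# Hypothetical subscription id and pubkey, for illustration only.
message = build_req("my-sub", "ab" * 32)
print(f"send to {LOCAL_RELAY_URL}: {message}")
```

Per NIP-01, a relay answers a `REQ` with `EVENT` messages for stored matches, followed by an `EOSE` message marking the end of stored events.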

Restrictions

Next, we need to set up some restrictions to prevent the relay from storing events that are irrelevant to you and wasting storage space. nostr-relay-tray allows for flexible and fine-grained configuration of which events to accept, but some of this is more complex and will not be covered here. If you're interested, you can explore this further later.

For now, I'll introduce a simple and effective strategy: WoT (Web of Trust). You can enable this feature in the "WoT & PoW" tab. Before enabling, you'll need to input your pubkey.

There's another important parameter, `Depth`, which represents the relationship depth between you and others. Someone you follow has a depth of 1, someone they follow has a depth of 2, and so on.

- Setting this parameter to 0 means your relay will only accept your own events.

- Setting it to 1 means your relay will accept events from you and the people you follow.

- Setting it to 2 means your relay will accept events from you, the people you follow, and the people they follow.

Currently, the maximum value for this parameter is 2.
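The depth rule can be pictured as a breadth-first walk over follow lists. The following Python sketch is only an illustration of that idea under assumed data, not how nostr-relay-tray implements it: the `follows` mapping, the names in it, and the `trusted_pubkeys` helper are all hypothetical.

```python
from collections import deque


def trusted_pubkeys(follows: dict[str, set[str]], me: str, max_depth: int) -> dict[str, int]:
    """Map each pubkey within max_depth follow hops of `me` to its depth."""
    depths = {me: 0}
    queue = deque([me])
    while queue:
        current = queue.popleft()
        if depths[current] == max_depth:
            continue  # do not expand beyond the configured depth
        for followed in follows.get(current, set()):
            if followed not in depths:
                depths[followed] = depths[current] + 1
                queue.append(followed)
    return depths


# Hypothetical follow graph: who follows whom.
follows = {
    "me": {"alice", "bob"},
    "alice": {"carol"},
    "carol": {"dave"},
}

for depth in (0, 1, 2):
    accepted = sorted(trusted_pubkeys(follows, "me", depth))
    print(depth, accepted)
```

With this graph, a depth of 2 accepts carol (followed by someone you follow) but not dave, who is three hops away.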

Conclusion

You've now successfully run your own relay and set a simple restriction to prevent it from storing irrelevant events.

If you encounter any issues during use, feel free to submit an issue on GitHub, and I'll respond as soon as possible.

Not your relay, not your events.

-

@ 7d33ba57:1b82db35

2025-04-29 14:14:11

Located in eastern Poland, Lublin is a city where history, culture, and youthful energy come together. Often called the "Gateway to the East," Lublin blends Gothic and Renaissance architecture, vibrant street life, and deep historical roots, especially as a center of Jewish heritage and intellectual life.

🏙️ Top Things to See in Lublin

🏰 Lublin Castle

- A striking hilltop castle with a neo-Gothic façade and a beautifully preserved Romanesque chapel (Chapel of the Holy Trinity)

- Don’t miss the frescoes inside—a rare mix of Byzantine and Western art styles

🚪 Old Town (Stare Miasto)

- Wander through cobblestone streets, pastel buildings, and arched gateways

- Filled with cozy cafes, galleries, and vibrant murals

- The Grodzka Gate symbolizes the passage between Christian and Jewish quarters

🕯️ Lublin’s Jewish Heritage

- Visit the Grodzka Gate – NN Theatre, a powerful memorial and museum telling the story of the once-vibrant Jewish community

- Nearby Majdanek Concentration Camp offers a sobering but important historical experience

🎭 Culture & Events

- Lublin is known for its festivals, like Carnaval Sztukmistrzów (Festival of Magicians and Street Performers) and the Night of Culture

- The city has a thriving theatre and music scene, supported by its large student population

🌳 Green Spaces

- Relax in Saski Garden, a peaceful park with walking paths and fountains

- Or take a walk along the Bystrzyca River for a quieter, more local feel

🍽️ Local Tastes

- Sample Polish classics like pierogi, żurek (sour rye soup), and bigos (hunter’s stew)

- Look for modern twists on traditional dishes in Lublin’s growing number of bistros and artisan cafés

🚆 Getting There

- Easy access by train or bus from Warsaw (2–2.5 hours)

- Compact center—easily walkable

-

@ e691f4df:1099ad65

2025-04-24 18:56:12

Viewing Bitcoin Through the Light of Awakening

Ankh & Ohm Capital’s Overview of the Psycho-Spiritual Nature of Bitcoin

Glossary:

I. Preface: The Logos of Our Logo

II. An Oracular Introduction

III. Alchemizing Greed

IV. Layers of Fractalized Thought

V. Permissionless Individuation

VI. Dispelling Paradox Through Resonance

VII. Ego Deflation

VIII. The Coin of Great Price

Preface: The Logos of Our Logo

Before we offer our lens on Bitcoin, it’s important to illuminate the meaning behind Ankh & Ohm’s name and symbol. These elements are not ornamental—they are foundational, expressing the cosmological principles that guide our work.

Our mission is to bridge the eternal with the practical. As a Bitcoin-focused family office and consulting firm, we understand capital not as an end, but as a tool—one that, when properly aligned, becomes a vehicle for divine order. We see Bitcoin not simply as a technological innovation but as an emanation of the Divine Logos—a harmonic expression of truth, transparency, and incorruptible structure. Both the beginning and the end, the Alpha and Omega.

The Ankh (☥), an ancient symbol of eternal life, is a key to the integration of opposites. It unites spirit and matter, force and form, continuity and change. It reminds us that capital, like Life, must not only be generative, but regenerative; sacred. Money must serve Life, not siphon from it.

The Ohm (Ω) holds a dual meaning. In physics, it denotes a unit of electrical resistance—the formative tension that gives energy coherence. In the Vedic tradition, Om (ॐ) is the primordial vibration—the sound from which all existence unfolds. Together, these symbols affirm a timeless truth: resistance and resonance are both sacred instruments of the Creator.

Ankh & Ohm, then, represents our striving for union, for harmony —between the flow of life and intentional structure, between incalculable abundance and measured restraint, between the lightbulb’s electrical impulse and its light-emitting filament. We stand at the threshold where intention becomes action, and where capital is not extracted, but cultivated in rhythm with the cosmos.

We exist to shepherd this transformation, as guides of this threshold —helping families, founders, and institutions align with a deeper order, where capital serves not as the prize, but as a pathway to collective Presence, Purpose, Peace and Prosperity.

An Oracular Introduction

Bitcoin is commonly understood as the first truly decentralized and secure form of digital money—a breakthrough in monetary sovereignty. But this view, while technically correct, is incomplete and spiritually shallow. Bitcoin is more than a tool for economic disruption. Bitcoin represents a mythic threshold: a symbol of the psycho-spiritual shift that many ancient traditions have long foretold.

For millennia, sages and seers have spoken of a coming Golden Age. In the Vedic Yuga cycles, in Plato’s Great Year, in the Eagle and Condor prophecies of the Americas—there exists a common thread: that humanity will emerge from darkness into a time of harmony, cooperation, and clarity. That the veil of illusion (maya, materiality) will thin, and reality will once again become transparent to the transcendent. In such an age, systems based on scarcity, deception, and centralization fall away. A new cosmology takes root—one grounded in balance, coherence, and sacred reciprocity.

But we must ask—how does such a shift happen? How do we cross from the age of scarcity, fear, and domination into one of coherence, abundance, and freedom?

One possible answer lies in the alchemy of incentive.

Bitcoin operates not just on the rules of computer science or Austrian economics, but on something far older and more subtle: the logic of transformation. It transmutes greed, a base instinct rooted in scarcity, into cooperation, transparency, and incorruptibility.

In this light, Bitcoin becomes more than code—it becomes a psychoactive protocol, one that rewires human behavior by aligning individual gain with collective integrity. It is not simply a new form of money. It is a new myth of value. A new operating system for human consciousness.

Bitcoin does not moralize. It harmonizes. It transforms the instinct for self-preservation into a pathway for planetary coherence.

Alchemizing Greed

At the heart of Bitcoin lies the ancient alchemical principle of transmutation: that which is base may be refined into gold.

Greed, long condemned as a vice, is not inherently evil. It is a distorted longing. A warped echo of the drive to preserve life. But in systems built on scarcity and deception, this longing calcifies into hoarding, corruption, and decay.

Bitcoin introduces a new game. A game with memory. A game that makes deception inefficient and truth profitable. It does not demand virtue—it encodes consequence. Its design does not suppress greed; it reprograms it.

In traditional models, game theory often illustrates the fragility of trust. The Prisoner’s Dilemma reveals how self-interest can sabotage collective well-being. But Bitcoin inverts this. It creates an environment where self-interest and integrity converge—where the most rational action is also the most truthful.

Its ledger, immutable and transparent, exposes manipulation for what it is: energetically wasteful and economically self-defeating. Dishonesty burns energy and yields nothing. The network punishes incoherence, not by decree, but by natural law.

This is the spiritual elegance of Bitcoin: it does not suppress greed—it transmutes it. It channels the drive for personal gain into the architecture of collective order. Miners compete not to dominate, but to validate. Nodes collaborate not through trust, but through mathematical proof.

This is not austerity. It is alchemy.

Greed, under Bitcoin, is refined. Tempered. Re-forged into a generative force—no longer parasitic, but harmonic.

Layers of Fractalized Thought Fragments

All living systems are layered. So is the cosmos. So is the human being. So is a musical scale.

At Bitcoin’s foundation lies the timechain: the pulsing, incorruptible record of truth. Like the heart, it beats steadily. Every block, like a pulse, affirms its life through continuity. The difficulty adjustment, Bitcoin’s internal calibration, functions like heart rate variability, adapting to pressure while preserving coherence.

Above this base layer is the Lightning Network—a second layer facilitating rapid, efficient transactions. It is the nervous system: transmitting energy, reducing latency, enabling real-time interaction across a distributed whole.

Beyond that, emerging tools like Fedimint and Cashu function like the capillaries—bringing vitality to the extremities, to those underserved by legacy systems. They empower the unbanked, the overlooked, the forgotten. Privacy and dignity in the palms of those the old system refused to see.

And then there is NOSTR—the decentralized protocol for communication and creation. It is the throat chakra, the vocal cords of the “freedom-tech” body. It reclaims speech from the algorithmic overlords, making expression sovereign once more. It is also the reproductive system, as it enables the propagation of novel ideas and protocols in fertile, uncensorable soil.

Each layer plays its part. Not in hierarchy, but in harmony. In holarchy. Bitcoin and other open source protocols grow not through exogenous command, but through endogenous coherence. Like cells in an organism. Like a song.

Imagine the cell as a piece of glass from a shattered holographic plate —by which its perspectival, moving image can be restructured from the single shard. DNA isn’t only a logical script of base pairs, but an evolving progressive song. Its lyrics imbued with wise reflections on relationships. The nucleus sings, the cell responds—not by command, but by memory. Life is not imposed; it is expressed. A reflection of a hidden pattern.

Bitcoin chants this. Each node, a living cell, holds the full timechain—Truth distributed, incorruptible. Remove one, and the whole remains. This isn’t redundancy. It’s a revelation on the power of protection in Truth.

Consensus is communion. Verification becomes a sacred rite—Truth made audible through math.

Not just the signal; the song. A web of self-expression woven from Truth.

No center, yet every point alive with the whole. Like Indra’s Net, each reflects all. This is more than currency and information exchange. It is memory; a self-remembering Mind, unfolding through consensus and code. A Mind reflecting the Truth of reality at the speed of thought.

Heuristics are mental shortcuts—efficient, imperfect, alive. Like cells, they must adapt or decay. To become unbiased is to have self-balancing heuristics which carry feedback loops within them: they listen to the environment, mutate when needed, and survive by resonance with reality. Mutation is not error, but evolution. Its rules are simple, but their expression is dynamic.

What persists is not rigidity, but pattern.

To think clearly is not necessarily to be certain, but to dissolve doubt by listening, adjusting, and evolving thought itself.

To understand Bitcoin is simply to listen—patiently, clearly, as one would to a familiar rhythm returning.

Permissionless Individuation

Bitcoin is a path. One that no one can walk for you.

Said differently, it is not a passive act. It cannot be spoon-fed. Like a spiritual path, it demands initiation, effort, and the willingness to question inherited beliefs.

Because Bitcoin is permissionless, no one can be forced to adopt it. One must choose to engage it—compelled by need, interest, or intuition. Each person who embarks undergoes their own version of the hero’s journey.

Carl Jung called this process Individuation—the reconciliation of fragmented psychic elements into a coherent, mature Self. Bitcoin mirrors this: it invites individuals to confront the unconscious assumptions of the fiat paradigm, and to re-integrate their relationship to time, value, and agency.

In Western traditions—alchemy, Christianity, Kabbalah—the individual is sacred, and salvation is personal. In Eastern systems—Daoism, Buddhism, the Vedas—the self is ultimately dissolved into the cosmic whole. Bitcoin, in a paradoxical way, echoes both: it empowers the individual, while aligning them with a holistic, transcendent order.

To truly see Bitcoin is to allow something false to die. A belief. A habit. A self-concept.

In that death—a space opens for deeper connection with the Divine itSelf.

In that dissolution, something luminous is reborn.

After the passing, Truth becomes resurrected.

Dispelling Paradox Through Resonance

There is a subtle paradox encoded into the hero’s journey: each starts in solitude, yet the awakening affects the collective.

No one can be forced into understanding Bitcoin. Like a spiritual truth, it must be seen. And yet, once seen, it becomes nearly impossible to unsee—and easier for others to glimpse. The pattern catches.

This phenomenon mirrors the concept of morphic resonance, as proposed and empirically tested by biologist Rupert Sheldrake. Once a critical mass of individuals begins to embody a new behavior or awareness, it becomes easier—instinctive—for others to follow suit. Like the proverbial hundredth monkey who begins to wash the fruit in the sea water, and suddenly, monkeys across islands begin doing the same—without ever meeting.

When enough individuals embody a pattern, it ripples outward. Not through propaganda, but through field effect and wave propagation. It becomes accessible, instinctive, familiar—even across great distance.

Bitcoin spreads in this way. Not through centralized broadcast, but through subtle resonance. Each new node, each individual who integrates the protocol into their life, strengthens the signal for others. The protocol doesn’t shout; it hums, oscillates, and vibrates: persistently, coherently, patiently.

One awakens. Another follows. The current builds. What was fringe becomes familiar. What was radical becomes obvious.

This is the sacred geometry of spiritual awakening. One awakens, another follows, and soon the fluidic current is strong enough to carry the rest. One becomes two, two become many, and eventually the many become One again. This tessellation reverberates through the human aura, not as ideology, but as perceivable pattern recognition.

Bitcoin’s most powerful marketing tool is truth. Its most compelling evangelist is reality. Its most unstoppable force is resonance.

Therefore, Bitcoin is not just financial infrastructure—it is psychic scaffolding. It is part of the subtle architecture through which new patterns of coherence ripple across the collective field.

The training wheels from which humanity learns to embody Peace and Prosperity.

Ego Deflation

The process of awakening is not linear, and its beginning is rarely gentle—it usually begins with disruption, with ego inflation and destruction.

To individuate is to shape a center; to recognize peripherals and create boundaries—to say, “I am.” But without integration, the ego tilts—collapsing into void or inflating into noise. Fiat reflects this pathology: scarcity hoarded, abundance simulated. Stagnation becomes disguised as safety, and inflation masquerades as growth.

In other words, to become whole, the ego must first rise—claiming agency, autonomy, and identity. However, when left unbalanced, it inflates, or implodes. It forgets its context. It begins to consume rather than connect. And so the process must reverse: what inflates must deflate.

In the fiat paradigm, this inflation is literal. More is printed, and ethos is diluted. Savings decay. Meaning erodes. Value is abstracted. The economy becomes bloated with inaudible noise. And like the psyche that refuses to confront its own shadow, it begins to collapse under the weight of its own illusions.

But under Bitcoin, time is honored. Value is preserved. Energy is not abstracted but grounded.

Bitcoin is inherently deflationary—in both economic and spiritual senses. With a fixed supply, it reveals what is truly scarce. Not money, not status—but the finite number of heartbeats we each carry.

To see Bitcoin is to feel that limit in one’s soul. To hold Bitcoin is to feel Time’s weight again. To sense the importance of Bitcoin is to feel the value of preserved, potential energy. It is to confront the reality that what matters cannot be printed, inflated, or faked. In this way, Bitcoin gently confronts the ego—not through punishment, but through clarity.

Deflation, rightly understood, is not collapse—it is refinement. It strips away illusion, bloat, and excess. It restores the clarity of essence.

Spiritually, this is liberation.

The Coin of Great Price

There is an ancient parable told by a wise man:

“The kingdom of heaven is like a merchant seeking fine pearls, who, upon finding one of great price, sold all he had and bought it.”

Bitcoin is such a pearl.

But the ledger is more than a chest full of treasure. It is a key to the heart of things.

It is not just software—it is sacrament.

A symbol of what cannot be corrupted. A mirror of divine order etched into code. A map back to the sacred center.

It reflects what endures. It encodes what cannot be falsified. It remembers what we forgot: that Truth, when aligned with form, becomes Light once again.

Its design is not arbitrary. It speaks the language of life itself—

The elliptic orbits of the planets mirrored in its cryptography,

The logarithmic spiral of the nautilus shell discloses its adoption rate,

The interconnectivity of mycelium in soil reflects the network of nodes in cyberspace,

A webbed breadth of neurons across synaptic space fires with each new confirmed transaction.

It is geometry in devotion. Stillness in motion.

It is the Logos clothed in protocol.

What this key unlocks is beyond external riches. It is the eternal gold within us.

Clarity. Sovereignty. The unshakeable knowing that what is real cannot be taken. That what is sacred was never for sale.

Bitcoin is not the destination.

It is the Path.

And we—when we are willing to see it—are the Temple it leads back to.

-

@ 40b9c85f:5e61b451

2025-04-24 15:27:02

Introduction

Data Vending Machines (DVMs) have emerged as a crucial component of the Nostr ecosystem, offering specialized computational services to clients across the network. As defined in NIP-90, DVMs operate on an apparently simple principle: "data in, data out." They provide a marketplace for data processing where users request specific jobs (like text translation, content recommendation, or AI text generation).

While DVMs have gained significant traction, the current specification faces challenges that hinder widespread adoption and consistent implementation. This article explores some ideas on how we can apply the reflection pattern, a well-established approach in RPC systems, to address these challenges and improve the DVM ecosystem's clarity, consistency, and usability.

The Current State of DVMs: Challenges and Limitations

The NIP-90 specification provides a broad framework for DVMs, but this flexibility has led to several issues:

1. Inconsistent Implementation

As noted by hzrd149 in "DVMs were a mistake", every DVM implementation tends to expect inputs in slightly different formats, even while ostensibly following the same specification. For example, a translation request DVM might expect an event ID in one particular format, while an LLM service could expect a "prompt" input that's not even specified in NIP-90.

2. Fragmented Specifications

The DVM specification reserves a range of event kinds for job requests (5000-5999, with the matching results in 6000-6999), each meant for different types of computational jobs. While creating sub-specifications for each job type is being explored as a possible solution for clarity, in a decentralized and permissionless landscape like Nostr, relying solely on specification enforcement won't be effective for creating a healthy ecosystem. A more comprehensible approach is needed that works with, rather than against, the open nature of the protocol.
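As a small illustration of how these kinds partition, the sketch below classifies an event kind and derives the result kind for a given request. The ranges follow NIP-90 (job requests in 5000-5999, job results in 6000-6999, feedback at 7000, with each result kind 1000 above its request kind); the function names are hypothetical and not part of any library.

```python
# NIP-90 kind ranges; the helper names below are illustrative only.
JOB_REQUEST_KINDS = range(5000, 6000)   # 5000..5999
JOB_RESULT_KINDS = range(6000, 7000)    # 6000..6999
JOB_FEEDBACK_KIND = 7000


def classify_kind(kind: int) -> str:
    """Return which NIP-90 role an event kind plays, or "other"."""
    if kind in JOB_REQUEST_KINDS:
        return "request"
    if kind in JOB_RESULT_KINDS:
        return "result"
    if kind == JOB_FEEDBACK_KIND:
        return "feedback"
    return "other"


def result_kind_for(request_kind: int) -> int:
    """A job result uses the request kind plus 1000."""
    if request_kind not in JOB_REQUEST_KINDS:
        raise ValueError(f"{request_kind} is not a job request kind")
    return request_kind + 1000
```

Knowing the kind tells a client which job family it is dealing with, but nothing about the parameters a particular DVM expects, which is the gap the rest of this article is concerned with.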

3. Ambiguous API Interfaces

There's no standardized way for clients to discover what parameters a specific DVM accepts, which are required versus optional, or what output format to expect. This creates uncertainty and forces developers to rely on documentation outside the protocol itself, if such documentation exists at all.

The Reflection Pattern: A Solution from RPC Systems

The reflection pattern in RPC systems offers a compelling solution to many of these challenges. At its core, reflection enables servers to provide metadata about their available services, methods, and data types at runtime, allowing clients to dynamically discover and interact with the server's API.

In established RPC frameworks like gRPC, reflection serves as a self-describing mechanism where services expose their interface definitions and requirements. In MCP, reflection is used to expose the capabilities of the server, such as tools, resources, and prompts. Clients can learn about available capabilities without prior knowledge, and systems can adapt to changes without requiring rebuilds or redeployments. This standardized introspection creates a unified way to query service metadata, making tools like grpcurl possible without requiring precompiled stubs.

How Reflection Could Transform the DVM Specification

By incorporating reflection principles into the DVM specification, we could create a more coherent and predictable ecosystem. DVMs already implement some sort of reflection through the use of 'nip90params', which allow clients to discover some parameters, constraints, and features of the DVMs, such as whether they accept encryption, nutzaps, etc. However, this approach could be expanded to provide more comprehensive self-description capabilities.

1. Defined Lifecycle Phases

Similar to the Model Context Protocol (MCP), DVMs could benefit from a clear lifecycle consisting of an initialization phase and an operation phase. During initialization, the client and DVM would negotiate capabilities and exchange metadata, with the DVM providing a JSON schema containing its input requirements. NIP-89 (or other) announcements can be used to bootstrap the discovery and negotiation process by providing the input schema directly. Then, during the operation phase, the client would interact with the DVM according to the negotiated schema and parameters.
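To make the initialization phase concrete, here is a minimal sketch of a NIP-89 announcement that carries an input schema, and of a client reading it back. Kind 31990 and the "d" and "k" tags come from NIP-89; the `inputSchema` field inside the content is an assumption made for illustration and is not defined by any NIP. The event is left unsigned (no id or sig).

```python
import json

HANDLER_INFO_KIND = 31990  # NIP-89 handler information event


def build_announcement(dvm_pubkey, job_kind, name, about, input_schema):
    """Build an unsigned NIP-89 announcement that embeds an input schema.

    "inputSchema" is a hypothetical content field used only in this sketch.
    """
    content = {"name": name, "about": about, "inputSchema": input_schema}
    return {
        "kind": HANDLER_INFO_KIND,
        "pubkey": dvm_pubkey,
        "tags": [["d", f"{name}-{job_kind}"], ["k", str(job_kind)]],
        "content": json.dumps(content),
    }


def read_input_schema(event):
    """Return the advertised schema, or None if the event does not carry one."""
    if event.get("kind") != HANDLER_INFO_KIND:
        return None
    try:
        metadata = json.loads(event["content"])
    except (KeyError, TypeError, json.JSONDecodeError):
        return None
    schema = metadata.get("inputSchema") if isinstance(metadata, dict) else None
    return schema if isinstance(schema, dict) else None
```

A client that finds a schema in the announcement can skip a separate schema request and move straight to the operation phase.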

2. Schema-Based Interactions

Rather than relying on rigid specifications for each job type, DVMs could self-advertise their schemas. This would allow clients to understand which parameters are required versus optional, what type validation should occur for inputs, what output formats to expect, and what payment flows are supported. By internalizing the input schema of the DVMs they wish to consume, clients gain clarity on how to interact effectively.
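A rough sketch of what schema-based validation could look like on the client side follows. It hand-rolls a very small subset of JSON Schema (`required`, `properties`, `type`, `enum`, and `additionalProperties: false`) so that it stays dependency-free; a real client would more likely use a full JSON Schema validator. The function name and the example schema are hypothetical.

```python
# Type predicates for the subset of JSON Schema types handled in this sketch.
_TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
}


def validate_params(schema: dict, params: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    properties = schema.get("properties", {})

    # Required parameters must be present.
    for name in schema.get("required", []):
        if name not in params:
            errors.append(f"missing required parameter: {name}")

    # Supplied parameters must match their declared type and enum, if any.
    for name, value in params.items():
        spec = properties.get(name)
        if spec is None:
            if schema.get("additionalProperties") is False:
                errors.append(f"unknown parameter: {name}")
            continue
        check = _TYPE_CHECKS.get(spec.get("type"))
        if check is not None and not check(value):
            errors.append(f"{name}: expected {spec['type']}")
        if "enum" in spec and value not in spec["enum"]:
            errors.append(f"{name}: must be one of {spec['enum']}")
    return errors
```

Because the schema comes from the DVM itself, the same client code can validate requests for any DVM without a job-specific sub-specification.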

3. Capability Negotiation

Capability negotiation would enable DVMs to advertise their supported features, such as encryption methods, payment options, or specialized functionalities. This would allow clients to adjust their interaction approach based on the specific capabilities of each DVM they encounter.
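One possible shape for this negotiation is sketched below: the client lists its options per feature in order of preference, the DVM advertises what it supports, and the client picks the first mutually supported option. The capability document layout and the option labels ("nip04", "nip44", "lightning", "nutzap") are assumptions chosen for illustration, not a defined format.

```python
def negotiate(client_prefs: dict, dvm_caps: dict) -> dict:
    """Pick, per feature, the client's most preferred option the DVM supports.

    client_prefs maps a feature name to options in preference order;
    dvm_caps maps a feature name to the options the DVM advertises.
    A feature with no common option maps to None.
    """
    agreed = {}
    for feature, preferred in client_prefs.items():
        offered = set(dvm_caps.get(feature, []))
        agreed[feature] = next((opt for opt in preferred if opt in offered), None)
    return agreed


# Hypothetical example data.
client_prefs = {
    "encryption": ["nip44", "nip04"],
    "payments": ["nutzap", "lightning"],
}
dvm_caps = {
    "encryption": ["nip04"],
    "payments": ["lightning", "nutzap"],
}
agreed = negotiate(client_prefs, dvm_caps)
```

A `None` entry tells the client that it shares no option with this DVM for that feature, so it can fall back to another DVM or proceed without the feature.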

Implementation Approach

While building DVMCP, I realized that the RPC reflection pattern used there could be beneficial for constructing DVMs in general. Since DVMs already follow an RPC style for their operation, and reflection is a natural extension of this approach, it could significantly enhance and clarify the DVM specification.

A reflection-enhanced DVM protocol could work as follows:

1. Discovery: Clients discover DVMs through existing NIP-89 application handlers. Input schemas could also be advertised in NIP-89 announcements, making the second step unnecessary.
2. Schema Request: Clients request the DVM's input schema for the specific job type they're interested in.
3. Validation: Clients validate their request against the provided schema before submission.
4. Operation: The job proceeds through the standard NIP-90 flow, but with clearer expectations on both sides.
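The four steps above can be sketched from the client's point of view as follows. This is a simplified illustration, not a reference implementation: it assumes the schema travels in the NIP-89 announcement under a hypothetical `inputSchema` content field, checks only required parameters, and produces an unsigned job request. The "i", "param", and "p" tags follow NIP-90.

```python
import json


def find_schema(announcements, job_kind):
    """Steps 1-2: find a DVM announcing job_kind together with an input schema.

    Returns (dvm_pubkey, schema) or None. Assumes each content field is
    valid JSON; a real client would handle malformed content.
    """
    for event in announcements:
        kinds = [t[1] for t in event.get("tags", []) if len(t) > 1 and t[0] == "k"]
        if str(job_kind) not in kinds:
            continue
        metadata = json.loads(event.get("content") or "{}")
        schema = metadata.get("inputSchema") if isinstance(metadata, dict) else None
        if schema:
            return event["pubkey"], schema
    return None


def build_job_request(job_kind, dvm_pubkey, schema, text, params):
    """Steps 3-4: check required parameters, then build an unsigned request."""
    missing = [k for k in schema.get("required", []) if k not in params]
    if missing:
        raise ValueError(f"missing required parameters: {missing}")
    tags = [["i", text, "text"], ["p", dvm_pubkey]]
    tags += [["param", key, str(value)] for key, value in params.items()]
    return {"kind": job_kind, "tags": tags, "content": ""}
```

Signing the event and publishing it to relays are unchanged from the standard NIP-90 flow and are left out here.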

Parallels with Other Protocols

This approach has proven successful in other contexts. The Model Context Protocol (MCP) implements a similar lifecycle with capability negotiation during initialization, allowing any client to communicate with any server as long as they adhere to the base protocol. MCP and DVM protocols share fundamental similarities: both aim to expose and consume computational resources through a JSON-RPC-like interface, albeit with specific differences.

gRPC's reflection service similarly allows clients to discover service definitions at runtime, enabling generic tools to work with any gRPC service without prior knowledge. In the REST API world, OpenAPI/Swagger specifications document interfaces in a way that makes them discoverable and testable.

DVMs would benefit from adopting these patterns while maintaining the decentralized, permissionless nature of Nostr.

Conclusion

I am not attempting to rewrite the DVM specification; rather, I want to explore some ideas that could help the ecosystem improve incrementally, reducing fragmentation and making it more comprehensible. By allowing DVMs to self-describe their interfaces, we could maintain the flexibility that makes Nostr powerful while providing the structure needed for interoperability.

For developers building DVM clients or libraries, this approach would simplify consumption by providing clear expectations about inputs and outputs. For DVM operators, it would establish a standard way to communicate their service's requirements without relying on external documentation.