-

@ 91bea5cd:1df4451c

2025-02-04 17:24:50

@ 91bea5cd:1df4451c

2025-02-04 17:24:50Definição de ULID:

Timestamp 48 bits, Aleatoriedade 80 bits Sendo Timestamp 48 bits inteiro, tempo UNIX em milissegundos, Não ficará sem espaço até o ano 10889 d.C. e Aleatoriedade 80 bits, Fonte criptograficamente segura de aleatoriedade, se possível.

Gerar ULID

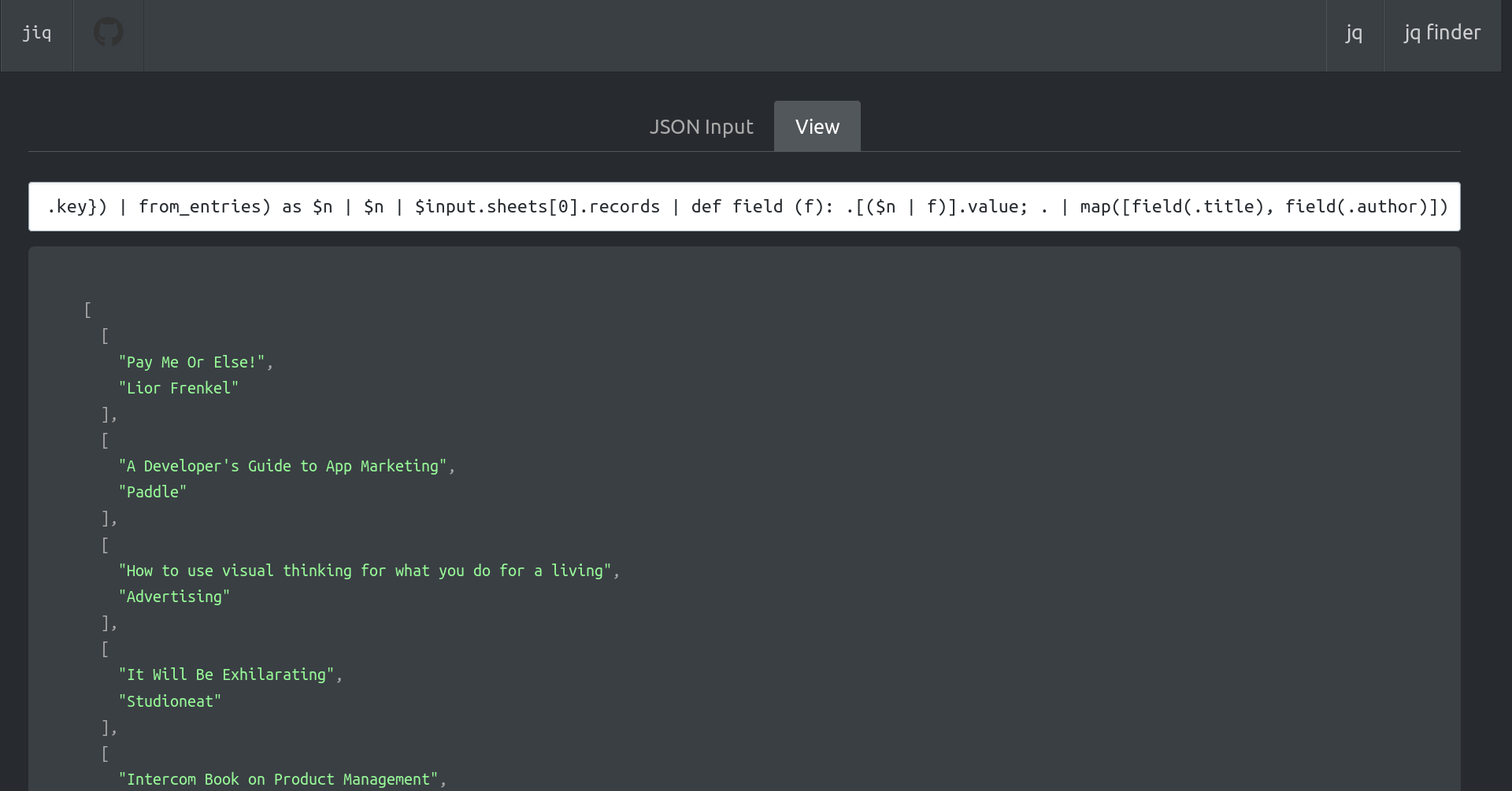

```sql

CREATE EXTENSION IF NOT EXISTS pgcrypto;

CREATE FUNCTION generate_ulid() RETURNS TEXT AS $$ DECLARE -- Crockford's Base32 encoding BYTEA = '0123456789ABCDEFGHJKMNPQRSTVWXYZ'; timestamp BYTEA = E'\000\000\000\000\000\000'; output TEXT = '';

unix_time BIGINT; ulid BYTEA; BEGIN -- 6 timestamp bytes unix_time = (EXTRACT(EPOCH FROM CLOCK_TIMESTAMP()) * 1000)::BIGINT; timestamp = SET_BYTE(timestamp, 0, (unix_time >> 40)::BIT(8)::INTEGER); timestamp = SET_BYTE(timestamp, 1, (unix_time >> 32)::BIT(8)::INTEGER); timestamp = SET_BYTE(timestamp, 2, (unix_time >> 24)::BIT(8)::INTEGER); timestamp = SET_BYTE(timestamp, 3, (unix_time >> 16)::BIT(8)::INTEGER); timestamp = SET_BYTE(timestamp, 4, (unix_time >> 8)::BIT(8)::INTEGER); timestamp = SET_BYTE(timestamp, 5, unix_time::BIT(8)::INTEGER);

-- 10 entropy bytes ulid = timestamp || gen_random_bytes(10);

-- Encode the timestamp output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 0) & 224) >> 5)); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 0) & 31))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 1) & 248) >> 3)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 1) & 7) << 2) | ((GET_BYTE(ulid, 2) & 192) >> 6))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 2) & 62) >> 1)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 2) & 1) << 4) | ((GET_BYTE(ulid, 3) & 240) >> 4))); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 3) & 15) << 1) | ((GET_BYTE(ulid, 4) & 128) >> 7))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 4) & 124) >> 2)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 4) & 3) << 3) | ((GET_BYTE(ulid, 5) & 224) >> 5))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 5) & 31)));

-- Encode the entropy output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 6) & 248) >> 3)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 6) & 7) << 2) | ((GET_BYTE(ulid, 7) & 192) >> 6))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 7) & 62) >> 1)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 7) & 1) << 4) | ((GET_BYTE(ulid, 8) & 240) >> 4))); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 8) & 15) << 1) | ((GET_BYTE(ulid, 9) & 128) >> 7))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 9) & 124) >> 2)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 9) & 3) << 3) | ((GET_BYTE(ulid, 10) & 224) >> 5))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 10) & 31))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 11) & 248) >> 3)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 11) & 7) << 2) | ((GET_BYTE(ulid, 12) & 192) >> 6))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 12) & 62) >> 1)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 12) & 1) << 4) | ((GET_BYTE(ulid, 13) & 240) >> 4))); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 13) & 15) << 1) | ((GET_BYTE(ulid, 14) & 128) >> 7))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 14) & 124) >> 2)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(ulid, 14) & 3) << 3) | ((GET_BYTE(ulid, 15) & 224) >> 5))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(ulid, 15) & 31)));

RETURN output; END $$ LANGUAGE plpgsql VOLATILE; ```

ULID TO UUID

```sql CREATE OR REPLACE FUNCTION parse_ulid(ulid text) RETURNS bytea AS $$ DECLARE -- 16byte bytes bytea = E'\x00000000 00000000 00000000 00000000'; v char[]; -- Allow for O(1) lookup of index values dec integer[] = ARRAY[ 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 255, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 255, 255, 255, 255, 255, 255, 255, 10, 11, 12, 13, 14, 15, 16, 17, 1, 18, 19, 1, 20, 21, 0, 22, 23, 24, 25, 26, 255, 27, 28, 29, 30, 31, 255, 255, 255, 255, 255, 255, 10, 11, 12, 13, 14, 15, 16, 17, 1, 18, 19, 1, 20, 21, 0, 22, 23, 24, 25, 26, 255, 27, 28, 29, 30, 31 ]; BEGIN IF NOT ulid ~* '^[0-7][0-9ABCDEFGHJKMNPQRSTVWXYZ]{25}$' THEN RAISE EXCEPTION 'Invalid ULID: %', ulid; END IF;

v = regexp_split_to_array(ulid, '');

-- 6 bytes timestamp (48 bits) bytes = SET_BYTE(bytes, 0, (dec[ASCII(v[1])] << 5) | dec[ASCII(v[2])]); bytes = SET_BYTE(bytes, 1, (dec[ASCII(v[3])] << 3) | (dec[ASCII(v[4])] >> 2)); bytes = SET_BYTE(bytes, 2, (dec[ASCII(v[4])] << 6) | (dec[ASCII(v[5])] << 1) | (dec[ASCII(v[6])] >> 4)); bytes = SET_BYTE(bytes, 3, (dec[ASCII(v[6])] << 4) | (dec[ASCII(v[7])] >> 1)); bytes = SET_BYTE(bytes, 4, (dec[ASCII(v[7])] << 7) | (dec[ASCII(v[8])] << 2) | (dec[ASCII(v[9])] >> 3)); bytes = SET_BYTE(bytes, 5, (dec[ASCII(v[9])] << 5) | dec[ASCII(v[10])]);

-- 10 bytes of entropy (80 bits); bytes = SET_BYTE(bytes, 6, (dec[ASCII(v[11])] << 3) | (dec[ASCII(v[12])] >> 2)); bytes = SET_BYTE(bytes, 7, (dec[ASCII(v[12])] << 6) | (dec[ASCII(v[13])] << 1) | (dec[ASCII(v[14])] >> 4)); bytes = SET_BYTE(bytes, 8, (dec[ASCII(v[14])] << 4) | (dec[ASCII(v[15])] >> 1)); bytes = SET_BYTE(bytes, 9, (dec[ASCII(v[15])] << 7) | (dec[ASCII(v[16])] << 2) | (dec[ASCII(v[17])] >> 3)); bytes = SET_BYTE(bytes, 10, (dec[ASCII(v[17])] << 5) | dec[ASCII(v[18])]); bytes = SET_BYTE(bytes, 11, (dec[ASCII(v[19])] << 3) | (dec[ASCII(v[20])] >> 2)); bytes = SET_BYTE(bytes, 12, (dec[ASCII(v[20])] << 6) | (dec[ASCII(v[21])] << 1) | (dec[ASCII(v[22])] >> 4)); bytes = SET_BYTE(bytes, 13, (dec[ASCII(v[22])] << 4) | (dec[ASCII(v[23])] >> 1)); bytes = SET_BYTE(bytes, 14, (dec[ASCII(v[23])] << 7) | (dec[ASCII(v[24])] << 2) | (dec[ASCII(v[25])] >> 3)); bytes = SET_BYTE(bytes, 15, (dec[ASCII(v[25])] << 5) | dec[ASCII(v[26])]);

RETURN bytes; END $$ LANGUAGE plpgsql IMMUTABLE;

CREATE OR REPLACE FUNCTION ulid_to_uuid(ulid text) RETURNS uuid AS $$ BEGIN RETURN encode(parse_ulid(ulid), 'hex')::uuid; END $$ LANGUAGE plpgsql IMMUTABLE; ```

UUID to ULID

```sql CREATE OR REPLACE FUNCTION uuid_to_ulid(id uuid) RETURNS text AS $$ DECLARE encoding bytea = '0123456789ABCDEFGHJKMNPQRSTVWXYZ'; output text = ''; uuid_bytes bytea = uuid_send(id); BEGIN

-- Encode the timestamp output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 0) & 224) >> 5)); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 0) & 31))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 1) & 248) >> 3)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 1) & 7) << 2) | ((GET_BYTE(uuid_bytes, 2) & 192) >> 6))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 2) & 62) >> 1)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 2) & 1) << 4) | ((GET_BYTE(uuid_bytes, 3) & 240) >> 4))); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 3) & 15) << 1) | ((GET_BYTE(uuid_bytes, 4) & 128) >> 7))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 4) & 124) >> 2)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 4) & 3) << 3) | ((GET_BYTE(uuid_bytes, 5) & 224) >> 5))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 5) & 31)));

-- Encode the entropy output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 6) & 248) >> 3)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 6) & 7) << 2) | ((GET_BYTE(uuid_bytes, 7) & 192) >> 6))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 7) & 62) >> 1)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 7) & 1) << 4) | ((GET_BYTE(uuid_bytes, 8) & 240) >> 4))); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 8) & 15) << 1) | ((GET_BYTE(uuid_bytes, 9) & 128) >> 7))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 9) & 124) >> 2)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 9) & 3) << 3) | ((GET_BYTE(uuid_bytes, 10) & 224) >> 5))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 10) & 31))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 11) & 248) >> 3)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 11) & 7) << 2) | ((GET_BYTE(uuid_bytes, 12) & 192) >> 6))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 12) & 62) >> 1)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 12) & 1) << 4) | ((GET_BYTE(uuid_bytes, 13) & 240) >> 4))); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 13) & 15) << 1) | ((GET_BYTE(uuid_bytes, 14) & 128) >> 7))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 14) & 124) >> 2)); output = output || CHR(GET_BYTE(encoding, ((GET_BYTE(uuid_bytes, 14) & 3) << 3) | ((GET_BYTE(uuid_bytes, 15) & 224) >> 5))); output = output || CHR(GET_BYTE(encoding, (GET_BYTE(uuid_bytes, 15) & 31)));

RETURN output; END $$ LANGUAGE plpgsql IMMUTABLE; ```

Gera 11 Digitos aleatórios: YBKXG0CKTH4

```sql -- Cria a extensão pgcrypto para gerar uuid CREATE EXTENSION IF NOT EXISTS pgcrypto;

-- Cria a função para gerar ULID CREATE OR REPLACE FUNCTION gen_lrandom() RETURNS TEXT AS $$ DECLARE ts_millis BIGINT; ts_chars TEXT; random_bytes BYTEA; random_chars TEXT; base32_chars TEXT := '0123456789ABCDEFGHJKMNPQRSTVWXYZ'; i INT; BEGIN -- Pega o timestamp em milissegundos ts_millis := FLOOR(EXTRACT(EPOCH FROM clock_timestamp()) * 1000)::BIGINT;

-- Converte o timestamp para base32 ts_chars := ''; FOR i IN REVERSE 0..11 LOOP ts_chars := ts_chars || substr(base32_chars, ((ts_millis >> (5 * i)) & 31) + 1, 1); END LOOP; -- Gera 10 bytes aleatórios e converte para base32 random_bytes := gen_random_bytes(10); random_chars := ''; FOR i IN 0..9 LOOP random_chars := random_chars || substr(base32_chars, ((get_byte(random_bytes, i) >> 3) & 31) + 1, 1); IF i < 9 THEN random_chars := random_chars || substr(base32_chars, (((get_byte(random_bytes, i) & 7) << 2) | (get_byte(random_bytes, i + 1) >> 6)) & 31 + 1, 1); ELSE random_chars := random_chars || substr(base32_chars, ((get_byte(random_bytes, i) & 7) << 2) + 1, 1); END IF; END LOOP; -- Concatena o timestamp e os caracteres aleatórios RETURN ts_chars || random_chars;END; $$ LANGUAGE plpgsql; ```

Exemplo de USO

```sql -- Criação da extensão caso não exista CREATE EXTENSION IF NOT EXISTS pgcrypto; -- Criação da tabela pessoas CREATE TABLE pessoas ( ID UUID DEFAULT gen_random_uuid ( ) PRIMARY KEY, nome TEXT NOT NULL );

-- Busca Pessoa na tabela SELECT * FROM "pessoas" WHERE uuid_to_ulid ( ID ) = '252FAC9F3V8EF80SSDK8PXW02F'; ```

Fontes

- https://github.com/scoville/pgsql-ulid

- https://github.com/geckoboard/pgulid

-

@ 0fa80bd3:ea7325de

2025-01-29 05:55:02

@ 0fa80bd3:ea7325de

2025-01-29 05:55:02The land that belongs to the indigenous peoples of Russia has been seized by a gang of killers who have unleashed a war of extermination. They wipe out anyone who refuses to conform to their rules. Those who disagree and stay behind are tortured and killed in prisons and labor camps. Those who flee lose their homeland, dissolve into foreign cultures, and fade away. And those who stand up to protect their people are attacked by the misled and deceived. The deceived die for the unchecked greed of a single dictator—thousands from both sides, people who just wanted to live, raise their kids, and build a future.

Now, they are forced to make an impossible choice: abandon their homeland or die. Some perish on the battlefield, others lose themselves in exile, stripped of their identity, scattered in a world that isn’t theirs.

There’s been endless debate about how to fix this, how to clear the field of the weeds that choke out every new sprout, every attempt at change. But the real problem? We can’t play by their rules. We can’t speak their language or use their weapons. We stand for humanity, and no matter how righteous our cause, we will not multiply suffering. Victory doesn’t come from matching the enemy—it comes from staying ahead, from using tools they haven’t mastered yet. That’s how wars are won.

Our only resource is the will of the people to rewrite the order of things. Historian Timothy Snyder once said that a nation cannot exist without a city. A city is where the most active part of a nation thrives. But the cities are occupied. The streets are watched. Gatherings are impossible. They control the money. They control the mail. They control the media. And any dissent is crushed before it can take root.

So I started asking myself: How do we stop this fragmentation? How do we create a space where people can rebuild their connections when they’re ready? How do we build a self-sustaining network, where everyone contributes and benefits proportionally, while keeping their freedom to leave intact? And more importantly—how do we make it spread, even in occupied territory?

In 2009, something historic happened: the internet got its own money. Thanks to Satoshi Nakamoto, the world took a massive leap forward. Bitcoin and decentralized ledgers shattered the idea that money must be controlled by the state. Now, to move or store value, all you need is an address and a key. A tiny string of text, easy to carry, impossible to seize.

That was the year money broke free. The state lost its grip. Its biggest weapon—physical currency—became irrelevant. Money became purely digital.

The internet was already a sanctuary for information, a place where people could connect and organize. But with Bitcoin, it evolved. Now, value itself could flow freely, beyond the reach of authorities.

Think about it: when seedlings are grown in controlled environments before being planted outside, they get stronger, survive longer, and bear fruit faster. That’s how we handle crops in harsh climates—nurture them until they’re ready for the wild.

Now, picture the internet as that controlled environment for ideas. Bitcoin? It’s the fertile soil that lets them grow. A testing ground for new models of interaction, where concepts can take root before they move into the real world. If nation-states are a battlefield, locked in a brutal war for territory, the internet is boundless. It can absorb any number of ideas, any number of people, and it doesn’t run out of space.

But for this ecosystem to thrive, people need safe ways to communicate, to share ideas, to build something real—without surveillance, without censorship, without the constant fear of being erased.

This is where Nostr comes in.

Nostr—"Notes and Other Stuff Transmitted by Relays"—is more than just a messaging protocol. It’s a new kind of city. One that no dictator can seize, no corporation can own, no government can shut down.

It’s built on decentralization, encryption, and individual control. Messages don’t pass through central servers—they are relayed through independent nodes, and users choose which ones to trust. There’s no master switch to shut it all down. Every person owns their identity, their data, their connections. And no one—no state, no tech giant, no algorithm—can silence them.

In a world where cities fall and governments fail, Nostr is a city that cannot be occupied. A place for ideas, for networks, for freedom. A city that grows stronger the more people build within it.

-

@ 78b3c1ed:5033eea9

2025-04-27 01:48:48

@ 78b3c1ed:5033eea9

2025-04-27 01:48:48※スポットライトから移植した古い記事です。参考程度に。

これを参考にしてUmbrelのBitcoin Nodeをカスタムsignetノードにする。 以下メモ書きご容赦。備忘録程度に書き留めました。

メインネットとは共存できない。Bitcoinに依存する全てのアプリを削除しなければならない。よって実験機に導入すべき。

<手順>

1.Umbrel Bitcoin Nodeアプリのadvance settingでsignetを選択。

2.CLI appスクリプトでbitcoinを止める。

cd umbrel/scripts ./app stop bitcoin3.bitcoin.conf, umbrel-bitcoin.conf以外を削除ディレクトリの場所は ~/umbrel/app-data/bitcoin/data/bitcoin4.umbrel-bitcoin.confをsu権限で編集。末尾にsignetchallengeを追加。 ``` [signet] bind=0.0.0.0:8333 bind=10.21.21.8:8334=onion51,21,<公開鍵>,51,ae

signetchallenge=5121<公開鍵>51ae

5.appスクリプトでbitcoinを開始。cd ~/umbrel/scripts ./app start bitcoin ``` 6.適当にディレクトリを作りgithubからbitcoindのソースをクローン。7.bitcoindのバイナリをダウンロード、bitcoin-cliおよびbitcoin-utilを~/.local/binに置く。6.のソースからビルドしても良い。ビルド方法は自分で調べて。

8.bitcondにマイニング用のウォレットを作成 ``` alias bcli='docker exec -it bitcoin_bitcoind_1 bitcoin-cli -signet -rpcconnect=10.21.21.8 -rpcport=8332 -rpcuser=umbrel -rpcpassword=<パスワード>'

ウォレットを作る。

bcli createwallet "mining" false true "" false false

秘密鍵をインポート

bcli importprivkey "<秘密鍵>"

RPCパスワードは以下で確認cat ~/umbrel/.env | grep BITCOIN_RPC_PASS9.ソースにあるbitcoin/contrib/signet/minerスクリプトを使ってマイニングcd <ダウンロードしたディレクトリ>/bitcoin/contrib/signet難易度の算出

./miner \ --cli="bitcoin-cli -signet -rpcconnect=10.21.21.8 -rpcport=8332 -rpcuser=umbrel -rpcpassword=<パスワード>" calibrate \ --grind-cmd="bitcoin-util grind" --seconds 30 ★私の環境で30秒指定したら nbits=1d4271e7 と算出された。実際にこれで動かすと2分30になるけど...

ジェネシスブロック生成

./miner \ --cli="bitcoin-cli -signet -rpcconnect=10.21.21.8 -rpcport=8332 -rpcuser=umbrel -rpcpassword=<パスワード>" generate \ --address <ビットコインアドレス> \ --grind-cmd="bitcoin-util grind" --nbits=1d4271e7 \ --set-block-time=$(date +%s)

継続的にマイニング

./miner \ --cli="bitcoin-cli -signet -rpcconnect=10.21.21.8 -rpcport=8332 -rpcuser=umbrel -rpcpassword=<パスワード>" generate \ --address <ビットコインアドレス> \ --grind-cmd="bitcoin-util grind" --nbits=1d4271e7 \ --ongoing ``` ここまでやればカスタムsignetでビットコインノードが稼働する。

-

@ 9e69e420:d12360c2

2025-01-26 15:26:44

@ 9e69e420:d12360c2

2025-01-26 15:26:44Secretary of State Marco Rubio issued new guidance halting spending on most foreign aid grants for 90 days, including military assistance to Ukraine. This immediate order shocked State Department officials and mandates “stop-work orders” on nearly all existing foreign assistance awards.

While it allows exceptions for military financing to Egypt and Israel, as well as emergency food assistance, it restricts aid to key allies like Ukraine, Jordan, and Taiwan. The guidance raises potential liability risks for the government due to unfulfilled contracts.

A report will be prepared within 85 days to recommend which programs to continue or discontinue.

-

@ 40b9c85f:5e61b451

2025-04-24 15:27:02

@ 40b9c85f:5e61b451

2025-04-24 15:27:02Introduction

Data Vending Machines (DVMs) have emerged as a crucial component of the Nostr ecosystem, offering specialized computational services to clients across the network. As defined in NIP-90, DVMs operate on an apparently simple principle: "data in, data out." They provide a marketplace for data processing where users request specific jobs (like text translation, content recommendation, or AI text generation)

While DVMs have gained significant traction, the current specification faces challenges that hinder widespread adoption and consistent implementation. This article explores some ideas on how we can apply the reflection pattern, a well established approach in RPC systems, to address these challenges and improve the DVM ecosystem's clarity, consistency, and usability.

The Current State of DVMs: Challenges and Limitations

The NIP-90 specification provides a broad framework for DVMs, but this flexibility has led to several issues:

1. Inconsistent Implementation

As noted by hzrd149 in "DVMs were a mistake" every DVM implementation tends to expect inputs in slightly different formats, even while ostensibly following the same specification. For example, a translation request DVM might expect an event ID in one particular format, while an LLM service could expect a "prompt" input that's not even specified in NIP-90.

2. Fragmented Specifications

The DVM specification reserves a range of event kinds (5000-6000), each meant for different types of computational jobs. While creating sub-specifications for each job type is being explored as a possible solution for clarity, in a decentralized and permissionless landscape like Nostr, relying solely on specification enforcement won't be effective for creating a healthy ecosystem. A more comprehensible approach is needed that works with, rather than against, the open nature of the protocol.

3. Ambiguous API Interfaces

There's no standardized way for clients to discover what parameters a specific DVM accepts, which are required versus optional, or what output format to expect. This creates uncertainty and forces developers to rely on documentation outside the protocol itself, if such documentation exists at all.

The Reflection Pattern: A Solution from RPC Systems

The reflection pattern in RPC systems offers a compelling solution to many of these challenges. At its core, reflection enables servers to provide metadata about their available services, methods, and data types at runtime, allowing clients to dynamically discover and interact with the server's API.

In established RPC frameworks like gRPC, reflection serves as a self-describing mechanism where services expose their interface definitions and requirements. In MCP reflection is used to expose the capabilities of the server, such as tools, resources, and prompts. Clients can learn about available capabilities without prior knowledge, and systems can adapt to changes without requiring rebuilds or redeployments. This standardized introspection creates a unified way to query service metadata, making tools like

grpcurlpossible without requiring precompiled stubs.How Reflection Could Transform the DVM Specification

By incorporating reflection principles into the DVM specification, we could create a more coherent and predictable ecosystem. DVMs already implement some sort of reflection through the use of 'nip90params', which allow clients to discover some parameters, constraints, and features of the DVMs, such as whether they accept encryption, nutzaps, etc. However, this approach could be expanded to provide more comprehensive self-description capabilities.

1. Defined Lifecycle Phases

Similar to the Model Context Protocol (MCP), DVMs could benefit from a clear lifecycle consisting of an initialization phase and an operation phase. During initialization, the client and DVM would negotiate capabilities and exchange metadata, with the DVM providing a JSON schema containing its input requirements. nip-89 (or other) announcements can be used to bootstrap the discovery and negotiation process by providing the input schema directly. Then, during the operation phase, the client would interact with the DVM according to the negotiated schema and parameters.

2. Schema-Based Interactions

Rather than relying on rigid specifications for each job type, DVMs could self-advertise their schemas. This would allow clients to understand which parameters are required versus optional, what type validation should occur for inputs, what output formats to expect, and what payment flows are supported. By internalizing the input schema of the DVMs they wish to consume, clients gain clarity on how to interact effectively.

3. Capability Negotiation

Capability negotiation would enable DVMs to advertise their supported features, such as encryption methods, payment options, or specialized functionalities. This would allow clients to adjust their interaction approach based on the specific capabilities of each DVM they encounter.

Implementation Approach

While building DVMCP, I realized that the RPC reflection pattern used there could be beneficial for constructing DVMs in general. Since DVMs already follow an RPC style for their operation, and reflection is a natural extension of this approach, it could significantly enhance and clarify the DVM specification.

A reflection enhanced DVM protocol could work as follows: 1. Discovery: Clients discover DVMs through existing NIP-89 application handlers, input schemas could also be advertised in nip-89 announcements, making the second step unnecessary. 2. Schema Request: Clients request the DVM's input schema for the specific job type they're interested in 3. Validation: Clients validate their request against the provided schema before submission 4. Operation: The job proceeds through the standard NIP-90 flow, but with clearer expectations on both sides

Parallels with Other Protocols

This approach has proven successful in other contexts. The Model Context Protocol (MCP) implements a similar lifecycle with capability negotiation during initialization, allowing any client to communicate with any server as long as they adhere to the base protocol. MCP and DVM protocols share fundamental similarities, both aim to expose and consume computational resources through a JSON-RPC-like interface, albeit with specific differences.

gRPC's reflection service similarly allows clients to discover service definitions at runtime, enabling generic tools to work with any gRPC service without prior knowledge. In the REST API world, OpenAPI/Swagger specifications document interfaces in a way that makes them discoverable and testable.

DVMs would benefit from adopting these patterns while maintaining the decentralized, permissionless nature of Nostr.

Conclusion

I am not attempting to rewrite the DVM specification; rather, explore some ideas that could help the ecosystem improve incrementally, reducing fragmentation and making the ecosystem more comprehensible. By allowing DVMs to self describe their interfaces, we could maintain the flexibility that makes Nostr powerful while providing the structure needed for interoperability.

For developers building DVM clients or libraries, this approach would simplify consumption by providing clear expectations about inputs and outputs. For DVM operators, it would establish a standard way to communicate their service's requirements without relying on external documentation.

I am currently developing DVMCP following these patterns. Of course, DVMs and MCP servers have different details; MCP includes capabilities such as tools, resources, and prompts on the server side, as well as 'roots' and 'sampling' on the client side, creating a bidirectional way to consume capabilities. In contrast, DVMs typically function similarly to MCP tools, where you call a DVM with an input and receive an output, with each job type representing a different categorization of the work performed.

Without further ado, I hope this article has provided some insight into the potential benefits of applying the reflection pattern to the DVM specification.

-

@ 9e69e420:d12360c2

2025-01-25 22:16:54

@ 9e69e420:d12360c2

2025-01-25 22:16:54President Trump plans to withdraw 20,000 U.S. troops from Europe and expects European allies to contribute financially to the remaining military presence. Reported by ANSA, Trump aims to deliver this message to European leaders since taking office. A European diplomat noted, “the costs cannot be borne solely by American taxpayers.”

The Pentagon hasn't commented yet. Trump has previously sought lower troop levels in Europe and had ordered cuts during his first term. The U.S. currently maintains around 65,000 troops in Europe, with total forces reaching 100,000 since the Ukraine invasion. Trump's new approach may shift military focus to the Pacific amid growing concerns about China.

-

@ b17fccdf:b7211155

2025-01-21 17:02:21

@ b17fccdf:b7211155

2025-01-21 17:02:21The past 26 August, Tor introduced officially a proof-of-work (PoW) defense for onion services designed to prioritize verified network traffic as a deterrent against denial of service (DoS) attacks.

~ > This feature at the moment, is deactivate by default, so you need to follow these steps to activate this on a MiniBolt node:

- Make sure you have the latest version of Tor installed, at the time of writing this post, which is v0.4.8.6. Check your current version by typing

tor --versionExample of expected output:

Tor version 0.4.8.6. This build of Tor is covered by the GNU General Public License (https://www.gnu.org/licenses/gpl-3.0.en.html) Tor is running on Linux with Libevent 2.1.12-stable, OpenSSL 3.0.9, Zlib 1.2.13, Liblzma 5.4.1, Libzstd N/A and Glibc 2.36 as libc. Tor compiled with GCC version 12.2.0~ > If you have v0.4.8.X, you are OK, if not, type

sudo apt update && sudo apt upgradeand confirm to update.- Basic PoW support can be checked by running this command:

tor --list-modulesExpected output:

relay: yes dirauth: yes dircache: yes pow: **yes**~ > If you have

pow: yes, you are OK- Now go to the torrc file of your MiniBolt and add the parameter to enable PoW for each hidden service added

sudo nano /etc/tor/torrcExample:

```

Hidden Service BTC RPC Explorer

HiddenServiceDir /var/lib/tor/hidden_service_btcrpcexplorer/ HiddenServiceVersion 3 HiddenServicePoWDefensesEnabled 1 HiddenServicePort 80 127.0.0.1:3002 ```

~ > Bitcoin Core and LND use the Tor control port to automatically create the hidden service, requiring no action from the user. We have submitted a feature request in the official GitHub repositories to explore the need for the integration of Tor's PoW defense into the automatic creation process of the hidden service. You can follow them at the following links:

- Bitcoin Core: https://github.com/lightningnetwork/lnd/issues/8002

- LND: https://github.com/bitcoin/bitcoin/issues/28499

More info:

- https://blog.torproject.org/introducing-proof-of-work-defense-for-onion-services/

- https://gitlab.torproject.org/tpo/onion-services/onion-support/-/wikis/Documentation/PoW-FAQ

Enjoy it MiniBolter! 💙

-

@ 6be5cc06:5259daf0

2025-01-21 01:51:46

@ 6be5cc06:5259daf0

2025-01-21 01:51:46Bitcoin: Um sistema de dinheiro eletrônico direto entre pessoas.

Satoshi Nakamoto

satoshin@gmx.com

www.bitcoin.org

Resumo

O Bitcoin é uma forma de dinheiro digital que permite pagamentos diretos entre pessoas, sem a necessidade de um banco ou instituição financeira. Ele resolve um problema chamado gasto duplo, que ocorre quando alguém tenta gastar o mesmo dinheiro duas vezes. Para evitar isso, o Bitcoin usa uma rede descentralizada onde todos trabalham juntos para verificar e registrar as transações.

As transações são registradas em um livro público chamado blockchain, protegido por uma técnica chamada Prova de Trabalho. Essa técnica cria uma cadeia de registros que não pode ser alterada sem refazer todo o trabalho já feito. Essa cadeia é mantida pelos computadores que participam da rede, e a mais longa é considerada a verdadeira.

Enquanto a maior parte do poder computacional da rede for controlada por participantes honestos, o sistema continuará funcionando de forma segura. A rede é flexível, permitindo que qualquer pessoa entre ou saia a qualquer momento, sempre confiando na cadeia mais longa como prova do que aconteceu.

1. Introdução

Hoje, quase todos os pagamentos feitos pela internet dependem de bancos ou empresas como processadores de pagamento (cartões de crédito, por exemplo) para funcionar. Embora esse sistema seja útil, ele tem problemas importantes porque é baseado em confiança.

Primeiro, essas empresas podem reverter pagamentos, o que é útil em caso de erros, mas cria custos e incertezas. Isso faz com que pequenas transações, como pagar centavos por um serviço, se tornem inviáveis. Além disso, os comerciantes são obrigados a desconfiar dos clientes, pedindo informações extras e aceitando fraudes como algo inevitável.

Esses problemas não existem no dinheiro físico, como o papel-moeda, onde o pagamento é final e direto entre as partes. No entanto, não temos como enviar dinheiro físico pela internet sem depender de um intermediário confiável.

O que precisamos é de um sistema de pagamento eletrônico baseado em provas matemáticas, não em confiança. Esse sistema permitiria que qualquer pessoa enviasse dinheiro diretamente para outra, sem depender de bancos ou processadores de pagamento. Além disso, as transações seriam irreversíveis, protegendo vendedores contra fraudes, mas mantendo a possibilidade de soluções para disputas legítimas.

Neste documento, apresentamos o Bitcoin, que resolve o problema do gasto duplo usando uma rede descentralizada. Essa rede cria um registro público e protegido por cálculos matemáticos, que garante a ordem das transações. Enquanto a maior parte da rede for controlada por pessoas honestas, o sistema será seguro contra ataques.

2. Transações

Para entender como funciona o Bitcoin, é importante saber como as transações são realizadas. Imagine que você quer transferir uma "moeda digital" para outra pessoa. No sistema do Bitcoin, essa "moeda" é representada por uma sequência de registros que mostram quem é o atual dono. Para transferi-la, você adiciona um novo registro comprovando que agora ela pertence ao próximo dono. Esse registro é protegido por um tipo especial de assinatura digital.

O que é uma assinatura digital?

Uma assinatura digital é como uma senha secreta, mas muito mais segura. No Bitcoin, cada usuário tem duas chaves: uma "chave privada", que é secreta e serve para criar a assinatura, e uma "chave pública", que pode ser compartilhada com todos e é usada para verificar se a assinatura é válida. Quando você transfere uma moeda, usa sua chave privada para assinar a transação, provando que você é o dono. A próxima pessoa pode usar sua chave pública para confirmar isso.

Como funciona na prática?

Cada "moeda" no Bitcoin é, na verdade, uma cadeia de assinaturas digitais. Vamos imaginar o seguinte cenário:

- A moeda está com o Dono 0 (você). Para transferi-la ao Dono 1, você assina digitalmente a transação com sua chave privada. Essa assinatura inclui o código da transação anterior (chamado de "hash") e a chave pública do Dono 1.

- Quando o Dono 1 quiser transferir a moeda ao Dono 2, ele assinará a transação seguinte com sua própria chave privada, incluindo também o hash da transação anterior e a chave pública do Dono 2.

- Esse processo continua, formando uma "cadeia" de transações. Qualquer pessoa pode verificar essa cadeia para confirmar quem é o atual dono da moeda.

Resolvendo o problema do gasto duplo

Um grande desafio com moedas digitais é o "gasto duplo", que é quando uma mesma moeda é usada em mais de uma transação. Para evitar isso, muitos sistemas antigos dependiam de uma entidade central confiável, como uma casa da moeda, que verificava todas as transações. No entanto, isso criava um ponto único de falha e centralizava o controle do dinheiro.

O Bitcoin resolve esse problema de forma inovadora: ele usa uma rede descentralizada onde todos os participantes (os "nós") têm acesso a um registro completo de todas as transações. Cada nó verifica se as transações são válidas e se a moeda não foi gasta duas vezes. Quando a maioria dos nós concorda com a validade de uma transação, ela é registrada permanentemente na blockchain.

Por que isso é importante?

Essa solução elimina a necessidade de confiar em uma única entidade para gerenciar o dinheiro, permitindo que qualquer pessoa no mundo use o Bitcoin sem precisar de permissão de terceiros. Além disso, ela garante que o sistema seja seguro e resistente a fraudes.

3. Servidor Timestamp

Para assegurar que as transações sejam realizadas de forma segura e transparente, o sistema Bitcoin utiliza algo chamado de "servidor de registro de tempo" (timestamp). Esse servidor funciona como um registro público que organiza as transações em uma ordem específica.

Ele faz isso agrupando várias transações em blocos e criando um código único chamado "hash". Esse hash é como uma impressão digital que representa todo o conteúdo do bloco. O hash de cada bloco é amplamente divulgado, como se fosse publicado em um jornal ou em um fórum público.

Esse processo garante que cada bloco de transações tenha um registro de quando foi criado e que ele existia naquele momento. Além disso, cada novo bloco criado contém o hash do bloco anterior, formando uma cadeia contínua de blocos conectados — conhecida como blockchain.

Com isso, se alguém tentar alterar qualquer informação em um bloco anterior, o hash desse bloco mudará e não corresponderá ao hash armazenado no bloco seguinte. Essa característica torna a cadeia muito segura, pois qualquer tentativa de fraude seria imediatamente detectada.

O sistema de timestamps é essencial para provar a ordem cronológica das transações e garantir que cada uma delas seja única e autêntica. Dessa forma, ele reforça a segurança e a confiança na rede Bitcoin.

4. Prova-de-Trabalho

Para implementar o registro de tempo distribuído no sistema Bitcoin, utilizamos um mecanismo chamado prova-de-trabalho. Esse sistema é semelhante ao Hashcash, desenvolvido por Adam Back, e baseia-se na criação de um código único, o "hash", por meio de um processo computacionalmente exigente.

A prova-de-trabalho envolve encontrar um valor especial que, quando processado junto com as informações do bloco, gere um hash que comece com uma quantidade específica de zeros. Esse valor especial é chamado de "nonce". Encontrar o nonce correto exige um esforço significativo do computador, porque envolve tentativas repetidas até que a condição seja satisfeita.

Esse processo é importante porque torna extremamente difícil alterar qualquer informação registrada em um bloco. Se alguém tentar mudar algo em um bloco, seria necessário refazer o trabalho de computação não apenas para aquele bloco, mas também para todos os blocos que vêm depois dele. Isso garante a segurança e a imutabilidade da blockchain.

A prova-de-trabalho também resolve o problema de decidir qual cadeia de blocos é a válida quando há múltiplas cadeias competindo. A decisão é feita pela cadeia mais longa, pois ela representa o maior esforço computacional já realizado. Isso impede que qualquer indivíduo ou grupo controle a rede, desde que a maioria do poder de processamento seja mantida por participantes honestos.

Para garantir que o sistema permaneça eficiente e equilibrado, a dificuldade da prova-de-trabalho é ajustada automaticamente ao longo do tempo. Se novos blocos estiverem sendo gerados rapidamente, a dificuldade aumenta; se estiverem sendo gerados muito lentamente, a dificuldade diminui. Esse ajuste assegura que novos blocos sejam criados aproximadamente a cada 10 minutos, mantendo o sistema estável e funcional.

5. Rede

A rede Bitcoin é o coração do sistema e funciona de maneira distribuída, conectando vários participantes (ou nós) para garantir o registro e a validação das transações. Os passos para operar essa rede são:

-

Transmissão de Transações: Quando alguém realiza uma nova transação, ela é enviada para todos os nós da rede. Isso é feito para garantir que todos estejam cientes da operação e possam validá-la.

-

Coleta de Transações em Blocos: Cada nó agrupa as novas transações recebidas em um "bloco". Este bloco será preparado para ser adicionado à cadeia de blocos (a blockchain).

-

Prova-de-Trabalho: Os nós competem para resolver a prova-de-trabalho do bloco, utilizando poder computacional para encontrar um hash válido. Esse processo é como resolver um quebra-cabeça matemático difícil.

-

Envio do Bloco Resolvido: Quando um nó encontra a solução para o bloco (a prova-de-trabalho), ele compartilha esse bloco com todos os outros nós na rede.

-

Validação do Bloco: Cada nó verifica o bloco recebido para garantir que todas as transações nele contidas sejam válidas e que nenhuma moeda tenha sido gasta duas vezes. Apenas blocos válidos são aceitos.

-

Construção do Próximo Bloco: Os nós que aceitaram o bloco começam a trabalhar na criação do próximo bloco, utilizando o hash do bloco aceito como base (hash anterior). Isso mantém a continuidade da cadeia.

Resolução de Conflitos e Escolha da Cadeia Mais Longa

Os nós sempre priorizam a cadeia mais longa, pois ela representa o maior esforço computacional já realizado, garantindo maior segurança. Se dois blocos diferentes forem compartilhados simultaneamente, os nós trabalharão no primeiro bloco recebido, mas guardarão o outro como uma alternativa. Caso o segundo bloco eventualmente forme uma cadeia mais longa (ou seja, tenha mais blocos subsequentes), os nós mudarão para essa nova cadeia.

Tolerância a Falhas

A rede é robusta e pode lidar com mensagens que não chegam a todos os nós. Uma transação não precisa alcançar todos os nós de imediato; basta que chegue a um número suficiente deles para ser incluída em um bloco. Da mesma forma, se um nó não receber um bloco em tempo hábil, ele pode solicitá-lo ao perceber que está faltando quando o próximo bloco é recebido.

Esse mecanismo descentralizado permite que a rede Bitcoin funcione de maneira segura, confiável e resiliente, sem depender de uma autoridade central.

6. Incentivo

O incentivo é um dos pilares fundamentais que sustenta o funcionamento da rede Bitcoin, garantindo que os participantes (nós) continuem operando de forma honesta e contribuindo com recursos computacionais. Ele é estruturado em duas partes principais: a recompensa por mineração e as taxas de transação.

Recompensa por Mineração

Por convenção, o primeiro registro em cada bloco é uma transação especial que cria novas moedas e as atribui ao criador do bloco. Essa recompensa incentiva os mineradores a dedicarem poder computacional para apoiar a rede. Como não há uma autoridade central para emitir moedas, essa é a maneira pela qual novas moedas entram em circulação. Esse processo pode ser comparado ao trabalho de garimpeiros, que utilizam recursos para colocar mais ouro em circulação. No caso do Bitcoin, o "recurso" consiste no tempo de CPU e na energia elétrica consumida para resolver a prova-de-trabalho.

Taxas de Transação

Além da recompensa por mineração, os mineradores também podem ser incentivados pelas taxas de transação. Se uma transação utiliza menos valor de saída do que o valor de entrada, a diferença é tratada como uma taxa, que é adicionada à recompensa do bloco contendo essa transação. Com o passar do tempo e à medida que o número de moedas em circulação atinge o limite predeterminado, essas taxas de transação se tornam a principal fonte de incentivo, substituindo gradualmente a emissão de novas moedas. Isso permite que o sistema opere sem inflação, uma vez que o número total de moedas permanece fixo.

Incentivo à Honestidade

O design do incentivo também busca garantir que os participantes da rede mantenham um comportamento honesto. Para um atacante que consiga reunir mais poder computacional do que o restante da rede, ele enfrentaria duas escolhas:

- Usar esse poder para fraudar o sistema, como reverter transações e roubar pagamentos.

- Seguir as regras do sistema, criando novos blocos e recebendo recompensas legítimas.

A lógica econômica favorece a segunda opção, pois um comportamento desonesto prejudicaria a confiança no sistema, diminuindo o valor de todas as moedas, incluindo aquelas que o próprio atacante possui. Jogar dentro das regras não apenas maximiza o retorno financeiro, mas também preserva a validade e a integridade do sistema.

Esse mecanismo garante que os incentivos econômicos estejam alinhados com o objetivo de manter a rede segura, descentralizada e funcional ao longo do tempo.

7. Recuperação do Espaço em Disco

Depois que uma moeda passa a estar protegida por muitos blocos na cadeia, as informações sobre as transações antigas que a geraram podem ser descartadas para economizar espaço em disco. Para que isso seja possível sem comprometer a segurança, as transações são organizadas em uma estrutura chamada "árvore de Merkle". Essa árvore funciona como um resumo das transações: em vez de armazenar todas elas, guarda apenas um "hash raiz", que é como uma assinatura compacta que representa todo o grupo de transações.

Os blocos antigos podem, então, ser simplificados, removendo as partes desnecessárias dessa árvore. Apenas a raiz do hash precisa ser mantida no cabeçalho do bloco, garantindo que a integridade dos dados seja preservada, mesmo que detalhes específicos sejam descartados.

Para exemplificar: imagine que você tenha vários recibos de compra. Em vez de guardar todos os recibos, você cria um documento e lista apenas o valor total de cada um. Mesmo que os recibos originais sejam descartados, ainda é possível verificar a soma com base nos valores armazenados.

Além disso, o espaço ocupado pelos blocos em si é muito pequeno. Cada bloco sem transações ocupa apenas cerca de 80 bytes. Isso significa que, mesmo com blocos sendo gerados a cada 10 minutos, o crescimento anual em espaço necessário é insignificante: apenas 4,2 MB por ano. Com a capacidade de armazenamento dos computadores crescendo a cada ano, esse espaço continuará sendo trivial, garantindo que a rede possa operar de forma eficiente sem problemas de armazenamento, mesmo a longo prazo.

8. Verificação de Pagamento Simplificada

É possível confirmar pagamentos sem a necessidade de operar um nó completo da rede. Para isso, o usuário precisa apenas de uma cópia dos cabeçalhos dos blocos da cadeia mais longa (ou seja, a cadeia com maior esforço de trabalho acumulado). Ele pode verificar a validade de uma transação ao consultar os nós da rede até obter a confirmação de que tem a cadeia mais longa. Para isso, utiliza-se o ramo Merkle, que conecta a transação ao bloco em que ela foi registrada.

Entretanto, o método simplificado possui limitações: ele não pode confirmar uma transação isoladamente, mas sim assegurar que ela ocupa um lugar específico na cadeia mais longa. Dessa forma, se um nó da rede aprova a transação, os blocos subsequentes reforçam essa aceitação.

A verificação simplificada é confiável enquanto a maioria dos nós da rede for honesta. Contudo, ela se torna vulnerável caso a rede seja dominada por um invasor. Nesse cenário, um atacante poderia fabricar transações fraudulentas que enganariam o usuário temporariamente até que o invasor obtivesse controle completo da rede.

Uma estratégia para mitigar esse risco é configurar alertas nos softwares de nós completos. Esses alertas identificam blocos inválidos, sugerindo ao usuário baixar o bloco completo para confirmar qualquer inconsistência. Para maior segurança, empresas que realizam pagamentos frequentes podem preferir operar seus próprios nós, reduzindo riscos e permitindo uma verificação mais direta e confiável.

9. Combinando e Dividindo Valor

No sistema Bitcoin, cada unidade de valor é tratada como uma "moeda" individual, mas gerenciar cada centavo como uma transação separada seria impraticável. Para resolver isso, o Bitcoin permite que valores sejam combinados ou divididos em transações, facilitando pagamentos de qualquer valor.

Entradas e Saídas

Cada transação no Bitcoin é composta por:

- Entradas: Representam os valores recebidos em transações anteriores.

- Saídas: Correspondem aos valores enviados, divididos entre os destinatários e, eventualmente, o troco para o remetente.

Normalmente, uma transação contém:

- Uma única entrada com valor suficiente para cobrir o pagamento.

- Ou várias entradas combinadas para atingir o valor necessário.

O valor total das saídas nunca excede o das entradas, e a diferença (se houver) pode ser retornada ao remetente como troco.

Exemplo Prático

Imagine que você tem duas entradas:

- 0,03 BTC

- 0,07 BTC

Se deseja enviar 0,08 BTC para alguém, a transação terá:

- Entrada: As duas entradas combinadas (0,03 + 0,07 BTC = 0,10 BTC).

- Saídas: Uma para o destinatário (0,08 BTC) e outra como troco para você (0,02 BTC).

Essa flexibilidade permite que o sistema funcione sem precisar manipular cada unidade mínima individualmente.

Difusão e Simplificação

A difusão de transações, onde uma depende de várias anteriores e assim por diante, não representa um problema. Não é necessário armazenar ou verificar o histórico completo de uma transação para utilizá-la, já que o registro na blockchain garante sua integridade.

10. Privacidade

O modelo bancário tradicional oferece um certo nível de privacidade, limitando o acesso às informações financeiras apenas às partes envolvidas e a um terceiro confiável (como bancos ou instituições financeiras). No entanto, o Bitcoin opera de forma diferente, pois todas as transações são publicamente registradas na blockchain. Apesar disso, a privacidade pode ser mantida utilizando chaves públicas anônimas, que desvinculam diretamente as transações das identidades das partes envolvidas.

Fluxo de Informação

- No modelo tradicional, as transações passam por um terceiro confiável que conhece tanto o remetente quanto o destinatário.

- No Bitcoin, as transações são anunciadas publicamente, mas sem revelar diretamente as identidades das partes. Isso é comparável a dados divulgados por bolsas de valores, onde informações como o tempo e o tamanho das negociações (a "fita") são públicas, mas as identidades das partes não.

Protegendo a Privacidade

Para aumentar a privacidade no Bitcoin, são adotadas as seguintes práticas:

- Chaves Públicas Anônimas: Cada transação utiliza um par de chaves diferentes, dificultando a associação com um proprietário único.

- Prevenção de Ligação: Ao usar chaves novas para cada transação, reduz-se a possibilidade de links evidentes entre múltiplas transações realizadas pelo mesmo usuário.

Riscos de Ligação

Embora a privacidade seja fortalecida, alguns riscos permanecem:

- Transações multi-entrada podem revelar que todas as entradas pertencem ao mesmo proprietário, caso sejam necessárias para somar o valor total.

- O proprietário da chave pode ser identificado indiretamente por transações anteriores que estejam conectadas.

11. Cálculos

Imagine que temos um sistema onde as pessoas (ou computadores) competem para adicionar informações novas (blocos) a um grande registro público (a cadeia de blocos ou blockchain). Este registro é como um livro contábil compartilhado, onde todos podem verificar o que está escrito.

Agora, vamos pensar em um cenário: um atacante quer enganar o sistema. Ele quer mudar informações já registradas para beneficiar a si mesmo, por exemplo, desfazendo um pagamento que já fez. Para isso, ele precisa criar uma versão alternativa do livro contábil (a cadeia de blocos dele) e convencer todos os outros participantes de que essa versão é a verdadeira.

Mas isso é extremamente difícil.

Como o Ataque Funciona

Quando um novo bloco é adicionado à cadeia, ele depende de cálculos complexos que levam tempo e esforço. Esses cálculos são como um grande quebra-cabeça que precisa ser resolvido.

- Os “bons jogadores” (nós honestos) estão sempre trabalhando juntos para resolver esses quebra-cabeças e adicionar novos blocos à cadeia verdadeira.

- O atacante, por outro lado, precisa resolver quebra-cabeças sozinho, tentando “alcançar” a cadeia honesta para que sua versão alternativa pareça válida.

Se a cadeia honesta já está vários blocos à frente, o atacante começa em desvantagem, e o sistema está projetado para que a dificuldade de alcançá-los aumente rapidamente.

A Corrida Entre Cadeias

Você pode imaginar isso como uma corrida. A cada bloco novo que os jogadores honestos adicionam à cadeia verdadeira, eles se distanciam mais do atacante. Para vencer, o atacante teria que resolver os quebra-cabeças mais rápido que todos os outros jogadores honestos juntos.

Suponha que:

- A rede honesta tem 80% do poder computacional (ou seja, resolve 8 de cada 10 quebra-cabeças).

- O atacante tem 20% do poder computacional (ou seja, resolve 2 de cada 10 quebra-cabeças).

Cada vez que a rede honesta adiciona um bloco, o atacante tem que "correr atrás" e resolver mais quebra-cabeças para alcançar.

Por Que o Ataque Fica Cada Vez Mais Improvável?

Vamos usar uma fórmula simples para mostrar como as chances de sucesso do atacante diminuem conforme ele precisa "alcançar" mais blocos:

P = (q/p)^z

- q é o poder computacional do atacante (20%, ou 0,2).

- p é o poder computacional da rede honesta (80%, ou 0,8).

- z é a diferença de blocos entre a cadeia honesta e a cadeia do atacante.

Se o atacante está 5 blocos atrás (z = 5):

P = (0,2 / 0,8)^5 = (0,25)^5 = 0,00098, (ou, 0,098%)

Isso significa que o atacante tem menos de 0,1% de chance de sucesso — ou seja, é muito improvável.

Se ele estiver 10 blocos atrás (z = 10):

P = (0,2 / 0,8)^10 = (0,25)^10 = 0,000000095, (ou, 0,0000095%).

Neste caso, as chances de sucesso são praticamente nulas.

Um Exemplo Simples

Se você jogar uma moeda, a chance de cair “cara” é de 50%. Mas se precisar de 10 caras seguidas, sua chance já é bem menor. Se precisar de 20 caras seguidas, é quase impossível.

No caso do Bitcoin, o atacante precisa de muito mais do que 20 caras seguidas. Ele precisa resolver quebra-cabeças extremamente difíceis e alcançar os jogadores honestos que estão sempre à frente. Isso faz com que o ataque seja inviável na prática.

Por Que Tudo Isso é Seguro?

- A probabilidade de sucesso do atacante diminui exponencialmente. Isso significa que, quanto mais tempo passa, menor é a chance de ele conseguir enganar o sistema.

- A cadeia verdadeira (honesta) está protegida pela força da rede. Cada novo bloco que os jogadores honestos adicionam à cadeia torna mais difícil para o atacante alcançar.

E Se o Atacante Tentar Continuar?

O atacante poderia continuar tentando indefinidamente, mas ele estaria gastando muito tempo e energia sem conseguir nada. Enquanto isso, os jogadores honestos estão sempre adicionando novos blocos, tornando o trabalho do atacante ainda mais inútil.

Assim, o sistema garante que a cadeia verdadeira seja extremamente segura e que ataques sejam, na prática, impossíveis de ter sucesso.

12. Conclusão

Propusemos um sistema de transações eletrônicas que elimina a necessidade de confiança, baseando-se em assinaturas digitais e em uma rede peer-to-peer que utiliza prova de trabalho. Isso resolve o problema do gasto duplo, criando um histórico público de transações imutável, desde que a maioria do poder computacional permaneça sob controle dos participantes honestos. A rede funciona de forma simples e descentralizada, com nós independentes que não precisam de identificação ou coordenação direta. Eles entram e saem livremente, aceitando a cadeia de prova de trabalho como registro do que ocorreu durante sua ausência. As decisões são tomadas por meio do poder de CPU, validando blocos legítimos, estendendo a cadeia e rejeitando os inválidos. Com este mecanismo de consenso, todas as regras e incentivos necessários para o funcionamento seguro e eficiente do sistema são garantidos.

Faça o download do whitepaper original em português: https://bitcoin.org/files/bitcoin-paper/bitcoin_pt_br.pdf

-

@ f9cf4e94:96abc355

2025-01-18 06:09:50

@ f9cf4e94:96abc355

2025-01-18 06:09:50Para esse exemplo iremos usar: | Nome | Imagem | Descrição | | --------------- | ------------------------------------------------------------ | ------------------------------------------------------------ | | Raspberry PI B+ |

| Cortex-A53 (ARMv8) 64-bit a 1.4GHz e 1 GB de SDRAM LPDDR2, |

| Pen drive |

| Cortex-A53 (ARMv8) 64-bit a 1.4GHz e 1 GB de SDRAM LPDDR2, |

| Pen drive |  | 16Gb |

| 16Gb |Recomendo que use o Ubuntu Server para essa instalação. Você pode baixar o Ubuntu para Raspberry Pi aqui. O passo a passo para a instalação do Ubuntu no Raspberry Pi está disponível aqui. Não instale um desktop (como xubuntu, lubuntu, xfce, etc.).

Passo 1: Atualizar o Sistema 🖥️

Primeiro, atualize seu sistema e instale o Tor:

bash apt update apt install tor

Passo 2: Criar o Arquivo de Serviço

nrs.service🔧Crie o arquivo de serviço que vai gerenciar o servidor Nostr. Você pode fazer isso com o seguinte conteúdo:

```unit [Unit] Description=Nostr Relay Server Service After=network.target

[Service] Type=simple WorkingDirectory=/opt/nrs ExecStart=/opt/nrs/nrs-arm64 Restart=on-failure

[Install] WantedBy=multi-user.target ```

Passo 3: Baixar o Binário do Nostr 🚀

Baixe o binário mais recente do Nostr aqui no GitHub.

Passo 4: Criar as Pastas Necessárias 📂

Agora, crie as pastas para o aplicativo e o pendrive:

bash mkdir -p /opt/nrs /mnt/edriver

Passo 5: Listar os Dispositivos Conectados 🔌

Para saber qual dispositivo você vai usar, liste todos os dispositivos conectados:

bash lsblk

Passo 6: Formatando o Pendrive 💾

Escolha o pendrive correto (por exemplo,

/dev/sda) e formate-o:bash mkfs.vfat /dev/sda

Passo 7: Montar o Pendrive 💻

Monte o pendrive na pasta

/mnt/edriver:bash mount /dev/sda /mnt/edriver

Passo 8: Verificar UUID dos Dispositivos 📋

Para garantir que o sistema monte o pendrive automaticamente, liste os UUID dos dispositivos conectados:

bash blkid

Passo 9: Alterar o

fstabpara Montar o Pendrive Automáticamente 📝Abra o arquivo

/etc/fstabe adicione uma linha para o pendrive, com o UUID que você obteve no passo anterior. A linha deve ficar assim:fstab UUID=9c9008f8-f852 /mnt/edriver vfat defaults 0 0

Passo 10: Copiar o Binário para a Pasta Correta 📥

Agora, copie o binário baixado para a pasta

/opt/nrs:bash cp nrs-arm64 /opt/nrs

Passo 11: Criar o Arquivo de Configuração 🛠️

Crie o arquivo de configuração com o seguinte conteúdo e salve-o em

/opt/nrs/config.yaml:yaml app_env: production info: name: Nostr Relay Server description: Nostr Relay Server pub_key: "" contact: "" url: http://localhost:3334 icon: https://external-content.duckduckgo.com/iu/?u= https://public.bnbstatic.com/image/cms/crawler/COINCU_NEWS/image-495-1024x569.png base_path: /mnt/edriver negentropy: true

Passo 12: Copiar o Serviço para o Diretório de Systemd ⚙️

Agora, copie o arquivo

nrs.servicepara o diretório/etc/systemd/system/:bash cp nrs.service /etc/systemd/system/Recarregue os serviços e inicie o serviço

nrs:bash systemctl daemon-reload systemctl enable --now nrs.service

Passo 13: Configurar o Tor 🌐

Abra o arquivo de configuração do Tor

/var/lib/tor/torrce adicione a seguinte linha:torrc HiddenServiceDir /var/lib/tor/nostr_server/ HiddenServicePort 80 127.0.0.1:3334

Passo 14: Habilitar e Iniciar o Tor 🧅

Agora, ative e inicie o serviço Tor:

bash systemctl enable --now tor.serviceO Tor irá gerar um endereço

.onionpara o seu servidor Nostr. Você pode encontrá-lo no arquivo/var/lib/tor/nostr_server/hostname.

Observações ⚠️

- Com essa configuração, os dados serão salvos no pendrive, enquanto o binário ficará no cartão SD do Raspberry Pi.

- O endereço

.oniondo seu servidor Nostr será algo como:ws://y3t5t5wgwjif<exemplo>h42zy7ih6iwbyd.onion.

Agora, seu servidor Nostr deve estar configurado e funcionando com Tor! 🥳

Se este artigo e as informações aqui contidas forem úteis para você, convidamos a considerar uma doação ao autor como forma de reconhecimento e incentivo à produção de novos conteúdos.

-

@ 3f770d65:7a745b24

2024-12-31 17:03:46

@ 3f770d65:7a745b24

2024-12-31 17:03:46Here are my predictions for Nostr in 2025:

Decentralization: The outbox and inbox communication models, sometimes referred to as the Gossip model, will become the standard across the ecosystem. By the end of 2025, all major clients will support these models, providing seamless communication and enhanced decentralization. Clients that do not adopt outbox/inbox by then will be regarded as outdated or legacy systems.

Privacy Standards: Major clients such as Damus and Primal will move away from NIP-04 DMs, adopting more secure protocol possibilities like NIP-17 or NIP-104. These upgrades will ensure enhanced encryption and metadata protection. Additionally, NIP-104 MLS tools will drive the development of new clients and features, providing users with unprecedented control over the privacy of their communications.

Interoperability: Nostr's ecosystem will become even more interconnected. Platforms like the Olas image-sharing service will expand into prominent clients such as Primal, Damus, Coracle, and Snort, alongside existing integrations with Amethyst, Nostur, and Nostrudel. Similarly, audio and video tools like Nostr Nests and Zap.stream will gain seamless integration into major clients, enabling easy participation in live events across the ecosystem.

Adoption and Migration: Inspired by early pioneers like Fountain and Orange Pill App, more platforms will adopt Nostr for authentication, login, and social systems. In 2025, a significant migration from a high-profile application platform with hundreds of thousands of users will transpire, doubling Nostr’s daily activity and establishing it as a cornerstone of decentralized technologies.

-

@ 16d11430:61640947

2024-12-23 16:47:01

@ 16d11430:61640947

2024-12-23 16:47:01At the intersection of philosophy, theology, physics, biology, and finance lies a terrifying truth: the fiat monetary system, in its current form, is not just an economic framework but a silent, relentless force actively working against humanity's survival. It isn't simply a failed financial model—it is a systemic engine of destruction, both externally and within the very core of our biological existence.

The Philosophical Void of Fiat

Philosophy has long questioned the nature of value and the meaning of human existence. From Socrates to Kant, thinkers have pondered the pursuit of truth, beauty, and virtue. But in the modern age, the fiat system has hijacked this discourse. The notion of "value" in a fiat world is no longer rooted in human potential or natural resources—it is abstracted, manipulated, and controlled by central authorities with the sole purpose of perpetuating their own power. The currency is not a reflection of society’s labor or resources; it is a representation of faith in an authority that, more often than not, breaks that faith with reckless monetary policies and hidden inflation.

The fiat system has created a kind of ontological nihilism, where the idea of true value, rooted in work, creativity, and family, is replaced with speculative gambling and short-term gains. This betrayal of human purpose at the systemic level feeds into a philosophical despair: the relentless devaluation of effort, the erosion of trust, and the abandonment of shared human values. In this nihilistic economy, purpose and meaning become increasingly difficult to find, leaving millions to question the very foundation of their existence.

Theological Implications: Fiat and the Collapse of the Sacred

Religious traditions have long linked moral integrity with the stewardship of resources and the preservation of life. Fiat currency, however, corrupts these foundational beliefs. In the theological narrative of creation, humans are given dominion over the Earth, tasked with nurturing and protecting it for future generations. But the fiat system promotes the exact opposite: it commodifies everything—land, labor, and life—treating them as mere transactions on a ledger.

This disrespect for creation is an affront to the divine. In many theologies, creation is meant to be sustained, a delicate balance that mirrors the harmony of the divine order. Fiat systems—by continuously printing money and driving inflation—treat nature and humanity as expendable resources to be exploited for short-term gains, leading to environmental degradation and societal collapse. The creation narrative, in which humans are called to be stewards, is inverted. The fiat system, through its unholy alliance with unrestrained growth and unsustainable debt, is destroying the very creation it should protect.

Furthermore, the fiat system drives idolatry of power and wealth. The central banks and corporations that control the money supply have become modern-day gods, their decrees shaping the lives of billions, while the masses are enslaved by debt and inflation. This form of worship isn't overt, but it is profound. It leads to a world where people place their faith not in God or their families, but in the abstract promises of institutions that serve their own interests.

Physics and the Infinite Growth Paradox

Physics teaches us that the universe is finite—resources, energy, and space are all limited. Yet, the fiat system operates under the delusion of infinite growth. Central banks print money without concern for natural limits, encouraging an economy that assumes unending expansion. This is not only an economic fallacy; it is a physical impossibility.

In thermodynamics, the Second Law states that entropy (disorder) increases over time in any closed system. The fiat system operates as if the Earth were an infinite resource pool, perpetually able to expand without consequence. The real world, however, does not bend to these abstract concepts of infinite growth. Resources are finite, ecosystems are fragile, and human capacity is limited. Fiat currency, by promoting unsustainable consumption and growth, accelerates the depletion of resources and the degradation of natural systems that support life itself.

Even the financial “growth” driven by fiat policies leads to unsustainable bubbles—inflated stock markets, real estate, and speculative assets that burst and leave ruin in their wake. These crashes aren’t just economic—they have profound biological consequences. The cycles of boom and bust undermine communities, erode social stability, and increase anxiety and depression, all of which affect human health at a biological level.

Biology: The Fiat System and the Destruction of Human Health

Biologically, the fiat system is a cancerous growth on human society. The constant chase for growth and the devaluation of work leads to chronic stress, which is one of the leading causes of disease in modern society. The strain of living in a system that values speculation over well-being results in a biological feedback loop: rising anxiety, poor mental health, physical diseases like cardiovascular disorders, and a shortening of lifespans.

Moreover, the focus on profit and short-term returns creates a biological disconnect between humans and the planet. The fiat system fuels industries that destroy ecosystems, increase pollution, and deplete resources at unsustainable rates. These actions are not just environmentally harmful; they directly harm human biology. The degradation of the environment—whether through toxic chemicals, pollution, or resource extraction—has profound biological effects on human health, causing respiratory diseases, cancers, and neurological disorders.

The biological cost of the fiat system is not a distant theory; it is being paid every day by millions in the form of increased health risks, diseases linked to stress, and the growing burden of mental health disorders. The constant uncertainty of an inflation-driven economy exacerbates these conditions, creating a society of individuals whose bodies and minds are under constant strain. We are witnessing a systemic biological unraveling, one in which the very act of living is increasingly fraught with pain, instability, and the looming threat of collapse.

Finance as the Final Illusion

At the core of the fiat system is a fundamental illusion—that financial growth can occur without any real connection to tangible value. The abstraction of currency, the manipulation of interest rates, and the constant creation of new money hide the underlying truth: the system is built on nothing but faith. When that faith falters, the entire system collapses.

This illusion has become so deeply embedded that it now defines the human experience. Work no longer connects to production or creation—it is reduced to a transaction on a spreadsheet, a means to acquire more fiat currency in a world where value is ephemeral and increasingly disconnected from human reality.

As we pursue ever-expanding wealth, the fundamental truths of biology—interdependence, sustainability, and balance—are ignored. The fiat system’s abstract financial models serve to disconnect us from the basic realities of life: that we are part of an interconnected world where every action has a reaction, where resources are finite, and where human health, both mental and physical, depends on the stability of our environment and our social systems.

The Ultimate Extermination

In the end, the fiat system is not just an economic issue; it is a biological, philosophical, theological, and existential threat to the very survival of humanity. It is a force that devalues human effort, encourages environmental destruction, fosters inequality, and creates pain at the core of the human biological condition. It is an economic framework that leads not to prosperity, but to extermination—not just of species, but of the very essence of human well-being.

To continue on this path is to accept the slow death of our species, one based not on natural forces, but on our own choice to worship the abstract over the real, the speculative over the tangible. The fiat system isn't just a threat; it is the ultimate self-inflicted wound, a cultural and financial cancer that, if left unchecked, will destroy humanity’s chance for survival and peace.

-

@ a367f9eb:0633efea

2024-12-22 21:35:22

@ a367f9eb:0633efea

2024-12-22 21:35:22I’ll admit that I was wrong about Bitcoin. Perhaps in 2013. Definitely 2017. Probably in 2018-2019. And maybe even today.

Being wrong about Bitcoin is part of finally understanding it. It will test you, make you question everything, and in the words of BTC educator and privacy advocate Matt Odell, “Bitcoin will humble you”.

I’ve had my own stumbles on the way.

In a very public fashion in 2017, after years of using Bitcoin, trying to start a company with it, using it as my primary exchange vehicle between currencies, and generally being annoying about it at parties, I let out the bear.

In an article published in my own literary magazine Devolution Review in September 2017, I had a breaking point. The article was titled “Going Bearish on Bitcoin: Cryptocurrencies are the tulip mania of the 21st century”.

It was later republished in Huffington Post and across dozens of financial and crypto blogs at the time with another, more appropriate title: “Bitcoin Has Become About The Payday, Not Its Potential”.

As I laid out, my newfound bearishness had little to do with the technology itself or the promise of Bitcoin, and more to do with the cynical industry forming around it:

In the beginning, Bitcoin was something of a revolution to me. The digital currency represented everything from my rebellious youth.

It was a decentralized, denationalized, and digital currency operating outside the traditional banking and governmental system. It used tools of cryptography and connected buyers and sellers across national borders at minimal transaction costs.

…

The 21st-century version (of Tulip mania) has welcomed a plethora of slick consultants, hazy schemes dressed up as investor possibilities, and too much wishy-washy language for anything to really make sense to anyone who wants to use a digital currency to make purchases.

While I called out Bitcoin by name at the time, on reflection, I was really talking about the ICO craze, the wishy-washy consultants, and the altcoin ponzis.

What I was articulating — without knowing it — was the frame of NgU, or “numbers go up”. Rather than advocating for Bitcoin because of its uncensorability, proof-of-work, or immutability, the common mentality among newbies and the dollar-obsessed was that Bitcoin mattered because its price was a rocket ship.

And because Bitcoin was gaining in price, affinity tokens and projects that were imperfect forks of Bitcoin took off as well.

The price alone — rather than its qualities — were the reasons why you’d hear Uber drivers, finance bros, or your gym buddy mention Bitcoin. As someone who came to Bitcoin for philosophical reasons, that just sat wrong with me.

Maybe I had too many projects thrown in my face, or maybe I was too frustrated with the UX of Bitcoin apps and sites at the time. No matter what, I’ve since learned something.

I was at least somewhat wrong.

My own journey began in early 2011. One of my favorite radio programs, Free Talk Live, began interviewing guests and having discussions on the potential of Bitcoin. They tied it directly to a libertarian vision of the world: free markets, free people, and free banking. That was me, and I was in. Bitcoin was at about $5 back then (NgU).

I followed every article I could, talked about it with guests on my college radio show, and became a devoted redditor on r/Bitcoin. At that time, at least to my knowledge, there was no possible way to buy Bitcoin where I was living. Very weak.

I was probably wrong. And very wrong for not trying to acquire by mining or otherwise.

The next year, after moving to Florida, Bitcoin was a heavy topic with a friend of mine who shared the same vision (and still does, according to the Celsius bankruptcy documents). We talked about it with passionate leftists at Occupy Tampa in 2012, all the while trying to explain the ills of Keynesian central banking, and figuring out how to use Coinbase.

I began writing more about Bitcoin in 2013, writing a guide on “How to Avoid Bank Fees Using Bitcoin,” discussing its potential legalization in Germany, and interviewing Jeremy Hansen, one of the first political candidates in the U.S. to accept Bitcoin donations.

Even up until that point, I thought Bitcoin was an interesting protocol for sending and receiving money quickly, and converting it into fiat. The global connectedness of it, plus this cypherpunk mentality divorced from government control was both useful and attractive. I thought it was the perfect go-between.

But I was wrong.

When I gave my first public speech on Bitcoin in Vienna, Austria in December 2013, I had grown obsessed with Bitcoin’s adoption on dark net markets like Silk Road.

My theory, at the time, was the number and price were irrelevant. The tech was interesting, and a novel attempt. It was unlike anything before. But what was happening on the dark net markets, which I viewed as the true free market powered by Bitcoin, was even more interesting. I thought these markets would grow exponentially and anonymous commerce via BTC would become the norm.

While the price was irrelevant, it was all about buying and selling goods without permission or license.

Now I understand I was wrong.

Just because Bitcoin was this revolutionary technology that embraced pseudonymity did not mean that all commerce would decentralize as well. It did not mean that anonymous markets were intended to be the most powerful layer in the Bitcoin stack.

What I did not even anticipate is something articulated very well by noted Bitcoin OG Pierre Rochard: Bitcoin as a savings technology.

The ability to maintain long-term savings, practice self-discipline while stacking stats, and embrace a low-time preference was just not something on the mind of the Bitcoiners I knew at the time.

Perhaps I was reading into the hype while outwardly opposing it. Or perhaps I wasn’t humble enough to understand the true value proposition that many of us have learned years later.

In the years that followed, I bought and sold more times than I can count, and I did everything to integrate it into passion projects. I tried to set up a company using Bitcoin while at my university in Prague.

My business model depended on university students being technologically advanced enough to have a mobile wallet, own their keys, and be able to make transactions on a consistent basis. Even though I was surrounded by philosophically aligned people, those who would advance that to actually put Bitcoin into practice were sparse.

This is what led me to proclaim that “Technological Literacy is Doomed” in 2016.

And I was wrong again.

Indeed, since that time, the UX of Bitcoin-only applications, wallets, and supporting tech has vastly improved and onboarded millions more people than anyone thought possible. The entrepreneurship, coding excellence, and vision offered by Bitcoiners of all stripes have renewed a sense in me that this project is something built for us all — friends and enemies alike.

While many of us were likely distracted by flashy and pumpy altcoins over the years (me too, champs), most of us have returned to the Bitcoin stable.

Fast forward to today, there are entire ecosystems of creators, activists, and developers who are wholly reliant on the magic of Bitcoin’s protocol for their life and livelihood. The options are endless. The FUD is still present, but real proof of work stands powerfully against those forces.

In addition, there are now dozens of ways to use Bitcoin privately — still without custodians or intermediaries — that make it one of the most important assets for global humanity, especially in dictatorships.

This is all toward a positive arc of innovation, freedom, and pure independence. Did I see that coming? Absolutely not.

Of course, there are probably other shots you’ve missed on Bitcoin. Price predictions (ouch), the short-term inflation hedge, or the amount of institutional investment. While all of these may be erroneous predictions in the short term, we have to realize that Bitcoin is a long arc. It will outlive all of us on the planet, and it will continue in its present form for the next generation.

Being wrong about the evolution of Bitcoin is no fault, and is indeed part of the learning curve to finally understanding it all.

When your family or friends ask you about Bitcoin after your endless sessions explaining market dynamics, nodes, how mining works, and the genius of cryptographic signatures, try to accept that there is still so much we have to learn about this decentralized digital cash.

There are still some things you’ve gotten wrong about Bitcoin, and plenty more you’ll underestimate or get wrong in the future. That’s what makes it a beautiful journey. It’s a long road, but one that remains worth it.

-

@ 8cda1daa:e9e5bdd8

2025-04-24 10:20:13

@ 8cda1daa:e9e5bdd8

2025-04-24 10:20:13Bitcoin cracked the code for money. Now it's time to rebuild everything else.