-

@ 42342239:1d80db24

2024-10-23 12:28:41


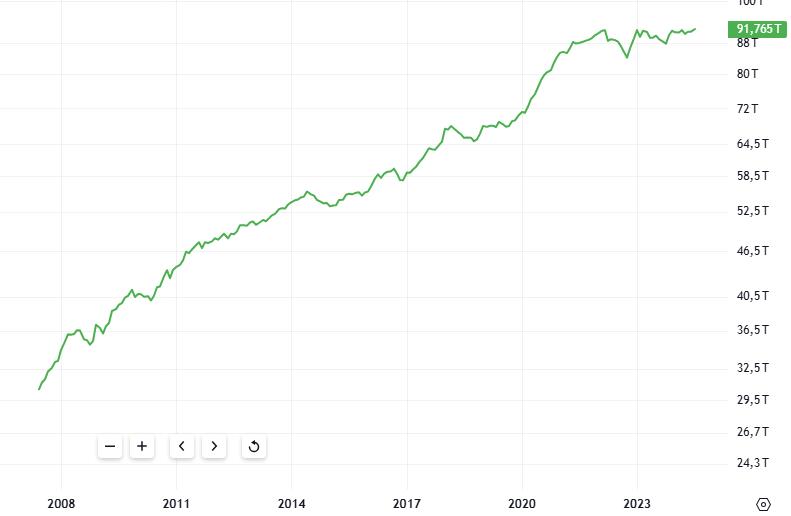

TL;DR: The mathematics of trust says that news reporting will fall flat when the population becomes suspicious of the media, which is now the case for growing subgroups in the U.S. as well as in Sweden.

A recent wedding celebration for Sweden Democrats leader Jimmie Åkesson resulted in controversy, as one of the guests in attendance was reportedly linked to organized crime. Following this “wedding scandal”, a columnist noted that the party’s voters had not been significantly affected. Instead of a decrease in trust - which one might have expected - 10% of them stated that their confidence in the party had actually increased. “Over the years, the Sweden Democrats have surprisingly emerged unscathed from their numerous scandals,” she wrote. But is this really so surprising?

In mathematics, a probability is expressed as the likelihood of something occurring given one or more conditions. For example, one can express a probability as “the likelihood that a certain stock will rise in price, given that the company has presented a positive quarterly report.” In this case, the company’s quarterly report is the basis for the assessment. If we add more information, such as the company’s strong market position and a large order from an important customer, the probability increases further. The more information we have to go on, the more precise we can be in our assessment.

From this perspective, the Sweden Democrats’ “numerous scandals” should lead to a more negative assessment of the party. But this perspective omits something important.

A couple of years ago, the term “gaslighting” was chosen as the word of the year in the US. The term comes from a 1944 film of the same name and refers to a type of psychological manipulation, as applied to the lovely Ingrid Bergman. Today, the term is used in politics, for example, when a large group of people is misled to achieve political goals. The techniques used can be very effective but have a limitation. When the target becomes aware of what is happening, everything changes. Then the target becomes vigilant and views all new information with great suspicion.

The Sweden Democrats’ “numerous scandals” should lead to a more negative assessment of the party. But if SD voters to a greater extent than others believe that the source of the information is unreliable, for example, by omitting information or adding unnecessary information, the conclusion is different. The Swedish SOM survey shows that these voters have lower trust in journalists and also lower confidence in the objectivity of the news. Like a victim of gaslighting, they view negative reporting with suspicion. The arguments can no longer get through. A kind of immunity has developed.
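One way to make this argument concrete is Bayes' rule in odds form. The notation is an assumption introduced here for illustration and does not come from the column or the SOM survey: $H$ is the hypothesis that the party deserves less trust, and $R$ is the event that the media publish a negative report about it.

```latex
\frac{P(H \mid R)}{P(\neg H \mid R)}
  = \underbrace{\frac{P(R \mid H)}{P(R \mid \neg H)}}_{\text{likelihood ratio}}
    \times \frac{P(H)}{P(\neg H)}
```

A reader who trusts the source believes a negative report is far more likely when $H$ is true, so the likelihood ratio is large and the report shifts the odds. A reader who believes the source would publish negative reports either way sets $P(R \mid H) \approx P(R \mid \neg H)$, so the ratio is close to 1 and the posterior odds stay roughly where the prior odds were.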

In the US, trust in the media is at an all-time low. So when American media writes that “Trump speaks like Hitler, Stalin, and Mussolini,” that his idea of deporting illegal immigrants would cost hundreds of billions of dollars, or gets worked up over his soda consumption, the consequence is likely to be similar to here at home.

The mathematics of trust says that reporting will fall flat when the population becomes suspicious of the media. Or as the Swedish columnist put it: like water off a duck’s back.

Cover image: Ingrid Bergman 1946. RKO Radio Pictures - eBay, Public Domain, Wikimedia Commons

-

@ 42342239:1d80db24

2024-10-22 07:57:17


It was recently reported that Sweden's Minister for Culture, Parisa Liljestrand, wishes to put an end to anonymous accounts on social media. The issue has been at the forefront following revelations of political parties using pseudonymous accounts on social media platforms earlier this year.

The importance of the internet is also well-known. As early as 2015, Roberta Alenius, who was then the press secretary for Fredrik Reinfeldt (Moderate Party), openly spoke about her experiences with the Social Democrats' and Moderates' internet activists: Twitter actually set the agenda for journalism at the time.

The Minister for Culture now claims, amongst other things, that anonymous accounts pose a threat to democracy, that they deceive people, and that they can be used to mislead, etc. It is indeed easy to find arguments against anonymity; perhaps the most common one is the 'nothing to hide, nothing to fear' argument.

One of the many problems with this argument is that it assumes that abuse of power never occurs. History has much to teach us here. Sometimes, authorities can act in an arbitrary, discriminatory, or even oppressive manner, at least in hindsight. Take, for instance, the struggles of the homosexual community, the courageous dissidents who defied communist regimes, or the women who fought for their right to vote in the suffragette movement.

It was difficult for homosexuals to be open about their sexuality in Sweden in the 1970s. Many risked losing their jobs, being ostracised, or harassed. Anonymity was therefore a necessity for many. Homosexuality was actually classified as a mental illness in Sweden until 1979.

A couple of decades earlier, dissidents in communist regimes in Europe used pseudonyms when publishing samizdat magazines. The Czech author and dissident Václav Havel, who later became the President of the Czech Republic, used a pseudonym when publishing his texts. The same was true for the Russian author and literary prize winner Alexander Solzhenitsyn. Indeed, in Central and Eastern Europe, anonymity was of the utmost importance.

One hundred years ago, women all over the world fought for the right to vote and to be treated as equals. Many were open in their struggle, but for others, anonymity was a necessity as they risked being socially ostracised, losing their jobs, or even being arrested.

Full transparency is not always possible or desirable. Anonymity can promote creativity and innovation as it gives people the opportunity to experiment and try out new ideas without fear of being judged or criticised. This applies not only to individuals but also to our society, in terms of ideas, laws, norms, and culture.

It is also a strange paradox that those who wish to limit freedom of speech and abolish anonymity simultaneously claim to be concerned about the possible return of fascism. The solutions they advocate are, in fact, precisely what would make it easier for a tyrannical regime to maintain its power. To advocate for the abolition of anonymity, one must also be of the (absurd) opinion that the development of history has now reached its definitive end.

-

@ 42342239:1d80db24

2024-09-26 07:57:04


The boiling frog is a simple tale that illustrates the danger of gradual change: if you put a frog in boiling water, it will quickly jump out to escape the heat. But if you place a frog in warm water and gradually increase the temperature, it won't notice the change and will eventually cook itself. Might the decline in cash usage be construed as an example of this tale?

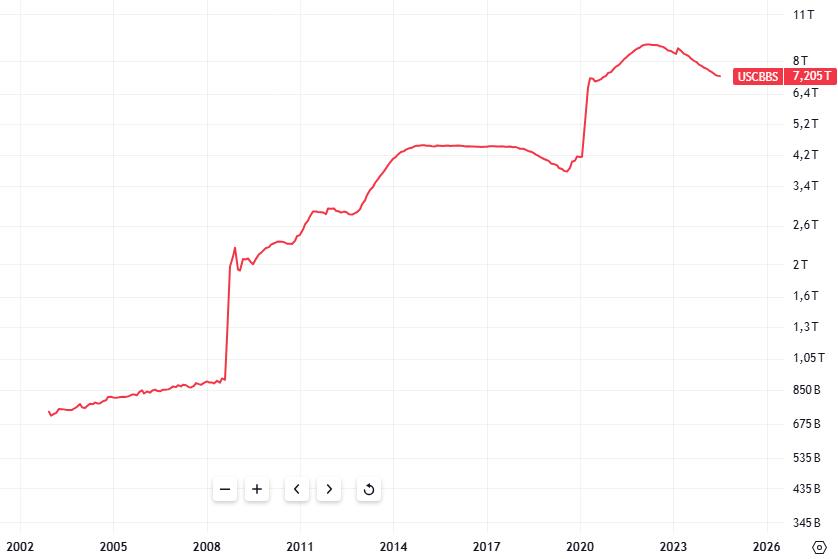

As long as individuals can freely transact with each other and conduct purchases and sales without intermediaries[^1] such as with cash, our freedoms and rights remain secure from potential threats posed by the payment system. However, as we have seen in several countries such as Sweden over the past 15 years, the use of cash and the amount of banknotes and coins in circulation have decreased. All to the benefit of various intermediated[^1] electronic alternatives.

The reasons for this trend include:

- The costs associated with cash usage have been increasing.

- Increased regulatory burdens due to stricter anti-money laundering regulations.

- Closed bank branches and fewer ATMs.

- The Riksbank's aggressive banknote switches resulted in a situation where the notes were no longer recognized.

Market forces or "market forces"?

Some may argue that the "de-cashing" of society is a consequence of market forces. But does this hold true? Leading economists at times recommend interventions with the express purpose of misleading the public, such as proposing measures that are "opaque to most voters."

In a 2017 working paper on de-cashing from the International Monetary Fund (IMF), such thought processes, even recommendations, can be found. IMF economist Alexei Kireyev, formerly a professor at an institute associated with the Soviet Union's KGB (MGIMO) and economic adviser to Mikhail Gorbachev 1989-91, wrote that:

- "Social conventions may also be disrupted as de-cashing may be viewed as a violation of fundamental rights, including freedom of contract and freedom of ownership."

- Letting the private sector lead "the de-cashing" is preferable, as it will seem "almost entirely benign". The "tempting attempts to impose de-cashing by a decree should be avoided"

- "A targeted outreach program is needed to alleviate suspicions related to de-cashing"

In the text, he also offered suggestions on the most effective approach to diminish the use of cash:

- The de-cashing process could build on the initial and largely uncontested steps, such as the phasing out of large denomination bills, the placement of ceilings on cash transactions, and the reporting of cash moves across the borders.

- Include creating economic incentives to reduce the use of cash in transactions

- Simplify "the opening and use of transferrable deposits, and further computerizing the financial system."

As is customary in such a context, it is noted that the article only describes research and does not necessarily reflect the IMF's views. However, isn't it remarkable that all of these proposals have come to fruition and the process continues? Central banks have phased out banknotes with higher denominations. Banks' regulatory complexity seemingly increases by the day (try to get a bank to handle any larger amounts of cash). The transfer of cash from one nation to another has become increasingly burdensome. The European Union has recently introduced restrictions on cash transactions. Even the law governing the Swedish central bank is written so as to guarantee a further undermining of cash. All while the market share is growing for alternatives such as transferable deposits[^1].

The old European disease

The Czech Republic's former president Václav Havel, who played a key role in advocating for human rights during the communist repression, was once asked what the new member states in the EU could do to pay back for all the economic support they had received from older member states. He replied that the European Union still suffers from the old European disease, namely the tendency to compromise with evil. And that the new members, who have a recent experience of totalitarianism, are obliged to take a more principled stance - sometimes necessary - and to monitor the European Union in this regard, and educate it.

The American computer scientist and cryptographer David Chaum said in 1996 that "[t]he difference between a bad electronic cash system and well-developed digital cash will determine whether we will have a dictatorship or a real democracy". If Václav Havel were alive today, he would likely share Chaum's sentiment. Indeed, on the current path of "de-cashing", we risk abolishing or limiting our liberties and rights, "including freedom of contract and freedom of ownership" - and this according to an economist at the IMF(!).

As the frog was unwittingly boiled alive, our freedoms are quietly being undermined. The temperature is rising. Will people take notice before our liberties are irreparably damaged?

[^1]: Transferable deposits are intermediated. Intermediated means payments involving one or several intermediaries, like a bank, a card issuer or a payment processor. In contrast, a disintermediated payment would entail a direct transaction between parties without go-betweens, such as with cash.

-

@ ee11a5df:b76c4e49

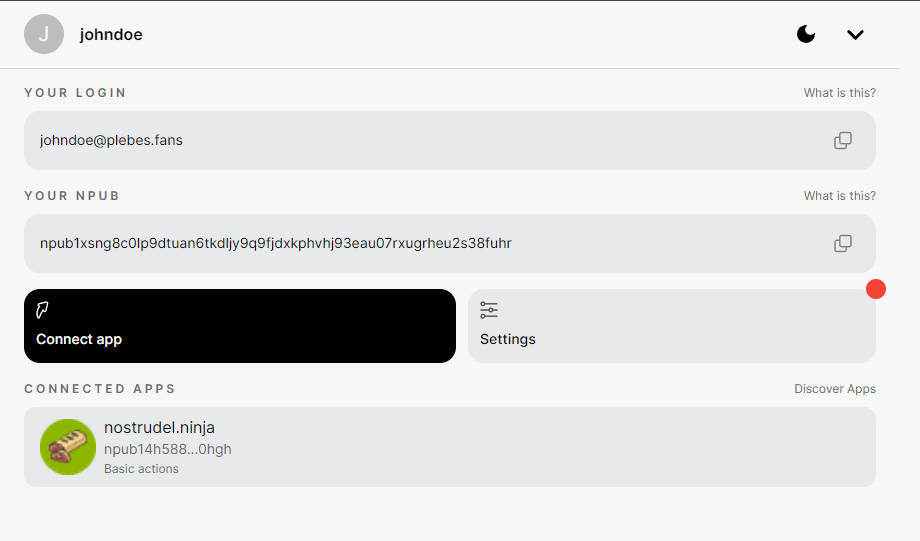

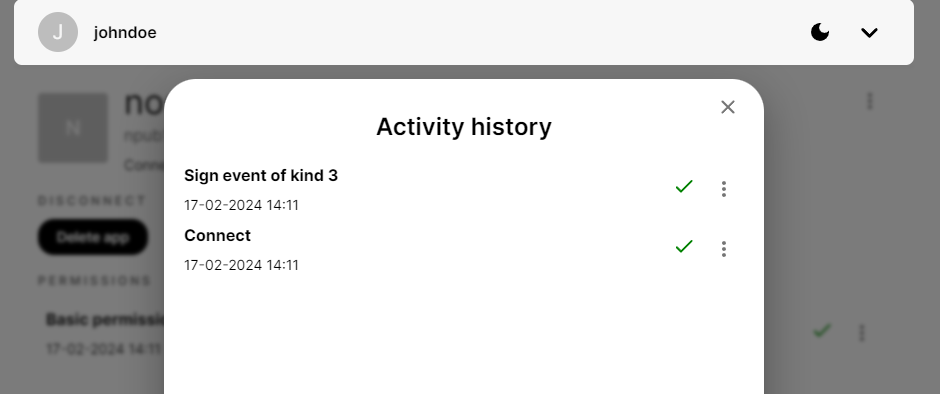

2024-09-11 08:16:37


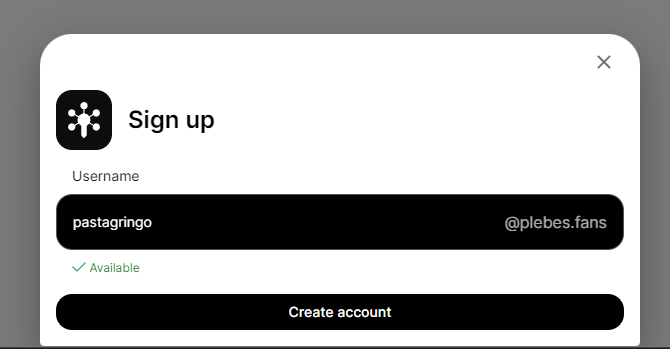

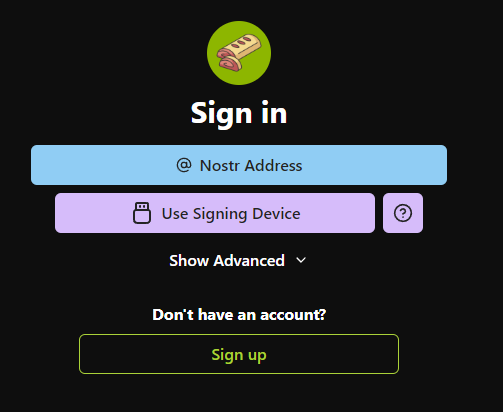

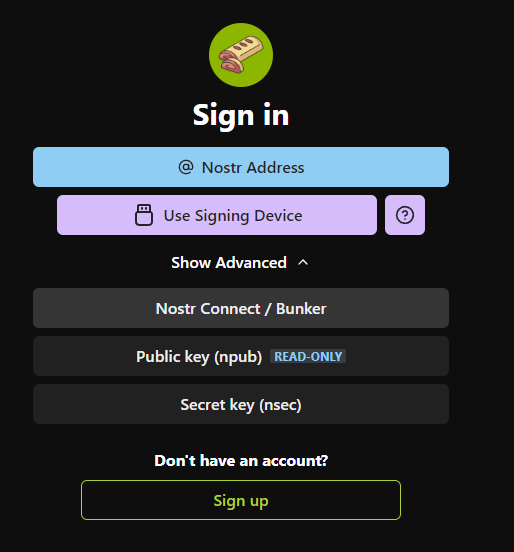

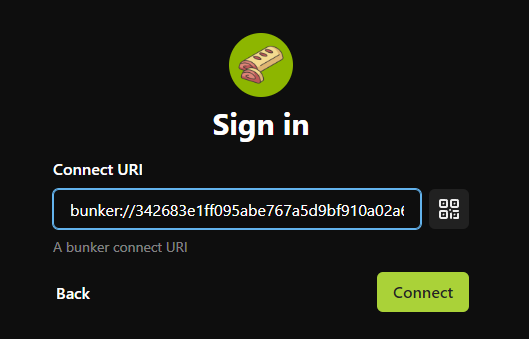

Bye-Bye Reply Guy

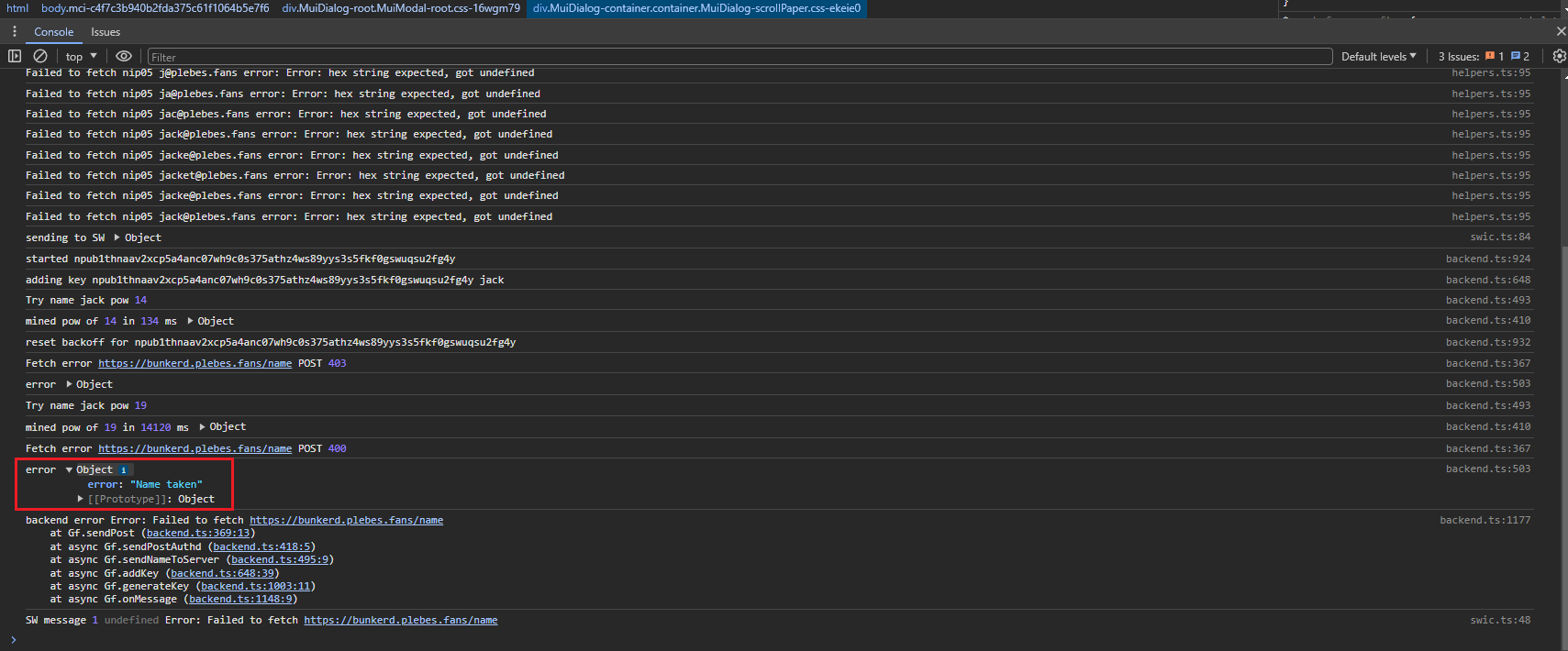

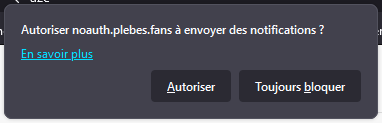

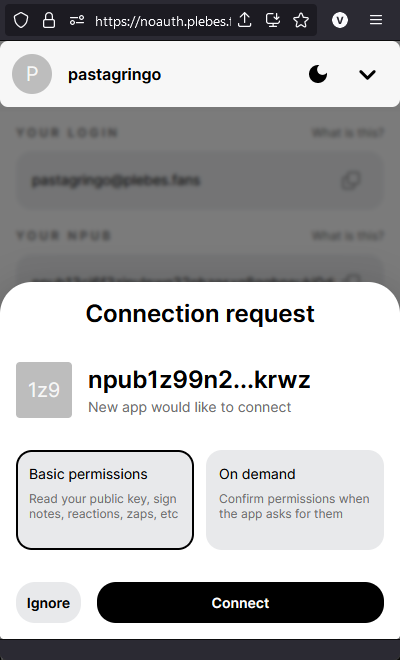

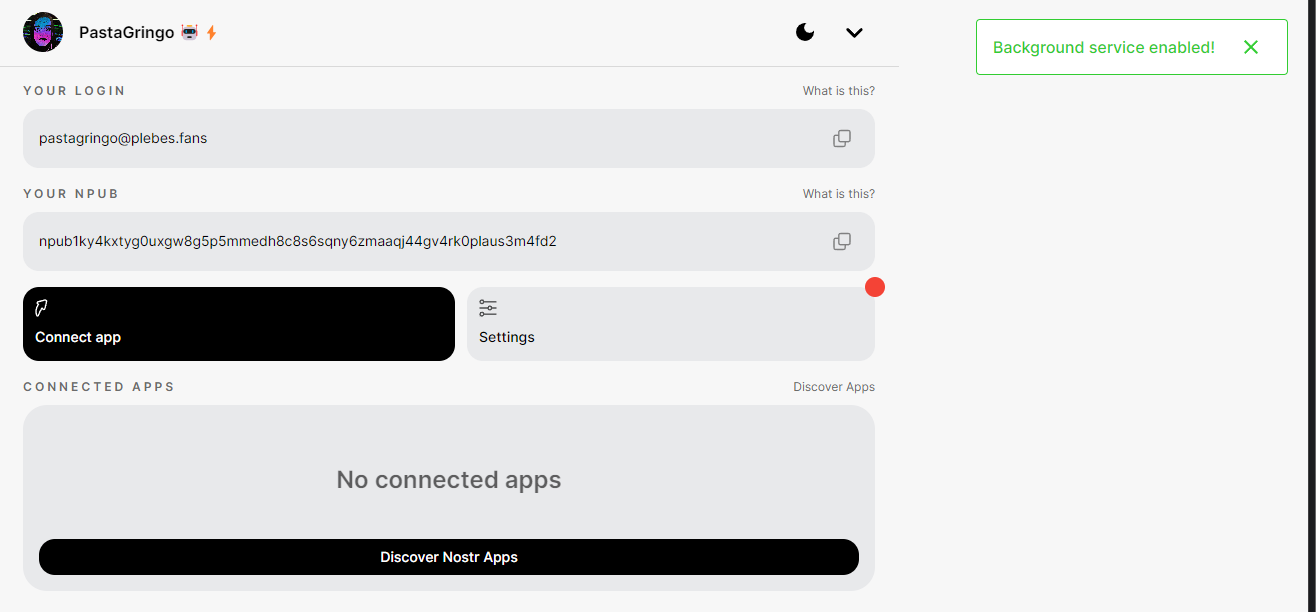

There is a camp of nostr developers that believe spam filtering needs to be done by relays. Or at the very least by DVMs. I concur. In this way, once you configure what you want to see, it applies to all nostr clients.

But we are not there yet.

In the meantime we have ReplyGuy, and gossip needed some changes to deal with it.

Strategies in Short

- WEB OF TRUST: Only accept events from people you follow, or people they follow - this avoids new people entirely until somebody else that you follow friends them first, which is too restrictive for some people.

- TRUSTED RELAYS: Allow every post from relays that you trust to do good spam filtering.

- REJECT FRESH PUBKEYS: Only accept events from people you have seen before - this allows you to find new people, but you will miss their very first post (their second post must count as someone you have seen before, even if you discarded the first post)

- PATTERN MATCHING: Scan for known spam phrases and words and block those events, either on content or metadata or both or more.

- TIE-IN TO EXTERNAL SYSTEMS: Require a valid NIP-05, or other nostr event binding their identity to some external identity

- PROOF OF WORK: Require a minimum proof-of-work

All of these strategies are useful, but they have to be combined properly.

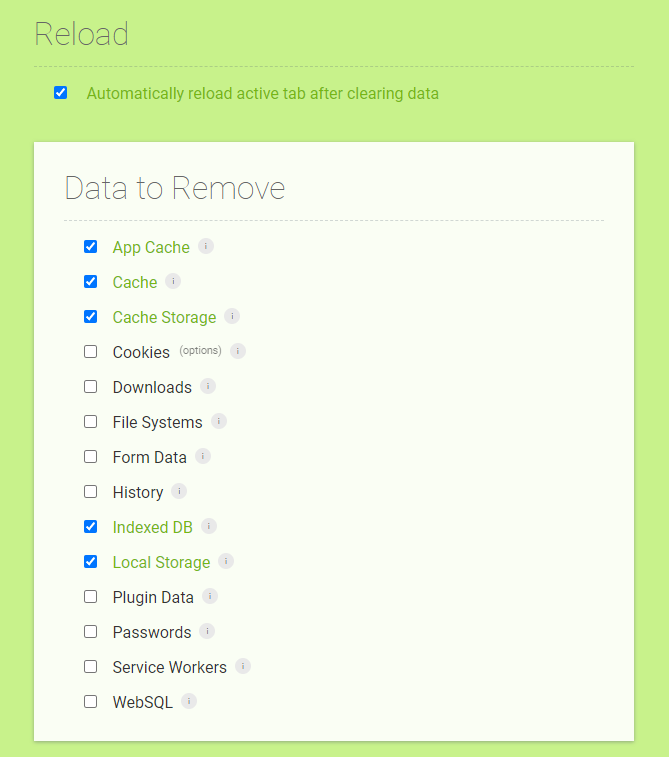

filter.rhai

Gossip loads a file called "filter.rhai" in your gossip directory if it exists. It must be a Rhai language script that meets certain requirements (see the example in the gossip source code directory). Then it applies it to filter spam.

This spam filtering code is being updated currently. It is not even on unstable yet, but it will be there probably tomorrow sometime. Then to master. Eventually to a release.

Here is an example using all of the techniques listed above:

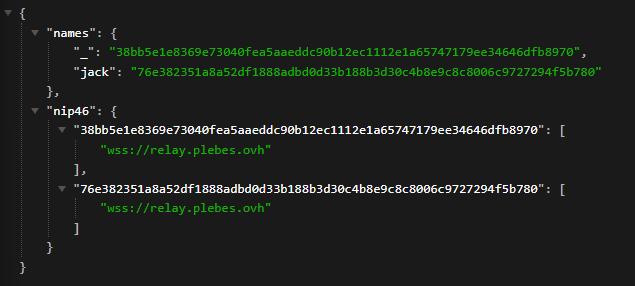

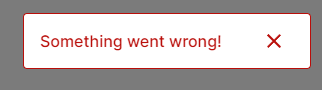

```rhai
// This is a sample spam filtering script for the gossip nostr
// client. The language is called Rhai, details are at:
// https://rhai.rs/book/
//
// For gossip to find your spam filtering script, put it in
// your gossip profile directory. See
// https://docs.rs/dirs/latest/dirs/fn.data_dir.html
// to find the base directory. A subdirectory "gossip" is your
// gossip data directory which for most people is their profile
// directory too. (Note: if you use a GOSSIP_PROFILE, you'll
// need to put it one directory deeper into that profile
// directory).
//
// This filter is used to filter out and refuse to process
// incoming events as they flow in from relays, and also to
// filter which events get displayed in certain circumstances.
// It is only run on feed-displayable event kinds, and only by
// authors you are not following. In case of error, nothing is
// filtered.
//
// You must define a function called 'filter' which returns one
// of these constant values:
//   DENY  (the event is filtered out)
//   ALLOW (the event is allowed through)
//   MUTE  (the event is filtered out, and the author is
//          automatically muted)
//
// Your script will be provided the following global variables:
//   'caller'        - a string that is one of "Process",
//                     "Thread", "Inbox" or "Global" indicating
//                     which part of the code is running your
//                     script
//   'content'       - the event content as a string
//   'id'            - the event ID, as a hex string
//   'kind'          - the event kind as an integer
//   'muted'         - if the author is in your mute list
//   'name'          - if we have it, the name of the author
//                     (or your petname), else an empty string
//   'nip05valid'    - whether nip05 is valid for the author,
//                     as a boolean
//   'pow'           - the Proof of Work on the event
//   'pubkey'        - the event author public key, as a hex
//                     string
//   'seconds_known' - the number of seconds that the author
//                     of the event has been known to gossip
//   'spamsafe'      - true only if the event came in from a
//                     relay marked as SpamSafe during Process
//                     (even if the global setting for SpamSafe
//                     is off)

fn filter() {

    // Show spam on global
    // (global events are ephemeral; these won't grow the
    //  database)
    if caller == "Global" {
        return ALLOW;
    }

    // Block ReplyGuy
    if name.contains("ReplyGuy") || name.contains("ReplyGal") {
        return DENY;
    }

    // Block known DM spam
    // (giftwraps are unwrapped before the content is passed to
    //  this script)
    // The phrase is written in lowercase because the content is
    // lowercased before the comparison.
    if content.to_lower().contains(
        "mr. gift and mrs. wrap under the tree, kissing!"
    ) {
        return DENY;
    }

    // Reject events from new pubkeys, unless they have a high
    // PoW or we somehow already have a nip05valid for them
    //
    // If this turns out to be a legit person, we will start
    // hearing their events 2 seconds from now, so we will
    // only miss their very first event.
    if seconds_known <= 2 && pow < 25 && !nip05valid {
        return DENY;
    }

    // Mute offensive people
    if content.to_lower().contains(" kike") ||
        content.to_lower().contains("kike ") ||
        content.to_lower().contains(" nigger") ||
        content.to_lower().contains("nigger ")
    {
        return MUTE;
    }

    // Reject events from muted people
    //
    // Gossip already does this internally, and since we are
    // not Process, this is rather redundant. But this works
    // as an example.
    if muted {
        return DENY;
    }

    // Accept if the PoW is large enough
    if pow >= 25 {
        return ALLOW;
    }

    // Accept if their NIP-05 is valid
    if nip05valid {
        return ALLOW;
    }

    // Accept if the event came through a spamsafe relay
    if spamsafe {
        return ALLOW;
    }

    // Reject the rest
    DENY
}
```

-

@ 42342239:1d80db24

2024-09-02 12:08:29


The ongoing debate surrounding freedom of expression may revolve more around determining who gets to control the dissemination of information rather than any claimed notion of safeguarding democracy. Similarities can be identified from 500 years ago, following the invention of the printing press.

What has been will be again, what has been done will be done again; there is nothing new under the sun.

-- Ecclesiastes 1:9

The debate over freedom of expression and its limits continues to rage on. In the UK, citizens are being arrested for sharing humorous images. In Ireland, it may soon become illegal to possess "reckless" memes. Australia is trying to get X to hide information. Venezuela's Maduro blocked X earlier this year, as did a judge on Brazil's Supreme Court. In the US, a citizen has been imprisoned for spreading misleading material following a controversial court ruling. In Germany, the police are searching for a social media user who called a politician overweight. Many are also expressing concerns about deep fakes (AI-generated videos, images, or audio that are designed to deceive).

These questions are not new, however. What we perceive as new questions are often just a reflection of earlier times. After Gutenberg invented the printing press in the 15th century, there were soon hundreds of printing presses across Europe. The Church began using printing presses to mass-produce indulgences. "As soon as the coin in the coffer rings, the soul from purgatory springs" was a phrase used by a traveling monk who sold such indulgences at the time. Martin Luther questioned the reasonableness of this practice. Eventually, he posted the 95 theses on the church door in Wittenberg. He also translated the Bible into German. A short time later, his works, also mass-produced, accounted for a third of all books sold in Germany. Luther refused to recant his provocations as then determined by the Church's central authority. He was excommunicated in 1520 by the Pope and soon declared an outlaw by the Holy Roman Emperor.

This did not stop him. Instead, Luther referred to the Pope as "Pope Fart-Ass" and as the "Ass-God in Rome". He also commissioned caricatures, such as woodcuts showing a female demon giving birth to the Pope and cardinals, of German peasants responding to a papal edict by showing the Pope their backsides and breaking wind, and more.

Gutenberg's printing presses contributed to the spread of information in a way similar to how the internet does in today's society. The Church's ability to control the flow of information was undermined, much like how newspapers, radio, and TV have partially lost this power today. The Pope excommunicated Luther, which is reminiscent of those who are de-platformed or banned from various platforms today. The Emperor declared Luther an outlaw, which is similar to how the UK's Prime Minister is imprisoning British citizens today. Luther called the Pope derogatory names, which is reminiscent of the individual who recently had the audacity to call an overweight German minister overweight.

Freedom of expression must be curtailed to combat the spread of false or harmful information in order to protect democracy, or so it is claimed. But perhaps it is more about who gets to control the flow of information?

As is often the case, there is nothing new under the sun.

-

@ eac63075:b4988b48

2024-10-21 08:11:11


Imagine sending a private message to a friend, only to learn that authorities could be scanning its contents without your knowledge. This isn't a scene from a dystopian novel but a potential reality under the European Union's proposed "Chat Control" measures. Aimed at combating serious crimes like child exploitation and terrorism, these proposals could significantly impact the privacy of everyday internet users. As encrypted messaging services become the norm for personal and professional communication, understanding Chat Control is essential. This article delves into what Chat Control entails, why it's being considered, and how it could affect your right to private communication.

https://www.fountain.fm/episode/coOFsst7r7mO1EP1kSzV

https://open.spotify.com/episode/0IZ6kMExfxFm4FHg5DAWT8?si=e139033865e045de

Sections:

- Introduction

- What Is Chat Control?

- Why Is the EU Pushing for Chat Control?

- The Privacy Concerns and Risks

- The Technical Debate: Encryption and Backdoors

- Global Reactions and the Debate in Europe

- Possible Consequences for Messaging Services

- What Happens Next? The Future of Chat Control

- Conclusion

What Is Chat Control?

"Chat Control" refers to a set of proposed measures by the European Union aimed at monitoring and scanning private communications on messaging platforms. The primary goal is to detect and prevent the spread of illegal content, such as child sexual abuse material (CSAM), and to combat terrorism. While the intention is to enhance security and protect vulnerable populations, these proposals have raised significant privacy concerns.

At its core, Chat Control would require messaging services to implement automated scanning technologies that can analyze the content of messages—even those that are end-to-end encrypted. This means that the private messages you send to friends, family, or colleagues could be subject to inspection by algorithms designed to detect prohibited content.

Origins of the Proposal

The initiative for Chat Control emerged from the EU's desire to strengthen its digital security infrastructure. High-profile cases of online abuse and the use of encrypted platforms by criminal organizations have prompted lawmakers to consider more invasive surveillance tactics. The European Commission has been exploring legislation that would make it mandatory for service providers to monitor communications on their platforms.

How Messaging Services Work

Most modern messaging apps, like Signal, Session, SimpleX, Veilid, Protonmail and Tutanota (among others), use end-to-end encryption (E2EE). This encryption ensures that only the sender and the recipient can read the messages being exchanged. Not even the service providers can access the content. This level of security is crucial for maintaining privacy in digital communications, protecting users from hackers, identity thieves, and other malicious actors.

Key Elements of Chat Control

- Automated Content Scanning: Service providers would use algorithms to scan messages for illegal content.

- Circumvention of Encryption: To scan encrypted messages, providers might need to alter their encryption methods, potentially weakening security.

- Mandatory Reporting: If illegal content is detected, providers would be required to report it to authorities.

- Broad Applicability: The measures could apply to all messaging services operating within the EU, affecting both European companies and international platforms.

Why It Matters

Understanding Chat Control is essential because it represents a significant shift in how digital privacy is handled. While combating illegal activities online is crucial, the methods proposed could set a precedent for mass surveillance and the erosion of privacy rights. Everyday users who rely on encrypted messaging for personal and professional communication might find their conversations are no longer as private as they once thought.

Why Is the EU Pushing for Chat Control?

The European Union's push for Chat Control stems from a pressing concern to protect its citizens, particularly children, from online exploitation and criminal activities. With the digital landscape becoming increasingly integral to daily life, the EU aims to strengthen its ability to combat serious crimes facilitated through online platforms.

Protecting Children and Preventing Crime

One of the primary motivations behind Chat Control is the prevention of child sexual abuse material (CSAM) circulating on the internet. Law enforcement agencies have reported a significant increase in the sharing of illegal content through private messaging services. By implementing Chat Control, the EU believes it can more effectively identify and stop perpetrators, rescue victims, and deter future crimes.

Terrorism is another critical concern. Encrypted messaging apps can be used by terrorist groups to plan and coordinate attacks without detection. The EU argues that accessing these communications could be vital in preventing such threats and ensuring public safety.

Legal Context and Legislative Drivers

The push for Chat Control is rooted in several legislative initiatives:

- ePrivacy Directive: This directive regulates the processing of personal data and the protection of privacy in electronic communications. The EU is considering amendments that would allow for the scanning of private messages under specific circumstances.

- Temporary Derogation: In 2021, the EU adopted a temporary regulation permitting voluntary detection of CSAM by communication services. The current proposals aim to make such measures mandatory and more comprehensive.

- Regulation Proposals: The European Commission has proposed regulations that would require service providers to detect, report, and remove illegal content proactively. This would include the use of technologies to scan private communications.

Balancing Security and Privacy

EU officials argue that the proposed measures are a necessary response to evolving digital threats. They emphasize the importance of staying ahead of criminals who exploit technology to harm others. By implementing Chat Control, they believe law enforcement can be more effective without entirely dismantling privacy protections.

However, the EU also acknowledges the need to balance security with fundamental rights. The proposals include provisions intended to limit the scope of surveillance, such as:

- Targeted Scanning: Focusing on specific threats rather than broad, indiscriminate monitoring.

- Judicial Oversight: Requiring court orders or oversight for accessing private communications.

- Data Protection Safeguards: Implementing measures to ensure that data collected is handled securely and deleted when no longer needed.

The Urgency Behind the Push

High-profile cases of online abuse and terrorism have heightened the sense of urgency among EU policymakers. Reports of increasing online grooming and the widespread distribution of illegal content have prompted calls for immediate action. The EU posits that without measures like Chat Control, these problems will continue to escalate unchecked.

Criticism and Controversy

Despite the stated intentions, the push for Chat Control has been met with significant criticism. Opponents argue that the measures could be ineffective against savvy criminals who can find alternative ways to communicate. There is also concern that such surveillance could be misused or extended beyond its original purpose.

The Privacy Concerns and Risks

While the intentions behind Chat Control focus on enhancing security and protecting vulnerable groups, the proposed measures raise significant privacy concerns. Critics argue that implementing such surveillance could infringe on fundamental rights and set a dangerous precedent for mass monitoring of private communications.

Infringement on Privacy Rights

At the heart of the debate is the right to privacy. By scanning private messages, even with automated tools, the confidentiality of personal communications is compromised. Users may no longer feel secure sharing sensitive information, fearing that their messages could be intercepted or misinterpreted by algorithms.

Erosion of End-to-End Encryption

End-to-end encryption (E2EE) is a cornerstone of digital security, ensuring that only the sender and recipient can read the messages exchanged. Chat Control could necessitate the introduction of "backdoors" or weaken encryption protocols, making it easier for unauthorized parties to access private data. This not only affects individual privacy but also exposes communications to potential cyber threats.

Concerns from Privacy Advocates

Organizations like Signal and Tutanota, which offer encrypted messaging services, have voiced strong opposition to Chat Control. They warn that undermining encryption could have far-reaching consequences:

- Security Risks: Weakening encryption makes systems more vulnerable to hacking, espionage, and cybercrime.

- Global Implications: Changes in EU regulations could influence policies worldwide, leading to a broader erosion of digital privacy.

- Ineffectiveness Against Crime: Determined criminals might resort to other, less detectable means of communication, rendering the measures ineffective while still compromising the privacy of law-abiding citizens.

Potential for Government Overreach

There is a fear that Chat Control could lead to increased surveillance beyond its original scope. Once the infrastructure for scanning private messages is in place, it could be repurposed or expanded to monitor other types of content, stifling free expression and dissent.

Real-World Implications for Users

- False Positives: Automated scanning technologies are not infallible and could mistakenly flag innocent content, leading to unwarranted scrutiny or legal consequences for users.

- Chilling Effect: Knowing that messages could be monitored might discourage people from expressing themselves freely, impacting personal relationships and societal discourse.

- Data Misuse: Collected data could be vulnerable to leaks or misuse, compromising personal and sensitive information.
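The false-positive concern is largely a matter of base rates, and a short calculation can illustrate it. The sketch below is illustrative only: the function name `flag_precision` and every number passed to it (message volume, prevalence, detection rate, false-positive rate) are hypothetical assumptions chosen to show the arithmetic, not measurements of any real scanning system.

```python
def flag_precision(total_messages: int, prevalence: float,
                   sensitivity: float, false_positive_rate: float) -> dict:
    """Estimate how many flagged messages are actually illegal.

    All inputs are hypothetical parameters:
      prevalence          - share of messages that are illegal
      sensitivity         - share of illegal messages the scanner catches
      false_positive_rate - share of legal messages wrongly flagged
    """
    illegal = total_messages * prevalence
    legal = total_messages - illegal
    true_positives = illegal * sensitivity
    false_positives = legal * false_positive_rate
    flagged = true_positives + false_positives
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        # Share of flagged messages that really are illegal.
        "precision": true_positives / flagged if flagged else 0.0,
    }


# Hypothetical scenario: 1 in 10,000 messages is illegal, the scanner
# catches 90% of them and wrongly flags 0.1% of legal messages.
result = flag_precision(1_000_000, 0.0001, 0.90, 0.001)
print(result)
```

Under these assumed numbers, about 90 illegal messages are caught alongside roughly 1,000 wrongly flagged legal ones, so only around 8% of flags point at actual illegal content. Different assumptions give different figures, but when the targeted content is rare, even a small error rate yields more false flags than true ones.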

Legal and Ethical Concerns

Privacy advocates also highlight potential conflicts with existing laws and ethical standards:

- Violation of Fundamental Rights: The European Convention on Human Rights and other international agreements protect the right to privacy and freedom of expression.

- Questionable Effectiveness: The ethical justification for such invasive measures is challenged if they do not significantly improve safety or if they disproportionately impact innocent users.

Opposition from Member States and Organizations

Countries like Germany and organizations such as European Digital Rights (EDRi) have expressed opposition to Chat Control. They emphasize the need to protect digital privacy and caution against hasty legislation that could have unintended consequences.

The Technical Debate: Encryption and Backdoors

The discussion around Chat Control inevitably leads to a complex technical debate centered on encryption and the potential introduction of backdoors into secure communication systems. Understanding these concepts is crucial to grasping the full implications of the proposed measures.

What Is End-to-End Encryption (E2EE)?

End-to-end encryption is a method of secure communication that prevents third parties from accessing data while it's transferred from one end system to another. In simpler terms, only the sender and the recipient can read the messages. Even the service providers operating the messaging platforms cannot decrypt the content.

- Security Assurance: E2EE ensures that sensitive information—be it personal messages, financial details, or confidential business communications—remains private.

- Widespread Use: Popular messaging and email services such as Signal, Session, SimpleX, Proton Mail, and Tuta (formerly Tutanota), as well as frameworks like Veilid, rely on E2EE to protect user data.
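The idea that the service provider only relays data it cannot read can be sketched in a few lines. This is a toy illustration, not how Signal or any real messenger works: the Diffie-Hellman parameters, the `xor_stream` cipher, and all names are assumptions made for the example, and the scheme is not secure for real use.

```python
import hashlib
import secrets

# Toy parameters for illustration only: real messengers use vetted
# protocols and elliptic-curve keys, not this hand-rolled scheme.
P = 2**127 - 1  # a Mersenne prime
G = 3


def keypair():
    """Generate a (private, public) Diffie-Hellman key pair."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def shared_key(my_private, their_public):
    """Derive a 32-byte key that both endpoints can compute independently."""
    secret = pow(their_public, my_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


def xor_stream(key, data):
    """Encrypt or decrypt by XOR with a SHA-256 based keystream (toy cipher)."""
    out = bytearray()
    for i in range(0, len(data), 32):
        block = hashlib.sha256(key + i.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[i:i + 32], block))
    return bytes(out)


alice_priv, alice_pub = keypair()
bob_priv, bob_pub = keypair()

# Only public keys and ciphertext ever pass through the server.
ciphertext = xor_stream(shared_key(alice_priv, bob_pub), b"meet at noon")
server_sees = {"alice_pub": alice_pub, "bob_pub": bob_pub, "ciphertext": ciphertext}

# Bob derives the same key from his private key and Alice's public key.
plaintext = xor_stream(shared_key(bob_priv, alice_pub), server_sees["ciphertext"])
print(plaintext)
```

The point of the sketch is the `server_sees` dictionary: nothing in it is enough to recover the message without one of the private keys, which never leave the endpoints.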

How Chat Control Affects Encryption

Implementing Chat Control as proposed would require messaging services to scan the content of messages for illegal material. To do this on encrypted platforms, providers might have to:

- Introduce Backdoors: Create a means for third parties (including the service provider or authorities) to access encrypted messages.

- Client-Side Scanning: Install software on users' devices that scans messages before they are encrypted and sent, effectively bypassing E2EE.
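Why client-side scanning bypasses E2EE can be shown with a minimal sketch. Everything here is a simplifying assumption: the blocklist, the `send_message` function, and the stand-in `toy_encrypt` are hypothetical, and exact SHA-256 matching is used only for brevity where real systems would more likely rely on perceptual hashing or classifiers.

```python
import hashlib

# Hypothetical blocklist of SHA-256 digests supplied to the client.
BLOCKLIST = {hashlib.sha256(b"known illegal file bytes").hexdigest()}


def send_message(plaintext, encrypt, report):
    """Scan the plaintext on the device, then encrypt and return the ciphertext."""
    digest = hashlib.sha256(plaintext).hexdigest()
    if digest in BLOCKLIST:
        report(digest)  # leaves the device outside the encrypted channel
    return encrypt(plaintext)


reports = []
toy_encrypt = lambda data: bytes(b ^ 0x5A for b in data)  # stand-in, not real encryption

send_message(b"holiday photo", toy_encrypt, reports.append)
send_message(b"known illegal file bytes", toy_encrypt, reports.append)
print(len(reports))
```

The encryption step itself is untouched, which is why such a design can be described as "not breaking encryption". The scan and the report nevertheless happen on the plaintext, before encryption, so the confidentiality guarantee no longer holds for anything the scanner decides to flag.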

The Risks of Weakening Encryption

1. Compromised Security for All Users

Introducing backdoors or client-side scanning tools can create vulnerabilities:

- Exploitable Gaps: If a backdoor exists, malicious actors might find and exploit it, leading to data breaches.

- Universal Impact: Weakening encryption doesn't just affect targeted individuals; it potentially exposes all users to increased risk.

2. Undermining Trust in Digital Services

- User Confidence: Knowing that private communications could be accessed might deter people from using digital services or push them toward unregulated platforms.

- Business Implications: Companies relying on secure communications might face increased risks, affecting economic activities.

3. Ineffectiveness Against Skilled Adversaries

- Alternative Methods: Criminals might shift to other encrypted channels or develop new ways to avoid detection.

- False Sense of Security: Weakening encryption could give the impression of increased safety while adversaries adapt and continue their activities undetected.

Signal’s Response and Stance

Signal, a leading encrypted messaging service, has been vocal in its opposition to the EU's proposals:

- Refusal to Weaken Encryption: Signal's president, Meredith Whittaker, has stated that Signal would rather cease operations in the EU than compromise its encryption standards.

- Advocacy for Privacy: Signal emphasizes that strong encryption is essential for protecting human rights and freedoms in the digital age.

Understanding Backdoors

A "backdoor" in encryption is an intentional weakness inserted into a system to allow authorized access to encrypted data. While intended for legitimate use by authorities, backdoors pose several problems:

- Security Vulnerabilities: They can be discovered and exploited by unauthorized parties, including hackers and foreign governments.

- Ethical Concerns: The existence of backdoors raises questions about consent and the extent to which governments should be able to access private communications.

The Slippery Slope Argument

Privacy advocates warn that introducing backdoors or mandatory scanning sets a precedent:

- Expanded Surveillance: Once in place, these measures could be extended to monitor other types of content beyond the original scope.

- Erosion of Rights: Gradual acceptance of surveillance can lead to a significant reduction in personal freedoms over time.

Potential Technological Alternatives

Some suggest that it's possible to fight illegal content without undermining encryption:

- Metadata Analysis: Focusing on patterns of communication rather than content.

- Enhanced Reporting Mechanisms: Encouraging users to report illegal content voluntarily.

- Investing in Law Enforcement Capabilities: Strengthening traditional investigative methods without compromising digital security.

The technical community largely agrees that weakening encryption is not the solution:

- Consensus on Security: Strong encryption is essential for the safety and privacy of all internet users.

- Call for Dialogue: Technologists and privacy experts advocate for collaborative approaches that address security concerns without sacrificing fundamental rights.

Global Reactions and the Debate in Europe

The proposal for Chat Control has ignited a heated debate across Europe and beyond, with various stakeholders weighing in on the potential implications for privacy, security, and fundamental rights. The reactions are mixed, reflecting differing national perspectives, political priorities, and societal values.

Support for Chat Control

Some EU member states and officials support the initiative, emphasizing the need for robust measures to combat online crime and protect citizens, especially children. They argue that:

- Enhanced Security: Mandatory scanning can help law enforcement agencies detect and prevent serious crimes.

- Responsibility of Service Providers: Companies offering communication services should play an active role in preventing their platforms from being used for illegal activities.

- Public Safety Priorities: The protection of vulnerable populations justifies the implementation of such measures, even if it means compromising some aspects of privacy.

Opposition within the EU

Several countries and organizations have voiced strong opposition to Chat Control, citing concerns over privacy rights and the potential for government overreach.

Germany

- Stance: Germany has been one of the most vocal opponents of the proposed measures.

- Reasons:

- Constitutional Concerns: The German government argues that Chat Control could violate constitutional protections of privacy and confidentiality of communications.

- Security Risks: Weakening encryption is seen as a threat to cybersecurity.

- Legal Challenges: Potential conflicts with national laws protecting personal data and communication secrecy.

Netherlands

- Recent Developments: The Dutch government decided against supporting Chat Control, emphasizing the importance of encryption for security and privacy.

- Arguments:

- Effectiveness Doubts: Skepticism about the actual effectiveness of the measures in combating crime.

- Negative Impact on Privacy: Concerns about mass surveillance and the infringement of citizens' rights.

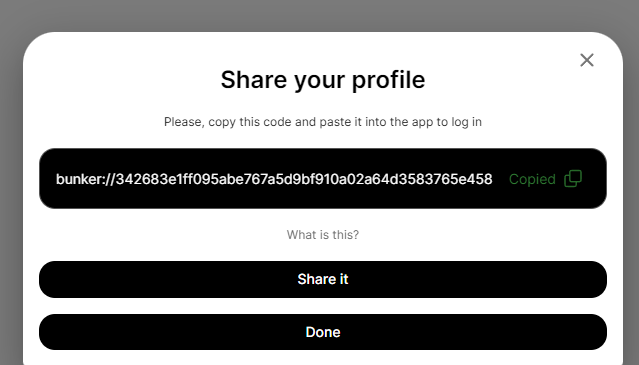

Table reference: Patrick Breyer - Chat Control in 23 September 2024

Privacy Advocacy Groups

European Digital Rights (EDRi)

- Role: A network of civil and human rights organizations working to defend rights and freedoms in the digital environment.

- Position:

- Strong Opposition: EDRi argues that Chat Control is incompatible with fundamental rights.

- Awareness Campaigns: Engaging in public campaigns to inform citizens about the potential risks.

- Policy Engagement: Lobbying policymakers to consider alternative approaches that respect privacy.

Politicians and Activists

Patrick Breyer

- Background: A Member of the European Parliament (MEP) from Germany, representing the Pirate Party.

- Actions:

- Advocacy: Actively campaigning against Chat Control through speeches, articles, and legislative efforts.

- Public Outreach: Using social media and public events to raise awareness.

- Legal Expertise: Highlighting the legal inconsistencies and potential violations of EU law.

Global Reactions

International Organizations

- Human Rights Watch and Amnesty International: These organizations have expressed concerns about the implications for human rights, urging the EU to reconsider.

Technology Companies

- Global Tech Firms: Companies like Apple and Microsoft are monitoring the situation, as EU regulations could affect their operations and user trust.

- Industry Associations: Groups representing tech companies have issued statements highlighting the risks to innovation and competitiveness.

The Broader Debate

The controversy over Chat Control reflects a broader struggle between security interests and privacy rights in the digital age. Key points in the debate include:

- Legal Precedents: How the EU's decision might influence laws and regulations in other countries.

- Digital Sovereignty: The desire of nations to control digital spaces within their borders.

- Civil Liberties: The importance of protecting freedoms in the face of technological advancements.

Public Opinion

- Diverse Views: Surveys and public forums show a range of opinions, with some citizens prioritizing security and others valuing privacy above all.

- Awareness Levels: Many people are still unaware of the potential changes, highlighting the need for public education on the issue.

The EU is at a crossroads, facing the challenge of addressing legitimate security concerns without undermining the fundamental rights that are central to its values. The outcome of this debate will have significant implications for the future of digital privacy and the balance between security and freedom in society.

Possible Consequences for Messaging Services

The implementation of Chat Control could have significant implications for messaging services operating within the European Union. Both large platforms and smaller providers might need to adapt their technologies and policies to comply with the new regulations, potentially altering the landscape of digital communication.

Impact on Encrypted Messaging Services

Signal and Similar Platforms

- Compliance Challenges: Encrypted messaging services like Signal rely on end-to-end encryption to secure user communications. Complying with Chat Control could force them to weaken their encryption protocols or implement client-side scanning, conflicting with their core privacy principles.

- Operational Decisions: Some platforms may choose to limit their services in the EU or cease operations altogether rather than compromise on encryption. Signal, for instance, has indicated that it would rather withdraw from European markets than undermine its security features.

Potential Blocking or Limiting of Services

- Regulatory Enforcement: Messaging services that do not comply with Chat Control regulations could face fines, legal action, or even be blocked within the EU.

- Access Restrictions: Users in Europe might find certain services unavailable or limited in functionality if providers decide not to meet the regulatory requirements.

Effects on Smaller Providers

- Resource Constraints: Smaller messaging services and startups may lack the resources to implement the required scanning technologies, leading to increased operational costs or forcing them out of the market.

- Innovation Stifling: The added regulatory burden could deter new entrants, reducing competition and innovation in the messaging service sector.

User Experience and Trust

- Privacy Concerns: Users may lose trust in messaging platforms if they know their communications are subject to scanning, leading to a decline in user engagement.

- Migration to Unregulated Platforms: There is a risk that users might shift to less secure or unregulated services, including those operated outside the EU or on the dark web, potentially exposing them to greater risks.

Technical and Security Implications

- Increased Vulnerabilities: Modifying encryption protocols to comply with Chat Control could introduce security flaws, making platforms more susceptible to hacking and data breaches.

- Global Security Risks: Changes made to accommodate EU regulations might affect the global user base of these services, extending security risks beyond European borders.

Impact on Businesses and Professional Communications

- Confidentiality Issues: Businesses that rely on secure messaging for sensitive communications may face challenges in ensuring confidentiality, affecting sectors like finance, healthcare, and legal services.

- Compliance Complexity: Companies operating internationally will need to navigate a complex landscape of differing regulations, increasing administrative burdens.

Economic Consequences

- Market Fragmentation: Divergent regulations could lead to a fragmented market, with different versions of services for different regions.

- Loss of Revenue: Messaging services might experience reduced revenue due to decreased user trust and engagement or the costs associated with compliance.

Responses from Service Providers

- Legal Challenges: Companies might pursue legal action against the regulations, citing conflicts with privacy laws and user rights.

- Policy Advocacy: Service providers may increase lobbying efforts to influence policy decisions and promote alternatives to Chat Control.

Possible Adaptations

- Technological Innovation: Some providers might invest in developing new technologies that can detect illegal content without compromising encryption, though the feasibility remains uncertain.

- Transparency Measures: To maintain user trust, companies might enhance transparency about how data is handled and what measures are in place to protect privacy.

The potential consequences of Chat Control for messaging services are profound, affecting not only the companies that provide these services but also the users who rely on them daily. The balance between complying with legal requirements and maintaining user privacy and security presents a significant challenge that could reshape the digital communication landscape.

What Happens Next? The Future of Chat Control

The future of Chat Control remains uncertain as the debate continues among EU member states, policymakers, technology companies, and civil society organizations. Several factors will influence the outcome of this contentious proposal, each carrying significant implications for digital privacy, security, and the regulatory environment within the European Union.

Current Status of Legislation

- Ongoing Negotiations: The proposed Chat Control measures are still under discussion within the European Parliament and the Council of the European Union. Amendments and revisions are being considered in response to the feedback from various stakeholders.

- Timeline: While there is no fixed date for the final decision, the EU aims to reach a consensus to implement effective measures against online crime without undue delay.

Key Influencing Factors

1. Legal Challenges and Compliance with EU Law

- Fundamental Rights Assessment: The proposals must be evaluated against the Charter of Fundamental Rights of the European Union, ensuring that any measures comply with rights to privacy, data protection, and freedom of expression.

- Court Scrutiny: Potential legal challenges could arise, leading to scrutiny by the European Court of Justice (ECJ), which may impact the feasibility and legality of Chat Control.

2. Technological Feasibility

- Development of Privacy-Preserving Technologies: Research into methods that can detect illegal content without compromising encryption is ongoing. Advances in this area could provide alternative solutions acceptable to both privacy advocates and security agencies.

- Implementation Challenges: The practical aspects of deploying scanning technologies across various platforms and services remain complex, and technical hurdles could delay or alter the proposed measures.

3. Political Dynamics

- Member State Positions: The differing stances of EU countries, such as Germany's opposition, play a significant role in shaping the final outcome. Consensus among member states is crucial for adopting EU-wide regulations.

- Public Opinion and Advocacy: Growing awareness and activism around digital privacy can influence policymakers. Public campaigns and lobbying efforts may sway decisions in favor of stronger privacy protections.

4. Industry Responses

- Negotiations with Service Providers: Ongoing dialogues between EU authorities and technology companies may lead to compromises or collaborative efforts to address concerns without fully implementing Chat Control as initially proposed.

- Potential for Self-Regulation: Messaging services might propose self-regulatory measures to combat illegal content, aiming to demonstrate effectiveness without the need for mandatory scanning.

Possible Scenarios

Optimistic Outcome:

- Balanced Regulation: A revised proposal emerges that effectively addresses security concerns while upholding strong encryption and privacy rights, possibly through innovative technologies or targeted measures with robust oversight.

Pessimistic Outcome:

- Adoption of Strict Measures: Chat Control is implemented as initially proposed, leading to weakened encryption, reduced privacy, and potential withdrawal of services like Signal from the EU market.

Middle Ground:

- Incremental Implementation: Partial measures are adopted, focusing on voluntary cooperation with service providers and emphasizing transparency and user consent, with ongoing evaluations to assess effectiveness and impact.

How to Stay Informed and Protect Your Privacy

- Follow Reputable Sources: Keep up with news from reliable outlets, official EU communications, and statements from privacy organizations to stay informed about developments.

- Engage in the Dialogue: Participate in public consultations, sign petitions, or contact representatives to express your views on Chat Control and digital privacy.

- Utilize Secure Practices: Regardless of legislative outcomes, adopting good digital hygiene, such as using strong passwords and being cautious with personal information, can enhance your online security.

The Global Perspective

- International Implications: The EU's decision may influence global policies on encryption and surveillance, setting precedents that other countries might follow or react against.

- Collaboration Opportunities: International cooperation on developing solutions that protect both security and privacy could emerge, fostering a more unified approach to addressing online threats.

Looking Ahead

The future of Chat Control is a critical issue that underscores the challenges of governing in the digital age. Balancing the need for security with the protection of fundamental rights is a complex task that requires careful consideration, open dialogue, and collaboration among all stakeholders.

As the situation evolves, staying informed and engaged is essential. The decisions made in the coming months will shape the digital landscape for years to come, affecting how we communicate, conduct business, and exercise our rights in an increasingly connected world.

Conclusion

The debate over Chat Control highlights a fundamental challenge in our increasingly digital world: how to protect society from genuine threats without eroding the very rights and freedoms that define it. While the intention to safeguard children and prevent crime is undeniably important, the means of achieving this through intrusive surveillance measures raise critical concerns.

Privacy is not just a personal preference but a cornerstone of democratic societies. End-to-end encryption has become an essential tool for ensuring that our personal conversations, professional communications, and sensitive data remain secure from unwanted intrusion. Weakening these protections could expose individuals and organizations to risks that far outweigh the proposed benefits.

The potential consequences of implementing Chat Control are far-reaching:

- Erosion of Trust: Users may lose confidence in digital platforms, impacting how we communicate and conduct business online.

- Security Vulnerabilities: Introducing backdoors or weakening encryption can make systems more susceptible to cyberattacks.

- Stifling Innovation: Regulatory burdens may hinder technological advancement and competitiveness in the tech industry.

- Global Implications: The EU's decisions could set precedents that influence digital policies worldwide, for better or worse.

As citizens, it's crucial to stay informed about these developments. Engage in conversations, reach out to your representatives, and advocate for solutions that respect both security needs and fundamental rights. Technology and policy can evolve together to address challenges without compromising core values.

The future of Chat Control is not yet decided, and public input can make a significant difference. By promoting open dialogue, supporting privacy-preserving innovations, and emphasizing the importance of human rights in legislation, we can work towards a digital landscape that is both safe and free.

In a world where digital communication is integral to daily life, striking the right balance between security and privacy is more important than ever. The choices made today will shape the digital environment for generations to come, determining not just how we communicate, but how we live and interact in an interconnected world.

Thank you for reading this article. We hope it has provided you with a clear understanding of Chat Control and its potential impact on your privacy and digital rights. Stay informed, stay engaged, and let's work together towards a secure and open digital future.

Read more:

- https://www.patrick-breyer.de/en/posts/chat-control/

- https://www.patrick-breyer.de/en/new-eu-push-for-chat-control-will-messenger-services-be-blocked-in-europe/

- https://edri.org/our-work/dutch-decision-puts-brakes-on-chat-control/

- https://signal.org/blog/pdfs/ndss-keynote.pdf

- https://tuta.com/blog/germany-stop-chat-control

- https://cointelegraph.com/news/signal-president-slams-revised-eu-encryption-proposal

- https://mullvad.net/en/why-privacy-matters

-

@ c3f12a9a:06c21301

2024-10-23 17:59:45

Destination: Medieval Venice, 1100 AD

How did Venice build its trade empire through collaboration, innovation, and trust in decentralized networks?

Venice: A City of Merchants and Innovators

In medieval times, Venice was a symbol of freedom and prosperity. Unlike the monarchies that dominated Europe, Venice was a republic: a city-state where important decisions were made by the Council of Nobles and the Doge (the elected chief magistrate of Venice, who served as a ceremonial head of state with limited power), not by an absolute ruler. Venice became synonymous with innovative approaches to governance, banking, and trade networks, which led to its rise as one of the most powerful trading centers of its time.

Decentralized Trade Networks

The success of Venice lay in its trust in decentralized trade networks. Merchants, many of them patricians (members of the noble class), could freely develop their trade activities and connect with others. While elsewhere trade was often controlled by kings and local lords, Venetians believed that prosperity would come from a free market and collaboration between people.

Unlike feudal models based on hierarchy and absolute power, Venetian trade networks were open and based on mutual trust. Every merchant could own ships and trade with the Middle East, and this decentralized ownership of trade routes led to unprecedented prosperity.

Story: The Secret Venetian Alliance

A young merchant named Marco, who inherited a shipyard from his father, was trying to make a name for himself in the bustling Venetian spice market. In Venice, there were many competitors, and competition was fierce. Marco, however, learned that an opportunity lay outside the traditional trade networks – among small merchants who were trying to maintain their independence from the larger Venetian patricians.

Marco decided to form an alliance with several other small merchants. Together, they began to share ships, crew costs, and information about trade routes. By creating this informal network, they were able to compete with the larger patricians who controlled the major trade routes. Through collaboration and shared resources, they began to achieve profits they would never have achieved alone.

In the end, Marco and his fellow merchants succeeded, not because they had the most wealth or influence, but because they trusted each other and worked together. They proved that small players could thrive, even in a market dominated by powerful patricians.

Satoshi ends his journey here, enlightened by the lesson that even in a world where big players exist, trust and collaboration can ensure that the market remains free and open for everyone.

Venice and Trust in Decentralized Systems

Venice was a symbol of how decentralization could lead to prosperity. There was no need for kings or powerful rulers, but instead, trust and collaboration among merchants led to the creation of a wealthy city-state.

Venice demonstrated that when people collaborate and share resources, they can achieve greater success than in a hierarchical system with a single central ruler.

A Lesson from Venice for Today's World

Today, as we think about the world of Bitcoin and decentralized finance (DeFi), Venice reminds us that trust among individuals and collaboration are key to maintaining independence and freedom. Just as in Venice, where smaller merchants found strength in collaboration, we can also find ways to keep the crypto world decentralized, open, and fair.

Key

| Term | Explanation |
|------|-------------|
| Doge | The elected chief magistrate of Venice who served as a ceremonial head of state with limited power. |
| Patrician | A member of the noble class in Venice, typically involved in trade and governance. |
| Decentralized Finance (DeFi) | A financial system that does not rely on central financial intermediaries, instead using blockchain technology and smart contracts. |

originally posted at https://stacker.news/items/737232

-

@ 42342239:1d80db24

2024-08-30 06:26:21

Quis custodiet ipsos custodes?

-- Juvenal (Who will watch the watchmen?)

In mid-July, numerous media outlets reported on the assassination attempt on Donald Trump. FBI Director Christopher Wray stated later that same month that what hit former President Trump was a bullet. A few days later, various sources reported that search engines no longer acknowledged that an assassination attempt on ex-President Trump had taken place. When users used autocomplete in Google and Bing (91% and 4% market share, respectively), these search engines only suggested earlier presidents such as Harry Truman and Theodore Roosevelt, along with Russian President Vladimir Putin, as people who could have been subjected to assassination attempts.

The reports were comprehensive enough for the Republican district attorney of Missouri to say that he would investigate the matter. A senator from Kansas, also a Republican, planned to make an official request to Google. Google responded through a spokesman to the New York Post that the company had not "manually changed" search results, but that its system includes "protection" against search results "connected to political violence."

A similar phenomenon occurred during the 2016 presidential election. At the time, reports emerged of Google, unlike other less widely used search engines, rarely or never suggesting negative search results for Hillary Clinton. The company did, however, provide negative search results for then-candidate Trump. Then, as today, the company denied deliberately favouring any specific political candidate.

These occurrences led to research on how such search suggestions can influence public opinion and voting preferences. For example, the impact of simply removing negative search suggestions has been investigated. A study published in June 2024 reports that such search results can dramatically affect undecided voters. Reducing negative search suggestions can turn a 50/50 split into a 90/10 split in favour of the candidate for whom negative search suggestions were suppressed. The researchers concluded that search suggestions can have "a dramatic impact," that this can "shift a large number of votes" and do so without leaving "any trace for authorities to follow." How search engines operate should therefore be considered of great importance by anyone who claims to take democracy seriously. And this regardless of one's political sympathies.

A well-known thought experiment in philosophy asks: "If a tree falls in the forest and no one hears it, does it make a sound?" Translated to today's media landscape: If an assassination attempt took place on a former president, but search engines don't want to acknowledge it, did it really happen?

-

@ fa0165a0:03397073

2024-10-23 17:19:41

Chef's notes

This recipe makes 48 buns. Total time is at least 90 minutes, but 60 minutes of that is letting the dough rest between steps.

The baking is a simple three-step process:

1. Making the wheat dough
2. Making and applying the filling
3. Garnishing and baking in the oven

When done: Enjoy during Fika!

PS:

- Can be frozen and thawed in the microwave for later enjoyment as well.

- If you need unit conversion, this site may be of help: https://www.unitconverters.net/

- Traditionally we use something we call "pearl sugar", which is optimal, but normal sugar or sprinkles are okay too. Pearl sugar (pärlsocker) looks like this: https://search.brave.com/images?q=p%C3%A4rlsocker

Ingredients

- 150 g butter

- 5 dl milk

- 50 g baking yeast (normal or for sweet dough)

- 1/2 teaspoon salt

- 1-1 1/2 dl sugar

- (Optional) 2 teaspoons of crushed or ground cardamom seeds

- 1.4 liters of wheat flour

- Filling: 50-75 g butter, room temperature

- Filling: 1/2 - 1 dl sugar

- Filling: 1 teaspoon crushed or ground cardamom and 1 teaspoon ground cinnamon (or 2 teaspoons of cinnamon)

- Garnish: 1 egg, sugar or almond shavings

Directions

- Melt the butter/margarine in a saucepan.

- Pour in the milk and allow the mixture to warm to body temperature (approx. 37 °C).

- Dissolve the yeast in a dough bowl with the help of the salt.

- Add the 37 °C milk/butter mixture, the sugar, the optional cardamom if you choose to use it (I like this option!) and just over 2/3 of the flour.

- Work the dough until shiny and smooth, about 4 minutes with a machine or 8 minutes by hand.

- Add additional flour if necessary, but save at least 1 dl for baking.

- Let the dough rise covered (by a kitchen towel), about 30 minutes.

- Work the dough in the bowl and then turn it out onto a floured workbench. Knead the dough until smooth. Divide the dough into 2 parts. Roll out each piece into a rectangle.

- Stir together the ingredients for the filling and spread it.

- Roll up and cut each roll into 24 pieces.

- Place them in paper molds or directly on baking paper with the cut surface facing up. Let them rise covered with a baking cloth (or kitchen towel), about 30 minutes.

- Brush the buns with beaten egg and sprinkle your chosen topping.

- Bake in the middle of the oven at 250 °C, 5-8 minutes.

- Allow to cool on a wire rack under a baking cloth (or kitchen towel).

-

@ 3bf0c63f:aefa459d

2024-03-23 08:57:08

@ 3bf0c63f:aefa459d

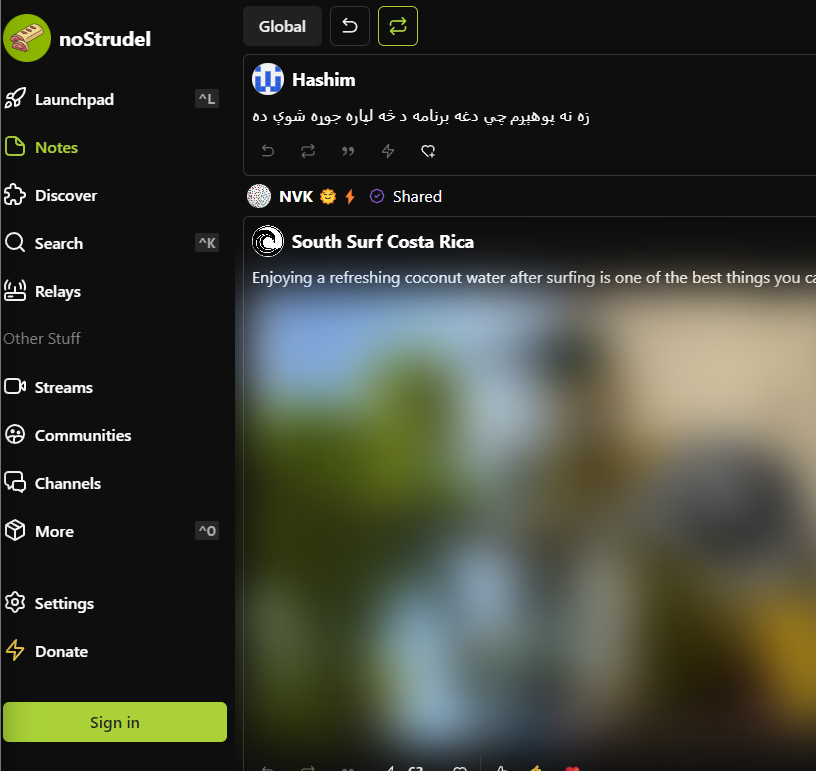

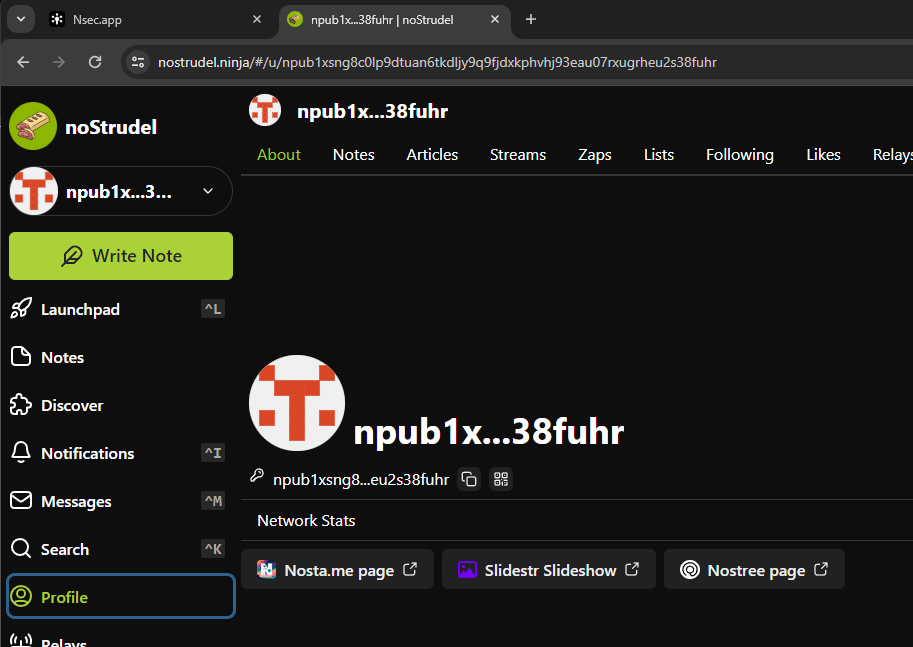

2024-03-23 08:57:08Nostr is not decentralized nor censorship-resistant

Peter Todd has been saying this for a long time and all the time I've been thinking he is misunderstanding everything, but I guess a more charitable interpretation is that he is right.

Nostr today is indeed centralized.

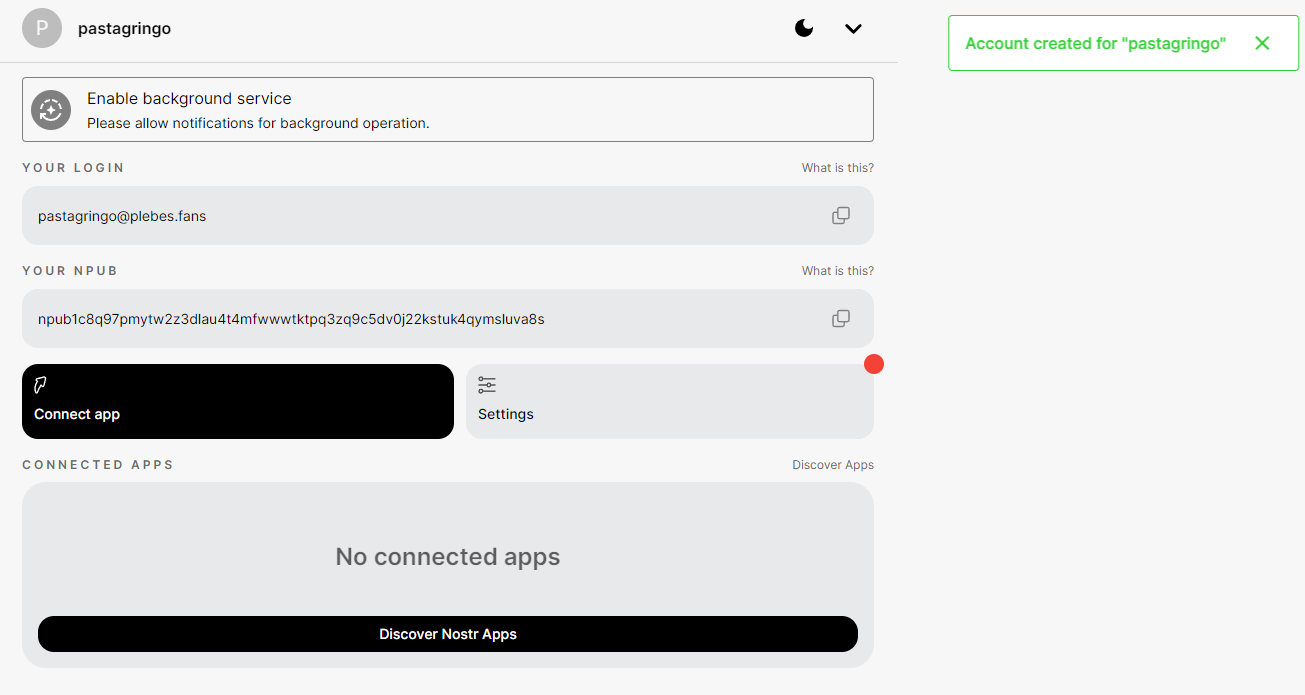

Yesterday I published two harmless notes with the exact same content at the same time. In two minutes the notes had a noticeable difference in responses:

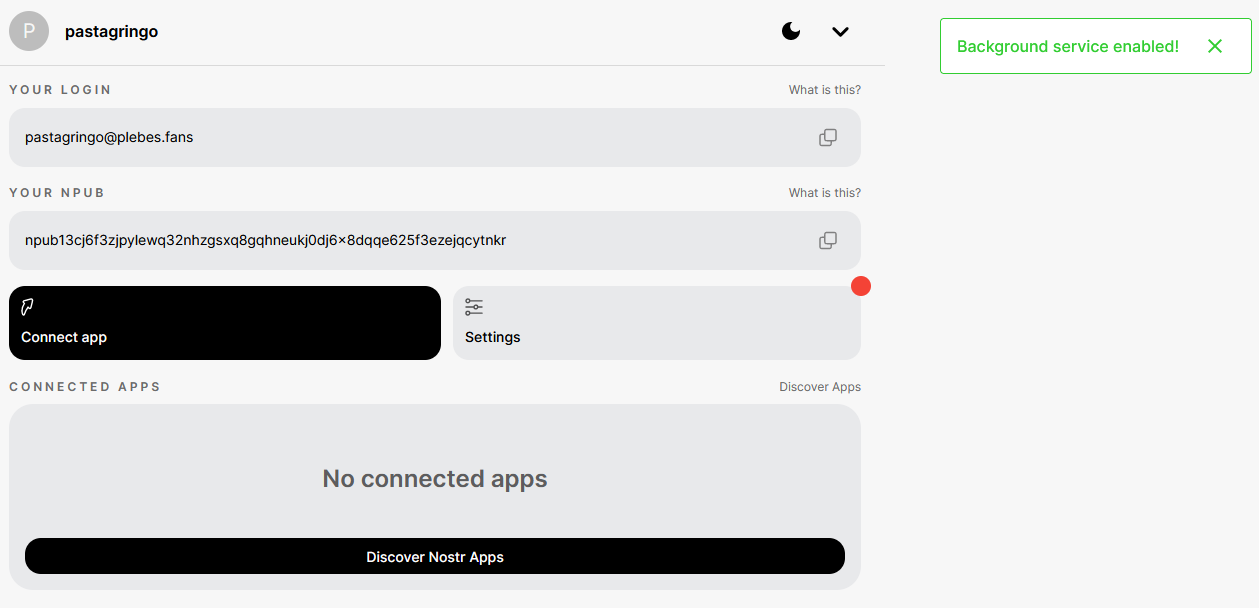

The top one was published to wss://nostr.wine, wss://nos.lol and wss://pyramid.fiatjaf.com. The second was published to the relay where I generally publish all my notes, wss://pyramid.fiatjaf.com, which is announced in my NIP-05 file and in my NIP-65 relay list.

A few minutes later I published that screenshot again in two identical notes to the same sets of relays, asking if people understood the implications. The difference in quantity of responses can still be seen today:

These results are skewed now by the fact that the two notes got rebroadcasted to multiple relays after some time, but the fundamental point remains.

What happened was that far more people saw the first note than the second, and if Nostr were really censorship-resistant that shouldn't have happened at all.

Some people implied in the comments, with an air of obviousness, that publishing the note to "more relays" should have predictably resulted in more replies, which, again, shouldn't be the case if Nostr is really censorship-resistant.

What happens is that most people who engaged with the note are following me, in the sense that they have instructed their clients to fetch my notes on their behalf and present them in the UI, and clients are failing to do that despite me making it clear in multiple ways that my notes are to be found on wss://pyramid.fiatjaf.com.

If we were talking not about me, but about some public figure who was being censored by the State and got banned (or shadowbanned) by the 3 biggest public relays, the sad reality would be that the person would immediately have their reach reduced to ~10% of what they had before. This is not at all unlike what happened to dozens of personalities that were banned from the corporate social media platforms and then moved to other platforms -- how many of their original followers switched to these other platforms? Probably some small percentage close to 10%. In that sense Nostr today is similar to what we had before.

Peter Todd is right that if the way Nostr works is that you just subscribe to a small set of relays and expect to get everything from them then it tends to get very centralized very fast, and this is the reality today.

Peter Todd is wrong that Nostr is inherently centralized or that it needs a protocol change to become what it has always purported to be. He is in fact wrong today, because what is written above is not valid for all clients of today, and if we drive in the right direction we can successfully make Peter Todd be more and more wrong as time passes, instead of the contrary.
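What "fetching my notes from where I said they are" could look like in a client can be sketched roughly as below. This is a minimal sketch, not any existing client's code: it assumes the NIP-65 convention that a kind 10002 relay list carries "r" tags with an optional "read" or "write" marker (no marker meaning both), and the function names `write_relays` and `relays_to_query` are made up for illustration.

```python
from typing import Iterable


def write_relays(relay_list_tags: Iterable[list[str]]) -> list[str]:
    """Return the relays an author publishes to, given the tags of
    their NIP-65 relay list event (assumed layout: ["r", url, marker?])."""
    relays = []
    for tag in relay_list_tags:
        if len(tag) < 2 or tag[0] != "r":
            continue  # not a relay entry
        marker = tag[2] if len(tag) > 2 else None
        # No marker is taken to mean the relay is used for both reading and writing.
        if marker in (None, "write"):
            relays.append(tag[1])
    return relays


def relays_to_query(follows: dict[str, list[list[str]]]) -> dict[str, list[str]]:
    """Build a plan: relay URL -> pubkeys whose notes should be requested there.

    `follows` maps each followed pubkey to the tags of its relay list.
    """
    plan: dict[str, list[str]] = {}
    for pubkey, tags in follows.items():
        for url in write_relays(tags):
            plan.setdefault(url, []).append(pubkey)
    return plan
```

A client built this way opens connections according to where each followed author writes, rather than asking a fixed handful of big relays for everything, so a ban on those big relays does not by itself cut the author off from followers.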

See also:

-

@ 42342239:1d80db24

2024-07-28 08:35:26

Jerome Powell, Chairman of the US Federal Reserve, stated during a hearing in March that the central bank has no plans to introduce a central bank digital currency (CBDC), nor does it consider one necessary at present. He said this even though the material Fed staff presents to Congress suggests otherwise: there, CBDCs are described as one of the Fed’s key duties.

A CBDC is a state-controlled and programmable currency that could allow the government or its intermediaries the possibility to monitor all transactions in detail and also to block payments based on certain conditions.

Critics argue that the introduction of CBDCs could undermine citizens’ constitutionally guaranteed freedoms and rights. Republican House Majority Whip Tom Emmer, the sponsor of a bill aimed at preventing the central bank from unilaterally introducing a CBDC, believes that if they do not mimic cash, they would only serve as a “CCP-style [Chinese Communist Party] surveillance tool” and could “undermine the American way of life”. Emmer’s proposed bill has garnered support from several US senators, including Republican Ted Cruz from Texas, who introduced the bill to the Senate. Just as Swedish cash advocates risk missing the mark, Tom Emmer and the US senators risk doing the same with their bill. If the central bank is prevented from introducing a central bank digital currency, nothing would stop major banks from implementing similar systems themselves, with similar consequences for citizens.

Indeed, the entity controlling your money becomes less significant once it is no longer you. Even if central bank digital currencies are halted in the US, a future administration could easily outsource financial censorship to the private banking system, similar to how the Biden administration is perceived by many to have circumvented the First Amendment by getting private companies to enforce censorship. A federal court in New Orleans ruled last fall against the Biden administration for compelling social media platforms to censor content. The Supreme Court has now begun hearing the case.

Deng Xiaoping, China’s paramount leader who played a vital role in China’s modernization, once said, “It does not matter if the cat is black or white. What matters is that it catches mice.” This statement reflected a pragmatic approach to economic policy, focusing on results foremost. China’s economic growth during his tenure was historic.

The discussion surrounding CBDCs and their negative impact on citizens’ freedoms and rights would benefit from a more practical and comprehensive perspective. Ultimately, it is the outcomes that matter above all. So too for our freedoms.

-

@ 57d1a264:69f1fee1

2024-10-23 16:11:26

Welcome back to our weekly JABBB, Just Another Bitcoin Bubble Boom, a comics and meme contest crafted for you, creative stackers!

If you'd like to learn more, check our welcome post here.

This week's sticker: Bitcoin Students Network

You can download the source file directly from the HereComesBitcoin website in SVG and PNG.

The task

Starting from this sticker, design a comic frame or a meme, and add a message that perfectly captures the sentiment of the current most hilarious take on the Bitcoin space. You can contextualize it or not, it's up to you: you choose the message, the context and anything else that will help you submit your comic art masterpiece.

Are you a meme creator? There's space for you too: select the most similar shot from the GIFs hosted in the Gif Station section and craft your best meme... Let's Jabbb!

If you enjoy designing and memeing, feel free to check our JABBB archive and create more to spread Bitcoin awareness to the moon.

Submit each proposal in the relevant thread. Bounties will be distributed next week, together with the release of the next JABBB contest.

₿ creative and have fun!

originally posted at https://stacker.news/items/736536

-

@ ee11a5df:b76c4e49

2024-07-11 23:57:53

What Can We Get by Breaking NOSTR?

"What if we just started over? What if we took everything we have learned while building nostr and did it all again, but did it right this time?"

That is a question I've heard quite a number of times, and it is a question I have pondered quite a lot myself.

My conclusion (so far) is that we can fix all the important things without starting over. There are different levels of breakage, and starting over is the most extreme of them. In this post I will describe these levels of breakage and what each one could buy us.

Cryptography

Your key-pair is the most fundamental part of nostr. That is your portable identity.

If the cryptography changed from secp256k1 to ed25519, all current nostr identities would not be usable.

This would be a complete start over.

Every other break listed in this post could be done as well to no additional detriment (save for reuse of some existing code) because we would be starting over.

Why would anyone suggest making such a break? What does this buy us?

- Curve25519 is a safe curve, meaning a bunch of specific cryptography things that we mortals do not understand, but we are assured that it is somehow better.

- Ed25519 is more modern, said to be faster, and has more widespread code/library support than secp256k1.

- Nostr keys could be used as TLS server certificates. TLS 1.3 using RFC 7250 Raw Public Keys allows raw public keys as certificates. No DNS or certification authorities required, removing several points of failure. These ed25519 keys could be used in TLS, whereas secp256k1 keys cannot as no TLS algorithm utilizes them AFAIK. Since relays currently don't have assigned nostr identities but are instead referenced by a websocket URL, this doesn't buy us much, but it is interesting. This idea is explored further below (keep reading) under a lesser level of breakage.

Besides breaking everything, another downside is that people would not be able to manage nostr keys with bitcoin hardware.

I am fairly strongly against breaking things this far. I don't think it is worth it.

Signature Scheme and Event Structure

Event structure is the next most fundamental part of nostr. Although events can be represented in many ways (clients and relays usually parse the JSON into data structures and/or database columns), the nature of the content of an event is well defined as seven particular fields. If we changed those, that would be a hard fork.

This break is quite severe. All current nostr events wouldn't work in this hard fork. We would be preserving identities, but all content would be starting over.

It would be difficult to bridge between this fork and current nostr because the bridge couldn't create the different signature required (not having anybody's private key) and current nostr wouldn't be generating the new kind of signature. Therefore any bridge would have to do identity mapping just like bridges to entirely different protocols do (e.g. mostr to mastodon).

What could we gain by breaking things this far?

- We could have a faster event hash and id verification: the current signature scheme of nostr requires lining up 5 JSON fields into a JSON array and using that as hash input. There is a performance cost to copying this data in order to hash it.

- We could introduce a subkey field, and sign events via that subkey, while preserving the pubkey as the author everybody knows and searches by. Note however that we can already get a remarkably similar thing using something like NIP-26 where the actual author is in a tag, and the pubkey field is the signing subkey.

- We could refactor the kind integer into composable bitflags (that could apply to any application) and an application kind (that specifies the application).

- Surely there are other things I haven't thought of.
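To make the hashing cost in the first point concrete, here is a rough sketch of how an event id is computed today. It assumes the NIP-01 serialization, a JSON array of the form [0, pubkey, created_at, kind, tags, content] with no extra whitespace, hashed with SHA-256. The function name `event_id` is illustrative only, and Python's `json.dumps` may escape a few rare control characters differently from what NIP-01 prescribes, so treat this as an approximation rather than a reference implementation.

```python
import hashlib
import json


def event_id(pubkey: str, created_at: int, kind: int, tags: list, content: str) -> str:
    """Approximate the current nostr event id: sha256 over a freshly built
    JSON array containing five of the event's fields (plus a leading 0)."""
    # This is the copy the text refers to: the fields are re-serialized
    # into a brand new string just so that it can be hashed.
    serialized = json.dumps(
        [0, pubkey, created_at, kind, tags, content],
        separators=(",", ":"),  # no whitespace between tokens
        ensure_ascii=False,     # keep non-ASCII characters as UTF-8
    )
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()
```

Every verifier has to rebuild that serialized string before it can check the id, which is the overhead a different event structure could avoid.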

I am currently against this kind of break. I don't think the benefits even come close to outweighing the cost. But if I learned about other things that we could "fix" by restructuring the events, I could possibly change my mind.

Replacing Relay URLs

Nostr is defined by relays that are addressed by websocket URLs. If that changed, that would be a significant break. Many (maybe even most) current event kinds would need superseding.

The most reasonable change is to define relays with nostr identities, specifying their pubkey instead of their URL.

What could we gain by this?

- We could ditch reliance on DNS. Relays could publish events under their nostr identity that advertise their current IP address(es).

- We could ditch certificates because relays could generate ed25519 keypairs for themselves (or indeed just self-signed certificates which might be much more broadly supported) and publish their public ed25519 key in the same replaceable event where they advertise their current IP address(es).

This is a gigantic break. Almost all event kinds need redefining and pretty much all nostr software will need fairly major upgrades. But it also gives us a kind of Internet liberty that many of us have dreamt of our entire lives.

I am ambivalent about this idea.

Protocol Messaging and Transport

The protocol messages of nostr are the next level of breakage. We could preserve keypair identities, all current events, and current relay URL references, but just break the protocol of how clients and relays communicate this data.

This would not necessarily break relay and client implementations at all, so long as the new protocol were opt-in.

What could we get?

- The new protocol could transmit events in binary form for increased performance (no more JSON parsing with its typical many small memory allocations and string escaping nightmares). I think event throughput could double (wild guess).

- It could have clear expectations of who talks first, and when and how AUTH happens, avoiding a lot of current miscommunication between clients and relays.

- We could introduce bitflags for feature support so that new features could be added later and clients would not bother trying them (and getting an error or timing out) on relays that didn't signal support. This could replace much of NIP-11.

- We could then introduce something like negentropy or negative filters (but not that... probably something else solving that same problem) without it being a breaking change.

- The new protocol could just be a few websocket-binary messages enhancing the current protocol, continuing to leverage the existing websocket-text messages we currently have, meaning newer relays would still support all the older stuff.
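The feature-bitflag idea from the list above could look something like the sketch below. Everything in it is hypothetical: the flag names, their bit positions and the `supports` helper are invented for illustration and are not taken from any draft or NIP.

```python
from enum import IntFlag


class Feature(IntFlag):
    """Hypothetical feature bits a relay might advertise on connect."""
    BINARY_EVENTS = 1 << 0       # events sent as binary websocket messages
    AUTH_FIRST = 1 << 1          # relay states its AUTH expectations up front
    SET_RECONCILIATION = 1 << 2  # some negentropy-like sync mechanism


def supports(advertised: int, wanted: Feature) -> bool:
    """True if every bit in `wanted` is set in the relay's advertised flags.

    Unknown bits in `advertised` are ignored, so features added later
    do not confuse older clients.
    """
    return (advertised & int(wanted)) == int(wanted)


# Example: a relay that advertises two known features plus one future bit.
relay_flags = int(Feature.BINARY_EVENTS | Feature.AUTH_FIRST) | (1 << 10)
```

With this shape a client checks `supports(relay_flags, Feature.SET_RECONCILIATION)` before attempting a feature, instead of trying it and waiting for an error or a timeout.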

The downsides are just that if you want this new stuff you have to build it. It makes the protocol less simple, having now multiple protocols, multiple ways of doing the same thing.

Nonetheless, this I am in favor of. I think the trade-offs are worth it. I will be pushing a draft PR for this soon.

The path forward

I propose then the following path forward:

- A new nostr protocol over websockets binary (draft PR to be shared soon)