-

@ 42342239:1d80db24

2024-07-28 08:35:26

@ 42342239:1d80db24

2024-07-28 08:35:26Jerome Powell, Chairman of the US Federal Reserve, stated during a hearing in March that the central bank has no plans to introduce a central bank digital currency (CBDCs) or consider it necessary at present. He said this even though the material Fed staff presents to Congress suggests otherwise - that CBDCs are described as one of the Fed’s key duties .

A CBDC is a state-controlled and programmable currency that could allow the government or its intermediaries the possibility to monitor all transactions in detail and also to block payments based on certain conditions.

Critics argue that the introduction of CBDCs could undermine citizens’ constitutionally guaranteed freedoms and rights . Republican House Majority Leader Tom Emmer, the sponsor of a bill aimed at preventing the central bank from unilaterally introducing a CBDC, believes that if they do not mimic cash, they would only serve as a “CCP-style [Chinese Communist Party] surveillance tool” and could “undermine the American way of life”. Emmer’s proposed bill has garnered support from several US senators , including Republican Ted Cruz from Texas, who introduced the bill to the Senate. Similarly to how Swedish cash advocates risk missing the mark , Tom Emmer and the US senators risk the same outcome with their bill. If the central bank is prevented from introducing a central bank digital currency, nothing would stop major banks from implementing similar systems themselves, with similar consequences for citizens.

Indeed, the entity controlling your money becomes less significant once it is no longer you. Even if central bank digital currencies are halted in the US, a future administration could easily outsource financial censorship to the private banking system, similar to how the Biden administration is perceived by many to have circumvented the First Amendment by getting private companies to enforce censorship. A federal court in New Orleans ruled last fall against the Biden administration for compelling social media platforms to censor content. The Supreme Court has now begun hearing the case.

Deng Xiaoping, China’s paramount leader who played a vital role in China’s modernization, once said, “It does not matter if the cat is black or white. What matters is that it catches mice.” This statement reflected a pragmatic approach to economic policy, focusing on results foremost. China’s economic growth during his tenure was historic.

The discussion surrounding CBDCs and their negative impact on citizens’ freedoms and rights would benefit from a more practical and comprehensive perspective. Ultimately, it is the outcomes that matter above all. So too for our freedoms.

-

@ ee11a5df:b76c4e49

2024-07-11 23:57:53

@ ee11a5df:b76c4e49

2024-07-11 23:57:53What Can We Get by Breaking NOSTR?

"What if we just started over? What if we took everything we have learned while building nostr and did it all again, but did it right this time?"

That is a question I've heard quite a number of times, and it is a question I have pondered quite a lot myself.

My conclusion (so far) is that I believe that we can fix all the important things without starting over. There are different levels of breakage, starting over is the most extreme of them. In this post I will describe these levels of breakage and what each one could buy us.

Cryptography

Your key-pair is the most fundamental part of nostr. That is your portable identity.

If the cryptography changed from secp256k1 to ed25519, all current nostr identities would not be usable.

This would be a complete start over.

Every other break listed in this post could be done as well to no additional detriment (save for reuse of some existing code) because we would be starting over.

Why would anyone suggest making such a break? What does this buy us?

- Curve25519 is a safe curve meaning a bunch of specific cryptography things that us mortals do not understand but we are assured that it is somehow better.

- Ed25519 is more modern, said to be faster, and has more widespread code/library support than secp256k1.

- Nostr keys could be used as TLS server certificates. TLS 1.3 using RFC 7250 Raw Public Keys allows raw public keys as certificates. No DNS or certification authorities required, removing several points of failure. These ed25519 keys could be used in TLS, whereas secp256k1 keys cannot as no TLS algorithm utilizes them AFAIK. Since relays currently don't have assigned nostr identities but are instead referenced by a websocket URL, this doesn't buy us much, but it is interesting. This idea is explored further below (keep reading) under a lesser level of breakage.

Besides breaking everything, another downside is that people would not be able to manage nostr keys with bitcoin hardware.

I am fairly strongly against breaking things this far. I don't think it is worth it.

Signature Scheme and Event Structure

Event structure is the next most fundamental part of nostr. Although events can be represented in many ways (clients and relays usually parse the JSON into data structures and/or database columns), the nature of the content of an event is well defined as seven particular fields. If we changed those, that would be a hard fork.

This break is quite severe. All current nostr events wouldn't work in this hard fork. We would be preserving identities, but all content would be starting over.

It would be difficult to bridge between this fork and current nostr because the bridge couldn't create the different signature required (not having anybody's private key) and current nostr wouldn't be generating the new kind of signature. Therefore any bridge would have to do identity mapping just like bridges to entirely different protocols do (e.g. mostr to mastodon).

What could we gain by breaking things this far?

- We could have a faster event hash and id verification: the current signature scheme of nostr requires lining up 5 JSON fields into a JSON array and using that as hash input. There is a performance cost to copying this data in order to hash it.

- We could introduce a subkey field, and sign events via that subkey, while preserving the pubkey as the author everybody knows and searches by. Note however that we can already get a remarkably similar thing using something like NIP-26 where the actual author is in a tag, and the pubkey field is the signing subkey.

- We could refactor the kind integer into composable bitflags (that could apply to any application) and an application kind (that specifies the application).

- Surely there are other things I haven't thought of.

I am currently against this kind of break. I don't think the benefits even come close to outweighing the cost. But if I learned about other things that we could "fix" by restructuring the events, I could possibly change my mind.

Replacing Relay URLs

Nostr is defined by relays that are addressed by websocket URLs. If that changed, that would be a significant break. Many (maybe even most) current event kinds would need superseding.

The most reasonable change is to define relays with nostr identities, specifying their pubkey instead of their URL.

What could we gain by this?

- We could ditch reliance on DNS. Relays could publish events under their nostr identity that advertise their current IP address(es).

- We could ditch certificates because relays could generate ed25519 keypairs for themselves (or indeed just self-signed certificates which might be much more broadly supported) and publish their public ed25519 key in the same replaceable event where they advertise their current IP address(es).

This is a gigantic break. Almost all event kinds need redefining and pretty much all nostr software will need fairly major upgrades. But it also gives us a kind of Internet liberty that many of us have dreamt of our entire lives.

I am ambivalent about this idea.

Protocol Messaging and Transport

The protocol messages of nostr are the next level of breakage. We could preserve keypair identities, all current events, and current relay URL references, but just break the protocol of how clients and relay communicate this data.

This would not necessarily break relay and client implementations at all, so long as the new protocol were opt-in.

What could we get?

- The new protocol could transmit events in binary form for increased performance (no more JSON parsing with it's typical many small memory allocations and string escaping nightmares). I think event throughput could double (wild guess).

- It could have clear expectations of who talks first, and when and how AUTH happens, avoiding a lot of current miscommunication between clients and relays.

- We could introduce bitflags for feature support so that new features could be added later and clients would not bother trying them (and getting an error or timing out) on relays that didn't signal support. This could replace much of NIP-11.

- We could then introduce something like negentropy or negative filters (but not that... probably something else solving that same problem) without it being a breaking change.

- The new protocol could just be a few websocket-binary messages enhancing the current protocol, continuing to leverage the existing websocket-text messages we currently have, meaning newer relays would still support all the older stuff.

The downsides are just that if you want this new stuff you have to build it. It makes the protocol less simple, having now multiple protocols, multiple ways of doing the same thing.

Nonetheless, this I am in favor of. I think the trade-offs are worth it. I will be pushing a draft PR for this soon.

The path forward

I propose then the following path forward:

- A new nostr protocol over websockets binary (draft PR to be shared soon)

- Subkeys brought into nostr via NIP-26 (but let's use a single letter tag instead, OK?) via a big push to get all the clients to support it (the transition will be painful - most major clients will need to support this before anybody can start using it).

- Some kind of solution to the negative-filter-negentropy need added to the new protocol as its first optional feature.

- We seriously consider replacing Relay URLs with nostr pubkeys assigned to the relay, and then have relays publish their IP address and TLS key or certificate.

We sacrifice these:

- Faster event hash/verification

- Composable event bitflags

- Safer faster more well-supported crypto curve

- Nostr keys themselves as TLS 1.3 RawPublicKey certificates

-

@ f977c464:32fcbe00

2024-01-30 20:06:18

@ f977c464:32fcbe00

2024-01-30 20:06:18Güneşin kaybolmasının üçüncü günü, saat öğlen on ikiyi yirmi geçiyordu. Trenin kalkmasına yaklaşık iki saat vardı. Hepimiz perondaydık. Valizlerimiz, kolilerimiz, renk renk ve biçimsiz çantalarımızla yan yana dizilmiş, kısa aralıklarla tepemizdeki devasa saati kontrol ediyorduk.

Ama ne kadar dik bakarsak bakalım zaman bir türlü istediğimiz hızla ilerlemiyordu. Herkes birkaç dakika sürmesi gereken alelade bir doğa olayına sıkışıp kalmış, karanlıktan sürünerek çıkmayı deniyordu.

Bekleme salonuna doğru döndüm. Nefesimden çıkan buharın arkasında, kalın taş duvarları ve camlarıyla morg kadar güvenli ve soğuk duruyordu. Cesetleri o yüzden bunun gibi yerlere taşımaya başlamışlardı. Demek insanların bütün iyiliği başkaları onları gördüğü içindi ki gündüzleri gecelerden daha karanlık olduğunda hemen birbirlerinin gırtlağına çökmüş, böğürlerinde delikler açmış, gözlerini oyup kafataslarını parçalamışlardı.

İstasyonun ışığı titrediğinde karanlığın enseme saplandığını hissettim. Eğer şimdi, böyle kalabalık bir yerde elektrik kesilse başımıza ne gelirdi?

İçerideki askerlerden biri bakışlarımı yakalayınca yeniden saate odaklanmış gibi yaptım. Sadece birkaç dakika geçmişti.

“Tarlalarım gitti. Böyle boyum kadar ayçiçeği doluydu. Ah, hepsi ölüp gidiyor. Afitap’ın çiçekleri de gi-”

“Dayı, Allah’ını seversen sus. Hepimizi yakacaksın şimdi.”

Karanlıkta durduğunda, görünmez olmayı istemeye başlıyordun. Kimse seni görmemeli, nefesini bile duymamalıydı. Kimsenin de ayağının altında dolaşmamalıydın; gelip kazayla sana çarpmamalılar, takılıp sendelememeliydiler. Yoksa aslında hedefi sen olmadığın bir öfke gürlemeye başlar, yaşadığın ilk şoku ve acıyı silerek üstünden geçerdi.

İlk konuşan, yaşlıca bir adam, kafasında kasketi, nasırlı ellerine hohluyordu. Gözleri ve burnu kızarmıştı. Güneşin kaybolması onun için kendi başına bir felaket değildi. Hayatına olan pratik yansımalarından korkuyordu olsa olsa. Bir anının kaybolması, bu yüzden çoktan kaybettiği birinin biraz daha eksilmesi. Hayatta kalmasını gerektiren sebepler azalırken, hayatta kalmasını sağlayacak kaynaklarını da kaybediyordu.

Onu susturan delikanlıysa atkısını bütün kafasına sarmış, sakalı ve yüzünün derinliklerine kaçmış gözleri dışında bedeninin bütün parçalarını gizlemeye çalışıyordu. İşte o, güneşin kaybolmasının tam olarak ne anlama geldiğini anlamamış olsa bile, dehşetini olduğu gibi hissedebilenlerdendi.

Güneşin onlardan alındıktan sonra kime verileceğini sormuyorlardı. En başta onlara verildiğinde de hiçbir soru sormamışlardı zaten.

İki saat ne zaman geçer?

Midemin üstünde, sağ tarafıma doğru keskin bir acı hissettim. Karaciğerim. Gözlerimi yumdum. Yanımda biri metal bir nesneyi yere bıraktı. Bir kafesti. İçerisindeki kartalın ıslak kokusu burnuma ulaşmadan önce bile biliyordum bunu.

“Yeniden mi?” diye sordu bana kartal. Kanatları kanlı. Zamanın her bir parçası tüylerinin üstüne çöreklenmişti. Gagası bir şey, tahminen et parçası geveliyor gibi hareket ediyordu. Eski anılar kolay unutulmazmış. Şu anda kafesinin kalın parmaklıklarının ardında olsa da bunun bir aldatmaca olduğunu bir tek ben biliyordum. Her an kanatlarını iki yana uzatıverebilir, hava bu hareketiyle dalgalanarak kafesi esneterek hepimizi içine alacak kadar genişleyebilir, parmaklıklar önce ayaklarımızın altına serilir gibi gözükebilir ama aslında hepimizin üstünde yükselerek tepemize çökebilirdi.

Aşağıya baktım. Tahtalarla zapt edilmiş, hiçbir yere gidemeyen ama her yere uzanan tren rayları. Atlayıp koşsam… Çantam çok ağırdı. Daha birkaç adım atamadan, kartal, suratını bedenime gömerdi.

“Bu sefer farklı,” diye yanıtladım onu. “Yeniden diyemezsin. Tekrarladığım bir şey değil bu. Hatta bir hata yapıyormuşum gibi tonlayamazsın da. Bu sefer, insanların hak etmediğini biliyorum.”

“O zaman daha vahim. Süzme salaksın demektir.”

“İnsanların hak etmemesi, insanlığın hak etmediği anlamına gelmez ki.”

Az önce göz göze geldiğim genççe ama çökük asker hâlâ bana bakıyordu. Bir kartalla konuştuğumu anlamamıştı şüphesiz. Yanımdakilerden biriyle konuştuğumu sanmış olmalıydı. Ama konuştuğum kişiye bakmıyordum ona göre. Çekingence kafamı eğmiştim. Bir kez daha göz göze geldiğimizde içerideki diğer iki askere bir şeyler söyledi, onlar dönüp beni süzerken dışarı çıktı.

Yanımızdaki, az önce konuşan iki adam da şaşkınlıkla bir bana bir kartala bakıyordu.

“Yalnız bu sefer kalbin de kırılacak, Prometheus,” dedi kartal, bana. “Belki son olur. Biliyorsun, bir sürü soruna neden oluyor bu yaptıkların.”

Beni koruyordu sözde. En çok kanıma dokunan buydu. Kasıklarımın üstüne oturmuş, kanlı suratının ardında gözleri parlarken attığı çığlık kulaklarımda titremeye devam ediyordu. Bu tabloda kimsenin kimseyi düşündüğü yoktu. Kartalın, yanımızdaki adamların, artık arkama kadar gelmiş olması gereken askerin, tren raylarının, geçmeyen saatlerin…

Arkamı döndüğümde, asker sahiden oradaydı. Zaten öyle olması gerekiyordu; görmüştüm bunu, biliyordum. Kehanetler… Bir şeyler söylüyordu ama ağzı oynarken sesi çıkmıyordu. Yavaşlamış, kendisini saatin akışına uydurmuştu. Havada donan tükürüğünden anlaşılıyordu, sinirliydi. Korktuğu için olduğunu biliyordum. Her seferinde korkmuşlardı. Beni unutmuş olmaları işlerini kolaylaştırmıyordu. Sadece yeni bir isim vermelerine neden oluyordu. Bu seferkiyle beni lanetleyecekleri kesinleşmişti.

Olması gerekenle olanların farklı olması ne kadar acınasıydı. Olması gerekenlerin doğasının kötücül olmasıysa bir yerde buna dayanıyordu.

“Salaksın,” dedi kartal bana. Zamanı aşan bir çığlık. Hepimizin önüne geçmişti ama kimseyi durduramıyordu.

Sonsuzluğa kaç tane iki saat sıkıştırabilirsiniz?

Ben bir tane bile sıkıştıramadım.

Çantama uzanıyordum. Asker de sırtındaki tüfeğini indiriyordu. Benim acelem yoktu, onunsa eli ayağı birbirine dolaşıyordu. Oysaki her şey tam olması gerektiği anda olacaktı. Kehanet başkasının parmaklarının ucundaydı.

Güneş, bir tüfeğin patlamasıyla yeryüzüne doğdu.

Rayların üzerine serilmiş göğsümün ortasından, bir çantanın içinden.

Not: Bu öykü ilk olarak 2021 yılında Esrarengiz Hikâyeler'de yayımlanmıştır.

-

@ 42342239:1d80db24

2024-07-06 15:26:39

@ 42342239:1d80db24

2024-07-06 15:26:39Claims that we need greater centralisation, more EU, or more globalisation are prevalent across the usual media channels. The climate crisis, environmental destruction, pandemics, the AI-threat, yes, everything will apparently be solved if a little more global coordination, governance and leadership can be brought about.

But, is this actually true? One of the best arguments for this conclusion stems implicitly from the futurist Eliezer Yudkowsky, who once proposed a new Moore's Law, though this time not for computer processors but instead for mad science: "every 18 months, the minimum IQ necessary to destroy the world drops by one point".

Perhaps we simply have to tolerate more centralisation, globalisation, control, surveillance, and so on, to prevent all kinds of fools from destroying the world?

Note: a Swedish version of this text is avalable at Affärsvärlden.

At the same time, more centralisation, globalisation, etc. is also what we have experienced. Power has been shifting from the local, and from the majorities, to central-planning bureaucrats working in remote places. This has been going on for several decades. The EU's subsidiarity principle, i.e. the idea that decisions should be made at the lowest expedient level, and which came to everyone's attention ahead of Sweden's EU vote in 1994, is today swept under the rug as untimely and outdated, perhaps even retarded.

At the same time, there are many crises, more than usual it would seem. If it is not a crisis of criminality, a logistics/supply chain crisis or a water crisis, then it is an energy crisis, a financial crisis, a refugee crisis or a climate crisis. It is almost as if one starts to suspect that all this centralisation may be leading us down the wrong path. Perhaps centralisation is part of the problem, rather than the capital S solution?

Why centralisation may cause rather than prevent problems

There are several reasons why centralisation, etc, may actually be a problem. And though few seem to be interested in such questions today (or perhaps they are too timid to mention their concerns?), it has not always been this way. In this short essay we'll note four reasons (though there are several others):

- Political failures (Buchanan et al)

- Local communities & skin in the game (Ostrom and Taleb)

- The local knowledge problem (von Hayek)

- Governance by sociopaths (Hare)

James Buchanan who was given the so-called Nobel price in economics in the eighties once said that: "politicians and bureaucrats are no different from the rest of us. They will maximise their incentives just like everybody else.".

Buchanan was prominent in research on rent-seeking and political failures, i.e. when political "solutions" to so-called market failures make everything worse. Rent-seeking is when a company spends resources (e.g. lobbying) to get legislators or other decision makers to pass laws or create regulations that benefit the company instead of it having to engage in productive activities. The result is regulatory capture. The more centralised decision-making is, the greater the negative consequences from such rent-seeking will be for society at large. This is known.

Another economist, Elinor Ostrom, was given the same prize in the great financial crisis year of 2009. In her research, she had found that local communities where people had influence over rules and regulations, as well as how violations there-of were handled, were much better suited to look after common resources than centralised bodies. To borrow a term from the combative Nassim Nicholas Taleb: everything was better handled when decision makers had "skin in the game".

A third economist, Friedrich von Hayek, was given this prize as early as 1974, partly because he showed that central planning could not possibly take into account all relevant information. The information needed in economic planning is by its very nature distributed, and will never be available to a central planning committee, or even to an AI.

Moreover, human systems are complex and not just complicated. When you realise this, you also understand why the forecasts made by central planners often end up wildly off the mark - and at times in a catastrophic way. (This in itself is an argument for relying more on factors outside of the models in the decision-making process.)

From Buchanan's, Ostrom's, Taleb's or von Hayek's perspectives, it also becomes difficult to believe that today's bureaucrats are the most suited to manage and price e.g. climate risks. One can compare with the insurance industry, which has both a long habit of pricing risks as well as "skin in the game" - two things sorely missing in today's planning bodies.

Instead of preventing fools, we may be enabling madmen

An even more troubling conclusion is that centralisation tends to transfer power to people who perhaps shouldn't have more of that good. "Not all psychopaths are in prison - some are in the boardroom," psychologist Robert Hare once said during a lecture. Most people have probably known for a long time that those with sharp elbows and who don't hesitate to stab a colleague in the back can climb quickly in organisations. In recent years, this fact seems to have become increasingly well known even in academia.

You will thus tend to encounter an increased prevalance of individuals with narcissistic and sociopathic traits the higher up you get in the the status hierarchy. And if working in large organisations (such as the European Union or Congress) or in large corporations, is perceived as higher status - which is generally the case, then it follows that the more we centralise, the more we will be governed by people with less flattering Dark Triad traits.

By their fruits ye shall know them

Perhaps it is thus not a coincidence that we have so many crises. Perhaps centralisation, globalisation, etc. cause crises. Perhaps the "elites" and their planning bureaucrats are, in fact, not the salt of the earth and the light of the world. Perhaps President Trump even had a point when he said "they are not sending their best".

https://www.youtube.com/watch?v=w4b8xgaiuj0

The opposite of centralisation is decentralisation. And while most people may still be aware that decentralisation can be a superpower within the business world, it's time we remind ourselves that this also applies to the economy - and society - at large, and preferably before the next Great Leap Forward is fully thrust upon us.

-

@ b12b632c:d9e1ff79

2024-05-29 12:10:18

@ b12b632c:d9e1ff79

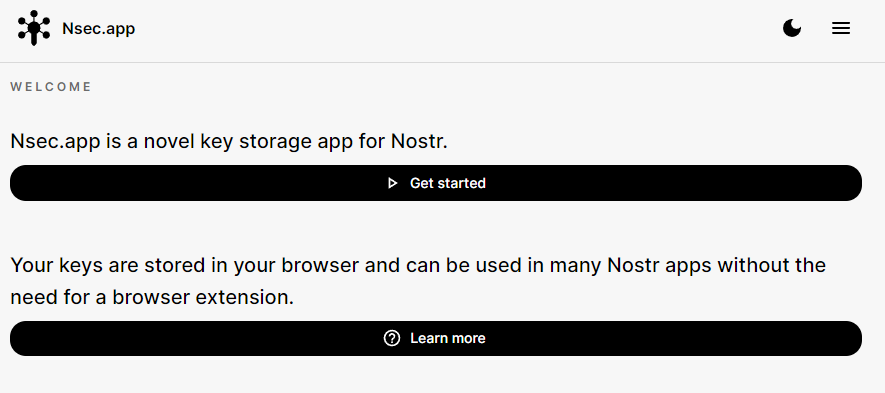

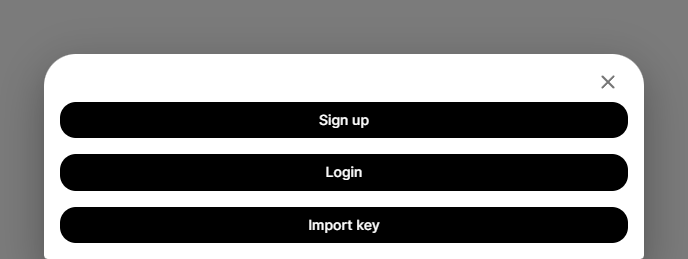

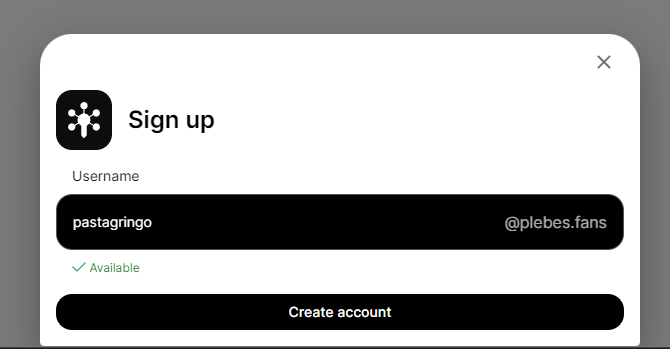

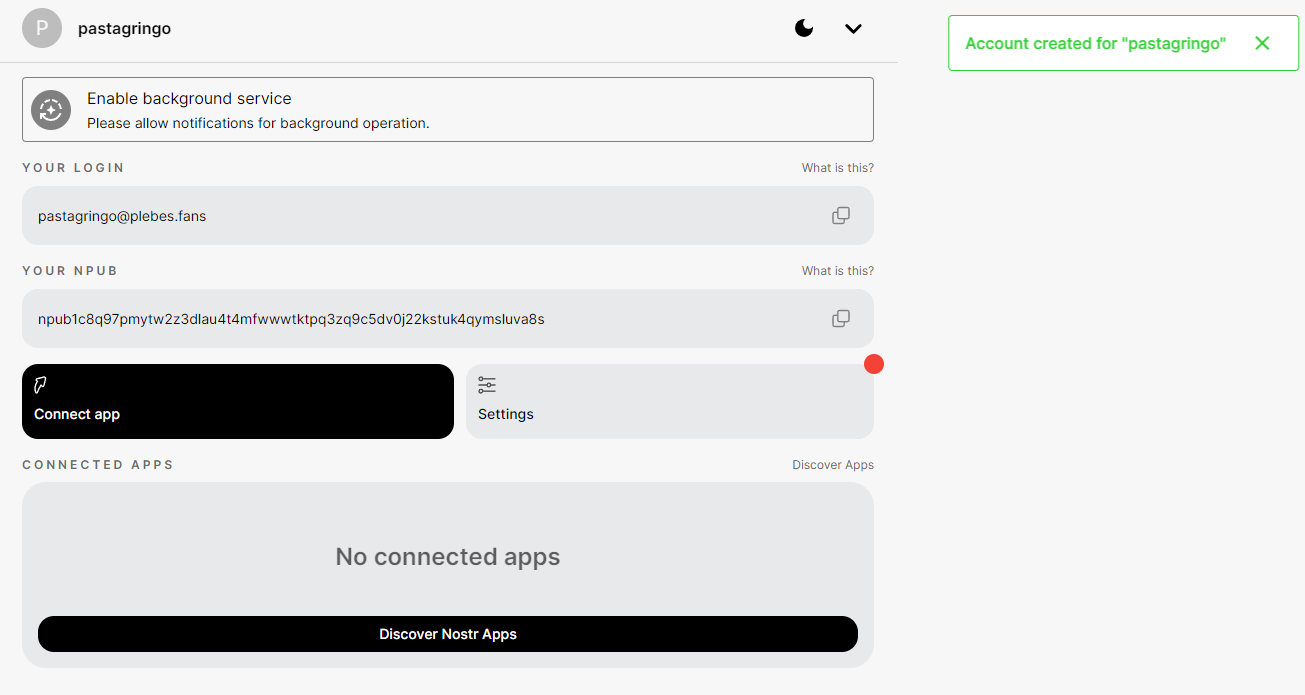

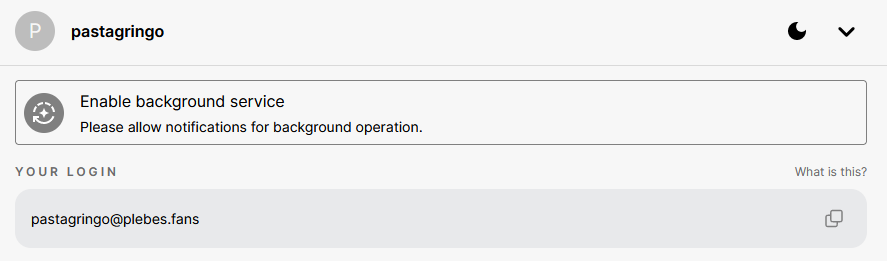

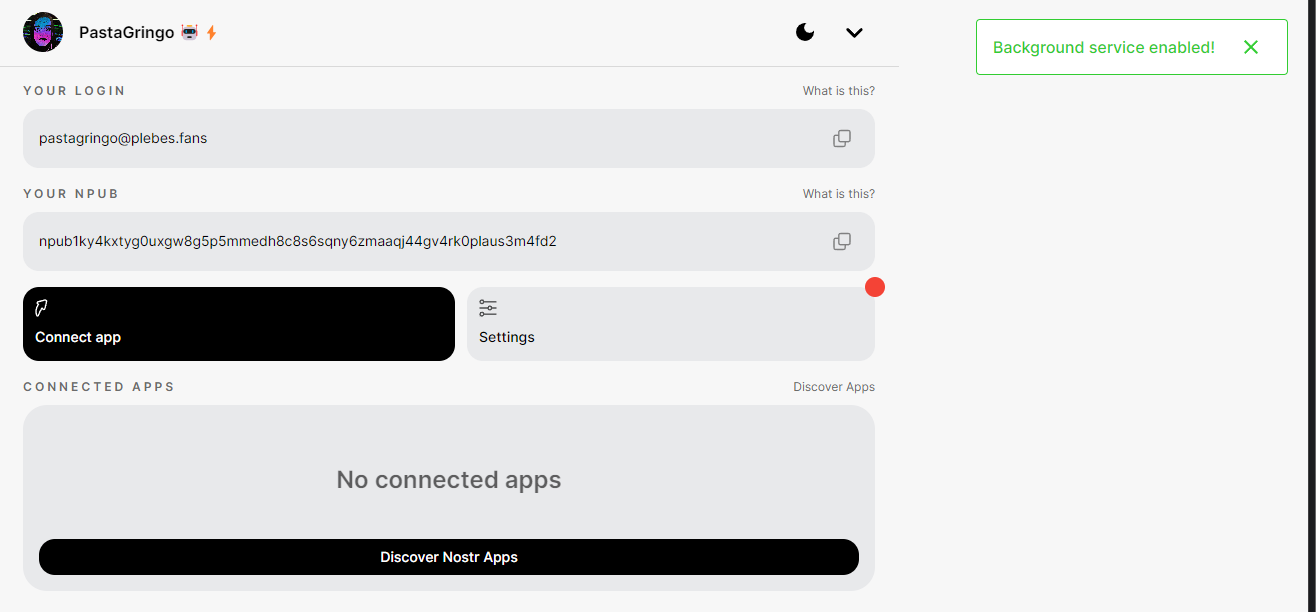

2024-05-29 12:10:18One other day on Nostr, one other app!

Today I'll present you a new self-hosted Nostr blog web application recently released on github by dtonon, Oracolo:

https://github.com/dtonon/oracolo

Oracolo is a minimalist blog powered by Nostr, that consists of a single html file, weighing only ~140Kb. You can use whatever Nostr client that supports long format (habla.news, yakihonne, highlighter.com, etc ) to write your posts, and your personal blog is automatically updated.

It works also without a web server; for example you can send it via email as a business card.Oracolo fetches Nostr data, builds the page, execute the JavaScript code and displays article on clean and sobr blog (a Dark theme would be awesome 👀).

Blog articles are nostr events you published or will publish on Nostr relays through long notes applications like the ones quoted above.

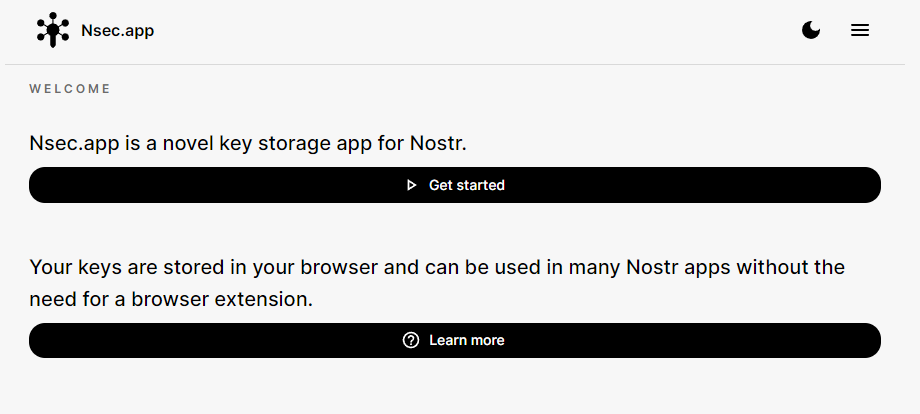

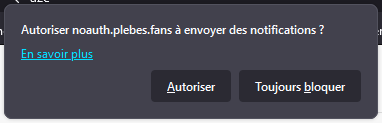

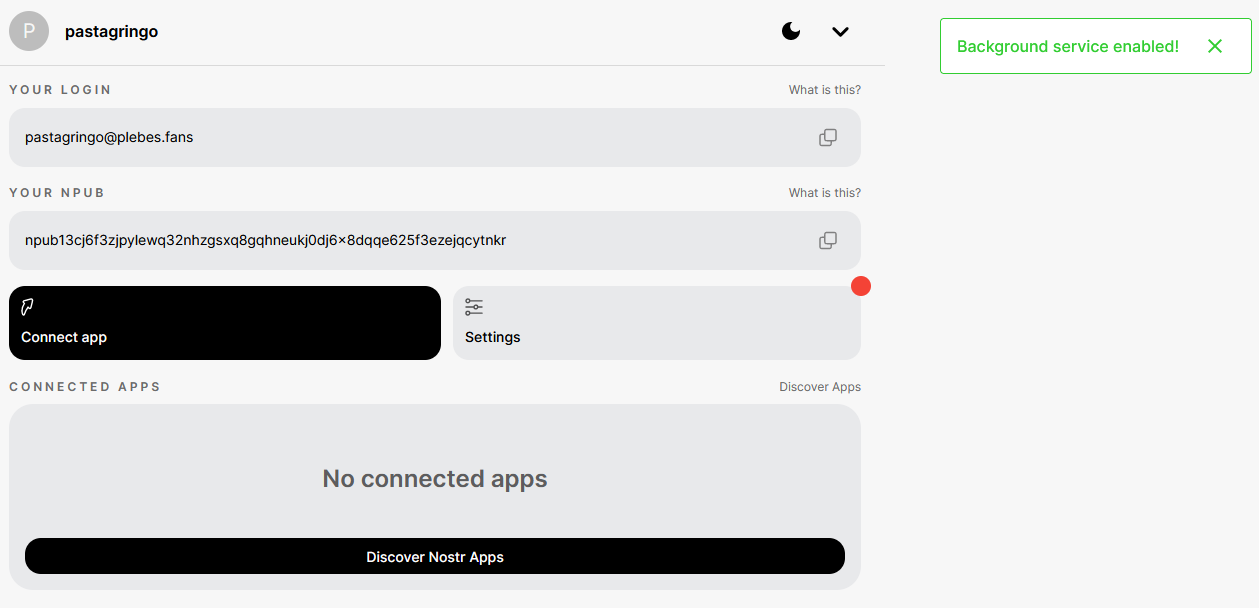

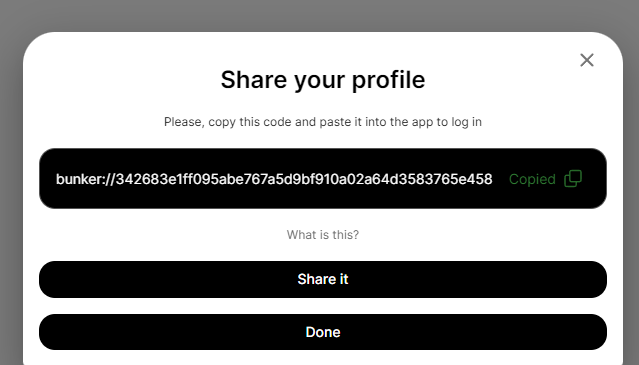

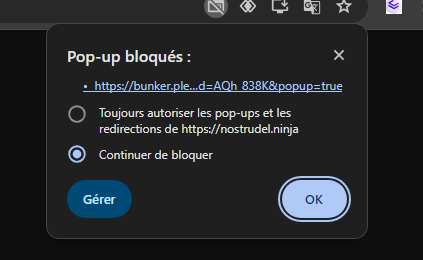

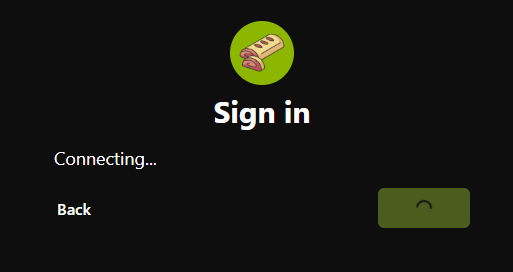

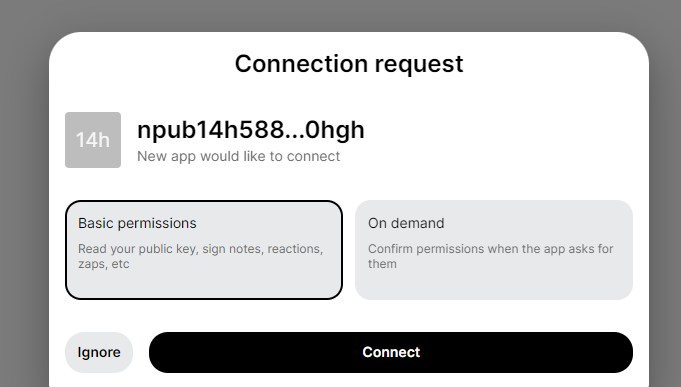

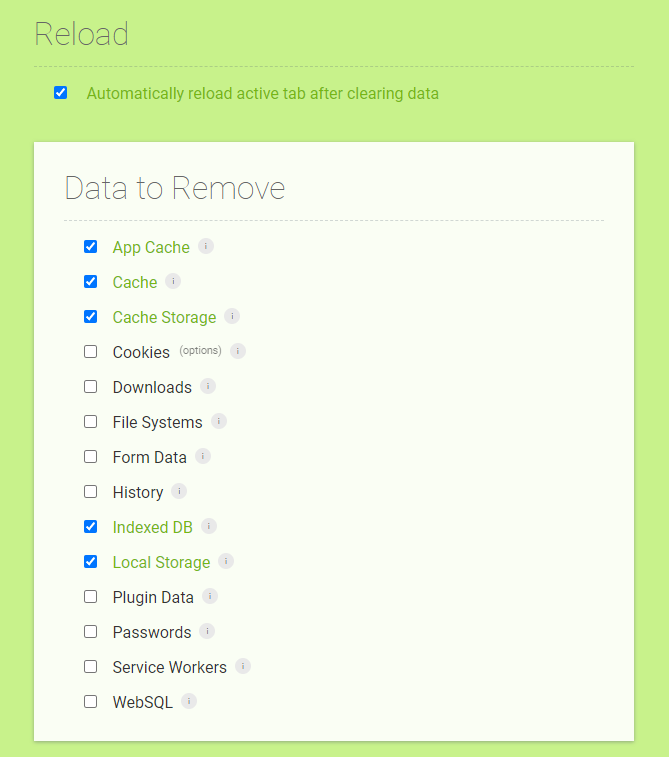

Don't forget to use a NIP07 web browser extensions to login on those websites. Old time where we were forced to fill our nsec key is nearly over!

For the hurry ones of you, you can find here the Oracolo demo with my Nostr long notes article. It will include this one when I'll publish it on Nostr!

https://oracolo.fractalized.net/

How to self-host Oracolo?

You can build the application locally or use a docker compose stack to run it (or any other method). I just build a docker compose stack with Traefik and an Oracolo docker image to let you quickly run it.

The oracolo-docker github repo is available here:

https://github.com/PastaGringo/oracolo-docker

PS: don't freak out about the commits number, oracolo has been the lucky one to let me practrice docker image CI/CD build/push with Forgejo, that went well but it took me a while before finding how to make Forgejo runner dood work 😆). Please ping me on Nostr if you are interested by an article on this topic!

This repo is a mirror from my new Forgejo git instance where the code has been originaly published and will be updated if needed (I think it will):

https://git.fractalized.net/PastaGringo/oracolo-docker

Here is how to do it.

1) First, you need to create an A DNS record into your domain.tld zone. You can create a A with "oracolo" .domain.tld or "*" .domain.tld. The second one will allow traefik to generate all the future subdomain.domain.tld without having to create them in advance. You can verify DNS records with the website https://dnschecker.org.

2) Clone the oracolo-docker repository:

bash git clone https://git.fractalized.net/PastaGringo/oracolo-docker.git cd oracolo-docker3) Rename the .env.example file:

bash mv .env.example .env4) Modify and update your .env file with your own infos:

```bash

Let's Encrypt email used to generate the SSL certificate

LETSENCRYPT_EMAIL=

domain for oracolo. Ex: oracolo.fractalized.net

ORACOLO_DOMAIN=

Npub author at "npub" format, not HEX.

NPUB=

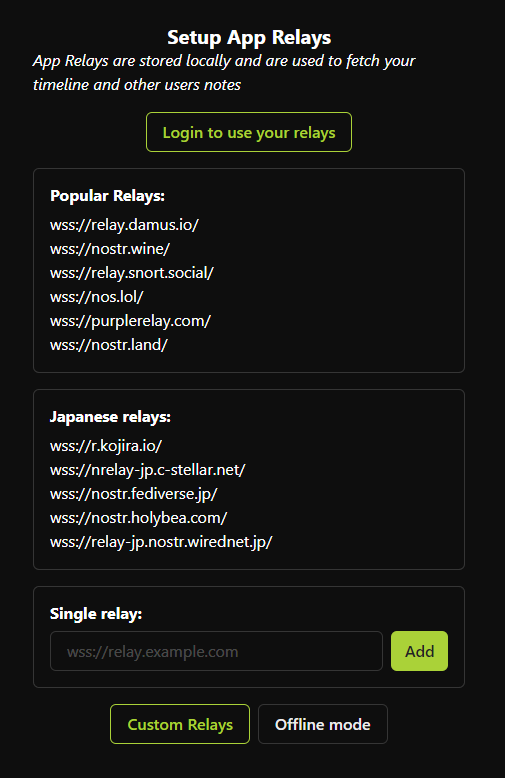

Relays where Oracolo will retrieve the Nostr events.

Ex: "wss://nostr.fractalized.net, wss://rnostr.fractalized.net"

RELAYS=

Number of blog article with an thumbnail. Ex: 4

TOP_NOTES_NB= ```

5) Compose Oracolo:

bash docker compose up -d && docker compose logs -f oracolo traefikbash [+] Running 2/0 ✔ Container traefik Running 0.0s ✔ Container oracolo Running 0.0s WARN[0000] /home/pastadmin/DEV/FORGEJO/PLAY/oracolo-docker/docker-compose.yml: `version` is obsolete traefik | 2024-05-28T19:24:18Z INF Traefik version 3.0.0 built on 2024-04-29T14:25:59Z version=3.0.0 oracolo | oracolo | ___ ____ ____ __ ___ _ ___ oracolo | / \ | \ / | / ] / \ | | / \ oracolo | | || D )| o | / / | || | | | oracolo | | O || / | |/ / | O || |___ | O | oracolo | | || \ | _ / \_ | || || | oracolo | | || . \| | \ || || || | oracolo | \___/ |__|\_||__|__|\____| \___/ |_____| \___/ oracolo | oracolo | Oracolo dtonon's repo: https://github.com/dtonon/oracolo oracolo | oracolo | ╭────────────────────────────╮ oracolo | │ Docker Compose Env Vars ⤵️ │ oracolo | ╰────────────────────────────╯ oracolo | oracolo | NPUB : npub1ky4kxtyg0uxgw8g5p5mmedh8c8s6sqny6zmaaqj44gv4rk0plaus3m4fd2 oracolo | RELAYS : wss://nostr.fractalized.net, wss://rnostr.fractalized.net oracolo | TOP_NOTES_NB : 4 oracolo | oracolo | ╭───────────────────────────╮ oracolo | │ Configuring Oracolo... ⤵️ │ oracolo | ╰───────────────────────────╯ oracolo | oracolo | > Updating npub key with npub1ky4kxtyg0uxgw8g5p5mmedh8c8s6sqny6zmaaqj44gv4rk0plaus3m4fd2... ✅ oracolo | > Updating nostr relays with wss://nostr.fractalized.net, wss://rnostr.fractalized.net... ✅ oracolo | > Updating TOP_NOTE with value 4... ✅ oracolo | oracolo | ╭───────────────────────╮ oracolo | │ Installing Oracolo ⤵️ │ oracolo | ╰───────────────────────╯ oracolo | oracolo | added 122 packages, and audited 123 packages in 8s oracolo | oracolo | 20 packages are looking for funding oracolo | run `npm fund` for details oracolo | oracolo | found 0 vulnerabilities oracolo | npm notice oracolo | npm notice New minor version of npm available! 10.7.0 -> 10.8.0 oracolo | npm notice Changelog: https://github.com/npm/cli/releases/tag/v10.8.0 oracolo | npm notice To update run: npm install -g npm@10.8.0 oracolo | npm notice oracolo | oracolo | >>> done ✅ oracolo | oracolo | ╭─────────────────────╮ oracolo | │ Building Oracolo ⤵️ │ oracolo | ╰─────────────────────╯ oracolo | oracolo | > oracolo@0.0.0 build oracolo | > vite build oracolo | oracolo | 7:32:49 PM [vite-plugin-svelte] WARNING: The following packages have a svelte field in their package.json but no exports condition for svelte. oracolo | oracolo | @splidejs/svelte-splide@0.2.9 oracolo | @splidejs/splide@4.1.4 oracolo | oracolo | Please see https://github.com/sveltejs/vite-plugin-svelte/blob/main/docs/faq.md#missing-exports-condition for details. oracolo | vite v5.2.11 building for production... oracolo | transforming... oracolo | ✓ 84 modules transformed. oracolo | rendering chunks... oracolo | oracolo | oracolo | Inlining: index-C6McxHm7.js oracolo | Inlining: style-DubfL5gy.css oracolo | computing gzip size... oracolo | dist/index.html 233.15 kB │ gzip: 82.41 kB oracolo | ✓ built in 7.08s oracolo | oracolo | >>> done ✅ oracolo | oracolo | > Copying Oracolo built index.html to nginx usr/share/nginx/html... ✅ oracolo | oracolo | ╭────────────────────────╮ oracolo | │ Configuring Nginx... ⤵️ │ oracolo | ╰────────────────────────╯ oracolo | oracolo | > Copying default nginx.conf file... ✅ oracolo | oracolo | ╭──────────────────────╮ oracolo | │ Starting Nginx... 🚀 │ oracolo | ╰──────────────────────╯ oracolo |If you don't have any issue with the Traefik container, Oracolo should be live! 🔥

You can now access it by going to the ORACOLO_DOMAIN URL configured into the .env file.

Have a good day!

Don't hesisate to follow dtonon on Nostr to follow-up the future updates ⚡🔥

See you soon in another Fractalized story!

PastaGringo 🤖⚡ -

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28O Planetinha

Fumaça verde me entrando pelas narinas e um coro desafinado fazia uma base melódica.

nos confins da galáxia havia um planetinha isolado. Era um planeta feliz.

O homem vestido de mago começava a aparecer por detrás da fumaça verde.

O planetinha recebeu três presentes, mas o seu habitante, o homem, estava num estado de confusão tão grande que ameaçava estragá-los. Os homens já havia escravizado o primeiro presente, a vida; lutavam contra o segundo presente, a morte; e havia alguns que achavam que deviam destruir totalmente o terceiro, o amor, e com isto levar a desordem total ao pobre planetinha perdido, que se chamava Terra.

O coro desafinado entrou antes do "Terra" cantando várias vezes, como se imitasse um eco, "terra-terra-terraaa". Depois de uma pausa dramática, o homem vestido de mago voltou a falar.

Terra, nossa nave mãe.

Neste momento eu me afastei. À frente do palco onde o mago e seu coral faziam apelos à multidão havia vários estandes cobertos com a tradicional armação de quatro pernas e lona branca. Em todos os cantos da praça havia gente, gente dos mais variados tipos. Visitantes curiosos que se aproximavam atraídos pela fumaça verde e as barraquinhas, gente que aproveitava o movimento para vender doces sem pagar imposto, casais que se abraçavam de pé para espantar o frio, os tradicionais corredores que faziam seu cooper, gente cheia de barba e vestida para imitar os hippies dos anos 60 e vender colares estendidos no chão, transeuntes novos e velhos, vestidos como baladeiros ou como ativistas do ônibus grátis, grupos de ciclistas entusiastas.

O mago fazia agora apelos para que nós, os homens, habitantes do isolado planetinha, passássemos a ver o planetinha, nossa nave mãe, como um todo, e adquiríssemos a consciência de que ele estava entrando em maus lençóis. A idéia, reforçada pela logomarca do evento, era que parássemos de olhar só para a nossa vida e pensássemos no planeta.

A logomarca do evento, um desenho estilizado do planeta Terra, nada tinha a ver com seu nome: "Festival Andando de Bem com a Vida", mas havia sido ali colocada estrategicamente pelos organizadores, de quem parecia justamente sair a mensagem dita pelo mago.

Aquela multidão de pessoas que, assim como eu, tinham suas próprias preocupações, não podiam ver o quadro caótico que formavam, cada uma com seus atos isolados, ali naquela praça isolada, naquele planeta isolado. Quando o hippie barbudo, quase um Osho, assustava um casal para tentar vender-lhes um colar, a quantidade de caos que isto acrescentava à cena era gigantesca. Por um segundo, pude ver, como se estivesse de longe e acima, com toda a pretensão que este estado imaginativo carrega, a cena completa do caos.

Uma nave-mãe, dessas de ficção científica, habitada por milhões de pessoas, seguia no espaço sem rumo, e sem saber que logo à frente um longo precipício espacial a esperava, para a desgraça completa sua e de seus habitantes.

Acostumados àquela nave tanto quanto outrora estiveram acostumados à sua terra natal, os homens viviam as próprias vidas sem nem se lembrar que estavam vagando pelo espaço. Ninguém sabia quem estava conduzindo a nave, e ninguém se importava.

No final do filme descobre-se que era a soma completa do caos que cada habitante produzia, com seus gestos egoístas e incapazes de levar em conta a totalidade, é que determinava a direção da nave-mãe. O efeito, no entanto, não era imediato, como nunca é. Havia gente de verdade encarregada de conduzir a nave, mas era uma gente bêbada, mau-caráter, que vivia brigando pelo controle da nave e o poder que isto lhes dava. Poder, status, dinheiro!

Essa gente bêbada era atraída até ali pela corrupção das instituições e da moral comum que, no fundo no fundo, era causada pelo egoísmo da população, através de um complexo -- mas que no filme aparece simplificado pela ação individual de um magnata do divertimento público -- processo social.

O homem vestido de mago era mais um agente causador de caos, com sua cena cheia de fumaça e sua roupa estroboscópica, ele achava que estava fazendo o bem ao alertar sua platéia, todos as sextas-feiras, de que havia algo que precisava ser feito, que cada um que estava ali ouvindo era responsável pelo planeta. A sua incapacidade, porém, de explicar o que precisava ser feito só aumentava a angústia geral; a culpa que ele jogava sobre seu público, e que era prontamente aceita e passada em frente, aos familiares e amigos de cada um, atormentava-os diariamente e os impedia de ter uma vida decente no trabalho e em casa. As famílias, estressadas, estavam constantemente brigando e os motivos mais insignificantes eram responsáveis pelas mais horrendas conseqüências.

O mago, que após o show tirava o chapéu entortado e ia tomar cerveja num boteco, era responsável por uma parcela considerável do caos que levava a nave na direção do seu desgraçado fim. No filme, porém, um dos transeuntes que de passagem ouviu um pedaço do discurso do mago despertou em si mesmo uma consiência transformadora e, com poderes sobre-humanos que lhe foram então concedidos por uma ordem iniciática do bem ou não, usando só os seus poderes humanos mesmo, o transeunte -- na primeira versão do filme um homem, na segunda uma mulher -- consegue consertar as instituições e retirar os bêbados da condução da máquina. A questão da moral pública é ignorada para abreviar a trama, já com duas horas e quarenta de duração, mas subentende-se que ela também fora resolvida.

No planeta Terra real, que não está indo em direção alguma, preso pela gravidade ao Sol, e onde as pessoas vivem a própria vida porque lhes é impossível viver a dos outros, não têm uma consciência global de nada porque só é possível mesmo ter a consciência delas mesmas, e onde a maioria, de uma maneira ou de outra, está tentando como pode, fazer as coisas direito, o filme é exibido.

Para a maioria dos espectadores, é um filme que evoca reflexões, um filme forte. Por um segundo elas têm o mesmo vislumbre do caos generalizado que eu tive ali naquela praça. Para uma pequena parcela dos espectadores -- entre eles alguns dos que estavam na platéia do mago, o próprio mago, o seguidor do Osho, o casal de duas mulheres e o vendedor de brigadeiros, mas aos quais se somam também críticos de televisão e jornal e gente que fala pelos cotovelos na internet -- o filme é um horror, o filme é uma vulgarização de um problema real e sério, o filme apela para a figura do herói salvador e passa uma mensagem totalmente errada, de que a maioria da população pode continuar vivendo as suas própria vidinhas miseráveis enquanto espera por um herói que vem do Olimpo e os salva da mixórdia que eles mesmos causaram, é um filme que presta um enorme desserviço à causa.

No dia seguinte ao lançamento, num bar meio caro ali perto da praça, numa mesa com oito pessoas, entre elas seis do primeiro grupo e oito do segundo, discute-se se o filme levará ou não o Oscar. Eu estou em casa dormindo e não escuto nada.

-

@ 4523be58:ba1facd0

2024-05-28 11:05:17

@ 4523be58:ba1facd0

2024-05-28 11:05:17NIP-116

Event paths

Description

Event kind

30079denotes an event defined by its event path rather than its event kind.The event directory path is included in the event path, specified in the event's

dtag. For example, an event path might beuser/profile/name, whereuser/profileis the directory path.Relays should parse the event directory from the event path

dtag and index the event by it. Relays should support "directory listing" of kind30079events using the#ffilter, such as{"#f": ["user/profile"]}.For backward compatibility, the event directory should also be saved in the event's

ftag (for "folder"), which is already indexed by some relay implementations, and can be queried using the#ffilter.Event content should be a JSON-encoded value. An empty object

{}signifies that the entry at the event path is itself a directory. For example, when savinguser/profile/name:Bob, you should also saveuser/profile:{}so the subdirectory can be listed underuser.In directory names, slashes should be escaped with a double slash.

Example

Event

json { "tags": [ ["d", "user/profile/name"], ["f", "user/profile"] ], "content": "\"Bob\"", "kind": 30079, ... }Query

json { "#f": ["user/profile"], "authors": ["[pubkey]"] }Motivation

To make Nostr an "everything app," we need a sustainable way to support new kinds of applications. Browsing Nostr data by human-readable nested directories and paths rather than obscure event kind numbers makes the data more manageable.

Numeric event kinds are not sustainable for the infinite number of potential applications. With numeric event kinds, developers need to find an unused number for each new application and announce it somewhere, which is cumbersome and not scalable.

Directories can also replace monolithic list events like follow lists or profile details. You can update a single directory entry such as

user/profile/nameorgroups/follows/[pubkey]without causing an overwrite of the whole profile or follow list when your client is out-of-sync with the most recent list version, as often happens on Nostr.Using

d-tagged replaceable events for reactions, such as{tags: [["d", "reactions/[eventId]"]], content: "\"👍\"", kind: 30079, ...}would make un-reacting trivial: just publish a new event with the samedtag and an empty content. Toggling a reaction on and off would not cause a flurry of new reaction & delete events that all need to be persisted.Implementations

- Relays that support tag-replaceable events and indexing by arbitrary tags (in this case

f) already support this feature. - IrisDB client side library: treelike data structure with subscribable nodes.

https://github.com/nostr-protocol/nips/pull/1266

- Relays that support tag-replaceable events and indexing by arbitrary tags (in this case

-

@ b12b632c:d9e1ff79

2024-04-24 20:21:27

@ b12b632c:d9e1ff79

2024-04-24 20:21:27What's Blossom?

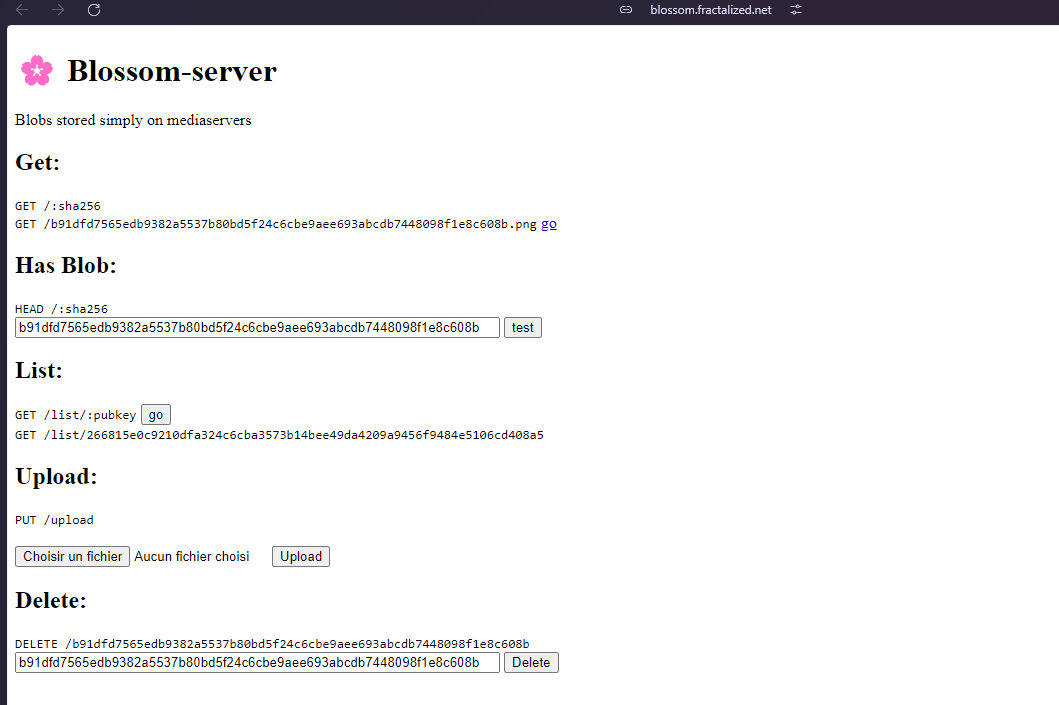

Blossom offers a bunch of HTTP endpoints that let Nostr users stash and fetch binary data on public servers using the SHA256 hash as a universal ID.

You can find more -precise- information about Blossom on the Nostr article published today by hzrd149, the developper behind it:

nostr:naddr1qqxkymr0wdek7mfdv3exjan9qgszv6q4uryjzr06xfxxew34wwc5hmjfmfpqn229d72gfegsdn2q3fgrqsqqqa28e4v8zy

You find the Blossom github repo here:

GitHub - hzrd149/blossom: Blobs stored simply on mediaservers https://github.com/hzrd149/blossom

Meet Blobs

Blobs are files with SHA256 hashes as IDs, making them unique and secure. You can compute these IDs from the files themselves using the sha256 hashing algorithm (when you run

sha256sum bitcoin.pdf).Meet Drives

Drives are like organized events on Nostr, mapping blobs to filenames and extra info. It's like setting up a roadmap for your data.

How do Servers Work?

Blossom servers have four endpoints for users to upload and handle blobs:

GET /<sha256>: Get blobs by their SHA256 hash, maybe with a file extension. PUT /upload: Chuck your blobs onto the server, verified with signed Nostr events. GET /list/<pubkey>: Peek at a list of blobs tied to a specific public key for smooth management. DELETE /<sha256>: Trash blobs from the server when needed, keeping things tidy.Yon can find detailed information about the Blossom Server Implementation here..

https://github.com/hzrd149/blossom/blob/master/Server.md

..and the Blossom-server source code is here:

https://github.com/hzrd149/blossom-server

What's Blossom Drive?

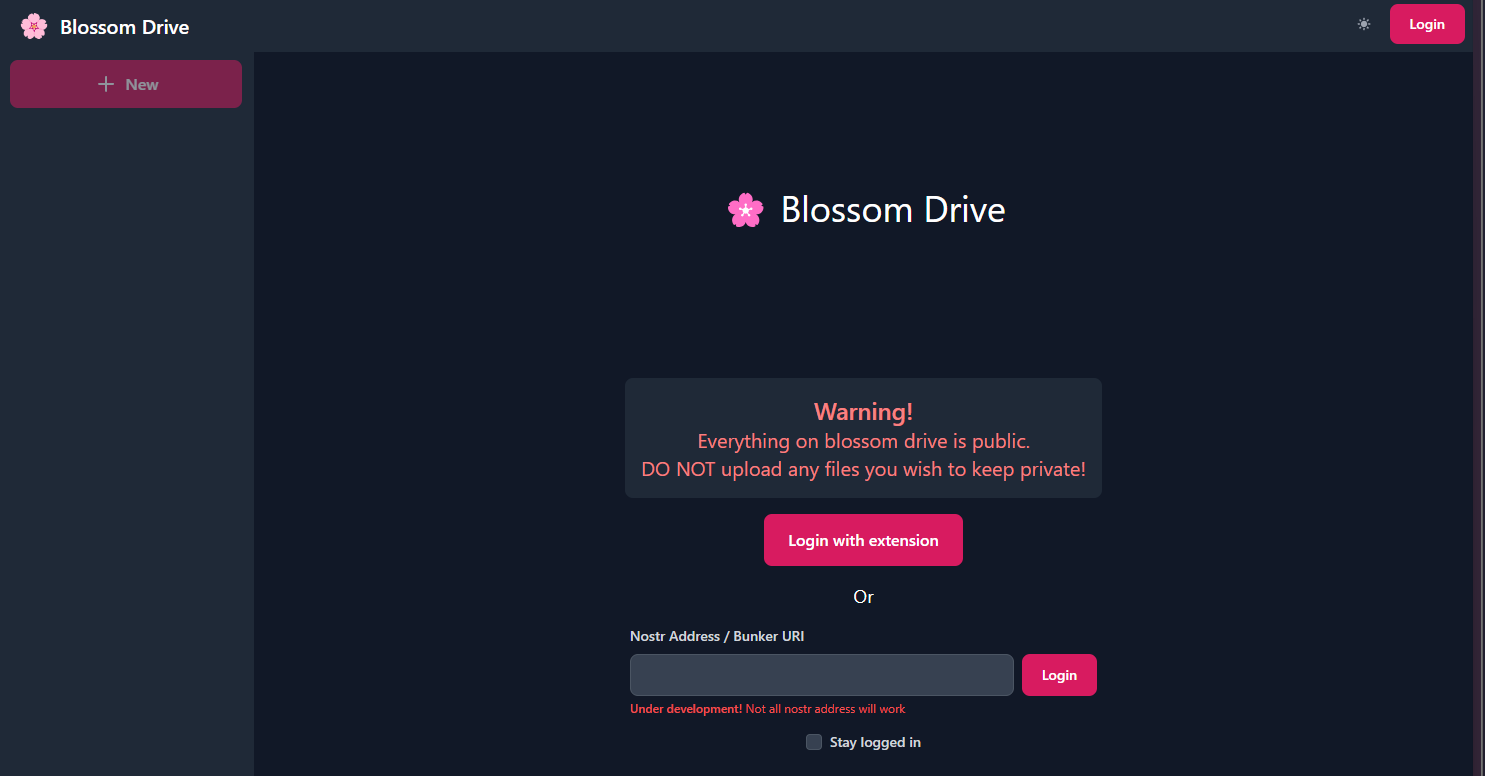

Think of Blossom Drive as the "Front-End" (or a public cloud drive) of Blossom servers, letting you upload, manage, share your files/folders blobs.

Source code is available here:

https://github.com/hzrd149/blossom-drive

Developpers

If you want to add Blossom into your Nostr client/app, the blossom-client-sdk explaining how it works (with few examples 🙏) is published here:

https://github.com/hzrd149/blossom-client-sdk

How to self-host Blossom server & Blossom Drive

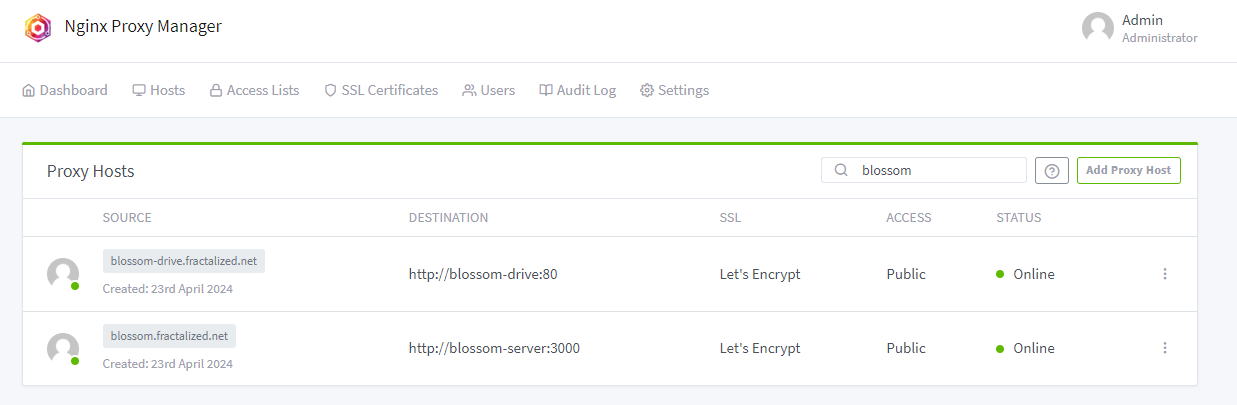

We'll use docker compose to setup Blossom server & drive. I included Nginx Proxy Manager because it's the Web Proxy I use for all the Fractalized self-hosted services :

Create a new docker-compose file:

~$ nano docker-compose.ymlInsert this content into the file:

``` version: '3.8' services:

blossom-drive: container_name: blossom-drive image: pastagringo/blossom-drive-docker

ports:

- '80:80'

blossom-server: container_name: blossom-server image: 'ghcr.io/hzrd149/blossom-server:master'

ports:

- '3000:3000'

volumes: - './blossom-server/config.yml:/app/config.yml' - 'blossom_data:/app/data'nginxproxymanager: container_name: nginxproxymanager image: 'jc21/nginx-proxy-manager:latest' restart: unless-stopped ports: - '80:80' - '81:81' - '443:443' volumes: - ./nginxproxymanager/data:/data - ./nginxproxymanager/letsencrypt:/etc/letsencrypt - ./nginxproxymanager/_hsts_map.conf:/app/templates/_hsts_map.conf

volumes: blossom_data: ```

You now need to personalize the blossom-server config.yml:

bash ~$ mkdir blossom-server ~$ nano blossom-server/config.ymlInsert this content to the file (CTRL+X & Y to save/exit):

```yaml

Used when listing blobs

publicDomain: https://blossom.fractalized.net

databasePath: data/sqlite.db

discovery: # find files by querying nostr relays nostr: enabled: true relays: - wss://nostrue.com - wss://relay.damus.io - wss://nostr.wine - wss://nos.lol - wss://nostr-pub.wellorder.net - wss://nostr.fractalized.net # find files by asking upstream CDNs upstream: enabled: true domains: - https://cdn.satellite.earth # don't set your blossom server here!

storage: # local or s3 backend: local local: dir: ./data # s3: # endpoint: https://s3.endpoint.com # bucket: blossom # accessKey: xxxxxxxx # secretKey: xxxxxxxxx # If this is set the server will redirect clients when loading blobs # publicURL: https://s3.region.example.com/

# rules are checked in descending order. if a blob matches a rule it is kept # "type" (required) the type of the blob, "" can be used to match any type # "expiration" (required) time passed since last accessed # "pubkeys" (optional) a list of owners # any blobs not matching the rules will be removed rules: # mime type of blob - type: text/ # time since last accessed expiration: 1 month - type: "image/" expiration: 1 week - type: "video/" expiration: 5 days - type: "model/" expiration: 1 week - type: "" expiration: 2 days

upload: # enable / disable uploads enabled: true # require auth to upload requireAuth: true # only check rules that include "pubkeys" requirePubkeyInRule: false

list: requireAuth: false allowListOthers: true

tor: enabled: false proxy: "" ```

You need to update few values with your own:

- Your own Blossom server public domain :

publicDomain: https://YourBlossomServer.YourDomain.tldand upstream domains where Nostr clients will also verify if the Blossom server own the file blob: :

upstream: enabled: true domains: - https://cdn.satellite.earth # don't set your blossom server here!- The Nostr relays where you want to publish your Blossom events (I added my own Nostr relay):

yaml discovery: # find files by querying nostr relays nostr: enabled: true relays: - wss://nostrue.com - wss://relay.damus.io - wss://nostr.wine - wss://nos.lol - wss://nostr-pub.wellorder.net - wss://nostr.fractalized.netEverything is setup! You can now compose your docker-compose file:

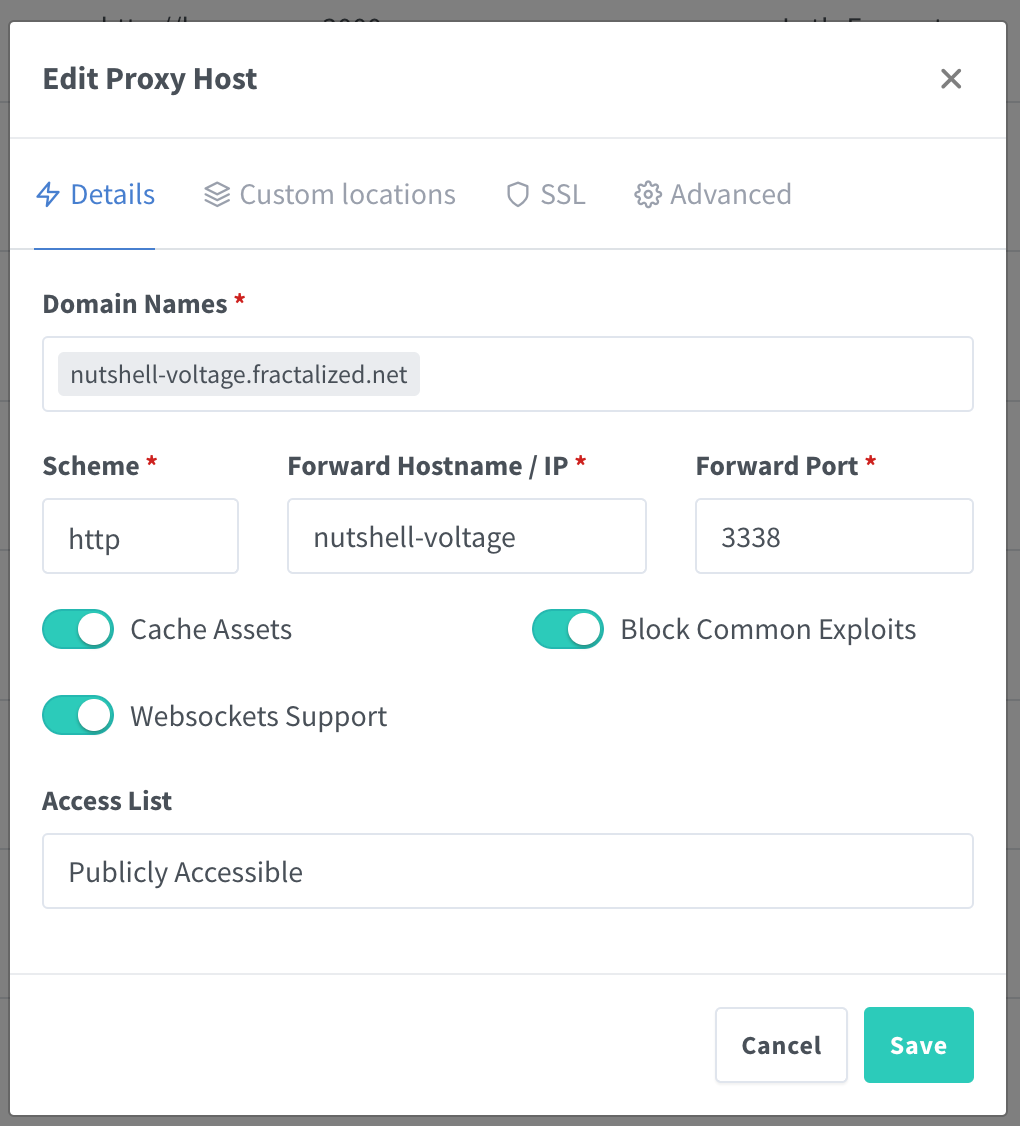

~$ docker compose up -dI will let your check this article to know how to configure and use Nginx Proxy Manager.

You can check both Blossom containers logs with this command:

~$ docker compose logs -f blossom-drive blossom-serverRegarding the Nginx Proxy Manager settings for Blossom, here is the configuration I used:

PS: it seems the naming convention for the kind of web service like Blossom is named "CDN" (for: "content delivery network"). It's not impossible in a near future I rename my subdomain blossom.fractalized.net to cdn.blossom.fractalized.net and blossom-drive.fractalized.net to blossom.fractalized.net 😅

Do what you prefer!

After having configured everything, you can now access Blossom server by going to your Blossom server subdomain. You should see a homepage as below:

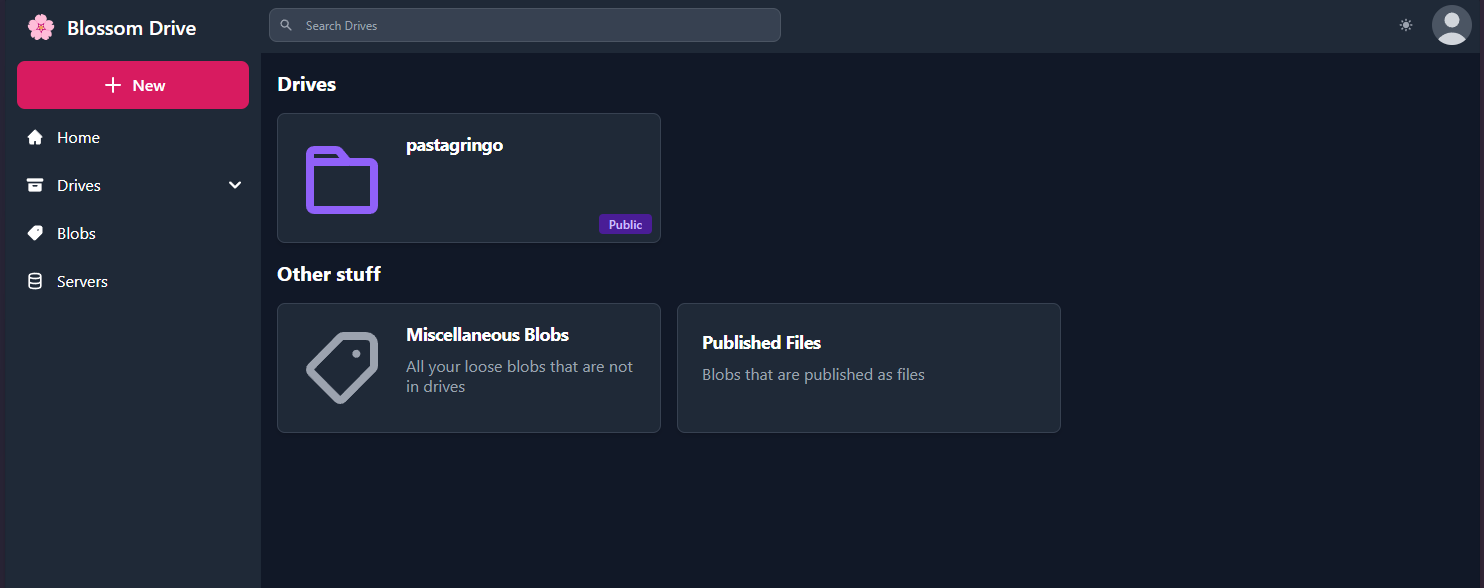

Same thing for the Blossom Drive, you should see this homepage:

You can now login with your prefered method. In my case, I login on Blossom Drive with my NIP-07 Chrome extension.

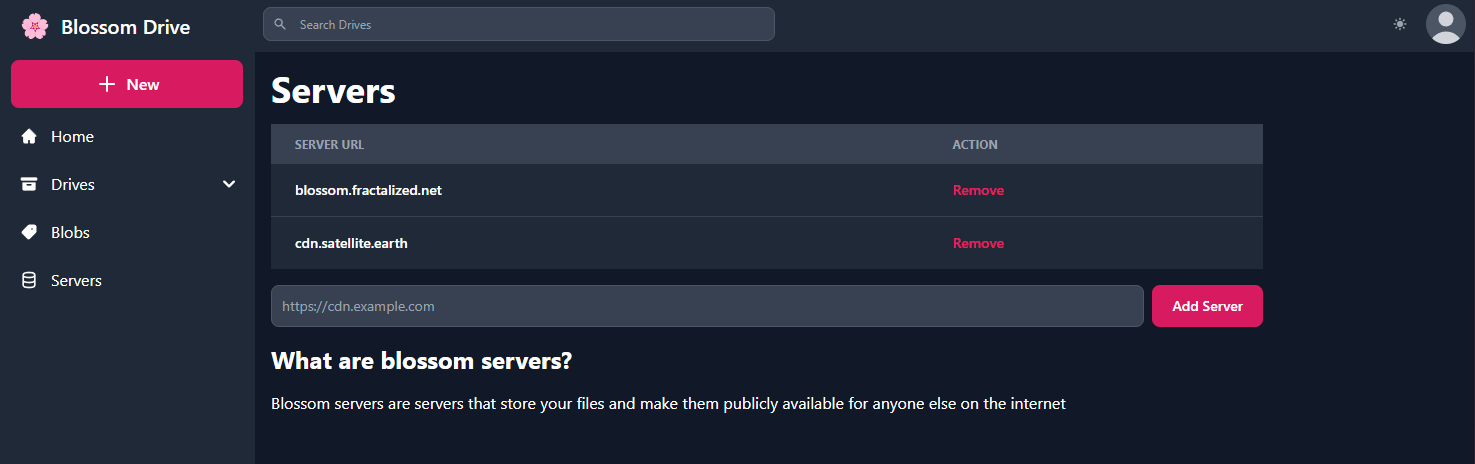

You now need to go the "Servers" tab to add some Blossom servers, including the fresh one you just installed.

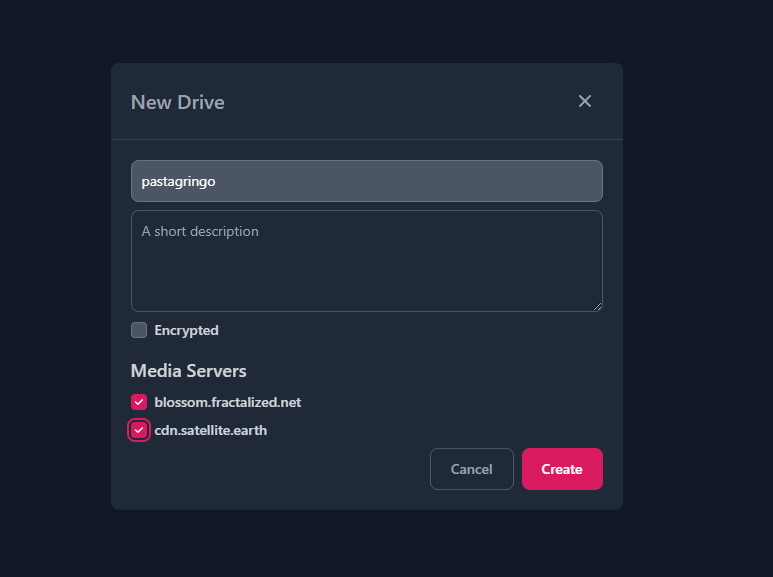

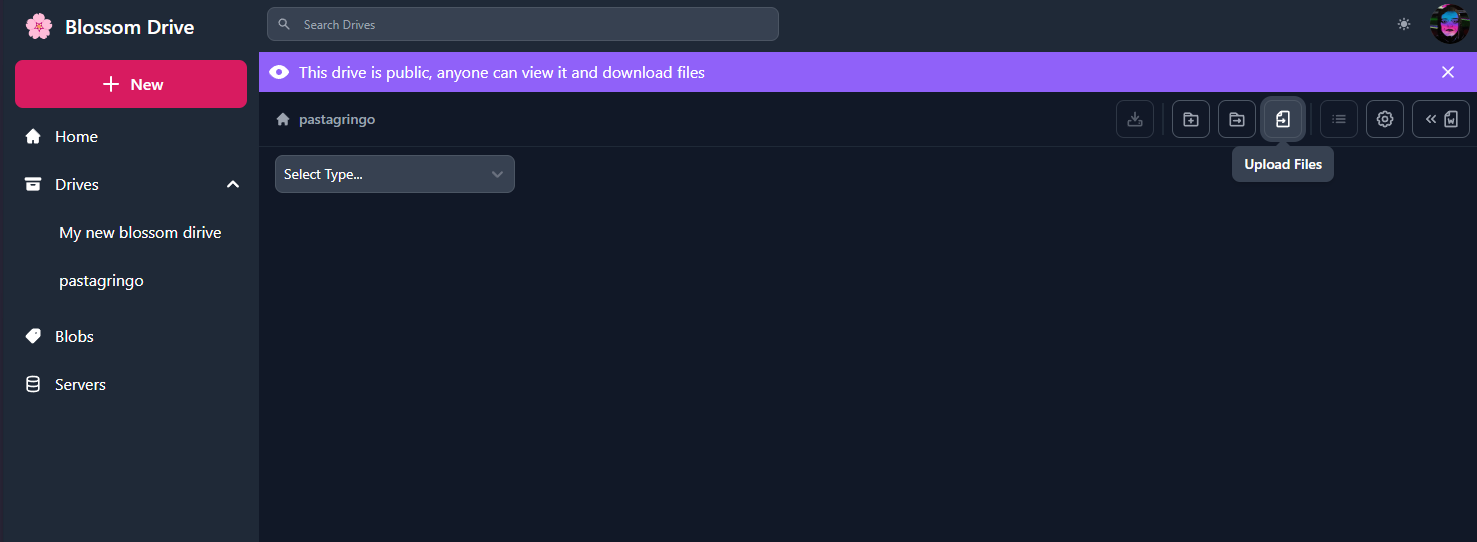

You can now create your first Blossom Drive by clicking on "+ New" > "Drive" on the top left button:

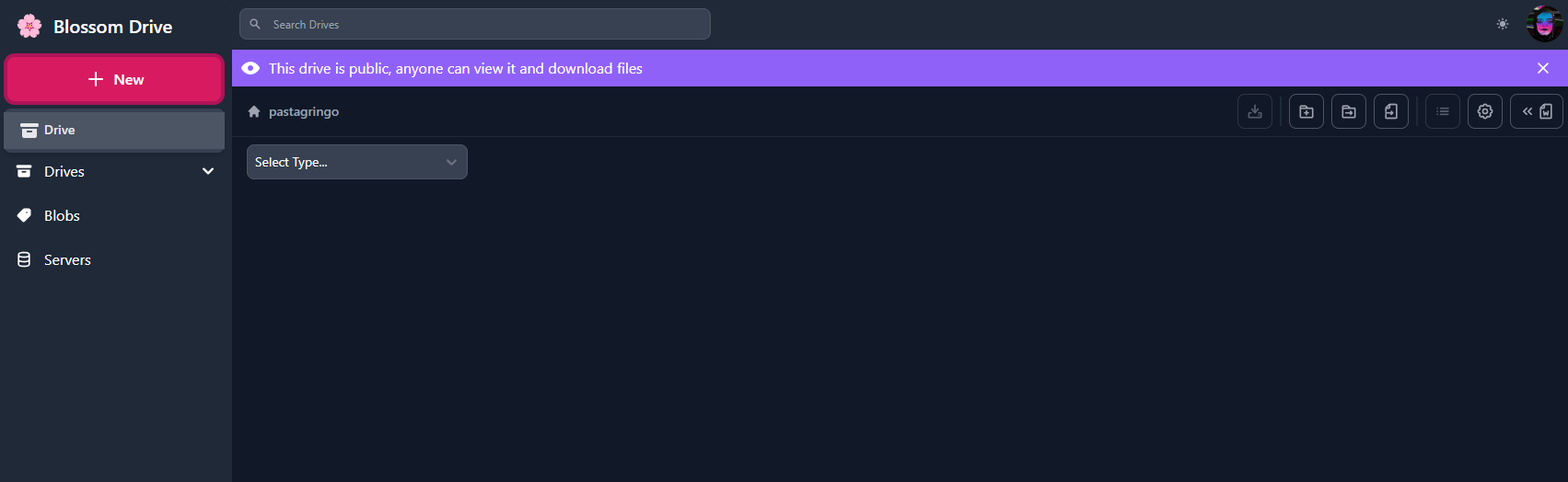

Fill your desired blossom drive name and select the media servers where you want to host your files and click on "Create":

PS: you can enable "Encrypted" option but as hzrd149 said on his Nostr note about Blossom:

"There is also the option to encrypt drives using NIP-49 password encryption. although its not tested at all so don't trust it, verify"

You are now able to upload some files (a picture for instance):

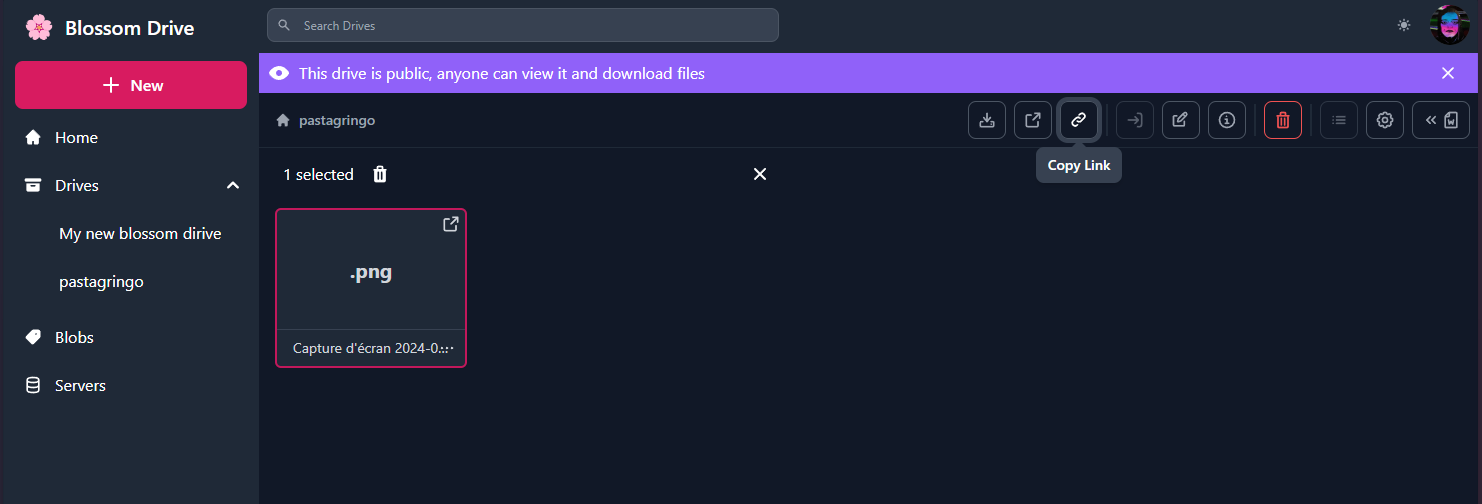

And obtain the HTTP direct link by clicking on the "Copy Link" button:

If you check URL image below, you'll see that it is served by Blossom:

It's done ! ✅

You can now upload your files to Blossom accross several Blossom servers to let them survive the future internet apocalypse.

Blossom has just been released few days ago, many news and features will come!

Don't hesisate to follow hzrd149 on Nostr to follow-up the future updates ⚡🔥

See you soon in another Fractalized story!

PastaGringo 🤖⚡ -

@ f977c464:32fcbe00

2024-01-11 18:47:47

@ f977c464:32fcbe00

2024-01-11 18:47:47Kendisini aynada ilk defa gördüğü o gün, diğerleri gibi olduğunu anlamıştı. Oysaki her insan biricik olmalıydı. Sözgelimi sinirlendiğinde bir kaşı diğerinden birkaç milimetre daha az çatılabilirdi veya sevindiğinde dudağı ona has bir açıyla dalgalanabilirdi. Hatta bunların hiçbiri mümkün değilse, en azından, gözlerinin içinde sadece onun sahip olabileceği bir ışık parlayabilirdi. Çok sıradan, öyle sıradan ki kimsenin fark etmediği o milyonlarca minik şeyden herhangi biri. Ne olursa.

Ama yansımasına bakarken bunların hiçbirini bulamadı ve diğer günlerden hiç de farklı başlamamış o gün, işe gitmek için vagonunun gelmesini beklediği alelade bir metro istasyonunda, içinde kaybolduğu illüzyon dağılmaya başladı.

İlk önce derisi döküldü. Tam olarak dökülmedi aslında, daha çok kıvılcımlara dönüşüp bedeninden fırlamış ve bir an sonra sönerek külleşmiş, havada dağılmıştı. Ardında da, kaybolmadan hemen önce, kısa süre için hayal meyal görülebilen, bir ruhun yok oluşuna ağıt yakan rengârenk peri cesetleri bırakmıştı. Beklenenin aksine, havaya toz kokusu yayıldı.

Dehşete düştü elbette. Dehşete düştüler. Panikle üstlerini yırtan 50 işçi. Her şeyin sebebiyse o vagon.

Saçları da döküldü. Her tel, yere varmadan önce, her santimde ikiye ayrıla ayrıla yok oldu.

Bütün yüzeylerin mat olduğu, hiçbir şeyin yansımadığı, suyun siyah aktığı ve kendine ancak kameralarla bakabildiğin bir dünyada, vagonun içine yerleştirilmiş bir aynadan ilk defa kendini görmek.

Gözlerinin akları buharlaşıp havada dağıldı, mercekleri boşalan yeri doldurmak için eriyip yayıldı. Gerçeği görmemek için yaratılmış, bu yüzden görmeye hazır olmayan ve hiç olmayacak gözler.

Her şeyin o anda sona erdiğini sanabilirdi insan. Derin bir karanlık ve ölüm. Görmenin görmek olduğu o anın bitişi.

Ben geldiğimde ölmüşlerdi.

Yani bozulmuşlardı demek istiyorum.

Belleklerini yeni taşıyıcılara takmam mümkün olmadı. Fiziksel olarak kusursuz durumdaydılar, olmayanları da tamir edebilirdim ama tüm o hengamede kendilerini baştan programlamış ve girdilerini modifiye etmişlerdi.

Belleklerden birini masanın üzerinden ileriye savurdu. Hınca hınç dolu bir barda oturuyorlardı. O ve arkadaşı.

Sırf şu kendisini insan sanan androidler travma geçirip delirmesin diye neler yapıyoruz, insanın aklı almıyor.

Eliyle arkasını işaret etti.

Polislerin söylediğine göre biri vagonun içerisine ayna yerleştirmiş. Bu zavallılar da kapı açılıp bir anda yansımalarını görünce kafayı kırmışlar.

Arkadaşı bunların ona ne hissettirdiğini sordu. Yani o kadar bozuk, insan olduğunu sanan androidi kendilerini parçalamış olarak yerde görmek onu sarsmamış mıydı?

Hayır, sonuçta belirli bir amaç için yaratılmış şeyler onlar. Kaliteli bir bilgisayarım bozulduğunda üzülürüm çünkü parasını ben vermişimdir. Bunlarsa devletin. Bana ne ki?

Arkadaşı anlayışla kafasını sallayıp suyundan bir yudum aldı. Kravatını biraz gevşetti.

Bira istemediğinden emin misin?

İstemediğini söyledi. Sahi, neden deliriyordu bu androidler?

Basit. Onların yapay zekâlarını kodlarken bir şeyler yazıyorlar. Yazılımcılar. Biliyorsun, ben donanımdayım. Bunlar da kendilerini insan sanıyorlar. Tiplerine bak.

Sesini alçalttı.

Arabalarda kaza testi yapılan mankenlere benziyor hepsi. Ağızları burunları bile yok ama şu geldiğimizden beri sakalını düzeltip duruyor mesela. Hayır, hepsi de diğerleri onun sakalı varmış sanıyor, o manyak bir şey.

Arkadaşı bunun delirmeleriyle bağlantısını çözemediğini söyledi. O da normal sesiyle konuşmaya devam etti.

Anlasana, aynayı falan ayırt edemiyor mercekleri. Lönk diye kendilerini görüyorlar. Böyle, olduğu gibi...

Nedenmiş peki? Ne gerek varmış?

Ne bileyim be abicim! Ahiret soruları gibi.

Birasına bakarak dalıp gitti. Sonra masaya abanarak arkadaşına iyice yaklaştı. Bulanık, bir tünelin ucundaki biri gibi, şekli şemalı belirsiz bir adam.

Ben seni nereden tanıyorum ki ulan? Kimsin sen?

Belleği makineden çıkardılar. İki kişiydiler. Soruşturmadan sorumlu memurlar.

─ Baştan mı başlıyoruz, diye sordu belleği elinde tutan ilk memur.

─ Bir kere daha deneyelim ama bu sefer direkt aynayı sorarak başla, diye cevapladı ikinci memur.

─ Bence de. Yeterince düzgün çalışıyor.

Simülasyon yüklenirken, ayakta, biraz arkada duran ve alnını kaşıyan ikinci memur sormaktan kendisini alamadı:

─ Bu androidleri niye böyle bir olay yerine göndermişler ki? Belli tost olacakları. İsraf. Gidip biz baksak aynayı kırıp delilleri mahvetmek zorunda da kalmazlar.

Diğer memur sandalyesinde hafifçe dönecek oldu, o sırada soruyu bilgisayarın hoparlöründen teknisyen cevapladı.

Hangi işimizde bir yamukluk yok ki be abi.

Ama bir son değildi. Üstlerindeki tüm illüzyon dağıldığında ve çıplak, cinsiyetsiz, birbirinin aynı bedenleriyle kaldıklarında sıra dünyaya gelmişti.

Yere düştüler. Elleri -bütün bedeni gibi siyah turmalinden, boğumları çelikten- yere değdiği anda, metronun zemini dağıldı.

Yerdeki karolar öncesinde beyazdı ve çok parlaktı. Tepelerindeki floresan, ışığını olduğu gibi yansıtıyor, tek bir lekenin olmadığı ve tek bir tozun uçmadığı istasyonu aydınlatıyorlardı.

Duvarlara duyurular asılmıştı. Örneğin, yarın akşam kültür merkezinde 20.00’da başlayacak bir tekno blues festivalinin cıvıl cıvıl afişi vardı. Onun yanında daha geniş, sarı puntolu harflerle yazılmış, yatay siyah kesiklerle çerçevesi çizilmiş, bir platformdan düşen çöp adamın bulunduğu “Dikkat! Sarı bandı geçmeyin!” uyarısı. Biraz ilerisinde günlük resmi gazete, onun ilerisinde bir aksiyon filminin ve başka bir romantik komedi filminin afişleri, yapılacakların ve yapılmayacakların söylendiği küçük puntolu çeşitli duyurular... Duvar uzayıp giden bir panoydu. On, on beş metrede bir tekrarlanıyordu.

Tüm istasyonun eni yüz metre kadar. Genişliği on metre civarı.

Önlerinde, açık kapısından o mendebur aynanın gözüktüğü vagon duruyordu. Metro, istasyona sığmayacak kadar uzundu. Bir kılıcın keskinliğiyle uzanıyor ama yer yer vagonların ek yerleriyle bölünüyordu.

Hiçbir vagonda pencere olmadığı için metronun içi, içlerindekiler meçhuldü.

Sonrasında karolar zerrelerine ayrılarak yükseldi. Floresanın ışığında her yeri toza boğdular ve ortalığı gri bir sisin altına gömdüler. Çok kısa bir an. Afişleri dalgalandırmadılar. Dalgalandırmaya vakitleri olmadı. Yerlerinden söküp aldılar en fazla. Işık birkaç kere sönüp yanarak direndi. Son kez söndüğünde bir daha geri gelmedi.

Yine de etraf aydınlıktı. Kırmızı, her yere eşit dağılan soluk bir ışıkla.

Yer tamamen tele dönüşmüştü. Altında çapraz hatlarla desteklenmiş demir bir iskelet. Işık birkaç metreden daha fazla aşağıya uzanamıyordu. Sonsuzluğa giden bir uçurum.

Duvarın yerini aynı teller ve demir iskelet almıştı. Arkasında, birbirine vidalarla tutturulmuş demir plakalardan oluşan, üstünden geçen boruların ek yerlerinden bazen ince buharların çıktığı ve bir süre asılı kaldıktan sonra ağır, yağlı bir havayla sürüklendiği bir koridor.

Diğer tarafta paslanmış, pencerelerindeki camlar kırıldığı için demir plakalarla kapatılmış külüstür bir metro. Kapının karşısındaki aynadan her şey olduğu gibi yansıyordu.

Bir konteynırın içini andıran bir evde, gerçi gayet de birbirine eklenmiş konteynırlardan oluşan bir şehirde “andıran” demek doğru olmayacağı için düpedüz bir konteynırın içinde, masaya mum görüntüsü vermek için koyulmuş, yarı katı yağ atıklarından şekillendirilmiş kütleleri yakmayı deniyordu. Kafasında hayvan kıllarından yapılmış grili siyahlı bir peruk. Aynı kıllardan kendisine gür bir bıyık da yapmıştı.

Üstünde mavi çöp poşetlerinden yapılmış, kravatlı, şık bir takım.

Masanın ayakları yerine oradan buradan çıkmış parçalar konulmuştu: bir arabanın şaft mili, üst üste konulmuş ve üstünde yazı okunamayan tenekeler, boş kitaplar, boş gazete balyaları... Hiçbir şeye yazı yazılmıyordu, gerek yoktu da zaten çünkü merkez veri bankası onları fark ettirmeden, merceklerden giren veriyi sentezleyerek insanlar için dolduruyordu. Yani, androidler için. Farklı şekilde isimlendirmek bir fark yaratacaksa.

Onların mercekleri için değil. Bağlantıları çok önceden kopmuştu.

─ Hayatım, sofra hazır, diye bağırdı yatak odasındaki karısına.

Sofrada tabak yerine düz, bardak yerine bükülmüş, çatal ve bıçak yerine sivriltilmiş plakalar.

Karısı salonun kapısında durakladı ve ancak kulaklarına kadar uzanan, kocasınınkine benzeyen, cansız, ölü hayvanların kıllarından ibaret peruğunu eliyle düzeltti. Dudağını, daha doğrusu dudağının olması gereken yeri koyu kırmızı bir yağ tabakasıyla renklendirmeyi denemişti. Biraz da yanaklarına sürmüştü.

─ Nasıl olmuş, diye sordu.

Sesi tek düzeydi ama hafif bir neşe olduğunu hissettiğinize yemin edebilirdiniz.

Üzerinde, çöp poşetlerinin içini yazısız gazete kağıtlarıyla doldurarak yaptığı iki parça giysi.

─ Çok güzelsin, diyerek kravatını düzeltti kocası.

─ Sen de öylesin, sevgilim.

Yaklaşıp kocasını öptü. Kocası da onu. Sonra nazikçe elinden tutarak, sandalyesini geriye çekerek oturmasına yardım etti.

Sofrada yemek niyetine hiçbir şey yoktu. Gerek de yoktu zaten.

Konteynırın kapısı gürültüyle tekmelenip içeri iki memur girene kadar birbirlerine öyküler anlattılar. O gün neler yaptıklarını. İşten erken çıkıp yemyeşil çimenlerde gezdiklerini, uçurtma uçurduklarını, kadının nasıl o elbiseyi bulmak için saatlerce gezip yorulduğunu, kocasının kısa süreliğine işe dönüp nasıl başarılı bir hamleyle yaşanan krizi çözdüğünü ve kadının yanına döndükten sonra, alışveriş merkezinde oturdukları yeni dondurmacının dondurmalarının ne kadar lezzetli olduğunu, boğazlarının ağrımasından korktuklarını...

Akşam film izleyebilirlerdi, televizyonda -boş ve mat bir plaka- güzel bir film oynayacaktı.

İki memur. Çıplak bedenleriyle birbirinin aynı. Ellerindeki silahları onlara doğrultmuşlardı. Mum ışığında, tertemiz bir örtünün serili olduğu masada, bardaklarında şaraplarla oturan ve henüz sofranın ortasındaki hindiye dokunmamış çifti gördüklerinde bocaladılar.

Hiç de androidlere bilinçli olarak zarar verebilecek gibi gözükmüyorlardı.

─ Sessiz kalma hakkına sahipsiniz, diye bağırdı içeri giren ikinci memur. Söylediğiniz her şey...

Cümlesini bitiremedi. Yatak odasındaki, masanın üzerinden gördüğü o şey, onunla aynı hareketleri yapan android, yoksa, bir aynadaki yansıması mıydı?

Bütün illüzyon o anda dağılmaya başladı.

Not: Bu öykü ilk olarak 2020 yılında Esrarengiz Hikâyeler'de yayımlanmıştır.

-

@ 42342239:1d80db24

2024-04-05 08:21:50

@ 42342239:1d80db24

2024-04-05 08:21:50Trust is a topic increasingly being discussed. Whether it is trust in each other, in the media, or in our authorities, trust is generally seen as a cornerstone of a strong and well-functioning society. The topic was also the theme of the World Economic Forum at its annual meeting in Davos earlier this year. Even among central bank economists, the subject is becoming more prevalent. Last year, Agustín Carstens, head of the BIS ("the central bank of central banks"), said that "[w]ith trust, the public will be more willing to accept actions that involve short-term costs in exchange for long-term benefits" and that "trust is vital for policy effectiveness".

It is therefore interesting when central banks or others pretend as if nothing has happened even when trust has been shattered.

Just as in Sweden and in hundreds of other countries, Canada is planning to introduce a central bank digital currency (CBDC), a new form of money where the central bank or its intermediaries (the banks) will have complete insight into citizens' transactions. Payments or money could also be made programmable. Everything from transferring ownership of a car automatically after a successful payment to the seller, to payments being denied if you have traveled too far from home.

"If Canadians decide a digital dollar is necessary, our obligation is to be ready" says Carolyn Rogers, Deputy Head of Bank of Canada, in a statement shared in an article.

So, what do the citizens want? According to a report from the Bank of Canada, a whopping 88% of those surveyed believe that the central bank should refrain from developing such a currency. About the same number (87%) believe that authorities should guarantee the opportunity to pay with cash instead. And nearly four out of five people (78%) do not believe that the central bank will care about people's opinions. What about trust again?

Canadians' likely remember the Trudeau government's actions against the "Freedom Convoy". The Freedom Convoy consisted of, among others, truck drivers protesting the country's strict pandemic policies, blocking roads in the capital Ottawa at the beginning of 2022. The government invoked never-before-used emergency measures to, among other things, "freeze" people's bank accounts. Suddenly, truck drivers and those with a "connection" to the protests were unable to pay their electricity bills or insurances, for instance. Superficially, this may not sound so serious, but ultimately, it could mean that their families end up in cold houses (due to electricity being cut off) and that they lose the ability to work (driving uninsured vehicles is not taken lightly). And this applied not only to the truck drivers but also to those with a "connection" to the protests. No court rulings were required.

Without the freedom to pay for goods and services, i.e. the freedom to transact, one has no real freedom at all, as several participants in the protests experienced.

In January of this year, a federal judge concluded that the government's actions two years ago were unlawful when it invoked the emergency measures. The use did not display "features of rationality - motivation, transparency, and intelligibility - and was not justified in relation to the relevant factual and legal limitations that had to be considered". He also argued that the use was not in line with the constitution. There are also reports alleging that the government fabricated evidence to go after the demonstrators. The case is set to continue to the highest court. Prime Minister Justin Trudeau and Finance Minister Chrystia Freeland have also recently been sued for the government's actions.

The Trudeau government's use of emergency measures two years ago sadly only provides a glimpse of what the future may hold if CBDCs or similar systems replace the current monetary system with commercial bank money and cash. In Canada, citizens do not want the central bank to proceed with the development of a CBDC. In canada, citizens in Canada want to strengthen the role of cash. In Canada, citizens suspect that the central bank will not listen to them. All while the central bank feverishly continues working on the new system...

"Trust is vital", said Agustín Carstens. But if policy-makers do not pause for a thoughtful reflection even when trust has been utterly shattered as is the case in Canada, are we then not merely dealing with lip service?

And how much trust do these policy-makers then deserve?

-

@ 42342239:1d80db24

2024-03-31 11:23:36

@ 42342239:1d80db24

2024-03-31 11:23:36Biologist Stuart Kauffman introduced the concept of the "adjacent possible" in evolutionary biology in 1996. A bacterium cannot suddenly transform into a flamingo; rather, it must rely on small exploratory changes (of the "adjacent possible") if it is ever to become a beautiful pink flying creature. The same principle applies to human societies, all of which exemplify complex systems. It is indeed challenging to transform shivering cave-dwellers into a space travelers without numerous intermediate steps.

Imagine a water wheel – in itself, perhaps not such a remarkable invention. Yet the water wheel transformed the hard-to-use energy of water into easily exploitable rotational energy. A little of the "adjacent possible" had now been explored: water mills, hammer forges, sawmills, and textile factories soon emerged. People who had previously ground by hand or threshed with the help of oxen could now spend their time on other things. The principles of the water wheel also formed the basis for wind power. Yes, a multitude of possibilities arose – reminiscent of the rapid development during the Cambrian explosion. When the inventors of bygone times constructed humanity's first water wheel, they thus expanded the "adjacent possible". Surely, the experts of old likely sought swift prohibitions. Not long ago, our expert class claimed that the internet was going to be a passing fad, or that it would only have the same modest impact on the economy as the fax machine. For what it's worth, there were even attempts to ban the number zero back in the days.

The pseudonymous creator of Bitcoin, Satoshi Nakamoto, wrote in Bitcoin's whitepaper that "[w]e have proposed a system for electronic transactions without relying on trust." The Bitcoin system enables participants to agree on what is true without needing to trust each other, something that has never been possible before. In light of this, it is worth noting that trust in the federal government in the USA is among the lowest levels measured in almost 70 years. Trust in media is at record lows. Moreover, in countries like the USA, the proportion of people who believe that one can trust "most people" has decreased significantly. "Rebuilding trust" was even the theme of the World Economic Forum at its annual meeting. It is evident, even in the international context, that trust between countries is not at its peak.

Over a fifteen-year period, Bitcoin has enabled electronic transactions without its participants needing to rely on a central authority, or even on each other. This may not sound like a particularly remarkable invention in itself. But like the water wheel, one must acknowledge that new potential seems to have been put in place, potential that is just beginning to be explored. Kauffman's "adjacent possible" has expanded. And despite dogmatic statements to the contrary, no one can know for sure where this might lead.

The discussion of Bitcoin or crypto currencies would benefit from greater humility and openness, not only from employees or CEOs of money laundering banks but also from forecast-failing central bank officials. When for instance Chinese Premier Zhou Enlai in the 1970s was asked about the effects of the French Revolution, he responded that it was "too early to say" - a far wiser answer than the categorical response of the bureaucratic class. Isn't exploring systems not based on trust is exactly what we need at this juncture?

-

@ 8fb140b4:f948000c

2023-11-21 21:37:48

@ 8fb140b4:f948000c

2023-11-21 21:37:48Embarking on the journey of operating your own Lightning node on the Bitcoin Layer 2 network is more than just a tech-savvy endeavor; it's a step into a realm of financial autonomy and cutting-edge innovation. By running a node, you become a vital part of a revolutionary movement that's reshaping how we think about money and digital transactions. This role not only offers a unique perspective on blockchain technology but also places you at the heart of a community dedicated to decentralization and network resilience. Beyond the technicalities, it's about embracing a new era of digital finance, where you contribute directly to the network's security, efficiency, and growth, all while gaining personal satisfaction and potentially lucrative rewards.

In essence, running your own Lightning node is a powerful way to engage with the forefront of blockchain technology, assert financial independence, and contribute to a more decentralized and efficient Bitcoin network. It's an adventure that offers both personal and communal benefits, from gaining in-depth tech knowledge to earning a place in the evolving landscape of cryptocurrency.

Running your own Lightning node for the Bitcoin Layer 2 network can be an empowering and beneficial endeavor. Here are 10 reasons why you might consider taking on this task:

-

Direct Contribution to Decentralization: Operating a node is a direct action towards decentralizing the Bitcoin network, crucial for its security and resistance to control or censorship by any single entity.

-

Financial Autonomy: Owning a node gives you complete control over your financial transactions on the network, free from reliance on third-party services, which can be subject to fees, restrictions, or outages.

-

Advanced Network Participation: As a node operator, you're not just a passive participant but an active player in shaping the network, influencing its efficiency and scalability through direct involvement.

-

Potential for Higher Revenue: With strategic management and optimal channel funding, your node can become a preferred route for transactions, potentially increasing the routing fees you can earn.

-

Cutting-Edge Technological Engagement: Running a node puts you at the forefront of blockchain and bitcoin technology, offering insights into future developments and innovations.

-

Strengthened Network Security: Each new node adds to the robustness of the Bitcoin network, making it more resilient against attacks and failures, thus contributing to the overall security of the ecosystem.

-

Personalized Fee Structures: You have the flexibility to set your own fee policies, which can balance earning potential with the service you provide to the network.

-

Empowerment Through Knowledge: The process of setting up and managing a node provides deep learning opportunities, empowering you with knowledge that can be applied in various areas of blockchain and fintech.

-

Boosting Transaction Capacity: By running a node, you help to increase the overall capacity of the Lightning Network, enabling more transactions to be processed quickly and at lower costs.

-

Community Leadership and Reputation: As an active node operator, you gain recognition within the Bitcoin community, which can lead to collaborative opportunities and a position of thought leadership in the space.

These reasons demonstrate the impactful and transformative nature of running a Lightning node, appealing to those who are deeply invested in the principles of bitcoin and wish to actively shape its future. Jump aboard, and embrace the journey toward full independence. 🐶🐾🫡🚀🚀🚀

-

-

@ 8fb140b4:f948000c

2023-11-18 23:28:31

@ 8fb140b4:f948000c

2023-11-18 23:28:31Chef's notes

Serving these two dishes together will create a delightful centerpiece for your Thanksgiving meal, offering a perfect blend of traditional flavors with a homemade touch.

Details

- ⏲️ Prep time: 30 min

- 🍳 Cook time: 1 - 2 hours

- 🍽️ Servings: 4-6

Ingredients

- 1 whole turkey (about 12-14 lbs), thawed and ready to cook

- 1 cup unsalted butter, softened

- 2 tablespoons fresh thyme, chopped

- 2 tablespoons fresh rosemary, chopped

- 2 tablespoons fresh sage, chopped

- Salt and freshly ground black pepper

- 1 onion, quartered

- 1 lemon, halved

- 2-3 cloves of garlic

- Apple and Sage Stuffing

- 1 loaf of crusty bread, cut into cubes

- 2 apples, cored and chopped

- 1 onion, diced

- 2 stalks celery, diced

- 3 cloves garlic, minced

- 1/4 cup fresh sage, chopped

- 1/2 cup unsalted butter

- 2 cups chicken broth

- Salt and pepper, to taste

Directions

- Preheat the Oven: Set your oven to 325°F (165°C).

- Prepare the Herb Butter: Mix the softened butter with the chopped thyme, rosemary, and sage. Season with salt and pepper.

- Prepare the Turkey: Remove any giblets from the turkey and pat it dry. Loosen the skin and spread a generous amount of herb butter under and over the skin.

- Add Aromatics: Inside the turkey cavity, place the quartered onion, lemon halves, and garlic cloves.

- Roast: Place the turkey in a roasting pan. Tent with aluminum foil and roast. A general guideline is about 15 minutes per pound, or until the internal temperature reaches 165°F (74°C) at the thickest part of the thigh.

- Rest and Serve: Let the turkey rest for at least 20 minutes before carving.

- Next: Apple and Sage Stuffing

- Dry the Bread: Spread the bread cubes on a baking sheet and let them dry overnight, or toast them in the oven.

- Cook the Vegetables: In a large skillet, melt the butter and cook the onion, celery, and garlic until soft.

- Combine Ingredients: Add the apples, sage, and bread cubes to the skillet. Stir in the chicken broth until the mixture is moist. Season with salt and pepper.

- Bake: Transfer the stuffing to a baking dish and bake at 350°F (175°C) for about 30-40 minutes, until golden brown on top.

-

@ b12b632c:d9e1ff79

2024-03-23 16:42:49

@ b12b632c:d9e1ff79

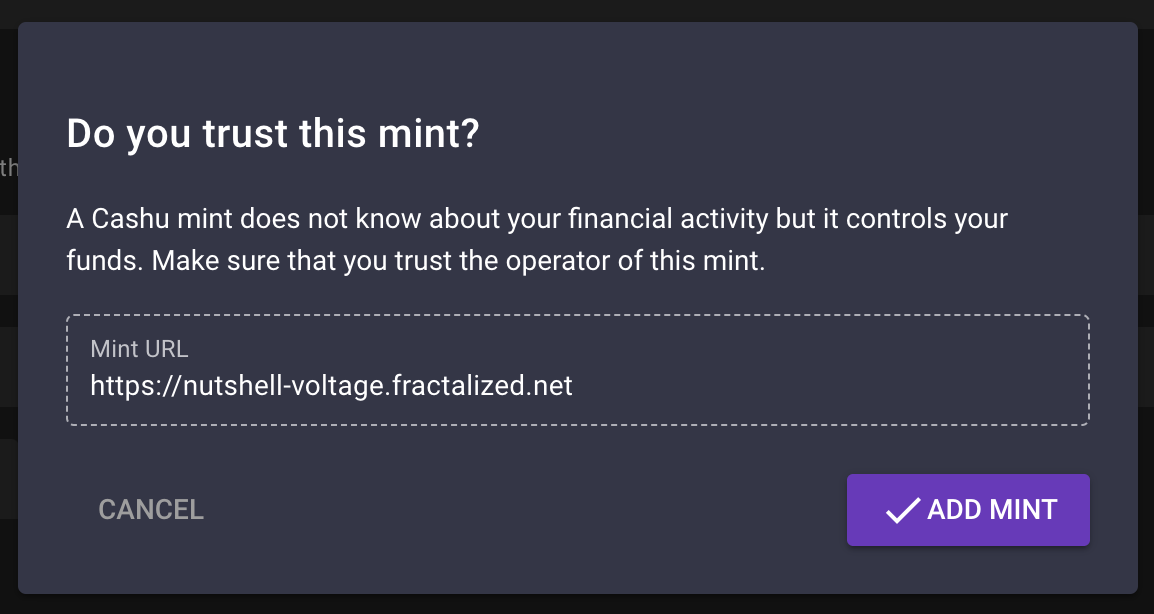

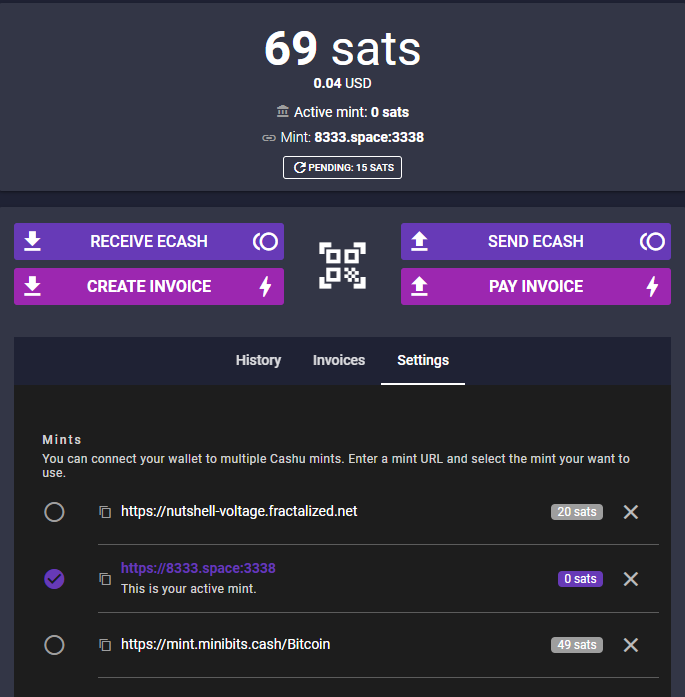

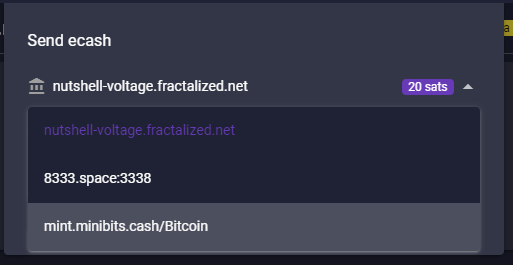

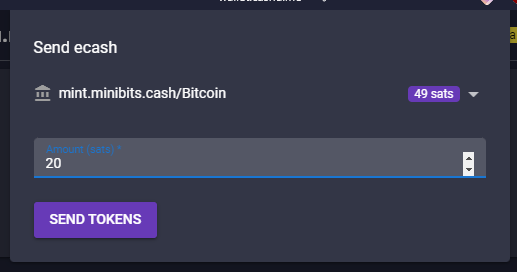

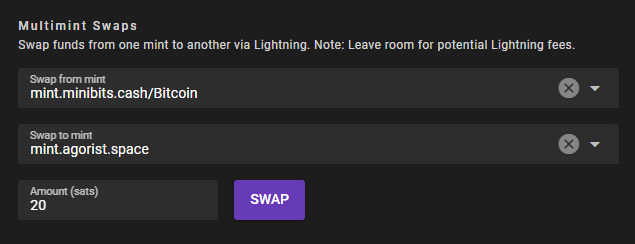

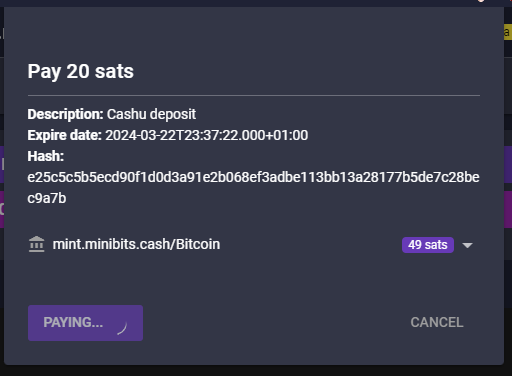

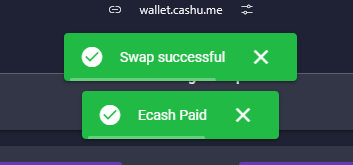

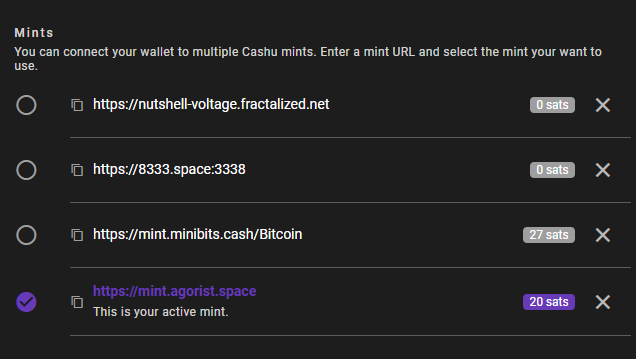

2024-03-23 16:42:49CASHU AND ECASH ARE EXPERIMENTAL PROJECTS. BY THE OWN NATURE OF CASHU ECASH, IT'S REALLY EASY TO LOSE YOUR SATS BY LACKING OF KNOWLEDGE OF THE SYSTEM MECHANICS. PLEASE, FOR YOUR OWN GOOD, ALWAYS USE FEW SATS AMOUNT IN THE BEGINNING TO FULLY UNDERSTAND HOW WORKS THE SYSTEM. ECASH IS BASED ON A TRUST RELATIONSHIP BETWEEN YOU AND THE MINT OWNER, PLEASE DONT TRUST ECASH MINT YOU DONT KNOW. IT IS POSSIBLE TO GENERATE UNLIMITED ECASH TOKENS FROM A MINT, THE ONLY WAY TO VALIDATE THE REAL EXISTENCE OF THE ECASH TOKENS IS TO DO A MULTIMINT SWAP (BETWEEN MINTS). PLEASE, ALWAYS DO A MULTISWAP MINT IF YOU RECEIVE SOME ECASH FROM SOMEONE YOU DON'T KNOW/TRUST. NEVER TRUST A MINT YOU DONT KNOW!

IF YOU WANT TO RUN AN ECASH MINT WITH A BTC LIGHTNING NODE IN BACK-END, PLEASE DEDICATE THIS LN NODE TO YOUR ECASH MINT. A BAD MANAGEMENT OF YOUR LN NODE COULD LET PEOPLE TO LOOSE THEIR SATS BECAUSE THEY HAD ONCE TRUSTED YOUR MINT AND YOU DID NOT MANAGE THE THINGS RIGHT.

What's ecash/Cashu ?

I recently listened a passionnating interview from calle 👁️⚡👁 invited by the podcast channel What Bitcoin Did about the new (not so much now) Cashu protocol.

Cashu is a a free and open-source Chaumian ecash project built for Bitcoin protocol, recently created in order to let users send/receive Ecash over BTC Lightning network. The main Cashu ecash goal is to finally give you a "by-design" privacy mechanism to allow us to do anonymous Bitcoin transactions.

Ecash for your privacy.\ A Cashu mint does not know who you are, what your balance is, or who you're transacting with. Users of a mint can exchange ecash privately without anyone being able to know who the involved parties are. Bitcoin payments are executed without anyone able to censor specific users.

Here are some useful links to begin with Cashu ecash :

Github repo: https://github.com/cashubtc

Documentation: https://docs.cashu.space

To support the project: https://docs.cashu.space/contribute

A Proof of Liabilities Scheme for Ecash Mints: https://gist.github.com/callebtc/ed5228d1d8cbaade0104db5d1cf63939

Like NOSTR and its own NIPS, here is the list of the Cashu ecash NUTs (Notation, Usage, and Terminology): https://github.com/cashubtc/nuts?tab=readme-ov-file

I won't explain you at lot more on what's Casu ecash, you need to figured out by yourself. It's really important in order to avoid any mistakes you could do with your sats (that you'll probably regret).

If you don't have so much time, you can check their FAQ right here: https://docs.cashu.space/faq

I strongly advise you to listen Calle's interviews @whatbbitcoindid to "fully" understand the concept and the Cashu ecash mechanism before using it:

Scaling Bitcoin Privacy with Calle

In the meantime I'm writing this article, Calle did another really interesting interview with ODELL from CitadelDispatch:

CD120: BITCOIN POWERED CHAUMIAN ECASH WITH CALLE

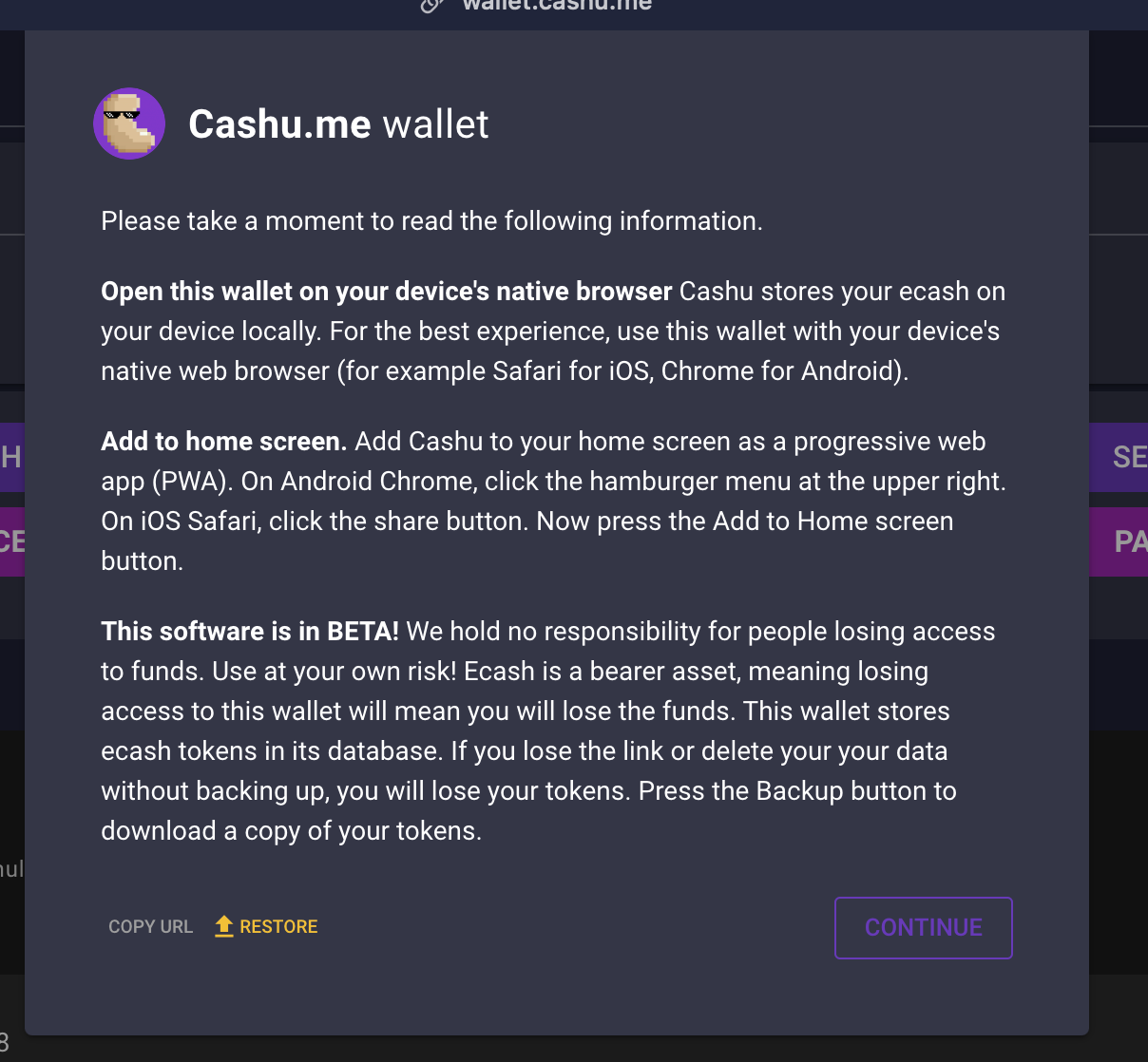

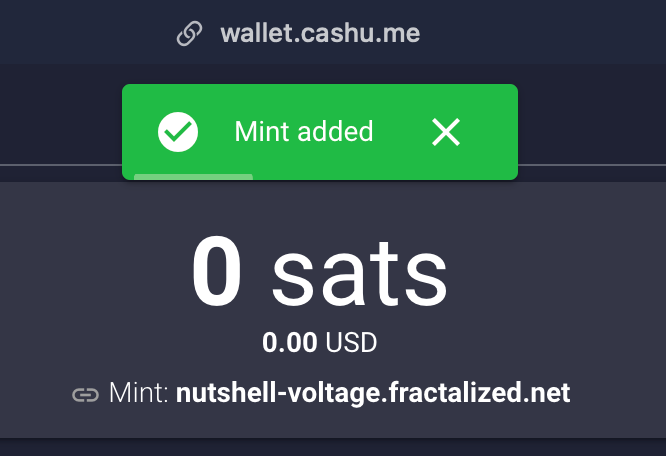

Which ecash apps?

There are several ways to send/receive some Ecash tokens, you can do it by using mobile applications like eNuts, Minibits or by using Web applications like Cashu.me, Nustrache or even npub.cash. On these topics, BTC Session Youtube channel offers high quality contents and very easy to understand key knowledge on how to use these applications :

Minibits BTC Wallet: Near Perfect Privacy and Low Fees - FULL TUTORIAL

Cashu Tutorial - Chaumian Ecash On Bitcoin

Unlock Perfect Privacy with eNuts: Instant, Free Bitcoin Transactions Tutorial

Cashu ecash is a very large and complex topic for beginners. I'm still learning everyday how it works and the project moves really fast due to its commited developpers community. Don't forget to follow their updates on Nostr to know more about the project but also to have a better undertanding of the Cashu ecash technical and political implications.

There is also a Matrix chat available if you want to participate to the project:

https://matrix.to/#/#cashu:matrix.org

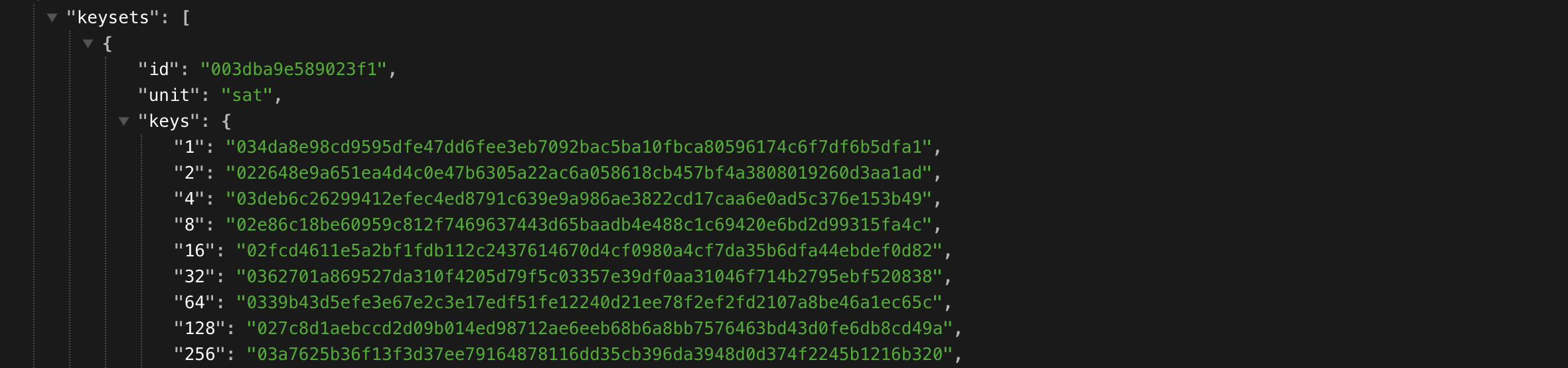

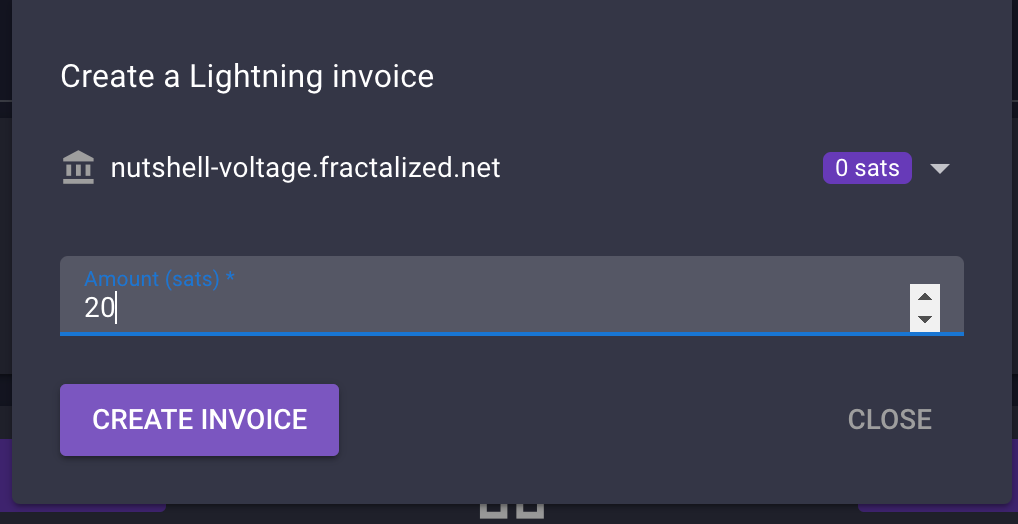

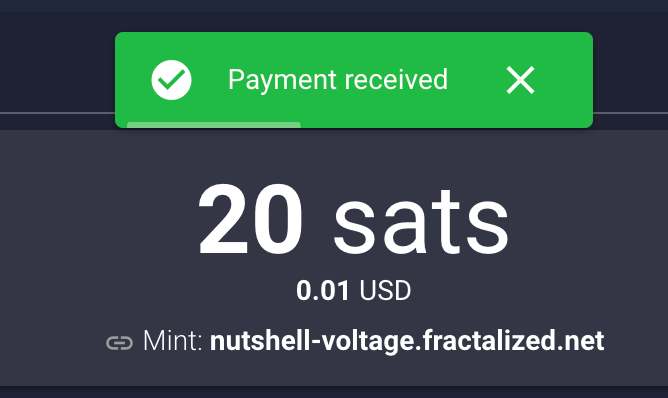

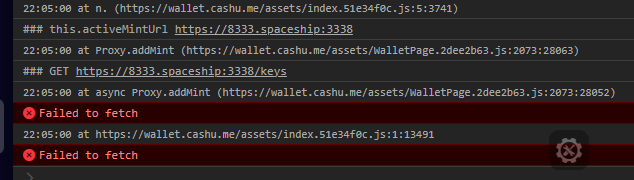

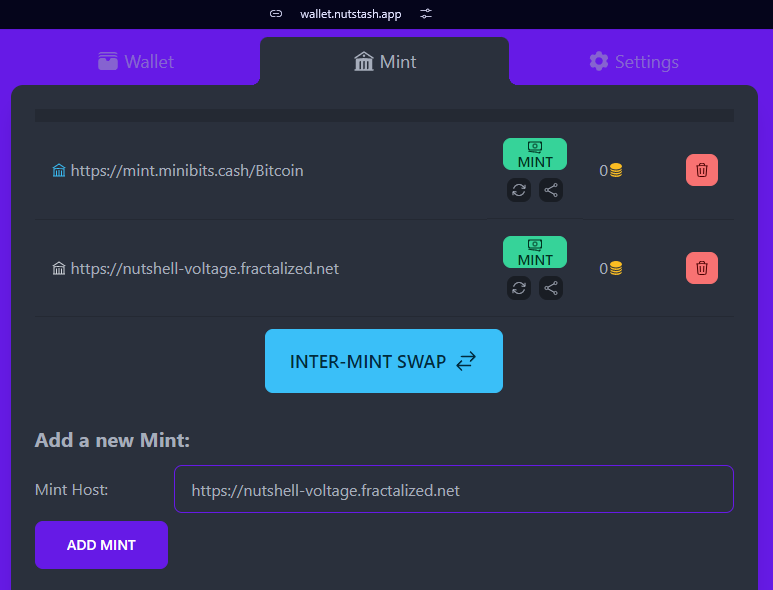

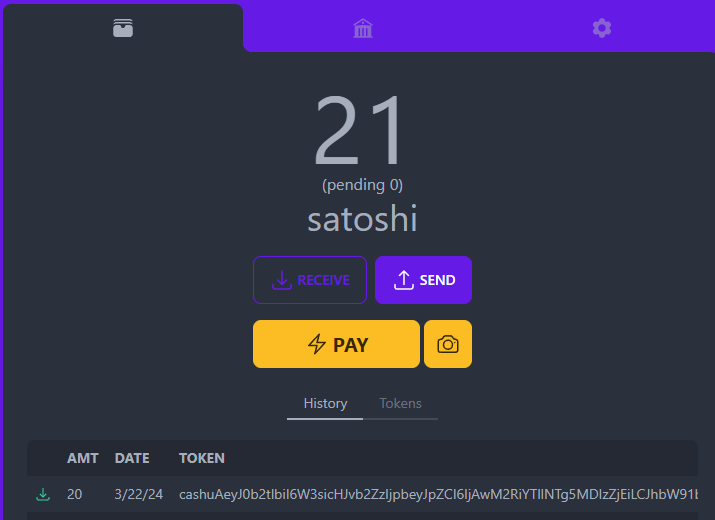

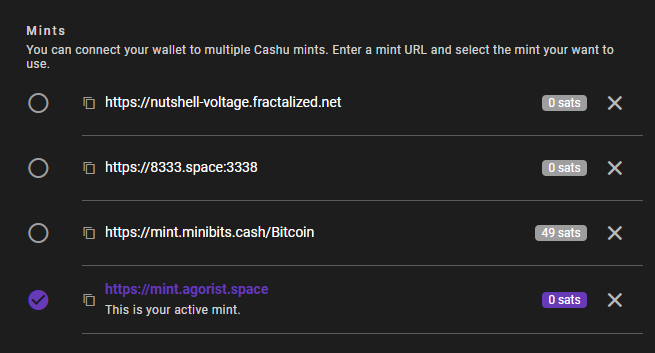

How to self-host your ecash mint with Nutshell

Cashu Nutshell is a Chaumian Ecash wallet and mint for Bitcoin Lightning. Cashu Nutshell is the reference implementation in Python.

Github repo:

https://github.com/cashubtc/nutshell

Today, Nutshell is the most advanced mint in town to self-host your ecash mint. The installation is relatively straightforward with Docker because a docker-compose file is available from the github repo.

Nutshell is not the only cashu ecash mint server available, you can check other server mint here :

https://docs.cashu.space/mints

The only "external" requirement is to have a funding source. One back-end funding source where ecash will mint your ecash from your Sats and initiate BTC Lightning Netwok transactions between ecash mints and BTC Ligtning nodes during a multimint swap. Current backend sources supported are: FakeWallet*, LndRestWallet, CoreLightningRestWallet, BlinkWallet, LNbitsWallet, StrikeUSDWallet.