-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28O Planetinha

Fumaça verde me entrando pelas narinas e um coro desafinado fazia uma base melódica.

nos confins da galáxia havia um planetinha isolado. Era um planeta feliz.

O homem vestido de mago começava a aparecer por detrás da fumaça verde.

O planetinha recebeu três presentes, mas o seu habitante, o homem, estava num estado de confusão tão grande que ameaçava estragá-los. Os homens já havia escravizado o primeiro presente, a vida; lutavam contra o segundo presente, a morte; e havia alguns que achavam que deviam destruir totalmente o terceiro, o amor, e com isto levar a desordem total ao pobre planetinha perdido, que se chamava Terra.

O coro desafinado entrou antes do "Terra" cantando várias vezes, como se imitasse um eco, "terra-terra-terraaa". Depois de uma pausa dramática, o homem vestido de mago voltou a falar.

Terra, nossa nave mãe.

Neste momento eu me afastei. À frente do palco onde o mago e seu coral faziam apelos à multidão havia vários estandes cobertos com a tradicional armação de quatro pernas e lona branca. Em todos os cantos da praça havia gente, gente dos mais variados tipos. Visitantes curiosos que se aproximavam atraídos pela fumaça verde e as barraquinhas, gente que aproveitava o movimento para vender doces sem pagar imposto, casais que se abraçavam de pé para espantar o frio, os tradicionais corredores que faziam seu cooper, gente cheia de barba e vestida para imitar os hippies dos anos 60 e vender colares estendidos no chão, transeuntes novos e velhos, vestidos como baladeiros ou como ativistas do ônibus grátis, grupos de ciclistas entusiastas.

O mago fazia agora apelos para que nós, os homens, habitantes do isolado planetinha, passássemos a ver o planetinha, nossa nave mãe, como um todo, e adquiríssemos a consciência de que ele estava entrando em maus lençóis. A idéia, reforçada pela logomarca do evento, era que parássemos de olhar só para a nossa vida e pensássemos no planeta.

A logomarca do evento, um desenho estilizado do planeta Terra, nada tinha a ver com seu nome: "Festival Andando de Bem com a Vida", mas havia sido ali colocada estrategicamente pelos organizadores, de quem parecia justamente sair a mensagem dita pelo mago.

Aquela multidão de pessoas que, assim como eu, tinham suas próprias preocupações, não podiam ver o quadro caótico que formavam, cada uma com seus atos isolados, ali naquela praça isolada, naquele planeta isolado. Quando o hippie barbudo, quase um Osho, assustava um casal para tentar vender-lhes um colar, a quantidade de caos que isto acrescentava à cena era gigantesca. Por um segundo, pude ver, como se estivesse de longe e acima, com toda a pretensão que este estado imaginativo carrega, a cena completa do caos.

Uma nave-mãe, dessas de ficção científica, habitada por milhões de pessoas, seguia no espaço sem rumo, e sem saber que logo à frente um longo precipício espacial a esperava, para a desgraça completa sua e de seus habitantes.

Acostumados àquela nave tanto quanto outrora estiveram acostumados à sua terra natal, os homens viviam as próprias vidas sem nem se lembrar que estavam vagando pelo espaço. Ninguém sabia quem estava conduzindo a nave, e ninguém se importava.

No final do filme descobre-se que era a soma completa do caos que cada habitante produzia, com seus gestos egoístas e incapazes de levar em conta a totalidade, é que determinava a direção da nave-mãe. O efeito, no entanto, não era imediato, como nunca é. Havia gente de verdade encarregada de conduzir a nave, mas era uma gente bêbada, mau-caráter, que vivia brigando pelo controle da nave e o poder que isto lhes dava. Poder, status, dinheiro!

Essa gente bêbada era atraída até ali pela corrupção das instituições e da moral comum que, no fundo no fundo, era causada pelo egoísmo da população, através de um complexo -- mas que no filme aparece simplificado pela ação individual de um magnata do divertimento público -- processo social.

O homem vestido de mago era mais um agente causador de caos, com sua cena cheia de fumaça e sua roupa estroboscópica, ele achava que estava fazendo o bem ao alertar sua platéia, todos as sextas-feiras, de que havia algo que precisava ser feito, que cada um que estava ali ouvindo era responsável pelo planeta. A sua incapacidade, porém, de explicar o que precisava ser feito só aumentava a angústia geral; a culpa que ele jogava sobre seu público, e que era prontamente aceita e passada em frente, aos familiares e amigos de cada um, atormentava-os diariamente e os impedia de ter uma vida decente no trabalho e em casa. As famílias, estressadas, estavam constantemente brigando e os motivos mais insignificantes eram responsáveis pelas mais horrendas conseqüências.

O mago, que após o show tirava o chapéu entortado e ia tomar cerveja num boteco, era responsável por uma parcela considerável do caos que levava a nave na direção do seu desgraçado fim. No filme, porém, um dos transeuntes que de passagem ouviu um pedaço do discurso do mago despertou em si mesmo uma consiência transformadora e, com poderes sobre-humanos que lhe foram então concedidos por uma ordem iniciática do bem ou não, usando só os seus poderes humanos mesmo, o transeunte -- na primeira versão do filme um homem, na segunda uma mulher -- consegue consertar as instituições e retirar os bêbados da condução da máquina. A questão da moral pública é ignorada para abreviar a trama, já com duas horas e quarenta de duração, mas subentende-se que ela também fora resolvida.

No planeta Terra real, que não está indo em direção alguma, preso pela gravidade ao Sol, e onde as pessoas vivem a própria vida porque lhes é impossível viver a dos outros, não têm uma consciência global de nada porque só é possível mesmo ter a consciência delas mesmas, e onde a maioria, de uma maneira ou de outra, está tentando como pode, fazer as coisas direito, o filme é exibido.

Para a maioria dos espectadores, é um filme que evoca reflexões, um filme forte. Por um segundo elas têm o mesmo vislumbre do caos generalizado que eu tive ali naquela praça. Para uma pequena parcela dos espectadores -- entre eles alguns dos que estavam na platéia do mago, o próprio mago, o seguidor do Osho, o casal de duas mulheres e o vendedor de brigadeiros, mas aos quais se somam também críticos de televisão e jornal e gente que fala pelos cotovelos na internet -- o filme é um horror, o filme é uma vulgarização de um problema real e sério, o filme apela para a figura do herói salvador e passa uma mensagem totalmente errada, de que a maioria da população pode continuar vivendo as suas própria vidinhas miseráveis enquanto espera por um herói que vem do Olimpo e os salva da mixórdia que eles mesmos causaram, é um filme que presta um enorme desserviço à causa.

No dia seguinte ao lançamento, num bar meio caro ali perto da praça, numa mesa com oito pessoas, entre elas seis do primeiro grupo e oito do segundo, discute-se se o filme levará ou não o Oscar. Eu estou em casa dormindo e não escuto nada.

-

@ f977c464:32fcbe00

2024-01-11 18:47:47

@ f977c464:32fcbe00

2024-01-11 18:47:47Kendisini aynada ilk defa gördüğü o gün, diğerleri gibi olduğunu anlamıştı. Oysaki her insan biricik olmalıydı. Sözgelimi sinirlendiğinde bir kaşı diğerinden birkaç milimetre daha az çatılabilirdi veya sevindiğinde dudağı ona has bir açıyla dalgalanabilirdi. Hatta bunların hiçbiri mümkün değilse, en azından, gözlerinin içinde sadece onun sahip olabileceği bir ışık parlayabilirdi. Çok sıradan, öyle sıradan ki kimsenin fark etmediği o milyonlarca minik şeyden herhangi biri. Ne olursa.

Ama yansımasına bakarken bunların hiçbirini bulamadı ve diğer günlerden hiç de farklı başlamamış o gün, işe gitmek için vagonunun gelmesini beklediği alelade bir metro istasyonunda, içinde kaybolduğu illüzyon dağılmaya başladı.

İlk önce derisi döküldü. Tam olarak dökülmedi aslında, daha çok kıvılcımlara dönüşüp bedeninden fırlamış ve bir an sonra sönerek külleşmiş, havada dağılmıştı. Ardında da, kaybolmadan hemen önce, kısa süre için hayal meyal görülebilen, bir ruhun yok oluşuna ağıt yakan rengârenk peri cesetleri bırakmıştı. Beklenenin aksine, havaya toz kokusu yayıldı.

Dehşete düştü elbette. Dehşete düştüler. Panikle üstlerini yırtan 50 işçi. Her şeyin sebebiyse o vagon.

Saçları da döküldü. Her tel, yere varmadan önce, her santimde ikiye ayrıla ayrıla yok oldu.

Bütün yüzeylerin mat olduğu, hiçbir şeyin yansımadığı, suyun siyah aktığı ve kendine ancak kameralarla bakabildiğin bir dünyada, vagonun içine yerleştirilmiş bir aynadan ilk defa kendini görmek.

Gözlerinin akları buharlaşıp havada dağıldı, mercekleri boşalan yeri doldurmak için eriyip yayıldı. Gerçeği görmemek için yaratılmış, bu yüzden görmeye hazır olmayan ve hiç olmayacak gözler.

Her şeyin o anda sona erdiğini sanabilirdi insan. Derin bir karanlık ve ölüm. Görmenin görmek olduğu o anın bitişi.

Ben geldiğimde ölmüşlerdi.

Yani bozulmuşlardı demek istiyorum.

Belleklerini yeni taşıyıcılara takmam mümkün olmadı. Fiziksel olarak kusursuz durumdaydılar, olmayanları da tamir edebilirdim ama tüm o hengamede kendilerini baştan programlamış ve girdilerini modifiye etmişlerdi.

Belleklerden birini masanın üzerinden ileriye savurdu. Hınca hınç dolu bir barda oturuyorlardı. O ve arkadaşı.

Sırf şu kendisini insan sanan androidler travma geçirip delirmesin diye neler yapıyoruz, insanın aklı almıyor.

Eliyle arkasını işaret etti.

Polislerin söylediğine göre biri vagonun içerisine ayna yerleştirmiş. Bu zavallılar da kapı açılıp bir anda yansımalarını görünce kafayı kırmışlar.

Arkadaşı bunların ona ne hissettirdiğini sordu. Yani o kadar bozuk, insan olduğunu sanan androidi kendilerini parçalamış olarak yerde görmek onu sarsmamış mıydı?

Hayır, sonuçta belirli bir amaç için yaratılmış şeyler onlar. Kaliteli bir bilgisayarım bozulduğunda üzülürüm çünkü parasını ben vermişimdir. Bunlarsa devletin. Bana ne ki?

Arkadaşı anlayışla kafasını sallayıp suyundan bir yudum aldı. Kravatını biraz gevşetti.

Bira istemediğinden emin misin?

İstemediğini söyledi. Sahi, neden deliriyordu bu androidler?

Basit. Onların yapay zekâlarını kodlarken bir şeyler yazıyorlar. Yazılımcılar. Biliyorsun, ben donanımdayım. Bunlar da kendilerini insan sanıyorlar. Tiplerine bak.

Sesini alçalttı.

Arabalarda kaza testi yapılan mankenlere benziyor hepsi. Ağızları burunları bile yok ama şu geldiğimizden beri sakalını düzeltip duruyor mesela. Hayır, hepsi de diğerleri onun sakalı varmış sanıyor, o manyak bir şey.

Arkadaşı bunun delirmeleriyle bağlantısını çözemediğini söyledi. O da normal sesiyle konuşmaya devam etti.

Anlasana, aynayı falan ayırt edemiyor mercekleri. Lönk diye kendilerini görüyorlar. Böyle, olduğu gibi...

Nedenmiş peki? Ne gerek varmış?

Ne bileyim be abicim! Ahiret soruları gibi.

Birasına bakarak dalıp gitti. Sonra masaya abanarak arkadaşına iyice yaklaştı. Bulanık, bir tünelin ucundaki biri gibi, şekli şemalı belirsiz bir adam.

Ben seni nereden tanıyorum ki ulan? Kimsin sen?

Belleği makineden çıkardılar. İki kişiydiler. Soruşturmadan sorumlu memurlar.

─ Baştan mı başlıyoruz, diye sordu belleği elinde tutan ilk memur.

─ Bir kere daha deneyelim ama bu sefer direkt aynayı sorarak başla, diye cevapladı ikinci memur.

─ Bence de. Yeterince düzgün çalışıyor.

Simülasyon yüklenirken, ayakta, biraz arkada duran ve alnını kaşıyan ikinci memur sormaktan kendisini alamadı:

─ Bu androidleri niye böyle bir olay yerine göndermişler ki? Belli tost olacakları. İsraf. Gidip biz baksak aynayı kırıp delilleri mahvetmek zorunda da kalmazlar.

Diğer memur sandalyesinde hafifçe dönecek oldu, o sırada soruyu bilgisayarın hoparlöründen teknisyen cevapladı.

Hangi işimizde bir yamukluk yok ki be abi.

Ama bir son değildi. Üstlerindeki tüm illüzyon dağıldığında ve çıplak, cinsiyetsiz, birbirinin aynı bedenleriyle kaldıklarında sıra dünyaya gelmişti.

Yere düştüler. Elleri -bütün bedeni gibi siyah turmalinden, boğumları çelikten- yere değdiği anda, metronun zemini dağıldı.

Yerdeki karolar öncesinde beyazdı ve çok parlaktı. Tepelerindeki floresan, ışığını olduğu gibi yansıtıyor, tek bir lekenin olmadığı ve tek bir tozun uçmadığı istasyonu aydınlatıyorlardı.

Duvarlara duyurular asılmıştı. Örneğin, yarın akşam kültür merkezinde 20.00’da başlayacak bir tekno blues festivalinin cıvıl cıvıl afişi vardı. Onun yanında daha geniş, sarı puntolu harflerle yazılmış, yatay siyah kesiklerle çerçevesi çizilmiş, bir platformdan düşen çöp adamın bulunduğu “Dikkat! Sarı bandı geçmeyin!” uyarısı. Biraz ilerisinde günlük resmi gazete, onun ilerisinde bir aksiyon filminin ve başka bir romantik komedi filminin afişleri, yapılacakların ve yapılmayacakların söylendiği küçük puntolu çeşitli duyurular... Duvar uzayıp giden bir panoydu. On, on beş metrede bir tekrarlanıyordu.

Tüm istasyonun eni yüz metre kadar. Genişliği on metre civarı.

Önlerinde, açık kapısından o mendebur aynanın gözüktüğü vagon duruyordu. Metro, istasyona sığmayacak kadar uzundu. Bir kılıcın keskinliğiyle uzanıyor ama yer yer vagonların ek yerleriyle bölünüyordu.

Hiçbir vagonda pencere olmadığı için metronun içi, içlerindekiler meçhuldü.

Sonrasında karolar zerrelerine ayrılarak yükseldi. Floresanın ışığında her yeri toza boğdular ve ortalığı gri bir sisin altına gömdüler. Çok kısa bir an. Afişleri dalgalandırmadılar. Dalgalandırmaya vakitleri olmadı. Yerlerinden söküp aldılar en fazla. Işık birkaç kere sönüp yanarak direndi. Son kez söndüğünde bir daha geri gelmedi.

Yine de etraf aydınlıktı. Kırmızı, her yere eşit dağılan soluk bir ışıkla.

Yer tamamen tele dönüşmüştü. Altında çapraz hatlarla desteklenmiş demir bir iskelet. Işık birkaç metreden daha fazla aşağıya uzanamıyordu. Sonsuzluğa giden bir uçurum.

Duvarın yerini aynı teller ve demir iskelet almıştı. Arkasında, birbirine vidalarla tutturulmuş demir plakalardan oluşan, üstünden geçen boruların ek yerlerinden bazen ince buharların çıktığı ve bir süre asılı kaldıktan sonra ağır, yağlı bir havayla sürüklendiği bir koridor.

Diğer tarafta paslanmış, pencerelerindeki camlar kırıldığı için demir plakalarla kapatılmış külüstür bir metro. Kapının karşısındaki aynadan her şey olduğu gibi yansıyordu.

Bir konteynırın içini andıran bir evde, gerçi gayet de birbirine eklenmiş konteynırlardan oluşan bir şehirde “andıran” demek doğru olmayacağı için düpedüz bir konteynırın içinde, masaya mum görüntüsü vermek için koyulmuş, yarı katı yağ atıklarından şekillendirilmiş kütleleri yakmayı deniyordu. Kafasında hayvan kıllarından yapılmış grili siyahlı bir peruk. Aynı kıllardan kendisine gür bir bıyık da yapmıştı.

Üstünde mavi çöp poşetlerinden yapılmış, kravatlı, şık bir takım.

Masanın ayakları yerine oradan buradan çıkmış parçalar konulmuştu: bir arabanın şaft mili, üst üste konulmuş ve üstünde yazı okunamayan tenekeler, boş kitaplar, boş gazete balyaları... Hiçbir şeye yazı yazılmıyordu, gerek yoktu da zaten çünkü merkez veri bankası onları fark ettirmeden, merceklerden giren veriyi sentezleyerek insanlar için dolduruyordu. Yani, androidler için. Farklı şekilde isimlendirmek bir fark yaratacaksa.

Onların mercekleri için değil. Bağlantıları çok önceden kopmuştu.

─ Hayatım, sofra hazır, diye bağırdı yatak odasındaki karısına.

Sofrada tabak yerine düz, bardak yerine bükülmüş, çatal ve bıçak yerine sivriltilmiş plakalar.

Karısı salonun kapısında durakladı ve ancak kulaklarına kadar uzanan, kocasınınkine benzeyen, cansız, ölü hayvanların kıllarından ibaret peruğunu eliyle düzeltti. Dudağını, daha doğrusu dudağının olması gereken yeri koyu kırmızı bir yağ tabakasıyla renklendirmeyi denemişti. Biraz da yanaklarına sürmüştü.

─ Nasıl olmuş, diye sordu.

Sesi tek düzeydi ama hafif bir neşe olduğunu hissettiğinize yemin edebilirdiniz.

Üzerinde, çöp poşetlerinin içini yazısız gazete kağıtlarıyla doldurarak yaptığı iki parça giysi.

─ Çok güzelsin, diyerek kravatını düzeltti kocası.

─ Sen de öylesin, sevgilim.

Yaklaşıp kocasını öptü. Kocası da onu. Sonra nazikçe elinden tutarak, sandalyesini geriye çekerek oturmasına yardım etti.

Sofrada yemek niyetine hiçbir şey yoktu. Gerek de yoktu zaten.

Konteynırın kapısı gürültüyle tekmelenip içeri iki memur girene kadar birbirlerine öyküler anlattılar. O gün neler yaptıklarını. İşten erken çıkıp yemyeşil çimenlerde gezdiklerini, uçurtma uçurduklarını, kadının nasıl o elbiseyi bulmak için saatlerce gezip yorulduğunu, kocasının kısa süreliğine işe dönüp nasıl başarılı bir hamleyle yaşanan krizi çözdüğünü ve kadının yanına döndükten sonra, alışveriş merkezinde oturdukları yeni dondurmacının dondurmalarının ne kadar lezzetli olduğunu, boğazlarının ağrımasından korktuklarını...

Akşam film izleyebilirlerdi, televizyonda -boş ve mat bir plaka- güzel bir film oynayacaktı.

İki memur. Çıplak bedenleriyle birbirinin aynı. Ellerindeki silahları onlara doğrultmuşlardı. Mum ışığında, tertemiz bir örtünün serili olduğu masada, bardaklarında şaraplarla oturan ve henüz sofranın ortasındaki hindiye dokunmamış çifti gördüklerinde bocaladılar.

Hiç de androidlere bilinçli olarak zarar verebilecek gibi gözükmüyorlardı.

─ Sessiz kalma hakkına sahipsiniz, diye bağırdı içeri giren ikinci memur. Söylediğiniz her şey...

Cümlesini bitiremedi. Yatak odasındaki, masanın üzerinden gördüğü o şey, onunla aynı hareketleri yapan android, yoksa, bir aynadaki yansıması mıydı?

Bütün illüzyon o anda dağılmaya başladı.

Not: Bu öykü ilk olarak 2020 yılında Esrarengiz Hikâyeler'de yayımlanmıştır.

-

@ 8fb140b4:f948000c

2023-11-21 21:37:48

@ 8fb140b4:f948000c

2023-11-21 21:37:48Embarking on the journey of operating your own Lightning node on the Bitcoin Layer 2 network is more than just a tech-savvy endeavor; it's a step into a realm of financial autonomy and cutting-edge innovation. By running a node, you become a vital part of a revolutionary movement that's reshaping how we think about money and digital transactions. This role not only offers a unique perspective on blockchain technology but also places you at the heart of a community dedicated to decentralization and network resilience. Beyond the technicalities, it's about embracing a new era of digital finance, where you contribute directly to the network's security, efficiency, and growth, all while gaining personal satisfaction and potentially lucrative rewards.

In essence, running your own Lightning node is a powerful way to engage with the forefront of blockchain technology, assert financial independence, and contribute to a more decentralized and efficient Bitcoin network. It's an adventure that offers both personal and communal benefits, from gaining in-depth tech knowledge to earning a place in the evolving landscape of cryptocurrency.

Running your own Lightning node for the Bitcoin Layer 2 network can be an empowering and beneficial endeavor. Here are 10 reasons why you might consider taking on this task:

-

Direct Contribution to Decentralization: Operating a node is a direct action towards decentralizing the Bitcoin network, crucial for its security and resistance to control or censorship by any single entity.

-

Financial Autonomy: Owning a node gives you complete control over your financial transactions on the network, free from reliance on third-party services, which can be subject to fees, restrictions, or outages.

-

Advanced Network Participation: As a node operator, you're not just a passive participant but an active player in shaping the network, influencing its efficiency and scalability through direct involvement.

-

Potential for Higher Revenue: With strategic management and optimal channel funding, your node can become a preferred route for transactions, potentially increasing the routing fees you can earn.

-

Cutting-Edge Technological Engagement: Running a node puts you at the forefront of blockchain and bitcoin technology, offering insights into future developments and innovations.

-

Strengthened Network Security: Each new node adds to the robustness of the Bitcoin network, making it more resilient against attacks and failures, thus contributing to the overall security of the ecosystem.

-

Personalized Fee Structures: You have the flexibility to set your own fee policies, which can balance earning potential with the service you provide to the network.

-

Empowerment Through Knowledge: The process of setting up and managing a node provides deep learning opportunities, empowering you with knowledge that can be applied in various areas of blockchain and fintech.

-

Boosting Transaction Capacity: By running a node, you help to increase the overall capacity of the Lightning Network, enabling more transactions to be processed quickly and at lower costs.

-

Community Leadership and Reputation: As an active node operator, you gain recognition within the Bitcoin community, which can lead to collaborative opportunities and a position of thought leadership in the space.

These reasons demonstrate the impactful and transformative nature of running a Lightning node, appealing to those who are deeply invested in the principles of bitcoin and wish to actively shape its future. Jump aboard, and embrace the journey toward full independence. 🐶🐾🫡🚀🚀🚀

-

-

@ 8fb140b4:f948000c

2023-11-18 23:28:31

@ 8fb140b4:f948000c

2023-11-18 23:28:31Chef's notes

Serving these two dishes together will create a delightful centerpiece for your Thanksgiving meal, offering a perfect blend of traditional flavors with a homemade touch.

Details

- ⏲️ Prep time: 30 min

- 🍳 Cook time: 1 - 2 hours

- 🍽️ Servings: 4-6

Ingredients

- 1 whole turkey (about 12-14 lbs), thawed and ready to cook

- 1 cup unsalted butter, softened

- 2 tablespoons fresh thyme, chopped

- 2 tablespoons fresh rosemary, chopped

- 2 tablespoons fresh sage, chopped

- Salt and freshly ground black pepper

- 1 onion, quartered

- 1 lemon, halved

- 2-3 cloves of garlic

- Apple and Sage Stuffing

- 1 loaf of crusty bread, cut into cubes

- 2 apples, cored and chopped

- 1 onion, diced

- 2 stalks celery, diced

- 3 cloves garlic, minced

- 1/4 cup fresh sage, chopped

- 1/2 cup unsalted butter

- 2 cups chicken broth

- Salt and pepper, to taste

Directions

- Preheat the Oven: Set your oven to 325°F (165°C).

- Prepare the Herb Butter: Mix the softened butter with the chopped thyme, rosemary, and sage. Season with salt and pepper.

- Prepare the Turkey: Remove any giblets from the turkey and pat it dry. Loosen the skin and spread a generous amount of herb butter under and over the skin.

- Add Aromatics: Inside the turkey cavity, place the quartered onion, lemon halves, and garlic cloves.

- Roast: Place the turkey in a roasting pan. Tent with aluminum foil and roast. A general guideline is about 15 minutes per pound, or until the internal temperature reaches 165°F (74°C) at the thickest part of the thigh.

- Rest and Serve: Let the turkey rest for at least 20 minutes before carving.

- Next: Apple and Sage Stuffing

- Dry the Bread: Spread the bread cubes on a baking sheet and let them dry overnight, or toast them in the oven.

- Cook the Vegetables: In a large skillet, melt the butter and cook the onion, celery, and garlic until soft.

- Combine Ingredients: Add the apples, sage, and bread cubes to the skillet. Stir in the chicken broth until the mixture is moist. Season with salt and pepper.

- Bake: Transfer the stuffing to a baking dish and bake at 350°F (175°C) for about 30-40 minutes, until golden brown on top.

-

@ 8fb140b4:f948000c

2023-11-02 01:13:01

@ 8fb140b4:f948000c

2023-11-02 01:13:01Testing a brand new YakiHonne native client for iOS. Smooth as butter (not penis butter 🤣🍆🧈) with great visual experience and intuitive navigation. Amazing work by the team behind it! * lists * work

Bold text work!

Images could have used nostr.build instead of raw S3 from us-east-1 region.

Very impressive! You can even save the draft and continue later, before posting the long-form note!

🐶🐾🤯🤯🤯🫂💜

-

@ 8fb140b4:f948000c

2023-08-22 12:14:34

@ 8fb140b4:f948000c

2023-08-22 12:14:34As the title states, scratch behind my ear and you get it. 🐶🐾🫡

-

@ 8fb140b4:f948000c

2023-07-30 00:35:01

@ 8fb140b4:f948000c

2023-07-30 00:35:01Test Bounty Note

-

@ 8fb140b4:f948000c

2023-07-22 09:39:48

@ 8fb140b4:f948000c

2023-07-22 09:39:48Intro

This short tutorial will help you set up your own Nostr Wallet Connect (NWC) on your own LND Node that is not using Umbrel. If you are a user of Umbrel, you should use their version of NWC.

Requirements

You need to have a working installation of LND with established channels and connectivity to the internet. NWC in itself is fairly light and will not consume a lot of resources. You will also want to ensure that you have a working installation of Docker, since we will use a docker image to run NWC.

- Working installation of LND (and all of its required components)

- Docker (with Docker compose)

Installation

For the purpose of this tutorial, we will assume that you have your lnd/bitcoind running under user bitcoin with home directory /home/bitcoin. We will also assume that you already have a running installation of Docker (or docker.io).

Prepare and verify

git version - we will need git to get the latest version of NWC. docker version - should execute successfully and show the currently installed version of Docker. docker compose version - same as before, but the version will be different. ss -tupln | grep 10009- should produce the following output: tcp LISTEN 0 4096 0.0.0.0:10009 0.0.0.0: tcp LISTEN 0 4096 [::]:10009 [::]:**

For things to work correctly, your Docker should be version 20.10.0 or later. If you have an older version, consider installing a new one using instructions here: https://docs.docker.com/engine/install/

Create folders & download NWC

In the home directory of your LND/bitcoind user, create a new folder, e.g., "nwc" mkdir /home/bitcoin/nwc. Change to that directory cd /home/bitcoin/nwc and clone the NWC repository: git clone https://github.com/getAlby/nostr-wallet-connect.git

Creating the Docker image

In this step, we will create a Docker image that you will use to run NWC.

- Change directory to

nostr-wallet-connect:cd nostr-wallet-connect - Run command to build Docker image:

docker build -t nwc:$(date +'%Y%m%d%H%M') -t nwc:latest .(there is a dot at the end) - The last line of the output (after a few minutes) should look like

=> => naming to docker.io/library/nwc:latest nwc:latestis the name of the Docker image with a tag which you should note for use later.

Creating docker-compose.yml and necessary data directories

- Let's create a directory that will hold your non-volatile data (DB):

mkdir data - In

docker-compose.ymlfile, there are fields that you want to replace (<> comments) and port “4321” that you want to make sure is open (check withss -tupln | grep 4321which should return nothing). - Create

docker-compose.ymlfile with the following content, and make sure to update fields that have <> comment:

version: "3.8" services: nwc: image: nwc:latest volumes: - ./data:/data - ~/.lnd:/lnd:ro ports: - "4321:8080" extra_hosts: - "localhost:host-gateway" environment: NOSTR_PRIVKEY: <use "openssl rand -hex 32" to generate a fresh key and place it inside ""> LN_BACKEND_TYPE: "LND" LND_ADDRESS: localhost:10009 LND_CERT_FILE: "/lnd/tls.cert" LND_MACAROON_FILE: "/lnd/data/chain/bitcoin/mainnet/admin.macaroon" DATABASE_URI: "/data/nostr-wallet-connect.db" COOKIE_SECRET: <use "openssl rand -hex 32" to generate fresh secret and place it inside ""> PORT: 8080 restart: always stop_grace_period: 1mStarting and testing

Now that you have everything ready, it is time to start the container and test.

- While you are in the

nwcdirectory (important), execute the following command and check the log output,docker compose up - You should see container logs while it is starting, and it should not exit if everything went well.

- At this point, you should be able to go to

http://<ip of the host where nwc is running>:4321and get to the interface of NWC - To stop the test run of NWC, simply press

Ctrl-C, and it will shut the container down. - To start NWC permanently, you should execute

docker compose up -d, “-d” tells Docker to detach from the session. - To check currently running NWC logs, execute

docker compose logsto run it in tail mode add-fto the end. - To stop the container, execute

docker compose down

That's all, just follow the instructions in the web interface to get started.

Updating

As with any software, you should expect fixes and updates that you would need to perform periodically. You could automate this, but it falls outside of the scope of this tutorial. Since we already have all of the necessary configuration in place, the update execution is fairly simple.

- Change directory to the clone of the git repository,

cd /home/bitcoin/nwc/nostr-wallet-connect - Run command to build Docker image:

docker build -t nwc:$(date +'%Y%m%d%H%M') -t nwc:latest .(there is a dot at the end) - Change directory back one level

cd .. - Restart (stop and start) the docker compose config

docker compose down && docker compose up -d - Done! Optionally you may want to check the logs:

docker compose logs

-

@ 82341f88:fbfbe6a2

2023-04-11 19:36:53

@ 82341f88:fbfbe6a2

2023-04-11 19:36:53There’s a lot of conversation around the #TwitterFiles. Here’s my take, and thoughts on how to fix the issues identified.

I’ll start with the principles I’ve come to believe…based on everything I’ve learned and experienced through my past actions as a Twitter co-founder and lead:

- Social media must be resilient to corporate and government control.

- Only the original author may remove content they produce.

- Moderation is best implemented by algorithmic choice.

The Twitter when I led it and the Twitter of today do not meet any of these principles. This is my fault alone, as I completely gave up pushing for them when an activist entered our stock in 2020. I no longer had hope of achieving any of it as a public company with no defense mechanisms (lack of dual-class shares being a key one). I planned my exit at that moment knowing I was no longer right for the company.

The biggest mistake I made was continuing to invest in building tools for us to manage the public conversation, versus building tools for the people using Twitter to easily manage it for themselves. This burdened the company with too much power, and opened us to significant outside pressure (such as advertising budgets). I generally think companies have become far too powerful, and that became completely clear to me with our suspension of Trump’s account. As I’ve said before, we did the right thing for the public company business at the time, but the wrong thing for the internet and society. Much more about this here: https://twitter.com/jack/status/1349510769268850690

I continue to believe there was no ill intent or hidden agendas, and everyone acted according to the best information we had at the time. Of course mistakes were made. But if we had focused more on tools for the people using the service rather than tools for us, and moved much faster towards absolute transparency, we probably wouldn’t be in this situation of needing a fresh reset (which I am supportive of). Again, I own all of this and our actions, and all I can do is work to make it right.

Back to the principles. Of course governments want to shape and control the public conversation, and will use every method at their disposal to do so, including the media. And the power a corporation wields to do the same is only growing. It’s critical that the people have tools to resist this, and that those tools are ultimately owned by the people. Allowing a government or a few corporations to own the public conversation is a path towards centralized control.

I’m a strong believer that any content produced by someone for the internet should be permanent until the original author chooses to delete it. It should be always available and addressable. Content takedowns and suspensions should not be possible. Doing so complicates important context, learning, and enforcement of illegal activity. There are significant issues with this stance of course, but starting with this principle will allow for far better solutions than we have today. The internet is trending towards a world were storage is “free” and infinite, which places all the actual value on how to discover and see content.

Which brings me to the last principle: moderation. I don’t believe a centralized system can do content moderation globally. It can only be done through ranking and relevance algorithms, the more localized the better. But instead of a company or government building and controlling these solely, people should be able to build and choose from algorithms that best match their criteria, or not have to use any at all. A “follow” action should always deliver every bit of content from the corresponding account, and the algorithms should be able to comb through everything else through a relevance lens that an individual determines. There’s a default “G-rated” algorithm, and then there’s everything else one can imagine.

The only way I know of to truly live up to these 3 principles is a free and open protocol for social media, that is not owned by a single company or group of companies, and is resilient to corporate and government influence. The problem today is that we have companies who own both the protocol and discovery of content. Which ultimately puts one person in charge of what’s available and seen, or not. This is by definition a single point of failure, no matter how great the person, and over time will fracture the public conversation, and may lead to more control by governments and corporations around the world.

I believe many companies can build a phenomenal business off an open protocol. For proof, look at both the web and email. The biggest problem with these models however is that the discovery mechanisms are far too proprietary and fixed instead of open or extendable. Companies can build many profitable services that complement rather than lock down how we access this massive collection of conversation. There is no need to own or host it themselves.

Many of you won’t trust this solution just because it’s me stating it. I get it, but that’s exactly the point. Trusting any one individual with this comes with compromises, not to mention being way too heavy a burden for the individual. It has to be something akin to what bitcoin has shown to be possible. If you want proof of this, get out of the US and European bubble of the bitcoin price fluctuations and learn how real people are using it for censorship resistance in Africa and Central/South America.

I do still wish for Twitter, and every company, to become uncomfortably transparent in all their actions, and I wish I forced more of that years ago. I do believe absolute transparency builds trust. As for the files, I wish they were released Wikileaks-style, with many more eyes and interpretations to consider. And along with that, commitments of transparency for present and future actions. I’m hopeful all of this will happen. There’s nothing to hide…only a lot to learn from. The current attacks on my former colleagues could be dangerous and doesn’t solve anything. If you want to blame, direct it at me and my actions, or lack thereof.

As far as the free and open social media protocol goes, there are many competing projects: @bluesky is one with the AT Protocol, nostr another, Mastodon yet another, Matrix yet another…and there will be many more. One will have a chance at becoming a standard like HTTP or SMTP. This isn’t about a “decentralized Twitter.” This is a focused and urgent push for a foundational core technology standard to make social media a native part of the internet. I believe this is critical both to Twitter’s future, and the public conversation’s ability to truly serve the people, which helps hold governments and corporations accountable. And hopefully makes it all a lot more fun and informative again.

💸🛠️🌐 To accelerate open internet and protocol work, I’m going to open a new category of #startsmall grants: “open internet development.” It will start with a focus of giving cash and equity grants to engineering teams working on social media and private communication protocols, bitcoin, and a web-only mobile OS. I’ll make some grants next week, starting with $1mm/yr to Signal. Please let me know other great candidates for this money.

-

@ 3bf0c63f:aefa459d

2024-01-14 14:52:16

@ 3bf0c63f:aefa459d

2024-01-14 14:52:16Drivechain

Understanding Drivechain requires a shift from the paradigm most bitcoiners are used to. It is not about "trustlessness" or "mathematical certainty", but game theory and incentives. (Well, Bitcoin in general is also that, but people prefer to ignore it and focus on some illusion of trustlessness provided by mathematics.)

Here we will describe the basic mechanism (simple) and incentives (complex) of "hashrate escrow" and how it enables a 2-way peg between the mainchain (Bitcoin) and various sidechains.

The full concept of "Drivechain" also involves blind merged mining (i.e., the sidechains mine themselves by publishing their block hashes to the mainchain without the miners having to run the sidechain software), but this is much easier to understand and can be accomplished either by the BIP-301 mechanism or by the Spacechains mechanism.

How does hashrate escrow work from the point of view of Bitcoin?

A new address type is created. Anything that goes in that is locked and can only be spent if all miners agree on the Withdrawal Transaction (

WT^) that will spend it for 6 months. There is one of these special addresses for each sidechain.To gather miners' agreement

bitcoindkeeps track of the "score" of all transactions that could possibly spend from that address. On every block mined, for each sidechain, the miner can use a portion of their coinbase to either increase the score of oneWT^by 1 while decreasing the score of all others by 1; or they can decrease the score of allWT^s by 1; or they can do nothing.Once a transaction has gotten a score high enough, it is published and funds are effectively transferred from the sidechain to the withdrawing users.

If a timeout of 6 months passes and the score doesn't meet the threshold, that

WT^is discarded.What does the above procedure mean?

It means that people can transfer coins from the mainchain to a sidechain by depositing to the special address. Then they can withdraw from the sidechain by making a special withdraw transaction in the sidechain.

The special transaction somehow freezes funds in the sidechain while a transaction that aggregates all withdrawals into a single mainchain

WT^, which is then submitted to the mainchain miners so they can start voting on it and finally after some months it is published.Now the crucial part: the validity of the

WT^is not verified by the Bitcoin mainchain rules, i.e., if Bob has requested a withdraw from the sidechain to his mainchain address, but someone publishes a wrongWT^that instead takes Bob's funds and sends them to Alice's main address there is no way the mainchain will know that. What determines the "validity" of theWT^is the miner vote score and only that. It is the job of miners to vote correctly -- and for that they may want to run the sidechain node in SPV mode so they can attest for the existence of a reference to theWT^transaction in the sidechain blockchain (which then ensures it is ok) or do these checks by some other means.What? 6 months to get my money back?

Yes. But no, in practice anyone who wants their money back will be able to use an atomic swap, submarine swap or other similar service to transfer funds from the sidechain to the mainchain and vice-versa. The long delayed withdraw costs would be incurred by few liquidity providers that would gain some small profit from it.

Why bother with this at all?

Drivechains solve many different problems:

It enables experimentation and new use cases for Bitcoin

Issued assets, fully private transactions, stateful blockchain contracts, turing-completeness, decentralized games, some "DeFi" aspects, prediction markets, futarchy, decentralized and yet meaningful human-readable names, big blocks with a ton of normal transactions on them, a chain optimized only for Lighting-style networks to be built on top of it.

These are some ideas that may have merit to them, but were never actually tried because they couldn't be tried with real Bitcoin or inferfacing with real bitcoins. They were either relegated to the shitcoin territory or to custodial solutions like Liquid or RSK that may have failed to gain network effect because of that.

It solves conflicts and infighting

Some people want fully private transactions in a UTXO model, others want "accounts" they can tie to their name and build reputation on top; some people want simple multisig solutions, others want complex code that reads a ton of variables; some people want to put all the transactions on a global chain in batches every 10 minutes, others want off-chain instant transactions backed by funds previously locked in channels; some want to spend, others want to just hold; some want to use blockchain technology to solve all the problems in the world, others just want to solve money.

With Drivechain-based sidechains all these groups can be happy simultaneously and don't fight. Meanwhile they will all be using the same money and contributing to each other's ecosystem even unwillingly, it's also easy and free for them to change their group affiliation later, which reduces cognitive dissonance.

It solves "scaling"

Multiple chains like the ones described above would certainly do a lot to accomodate many more transactions that the current Bitcoin chain can. One could have special Lightning Network chains, but even just big block chains or big-block-mimblewimble chains or whatnot could probably do a good job. Or even something less cool like 200 independent chains just like Bitcoin is today, no extra features (and you can call it "sharding"), just that would already multiply the current total capacity by 200.

Use your imagination.

It solves the blockchain security budget issue

The calculation is simple: you imagine what security budget is reasonable for each block in a world without block subsidy and divide that for the amount of bytes you can fit in a single block: that is the price to be paid in satoshis per byte. In reasonable estimative, the price necessary for every Bitcoin transaction goes to very large amounts, such that not only any day-to-day transaction has insanely prohibitive costs, but also Lightning channel opens and closes are impracticable.

So without a solution like Drivechain you'll be left with only one alternative: pushing Bitcoin usage to trusted services like Liquid and RSK or custodial Lightning wallets. With Drivechain, though, there could be thousands of transactions happening in sidechains and being all aggregated into a sidechain block that would then pay a very large fee to be published (via blind merged mining) to the mainchain. Bitcoin security guaranteed.

It keeps Bitcoin decentralized

Once we have sidechains to accomodate the normal transactions, the mainchain functionality can be reduced to be only a "hub" for the sidechains' comings and goings, and then the maximum block size for the mainchain can be reduced to, say, 100kb, which would make running a full node very very easy.

Can miners steal?

Yes. If a group of coordinated miners are able to secure the majority of the hashpower and keep their coordination for 6 months, they can publish a

WT^that takes the money from the sidechains and pays to themselves.Will miners steal?

No, because the incentives are such that they won't.

Although it may look at first that stealing is an obvious strategy for miners as it is free money, there are many costs involved:

- The cost of ceasing blind-merged mining returns -- as stealing will kill a sidechain, all the fees from it that miners would be expected to earn for the next years are gone;

- The cost of Bitcoin price going down: If a steal is successful that will mean Drivechains are not safe, therefore Bitcoin is less useful, and miner credibility will also be hurt, which are likely to cause the Bitcoin price to go down, which in turn may kill the miners' businesses and savings;

- The cost of coordination -- assuming miners are just normal businesses, they just want to do their work and get paid, but stealing from a Drivechain will require coordination with other miners to conduct an immoral act in a way that has many pitfalls and is likely to be broken over the months;

- The cost of miners leaving your mining pool: when we talked about "miners" above we were actually talking about mining pools operators, so they must also consider the risk of miners migrating from their mining pool to others as they begin the process of stealing;

- The cost of community goodwill -- when participating in a steal operation, a miner will suffer a ton of backlash from the community. Even if the attempt fails at the end, the fact that it was attempted will contribute to growing concerns over exaggerated miners power over the Bitcoin ecosystem, which may end up causing the community to agree on a hard-fork to change the mining algorithm in the future, or to do something to increase participation of more entities in the mining process (such as development or cheapment of new ASICs), which have a chance of decreasing the profits of current miners.

Another point to take in consideration is that one may be inclined to think a newly-created sidechain or a sidechain with relatively low usage may be more easily stolen from, since the blind merged mining returns from it (point 1 above) are going to be small -- but the fact is also that a sidechain with small usage will also have less money to be stolen from, and since the other costs besides 1 are less elastic at the end it will not be worth stealing from these too.

All of the above consideration are valid only if miners are stealing from good sidechains. If there is a sidechain that is doing things wrong, scamming people, not being used at all, or is full of bugs, for example, that will be perceived as a bad sidechain, and then miners can and will safely steal from it and kill it, which will be perceived as a good thing by everybody.

What do we do if miners steal?

Paul Sztorc has suggested in the past that a user-activated soft-fork could prevent miners from stealing, i.e., most Bitcoin users and nodes issue a rule similar to this one to invalidate the inclusion of a faulty

WT^and thus cause any miner that includes it in a block to be relegated to their own Bitcoin fork that other nodes won't accept.This suggestion has made people think Drivechain is a sidechain solution backed by user-actived soft-forks for safety, which is very far from the truth. Drivechains must not and will not rely on this kind of soft-fork, although they are possible, as the coordination costs are too high and no one should ever expect these things to happen.

If even with all the incentives against them (see above) miners do still steal from a good sidechain that will mean the failure of the Drivechain experiment. It will very likely also mean the failure of the Bitcoin experiment too, as it will be proven that miners can coordinate to act maliciously over a prolonged period of time regardless of economic and social incentives, meaning they are probably in it just for attacking Bitcoin, backed by nation-states or something else, and therefore no Bitcoin transaction in the mainchain is to be expected to be safe ever again.

Why use this and not a full-blown trustless and open sidechain technology?

Because it is impossible.

If you ever heard someone saying "just use a sidechain", "do this in a sidechain" or anything like that, be aware that these people are either talking about "federated" sidechains (i.e., funds are kept in custody by a group of entities) or they are talking about Drivechain, or they are disillusioned and think it is possible to do sidechains in any other manner.

No, I mean a trustless 2-way peg with correctness of the withdrawals verified by the Bitcoin protocol!

That is not possible unless Bitcoin verifies all transactions that happen in all the sidechains, which would be akin to drastically increasing the blocksize and expanding the Bitcoin rules in tons of ways, i.e., a terrible idea that no one wants.

What about the Blockstream sidechains whitepaper?

Yes, that was a way to do it. The Drivechain hashrate escrow is a conceptually simpler way to achieve the same thing with improved incentives, less junk in the chain, more safety.

Isn't the hashrate escrow a very complex soft-fork?

Yes, but it is much simpler than SegWit. And, unlike SegWit, it doesn't force anything on users, i.e., it isn't a mandatory blocksize increase.

Why should we expect miners to care enough to participate in the voting mechanism?

Because it's in their own self-interest to do it, and it costs very little. Today over half of the miners mine RSK. It's not blind merged mining, it's a very convoluted process that requires them to run a RSK full node. For the Drivechain sidechains, an SPV node would be enough, or maybe just getting data from a block explorer API, so much much simpler.

What if I still don't like Drivechain even after reading this?

That is the entire point! You don't have to like it or use it as long as you're fine with other people using it. The hashrate escrow special addresses will not impact you at all, validation cost is minimal, and you get the benefit of people who want to use Drivechain migrating to their own sidechains and freeing up space for you in the mainchain. See also the point above about infighting.

See also

-

@ 3bf0c63f:aefa459d

2024-01-14 14:52:16

@ 3bf0c63f:aefa459d

2024-01-14 14:52:16bitcoinddecentralizationIt is better to have multiple curator teams, with different vetting processes and release schedules for

bitcoindthan a single one."More eyes on code", "Contribute to Core", "Everybody should audit the code".

All these points repeated again and again fell to Earth on the day it was discovered that Bitcoin Core developers merged a variable name change from "blacklist" to "blocklist" without even discussing or acknowledging the fact that that innocent pull request opened by a sybil account was a social attack.

After a big lot of people manifested their dissatisfaction with that event on Twitter and on GitHub, most Core developers simply ignored everybody's concerns or even personally attacked people who were complaining.

The event has shown that:

1) Bitcoin Core ultimately rests on the hands of a couple maintainers and they decide what goes on the GitHub repository[^pr-merged-very-quickly] and the binary releases that will be downloaded by thousands; 2) Bitcoin Core is susceptible to social attacks; 2) "More eyes on code" don't matter, as these extra eyes can be ignored and dismissed.

Solution:

bitcoinddecentralizationIf usage was spread across 10 different

bitcoindflavors, the network would be much more resistant to social attacks to a single team.This has nothing to do with the question on if it is better to have multiple different Bitcoin node implementations or not, because here we're basically talking about the same software.

Multiple teams, each with their own release process, their own logo, some subtle changes, or perhaps no changes at all, just a different name for their

bitcoindflavor, and that's it.Every day or week or month or year, each flavor merges all changes from Bitcoin Core on their own fork. If there's anything suspicious or too leftist (or perhaps too rightist, in case there's a leftist

bitcoindflavor), maybe they will spot it and not merge.This way we keep the best of both worlds: all software development, bugfixes, improvements goes on Bitcoin Core, other flavors just copy. If there's some non-consensus change whose efficacy is debatable, one of the flavors will merge on their fork and test, and later others -- including Core -- can copy that too. Plus, we get resistant to attacks: in case there is an attack on Bitcoin Core, only 10% of the network would be compromised. the other flavors would be safe.

Run Bitcoin Knots

The first example of a

bitcoindsoftware that follows Bitcoin Core closely, adds some small changes, but has an independent vetting and release process is Bitcoin Knots, maintained by the incorruptible Luke DashJr.Next time you decide to run

bitcoind, run Bitcoin Knots instead and contribute tobitcoinddecentralization!

See also:

[^pr-merged-very-quickly]: See PR 20624, for example, a very complicated change that could be introducing bugs or be a deliberate attack, merged in 3 days without time for discussion.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28Gerador de tabelas de todos contra todos

I don't remember exactly when I did this, but I think a friend wanted to do software that would give him money over the internet without having to work. He didn't know how to program. He mentioned this idea he had which was some kind of football championship manager solution, but I heard it like this: a website that generated a round-robin championship table for people to print.

It is actually not obvious to anyone how to do it, it requires an algorithm that people will not reach casually while thinking, and there was no website doing it in Portuguese at the time, so I made this and it worked and it had a couple hundred daily visitors, and it even generated money from Google Ads (not much)!

First it was a Python web app running on Heroku, then Heroku started charging or limiting the amount of free time I could have on their platform, so I migrated it to a static site that ran everything on the client. Since I didn't want to waste my Python code that actually generated the tables I used Brython to run Python on JavaScript, which was an interesting experience.

In hindsight I could have just taken one of the many

round-robinJavaScript libraries that exist on NPM, so eventually after a couple of more years I did that.I also removed Google Ads when Google decided it had so many requirements to send me the money it was impossible, and then the money started to vanished.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28How being "flexible" can bloat a protocol

(A somewhat absurd example, but you'll get the idea)

Iimagine some client decides to add support for a variant of nip05 that checks for values at /.well-known/nostr.yaml besides /.well-known/nostr.json. "Why not?", they think, "I like YAML more than JSON, this can't hurt anyone".

Then some user makes a nip05 file in YAML and it will work on that client, they will think their file is good since it works on that client. When the user sees that other clients are not recognizing their YAML file, they will complain to the other client developers: "Hey, your client is broken, it is not supporting my YAML file!".

The developer of the other client, astonished, replies: "Oh, I am sorry, I didn't know that was part of the nip05 spec!"

The user, thinking it is doing a good thing, replies: "I don't know, but it works on this other client here, see?"

Now the other client adds support. The cycle repeats now with more users making YAML files, more and more clients adding YAML support, for fear of providing a client that is incomplete or provides bad user experience.

The end result of this is that now nip05 extra-officially requires support for both JSON and YAML files. Every client must now check for /.well-known/nostr.yaml too besides just /.well-known/nostr.json, because a user's key could be in either of these. A lot of work was wasted for nothing. And now, going forward, any new clients will require the double of work than before to implement.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28idea: Custom multi-use database app

Since 2015 I have this idea of making one app that could be repurposed into a full-fledged app for all kinds of uses, like powering small businesses accounts and so on. Hackable and open as an Excel file, but more efficient, without the hassle of making tables and also using ids and indexes under the hood so different kinds of things can be related together in various ways.

It is not a concrete thing, just a generic idea that has taken multiple forms along the years and may take others in the future. I've made quite a few attempts at implementing it, but never finished any.

I used to refer to it as a "multidimensional spreadsheet".

Can also be related to DabbleDB.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28idea: a website for feedback exchange

I thought a community of people sharing feedback on mutual interests would be a good thing, so as always I broadened and generalized the idea and mixed with my old criticue-inspired idea-feedback project and turned it into a "token". You give feedback on other people's things, they give you a "point". You can then use that point to request feedback from others.

This could be made as an Etleneum contract so these points were exchanged for satoshis using the shitswap contract (yet to be written).

In this case all the Bitcoin/Lightning side of the website must be hidden until the user has properly gone through the usage flow and earned points.

If it was to be built on Etleneum then it needs to emphasize the login/password login method instead of the lnurl-auth method. And then maybe it could be used to push lnurl-auth to normal people, but with a different name.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28bolt12 problems

- clients can't programatically build new offers by changing a path or query params (services like zbd.gg or lnurl-pay.me won't work)

- impossible to use in a load-balanced custodian way -- since offers would have to be pregenerated and tied to a specific lightning node.

- the existence of fiat currency fields makes it so wallets have to fetch exchange rates from somewhere on the internet (or offer a bad user experience), using HTTP which hurts user privacy.

- the vendor field is misleading, can be phished very easily, not as safe as a domain name.

- onion messages are an improvement over fake HTLC-based payments as a way of transmitting data, for sure. but we must decide if they are (i) suitable for transmitting all kinds of data over the internet, a replacement for tor; or (ii) not something that will scale well or on which we can count on for the future. if there was proper incentivization for data transmission it could end up being (i), the holy grail of p2p communication over the internet, but that is a very hard problem to solve and not guaranteed to yield the desired scalability results. since not even hints of attempting to solve that are being made, it's safer to conclude it is (ii).

bolt12 limitations

- not flexible enough. there are some interesting fields defined in the spec, but who gets to add more fields later if necessary? very unclear.

- services can't return any actionable data to the users who paid for something. it's unclear how business can be conducted without an extra communication channel.

bolt12 illusions

- recurring payments is not really solved, it is just a spec that defines intervals. the actual implementation must still be done by each wallet and service. the recurring payment cannot be enforced, the wallet must still initiate the payment. even if the wallet is evil and is willing to initiate a payment without the user knowing it still needs to have funds, channels, be online, connected etc., so it's not as if the services could rely on the payments being delivered in time.

- people seem to think it will enable pushing payments to mobile wallets, which it does not and cannot.

- there is a confusion of contexts: it looks like offers are superior to lnurl-pay, for example, because they don't require domain names. domain names, though, are common and well-established among internet services and stores, because these services have websites, so this is not really an issue. it is an issue, though, for people that want to receive payments in their homes. for these, indeed, bolt12 offers a superior solution -- but at the same time bolt12 seems to be selling itself as a tool for merchants and service providers when it includes and highlights features as recurring payments and refunds.

- the privacy gains for the receiver that are promoted as being part of bolt12 in fact come from a separate proposal, blinded paths, which should work for all normal lightning payments and indeed are a very nice solution. they are (or at least were, and should be) independent from the bolt12 proposal. a separate proposal, which can be (and already is being) used right now, also improves privacy for the receiver very much anway, it's called trampoline routing.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28Sol e Terra

A Terra não gira em torno do Sol. Tudo depende do ponto de referência e não existe um ponto de referência absoluto. Só é melhor dizer que a Terra gira em torno do Sol porque há outros planetas fazendo movimentos análogos e aí fica mais fácil para todo mundo entender os movimentos tomando o Sol como ponto de referência.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28IPFS problems: Community

I was an avid IPFS user until yesterday. Many many times I asked simple questions for which I couldn't find an answer on the internet in the #ipfs IRC channel on Freenode. Most of the times I didn't get an answer, and even when I got it was rarely by someone who knew IPFS deeply. I've had issues go unanswered on js-ipfs repositories for year – one of these was raising awareness of a problem that then got fixed some months later by a complete rewrite, I closed my own issue after realizing that by myself some couple of months later, I don't think the people responsible for the rewrite were ever acknowledge that he had fixed my issue.

Some days ago I asked some questions about how the IPFS protocol worked internally, sincerely trying to understand the inefficiencies in finding and fetching content over IPFS. I pointed it would be a good idea to have a drawing showing that so people would understand the difficulties (which I didn't) and wouldn't be pissed off by the slowness. I was told to read the whitepaper. I had already the whitepaper, but read again the relevant parts. The whitepaper doesn't explain anything about the DHT and how IPFS finds content. I said that in the room, was told to read again.

Before anyone misread this section, I want to say I understand it's a pain to keep answering people on IRC if you're busy developing stuff of interplanetary importance, and that I'm not paying anyone nor I have the right to be answered. On the other hand, if you're developing a super-important protocol, financed by many millions of dollars and a lot of people are hitting their heads against your software and there's no one to help them; you're always busy but never delivers anything that brings joy to your users, something is very wrong. I sincerely don't know what IPFS developers are working on, I wouldn't doubt they're working on important things if they said that, but what I see – and what many other users see (take a look at the IPFS Discourse forum) is bugs, bugs all over the place, confusing UX, and almost no help.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28A list of things artificial intelligence is not doing

If AI is so good why can't it:

- write good glue code that wraps a documented HTTP API?

- make good translations using available books and respective published translations?

- extract meaningful and relevant numbers from news articles?

- write mathematical models that fit perfectly to available data better than any human?

- play videogames without cheating (i.e. simulating human vision, attention and click speed)?

- turn pure HTML pages into pretty designs by generating CSS

- predict the weather

- calculate building foundations

- determine stock values of companies from publicly available numbers

- smartly and automatically test software to uncover bugs before releases

- predict sports matches from the ball and the players' movement on the screen

- continuously improve niche/local search indexes based on user input and and reaction to results

- control traffic lights

- predict sports matches from news articles, and teams and players' history

This was posted first on Twitter.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

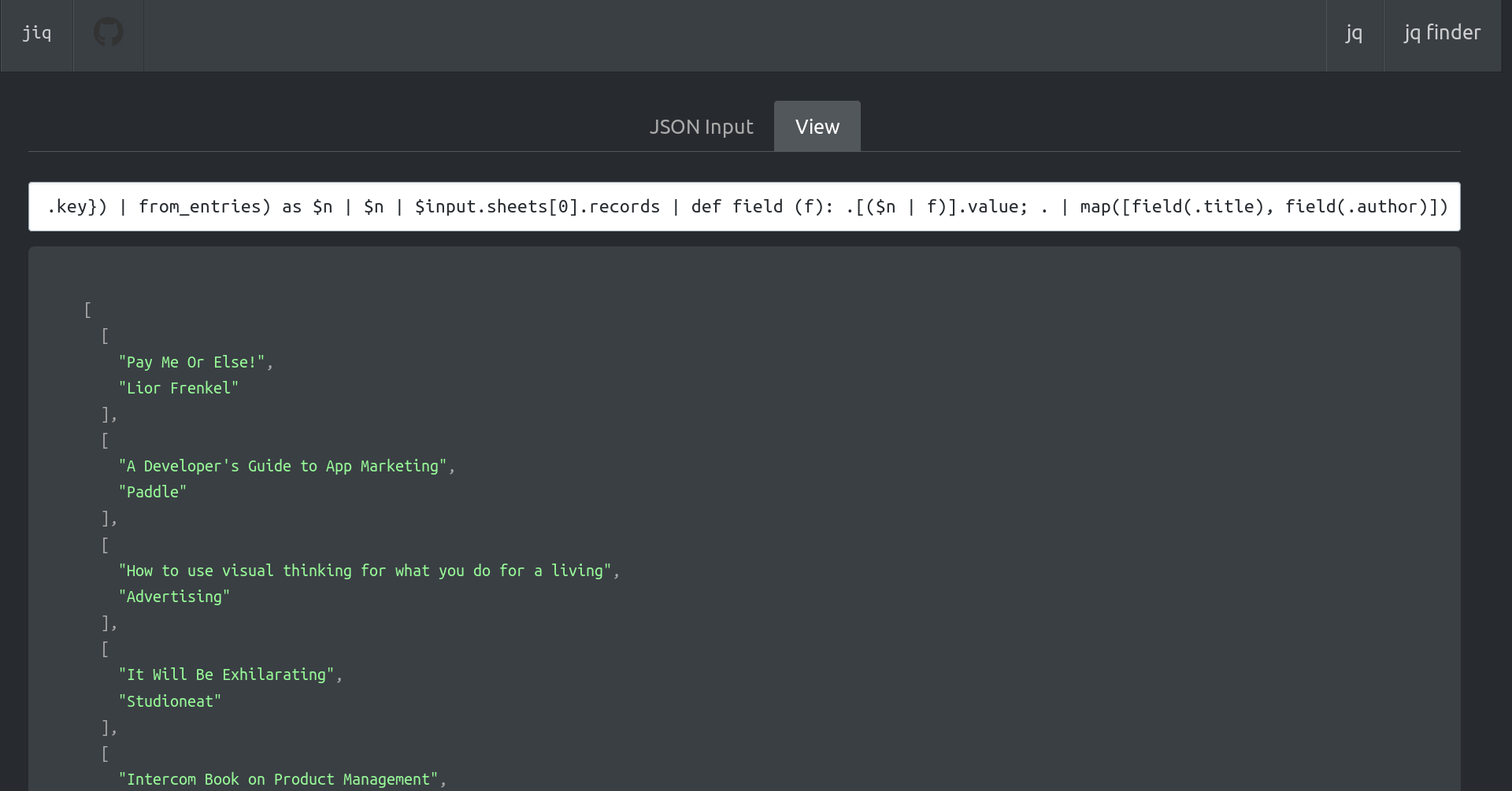

2024-01-14 13:55:28ijq

An interactive REPL for

jqwith smart helpers (for example, it automatically assigns each line of input to a variable so you can reference it later, it also always referenced the previous line automatically).See also

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28Trelew

A CLI tool for navigating Trello boards. It used vorpal for an "immersive" experience and was pretty good.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28OP_CHECKTEMPLATEVERIFYand the "covenants" dramaThere are many ideas for "covenants" (I don't think this concept helps in the specific case of examining proposals, but fine). Some people think "we" (it's not obvious who is included in this group) should somehow examine them and come up with the perfect synthesis.

It is not clear what form this magic gathering of ideas will take and who (or which ideas) will be allowed to speak, but suppose it happens and there is intense research and conversations and people (ideas) really enjoy themselves in the process.

What are we left with at the end? Someone has to actually commit the time and put the effort and come up with a concrete proposal to be implemented on Bitcoin, and whatever the result is it will have trade-offs. Some great features will not make into this proposal, others will make in a worsened form, and some will be contemplated very nicely, there will be some extra costs related to maintenance or code complexity that will have to be taken. Someone, a concreate person, will decide upon these things using their own personal preferences and biases, and many people will not be pleased with their choices.

That has already happened. Jeremy Rubin has already conjured all the covenant ideas in a magic gathering that lasted more than 3 years and came up with a synthesis that has the best trade-offs he could find. CTV is the result of that operation.

The fate of CTV in the popular opinion illustrated by the thoughtless responses it has evoked such as "can we do better?" and "we need more review and research and more consideration of other ideas for covenants" is a preview of what would probably happen if these suggestions were followed again and someone spent the next 3 years again considering ideas, talking to other researchers and came up with a new synthesis. Again, that person would be faced with "can we do better?" responses from people that were not happy enough with the choices.

And unless some famous Bitcoin Core or retired Bitcoin Core developers were personally attracted by this synthesis then they would take some time to review and give their blessing to this new synthesis.

To summarize the argument of this article, the actual question in the current CTV drama is that there exists hidden criteria for proposals to be accepted by the general community into Bitcoin, and no one has these criteria clear in their minds. It is not as simple not as straightforward as "do research" nor it is as humanly impossible as "get consensus", it has a much bigger social element into it, but I also do not know what is the exact form of these hidden criteria.

This is said not to blame anyone -- except the ignorant people who are not aware of the existence of these things and just keep repeating completely false and unhelpful advice for Jeremy Rubin and are not self-conscious enough to ever realize what they're doing.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28idea: clarity.fm on Lightning

Getting money from clients very easily, dispatching that money to "world class experts" (what a silly way to market things, but I guess it works) very easily are the job for Bitcoin and the Lightning Network.

EDIT 2020-09-04

My idea was that people would advertise themselves, so you would book an hour with people you know already, but it seems that clarify.fm has gone through the route of offering a "catalog of experts" to potential clients, all full of verification processes probably and marketing. So I guess this is not a thing I can do.

Actually I did https://s4a.etleneum.com/ (on Etleneum) that is somewhat similar, but of course doesn't have the glamour and network effect and marketing -- also it's just text, when in Clarity is fancy calls.

Thinking about it, this is just a simple and obvious idea: just copy things from the fiat world and make them on Lightning, but maybe it is still worth pointing these out as there are hundreds of developers out there trying to make yet another lottery game with Lightning.

It may also be a good idea to not just copy fiat-businesses models, but also change them experimenting with new paradigms, like idea: Patreon, but simple, and without subscription.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28Channels without HTLCs

HTLCs below the dust limit are not possible, because they're uneconomical.

So currently whenever a payment below the dust limit is to be made Lightning peers adjust their commitment transactions to pay that amount as fees in case the channel is closed. That's a form of reserving that amount and incentivizing peers to resolve the payment, either successfully (in case it goes to the receiving node's balance) or not (it then goes back to the sender's balance).

SOLUTION

I didn't think too much about if it is possible to do what I think can be done in the current implementation on Lightning channels, but in the context of Eltoo it seems possible.

Eltoo channels have UPDATE transactions that can be published to the blockchain and SETTLEMENT transactions that spend them (after a relative time) to each peer. The barebones script for UPDATE transactions is something like (copied from the paper, because I don't understand these things):

OP_IF # to spend from a settlement transaction (presigned) 10 OP_CSV 2 As,i Bs,i 2 OP_CHECKMULTISIGVERIFY OP_ELSE # to spend from a future update transaction <Si+1> OP_CHECKLOCKTIMEVERIFY 2 Au Bu 2 OP_CHECKMULTISIGVERIFY OP_ENDIFDuring a payment of 1 satoshi it could be updated to something like (I'll probably get this thing completely wrong):

OP_HASH256 <payment_hash> OP_EQUAL OP_IF # for B to spend from settlement transaction 1 in case the payment went through # and they have a preimage 10 OP_CSV 2 As,i1 Bs,i1 2 OP_CHECKMULTISIGVERIFY OP_ELSE OP_IF # for A to spend from settlement transaction 2 in case the payment didn't went through # and the other peer is uncooperative <now + 1day> OP_CHECKLOCKTIMEVERIFY 2 As,i2 Bs,i2 2 OP_CHECKMULTISIGVERIFY OP_ELSE # to spend from a future update transaction <Si+1> OP_CHECKLOCKTIMEVERIFY 2 Au Bu 2 OP_CHECKMULTISIGVERIFY OP_ENDIF OP_ENDIFThen peers would have two presigned SETTLEMENT transactions, 1 and 2 (with different signature pairs, as badly shown in the script). On SETTLEMENT 1, funds are, say, 999sat for A and 1001sat for B, while on SETTLEMENT 2 funds are 1000sat for A and 1000sat for B.

As soon as B gets the preimage from the next peer in the route it can give it to A and them can sign a new UPDATE transaction that replaces the above gimmick with something simpler without hashes involved.

If the preimage doesn't come in viable time, peers can agree to make a new UPDATE transaction anyway. Otherwise A will have to close the channel, which may be bad, but B wasn't a good peer anyway.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28A response to Achim Warner's "Drivechain brings politics to miners" article

I mean this article: https://achimwarner.medium.com/thoughts-on-drivechain-i-miners-can-do-things-about-which-we-will-argue-whether-it-is-actually-a5c3c022dbd2

There are basically two claims here:

1. Some corporate interests might want to secure sidechains for themselves and thus they will bribe miners to have these activated

First, it's hard to imagine why they would want such a thing. Are they going to make a proprietary KYC chain only for their users? They could do that in a corporate way, or with a federation, like Facebook tried to do, and that would provide more value to their users than a cumbersome pseudo-decentralized system in which they don't even have powers to issue currency. Also, if Facebook couldn't get away with their federated shitcoin because the government was mad, what says the government won't be mad with a sidechain? And finally, why would Facebook want to give custody of their proprietary closed-garden Bitcoin-backed ecosystem coins to a random, open and always-changing set of miners?

But even if they do succeed in making their sidechain and it is very popular such that it pays miners fees and people love it. Well, then why not? Let them have it. It's not going to hurt anyone more than a proprietary shitcoin would anyway. If Facebook really wants a closed ecosystem backed by Bitcoin that probably means we are winning big.

2. Miners will be required to vote on the validity of debatable things

He cites the example of a PoS sidechain, an assassination market, a sidechain full of nazists, a sidechain deemed illegal by the US government and so on.

There is a simple solution to all of this: just kill these sidechains. Either miners can take the money from these to themselves, or they can just refuse to engage and freeze the coins there forever, or they can even give the coins to governments, if they want. It is an entirely good thing that evil sidechains or sidechains that use horrible technology that doesn't even let us know who owns each coin get annihilated. And it was the responsibility of people who put money in there to evaluate beforehand and know that PoS is not deterministic, for example.

About government censoring and wanting to steal money, or criminals using sidechains, I think the argument is very weak because these same things can happen today and may even be happening already: i.e., governments ordering mining pools to not mine such and such transactions from such and such people, or forcing them to reorg to steal money from criminals and whatnot. All this is expected to happen in normal Bitcoin. But both in normal Bitcoin and in Drivechain decentralization fixes that problem by making it so governments cannot catch all miners required to control the chain like that -- and in fact fixing that problem is the only reason we need decentralization.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28A entrevista da Flávia Tavares com o Olavo de Carvalho

Não li todas as reclamações que o Olavo fez, mas li algumas. Também não li toda a matéria que saiu na Época, porque não tive paciência, mas assisti aos dois vídeos da entrevista que o Olavo publicou.

Tendo lido primeiro as muitas reclamações do Olavo, esperei encontrar no vídeo uma pessoa falsa, que fingiu-se de amigável para obter informações que usaria depois para destruir a imagem do Olavo, mas não vi nada disso.

Claro que ela poderia ter me enganado também, se enganou ao Olavo. Mas na matéria em si, também não vi nada além de sinceridade -- talvez não excelência jornalística, mas nada que eu não esperasse de qualquer matéria de qualquer revista. Flavia Tavares não entendeu muitas coisas, mas não fingiu que não entendeu nada, foi simples e honestamente Flavia Tavares, como ela mesma declarou no final do vídeo da entrevista: "olha, eu não fingi nada aqui, viu?".

O mais importante de tudo isso, porém, são as partes da matéria que apresentam idéias difíceis de conceber, como as que Olavo tem sobre o governo mundial ou a disseminação da pedofilia. Em toda discussão pública ou privada, essas idéias são proibidas. Muita gente pode concordar que a esquerda não presta, mas ninguém em sã consciência admitirá a possibilidade de que haja qualquer intenção significativa de implantação de um governo mundial ou da disseminação da pedofilia. A mesma carinha de deboche que seu amigo esquerdista faria à simples menção desses assuntos é a que Flavia Tavares usa no seu texto quando quer mostrar que Olavo é meio tantã. A carinha de deboche vem desacompanhada de qualquer reflexão séria ou tentativa de refutação, sempre.

Link da tal matéria: http://epoca.globo.com/sociedade/noticia/2017/10/olavo-de-carvalho-o-guru-da-direita-que-rejeita-o-que-dizem-seus-fas.html?utm_source=twitter&utm_medium=social&utm_campaign=post Vídeos: https://www.youtube.com/watch?v=C0TUsKluhok, https://www.youtube.com/watch?v=yR0F1haQ07Y&t=5s

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d