-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Bluesky is a scam

Bluesky advertises itself as an open network, says people won't lose followers or their identity, and advertises itself as a protocol ("atproto"), and because of that it is tricking a lot of people into using it. These three claims are false.

protocolness

Bluesky is a company. "atproto" is the protocol. Supposedly they are two different things, right? Bluesky just releases software that implements the protocol, but others can also do that, it's open!

And yet, the protocol has an official webpage with a waitlist and a private beta? Why is the protocol advertised as a company product? Because it is. The "protocol" is just a description of whatever the Bluesky app and servers do, it can and does change anytime the Bluesky developers decide they want to change it, and it will keep changing for as long as Bluesky apps and servers control the biggest part of the network.

Oh, so there is the possibility of other players stepping in and then it becomes an actual interoperable open protocol? Yes, but what is the likelihood of that happening? It is very low. No serious competitor is likely to step in and build serious apps using a protocol that is directly controlled by Bluesky. All we will ever see are small "community" apps made by users and small satellite businesses -- not unlike the people and companies that write plugins, addons and alternative clients for popular third-party centralized platforms.

And last, even if it happens that someone makes an app so good that it displaces the canonical official Bluesky app, then that company may overtake the protocol itself -- not because they're evil, but because there is no way it cannot be like this.

identity

According to their own documentation, the Bluesky people were looking for an identity system that provided global ids, key rotation and human-readable names.

They must have realized that such properties are not possible in an open and decentralized system, but instead of accepting a tradeoff they decided they wanted all their desired features and threw away the "decentralized" part, quite literally and explicitly (although they make sure to hide that piece in the middle of a bunch of code and text that very few will read).

The "DID Placeholder" method they decided to use for their global identities is nothing more than a normal old boring trusted server controlled by Bluesky that keeps track of who is who and can, at all times, decide to ban a person and deprive them of their identity (which they dismissively call a "denial of service attack").

They decided to adopt this method as a placeholder until someone else invents the impossible alternative that would provide all their desired properties in a decentralized manner -- which is nothing more than a very good excuse: "yes, it's not great now, but it will improve!".

openness

Months after launching their product with an aura of decentralization and openness and getting a bunch of people inside that believed, falsely, they were joining an actually open network, Bluesky has decided to publish a part of their idea of how other people will be able to join their open network.

When I first saw their app and how they very prominently displayed things like follower counts, like counts and other things that are typical of centralized networks and can't be reliable or exact on truly open networks (like Nostr), I asked myself how they were going to do that once they became an open "federated" network, as they were expected to be.

Turns out their decentralization plan is to just allow you, as a writer, to host your own posts on "personal data stores", but not really have any control over the distribution of the posts. All posts go through the Bluesky central server, called BGS, and they decide what to do with them. And you, as a reader, don't have any control over what you're reading either; all you can do is connect to the BGS and ask for posts. If the BGS decides to ban, shadow ban, reorder, miscount, hide, deprioritize, trick or maybe even to serve ads, then you are out of luck.

Oh, but anyone can run their own BGS!, they will say. Even in their own blog post announcing the architecture they assert that "it’s a fairly resource-demanding service" and "there may be a few large full-network providers". But I fail to see why even more than one network provider will exist, if Bluesky is already doing that job, and considering the fact there are very few incentives for anyone to switch providers -- because the app does not seem to be at all made to talk to multiple providers, one would have to stop using the reliable, fast and beefy official BGS and start using some half-baked alternative and risk losing access to things.

When asked about the possibility of switching, one of the Bluesky overlords said: "it would look something like this: bluesky has gone evil. there's a new alternative called freesky that people are rushing to. I'm switching to freesky".

The quote is very naïve and sounds like something that could be said about Twitter itself: "if Twitter is evil you can just run your own social network". Both are fallacies because they ignore the network-effect and the fact that people will never fully agree that something is "evil". In fact these two are the fundamental reasons why -- for social networks specifically (and not for other things like commerce) -- we need truly open protocols with no owners and no committees.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

SummaDB

This was a hierarchical database server similar to the original Firebase. Records were stored on a LevelDB on different paths, like:

    /fruits/banana/color:yellow
    /fruits/banana/flavor:sweet

And could be queried by path too, using HTTP. For example, a call to `http://hostname:port/fruits/banana` would return a JSON document like

    { "color": "yellow", "flavor": "sweet" }

While a call to `/fruits` would return

    { "banana": { "color": "yellow", "flavor": "sweet" } }

`POST`, `PUT` and `PATCH` requests also worked.

In some cases the values would be under a special `"_val"` property to disambiguate them from paths. (I may be missing some other details that I forgot.)

GraphQL was also supported as a query language, so a query like

    query { fruits { banana { color } } }

would return `{"fruits": {"banana": {"color": "yellow"}}}`.

SummulaDB

SummulaDB was a browser/JavaScript build of SummaDB. It ran on the same Go code compiled with GopherJS, and using PouchDB as the storage backend, if I remember correctly.
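Since both builds shared the same data model, it may help to see how flat path/value records can be assembled into the nested JSON documents described for SummaDB. This is only an illustrative Python sketch, not the original Go code: the `records` dict stands in for the LevelDB store, the helper names `read` and `collapse` are made up, and the exact rule for when `_val` shows up (only when a path has both its own value and children) is an assumption.

```python
# Hypothetical stand-in for the key-value store: one record per path.
records = {
    "/fruits/banana/color": "yellow",
    "/fruits/banana/flavor": "sweet",
}


def collapse(node):
    """Turn {"_val": x} leaves into plain x; keep "_val" where children exist."""
    if set(node) == {"_val"}:
        return node["_val"]
    return {k: (v if k == "_val" else collapse(v)) for k, v in node.items()}


def read(records, path):
    """Assemble every record stored at or under `path` into a nested dict."""
    prefix = path.rstrip("/")
    tree = {}
    for key, value in sorted(records.items()):
        if key != prefix and not key.startswith(prefix + "/"):
            continue  # record lives outside the requested subtree
        node = tree
        for part in (p for p in key[len(prefix):].split("/") if p):
            node = node.setdefault(part, {})
        node["_val"] = value  # assumed convention for a path's own value
    return collapse(tree)


print(read(records, "/fruits/banana"))  # {'color': 'yellow', 'flavor': 'sweet'}
print(read(records, "/fruits"))
```

Replicating only a subtree, as described next, then amounts to shipping only the records whose key starts with a given prefix.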

It had replication between browser and server built in, and one could replicate just subtrees of the main tree, so you could have stuff like this on the server:

    { "users": { "bob": {}, "alice": {} } }

And then only allow Bob to replicate `/users/bob` and Alice to replicate `/users/alice`. I am sure the required auth stuff was also built in.

There was also a PouchDB plugin to make this process smoother and data access more intuitive (it would hide the `_val` stuff and allow properties to be accessed directly; today I wouldn't waste time working on these hidden magic things).

The computed properties complexity

The next step, which I never managed to get fully working and caused me to give it up because of the complexity, was the ability to automatically and dynamically compute materialized properties based on data in the tree.

The idea was partly inspired by CouchDB computed views and how limited they were. I wanted a thing that would be super powerful, like, given

    {
      "matches": {
        "1": { "team1": "A", "team2": "B", "score": "2x1", "date": "2020-01-02" },
        "2": { "team1": "D", "team2": "C", "score": "3x2", "date": "2020-01-07" }
      }
    }

one should be able to add a computed property at `/matches/standings` that computed the scores of all teams after all matches, for example.

I tried to complete this in multiple ways but they were all adding much more complexity than I could handle. Maybe it would have worked better in a more flexible, powerful and functional language, or if I had more time and patience, or more people.
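To make the standings example concrete, here is a minimal Python sketch of the value such a computed property would have to produce. It recomputes everything from scratch on each call, which sidesteps precisely the hard part (keeping the property up to date automatically as the tree changes). The function name `standings`, the parsing of scores like `"2x1"` and the 3-1-0 points rule are all assumptions made for illustration.

```python
def standings(matches, win=3, draw=1):
    """Compute points per team from a dict of match records."""
    table = {}
    for key, match in matches.items():
        if key == "standings":
            continue  # skip the computed property itself on recomputation
        goals1, goals2 = (int(g) for g in match["score"].split("x"))
        team1, team2 = match["team1"], match["team2"]
        table.setdefault(team1, 0)
        table.setdefault(team2, 0)
        if goals1 > goals2:
            table[team1] += win
        elif goals2 > goals1:
            table[team2] += win
        else:
            table[team1] += draw
            table[team2] += draw
    return table


tree = {
    "matches": {
        "1": {"team1": "A", "team2": "B", "score": "2x1", "date": "2020-01-02"},
        "2": {"team1": "D", "team2": "C", "score": "3x2", "date": "2020-01-07"},
    }
}

# Materialize the computed property next to the data it is derived from.
tree["matches"]["standings"] = standings(tree["matches"])
print(tree["matches"]["standings"])  # {'A': 3, 'B': 0, 'D': 3, 'C': 0}
```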

Screenshots

This is just one very simple unfinished admin frontend client view of the hierarchical dataset.

- https://github.com/fiatjaf/summadb

- https://github.com/fiatjaf/summuladb

- https://github.com/fiatjaf/pouch-summa

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

On "zk-rollups" applied to Bitcoin

ZK rollups make no sense in Bitcoin because there is no "cheap calldata". All data is already ~~cheap~~ expensive calldata.

There could be an onchain zk verification that allows succinct signatures maybe, but never a rollup.

What happens is: you can have one UTXO that contains multiple balances on it, and in each transaction you can recreate that UTXO but alter its state, using a zk proof to compress all the internal transactions that took place.

The blockchain must be aware of all these new things, so it is in no way "L2".

And you must have an entity responsible for that UTXO and for conjuring the state changes and zk proofs.

But on Bitcoin you also must keep the data necessary to rebuild the proofs somewhere else, and I'm not sure how the third party responsible for that UTXO can ensure that happens.

I think such a construct is similar to a credit card corporation: one central party upon which everybody depends, zero interoperability with external entities, and every vendor must have an account with each credit card company to be able to charge customers. Therefore it is not clear that such a thing is more desirable than solutions that are truly open and interoperable like Lightning, which may have its defects but at least fosters a much better environment, bringing together different conflicting parties, custodians, anyone.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Gerador de tabelas de todos contra todos (round-robin table generator)

I don't remember exactly when I did this, but I think a friend wanted to do software that would give him money over the internet without having to work. He didn't know how to program. He mentioned this idea he had which was some kind of football championship manager solution, but I heard it like this: a website that generated a round-robin championship table for people to print.

It is actually not obvious to anyone how to do it, it requires an algorithm that people will not reach casually while thinking, and there was no website doing it in Portuguese at the time, so I made this and it worked and it had a couple hundred daily visitors, and it even generated money from Google Ads (not much)!
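For the curious, a common way to build such a table is the "circle method": keep one team fixed, rotate all the others one position per round, and pair the first with the last, the second with the second-to-last, and so on. The sketch below is a generic Python version, not the site's original code; the function name `round_robin` and the use of `None` as a bye for an odd number of teams are assumptions.

```python
def round_robin(teams):
    """Return a list of rounds; each round is a list of (team, team) pairs."""
    teams = list(teams)
    if len(teams) % 2:
        teams.append(None)  # odd number of teams: None marks a bye
    n = len(teams)
    rounds = []
    for _ in range(n - 1):
        # pair opposite ends of the current ordering
        pairs = [(teams[i], teams[n - 1 - i]) for i in range(n // 2)]
        rounds.append([p for p in pairs if None not in p])
        # keep the first team fixed, rotate everyone else one position
        teams = [teams[0], teams[-1]] + teams[1:-1]
    return rounds


for number, matches in enumerate(round_robin(["A", "B", "C", "D"]), start=1):
    print(f"round {number}: {matches}")
```

With an even number of teams every team plays exactly once per round; with an odd number, one team rests in each round.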

First it was a Python web app running on Heroku, then Heroku started charging or limiting the amount of free time I could have on their platform, so I migrated it to a static site that ran everything on the client. Since I didn't want to waste my Python code that actually generated the tables I used Brython to run Python on JavaScript, which was an interesting experience.

In hindsight I could have just taken one of the many `round-robin` JavaScript libraries that exist on NPM, so eventually, after a couple more years, I did that.

I also removed Google Ads when Google decided it had so many requirements to send me the money that it was impossible, and then the money started to vanish.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

How being "flexible" can bloat a protocol

(A somewhat absurd example, but you'll get the idea)

Imagine some client decides to add support for a variant of nip05 that checks for values at /.well-known/nostr.yaml besides /.well-known/nostr.json. "Why not?", they think, "I like YAML more than JSON, this can't hurt anyone".

Then some user makes a nip05 file in YAML and it will work on that client, they will think their file is good since it works on that client. When the user sees that other clients are not recognizing their YAML file, they will complain to the other client developers: "Hey, your client is broken, it is not supporting my YAML file!".

The developer of the other client, astonished, replies: "Oh, I am sorry, I didn't know that was part of the nip05 spec!"

The user, thinking they are doing a good thing, replies: "I don't know, but it works on this other client here, see?"

Now the other client adds support. The cycle repeats now with more users making YAML files, more and more clients adding YAML support, for fear of providing a client that is incomplete or provides bad user experience.

The end result of this is that now nip05 unofficially requires support for both JSON and YAML files. Every client must now check for /.well-known/nostr.yaml too besides just /.well-known/nostr.json, because a user's key could be in either of these. A lot of work was wasted for nothing. And now, going forward, any new client will require double the work to implement.
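The cost shows up directly in client code. The Python sketch below only builds the lookup URLs a client would have to try for a nip05 identifier; the function name `nip05_urls` and the `formats` parameter are made up for illustration, and the YAML variant is the absurd hypothetical from this text, not anything that exists in the spec.

```python
from urllib.parse import quote


def nip05_urls(identifier, formats=("json",)):
    """Return the well-known URLs to query for an identifier like bob@example.com."""
    name, _, domain = identifier.partition("@")
    return [
        f"https://{domain}/.well-known/nostr.{fmt}?name={quote(name)}"
        for fmt in formats
    ]


# What the spec asks for today: one request, one parser.
print(nip05_urls("bob@example.com"))

# What every client ends up doing once the "flexible" variant spreads:
# two requests and two parsers for every single verification.
print(nip05_urls("bob@example.com", formats=("json", "yaml")))
```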

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

The problem with ION

ION is a DID method based on a thing called "Sidetree".

I can't say for sure what the problem with ION is, because I don't understand the design, even though I have read all I could and asked everybody I knew. All available information only touches on the high-level aspects of it (and of course its amazing wonders) and no one has ever bothered to explain the details. I've also asked the main designer of the protocol, Daniel Buchner, but he may have thought I was trolling him on Twitter and refused to answer, instead pointing me to an incomplete spec on the Decentralized Identity Foundation website that I had already read before. I even tried to join the DIF as a member so I could join their closed community calls and hear what they say, maybe eventually ask a question, so I could understand it, but my application was ignored, then after many months and a nudge from another member I was told I had to do a KYC process to be admitted, which I refused.

What I know is this:

- ION is supposed to provide a way to rotate keys seamlessly and automatically without losing the main identity (and the ION proponents also claim there are no "master" keys because these can also be rotated).

- ION is also not a blockchain, i.e. it doesn't have a deterministic consensus mechanism and it is decentralized, i.e. anyone can publish data to it, doesn't have to be a single central server, there may be holes in the available data and the protocol doesn't treat that as a problem.

- From all we know about years of attempts to scale Bitcoin and develop offchain protocols, it is clear that you can't solve the double-spend problem without a central authority or a kind of blockchain (i.e. a decentralized system with deterministic consensus).

- Key rotation also suffers from the double-spend problem: whenever you rotate a key it is as if it was "spent"; you aren't supposed to be able to use it again.

The logical conclusion of the 4 assumptions above is that ION is flawed: it can't provide the key rotation it says it can if it is not a blockchain.
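The argument can be illustrated with a toy model. This is not ION's or Sidetree's actual data format (which, as said, I don't understand); it is only a Python sketch, with made-up names, of what goes wrong when rotation operations have no globally agreed order: two observers who receive the same two operations in different orders end up believing in different keys.

```python
def current_key(genesis_key, operations):
    """Apply rotations in the order seen; only the current key may rotate."""
    key = genesis_key
    for signed_by, new_key in operations:
        if signed_by == key:
            key = new_key  # the old key is now "spent"
    return key


# Two conflicting rotations, both validly signed by key0:
honest = ("key0", "key1")  # the owner rotating to a new key
thief = ("key0", "evil")   # someone who got hold of the old key0

node_a = current_key("key0", [honest, thief])
node_b = current_key("key0", [thief, honest])

print(node_a, node_b)  # key1 evil
```

A central server or a blockchain fixes this by imposing a single ordering on the two operations; without one of those, nothing tells `node_a` and `node_b` which rotation came first.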

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: Custom multi-use database app

Since 2015 I have had this idea of making one app that could be repurposed into a full-fledged app for all kinds of uses, like powering small businesses' accounts and so on. As hackable and open as an Excel file, but more efficient, without the hassle of making tables, and also using ids and indexes under the hood so different kinds of things can be related together in various ways.

It is not a concrete thing, just a generic idea that has taken multiple forms over the years and may take others in the future. I've made quite a few attempts at implementing it, but never finished any.

I used to refer to it as a "multidimensional spreadsheet".

Can also be related to DabbleDB.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

rosetta.alhur.es

A service that grabs code samples from two chosen languages on RosettaCode and displays them side-by-side.

The code-fetching is done in real time and snippet-by-snippet (there is also a prefetch of which snippets are available in each language, so we only compare apples to apples).

This was my first Golang web application if I remember correctly.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: a website for feedback exchange

I thought a community of people sharing feedback on mutual interests would be a good thing, so as always I broadened and generalized the idea and mixed with my old criticue-inspired idea-feedback project and turned it into a "token". You give feedback on other people's things, they give you a "point". You can then use that point to request feedback from others.

This could be made as an Etleneum contract so these points could be exchanged for satoshis using the shitswap contract (yet to be written).

In this case all the Bitcoin/Lightning side of the website must be hidden until the user has properly gone through the usage flow and earned points.

If it were to be built on Etleneum, it would need to emphasize the login/password login method instead of the lnurl-auth method. And then maybe it could be used to push lnurl-auth to normal people, but with a different name.

-

@ f977c464:32fcbe00

2024-01-11 18:47:47

On the day he first saw himself in a mirror, he understood that he was just like the others. Yet every human being was supposed to be unique. For instance, when he got angry, one eyebrow might furrow a few millimeters less than the other, or when he was happy, his lip might curl at an angle all his own. And if none of that was possible, then at the very least a light that only he could possess might shine in his eyes. Any one of those millions of tiny things, so ordinary that nobody ever notices them. Anything at all.

But looking at his reflection he found none of these, and on that day, which had begun no differently from any other, in an ordinary metro station where he was waiting for his car to arrive to take him to work, the illusion he had been lost in began to dissolve.

First his skin fell away. It did not exactly fall, really; rather it turned into sparks and flew off his body, and a moment later went out, turned to ash and scattered in the air. And in its wake it left, faintly visible for a brief moment just before they vanished, the colorful corpses of fairies lamenting the extinction of a soul. Contrary to what one would expect, the smell of dust spread through the air.

He was horrified, of course. They were horrified. Fifty workers tearing at their clothes in panic. And the cause of it all was that car.

His hair fell out too. Each strand, before reaching the ground, vanished by splitting in two at every centimeter.

In a world where every surface is matte, where nothing reflects, where water runs black and you can look at yourself only through cameras: seeing yourself for the first time in a mirror placed inside the car.

The whites of his eyes evaporated and scattered in the air; his lenses melted and spread to fill the emptied space. Eyes created not to see the truth, and therefore not ready to see it, and never to be.

One might have thought everything ended at that moment. A deep darkness, and death. The end of that moment in which seeing was seeing.

They were dead when I arrived.

I mean, they had broken down.

I couldn't plug their memory units into new carriers. Physically they were in perfect condition, and I could have repaired the ones that weren't, but in all that commotion they had reprogrammed themselves and modified their inputs.

He flung one of the memory units forward across the table. They were sitting in a packed bar. He and his friend.

The things we do just so these androids who think they're human don't get traumatized and go mad; it's beyond belief.

He gestured behind him with his hand.

According to the police, someone put a mirror inside the car. And these poor things lost their minds when the door opened and they suddenly saw their reflections.

His friend asked how all this made him feel. That is, hadn't it shaken him to see so many broken androids, who thought they were human, lying on the ground having torn themselves apart?

No. In the end they're things created for a specific purpose. When a good computer of mine breaks I get upset, because I paid for it. These belong to the state. What's it to me?

His friend nodded understandingly and took a sip of his water. He loosened his tie a little.

Are you sure you don't want a beer?

He said he didn't. Come to think of it, why did these androids go mad?

Simple. They write something in when they code their artificial intelligence. The software people. You know, I'm in hardware. And so these things think they're human. Look at them.

He lowered his voice.

They all look like crash-test dummies. They don't even have mouths or noses, but that one over there, for instance, has been grooming his beard ever since we got here. And the thing is, all the others think he has a beard too; that's the crazy part.

His friend said he couldn't see what this had to do with their going mad. So he went on talking in his normal voice.

Don't you get it? Their lenses can't make out mirrors and the like. Bam, they see themselves. Just like that, as they are...

And why is that? What's the need for it?

How should I know, man! These are like Judgment Day questions.

He drifted off, staring at his beer. Then, leaning on the table, he moved in close to his friend. A blurry man of indistinct shape, like someone at the far end of a tunnel.

How do I even know you, damn it? Who are you?

They removed the memory unit from the machine. There were two of them. The officers in charge of the investigation.

─ Are we starting over? asked the first officer, who was holding the memory unit.

─ Let's try once more, but this time start by asking about the mirror directly, replied the second officer.

─ I agree. It's working well enough.

While the simulation loaded, the second officer, standing a little way back and scratching his forehead, couldn't help asking:

─ Why did they send these androids to a scene like that anyway? It was obvious they'd get fried. A waste. If we had gone and looked ourselves, they wouldn't have had to smash the mirror and ruin the evidence either.

The other officer was about to turn slightly in his chair when the technician answered the question through the computer's speaker.

Is there anything we do that isn't crooked, man?

But it was not an end. When all the illusion covering them had dissolved and they were left with their naked, sexless, identical bodies, it was the world's turn.

They fell to the ground. The moment their hands (of black tourmaline like the rest of the body, with knuckles of steel) touched the floor, the floor of the metro disintegrated.

The floor tiles had been white and very shiny before. They reflected the light of the fluorescent lamps overhead just as it was, illuminating a station without a single stain, without a single speck of dust in the air.

Notices had been hung on the walls. For example, there was the cheerful poster of a techno blues festival starting at 20:00 tomorrow evening at the cultural center. Next to it, wider, written in yellow letters and framed with horizontal black dashes, the warning “Caution! Do not cross the yellow line!” with a stick figure falling off a platform. A little further on, the daily official gazette; beyond that, the posters of an action film and of a romantic comedy; various notices in small print saying what to do and what not to do... The wall was one long noticeboard stretching on and on. It repeated every ten or fifteen meters.

The whole station was about a hundred meters long. Around ten meters wide.

In front of them stood the car, that wretched mirror visible through its open door. The train was too long to fit in the station. It stretched out with the sharpness of a sword, broken here and there by the joints between the cars.

Since none of the cars had windows, the inside of the train, and whoever was in it, was unknown.

Then the tiles broke into particles and rose. In the fluorescent light they choked everything in dust and buried the place under a gray fog. For a very brief moment. They did not make the posters flutter. They had no time to. At most they tore them from their places. The light resisted, flickering off and on a few times. When it went out for the last time, it did not come back.

Still, the surroundings were lit. With a pale red light that spread evenly everywhere.

The floor had turned entirely into wire mesh. Beneath it, an iron skeleton braced with diagonal struts. The light could not reach more than a few meters down. An abyss going to infinity.

The wall had been replaced by the same mesh and iron skeleton. Behind it, a corridor made of iron plates screwed together, where thin wisps of steam sometimes escaped from the joints of the pipes running overhead and, after hanging for a while, drifted off on the heavy, oily air.

On the other side, a rusted, ramshackle train, its windows covered with iron plates because the glass had broken. From the mirror facing the door, everything was reflected just as it was.

In a house resembling the inside of a shipping container (or rather, since “resembling” would hardly be the right word in a city made entirely of containers joined together, plainly inside a container), he was trying to light the lumps shaped from semi-solid grease waste that had been placed on the table to pass for candles. On his head, a gray-and-black wig made of animal hair. From the same hair he had also made himself a thick mustache.

He wore an elegant suit, with a tie, made of blue garbage bags.

In place of table legs there were parts salvaged from here and there: a car's driveshaft, tin cans stacked on top of each other with no legible writing on them, blank books, bales of blank newspapers... Nothing was ever written on anything, and there was no need anyway, because the central data bank filled them in for the humans, imperceptibly, by synthesizing the data coming in through the lenses. For the androids, that is. If calling them something different makes any difference.

Not for their lenses, though. Their connection had been severed long ago.

─ Darling, dinner is ready, he called to his wife in the bedroom.

On the table, flat plates of metal instead of dishes, bent ones instead of glasses, sharpened ones instead of forks and knives.

His wife paused at the door of the living room and with her hand adjusted her wig, which reached only to her ears and, like her husband's, consisted of the lifeless hair of dead animals. She had tried to color her lips, or rather the place where her lips should have been, with a layer of dark red grease. She had put a little on her cheeks as well.

─ How do I look? she asked.

Her voice was monotone, but you could have sworn you sensed a faint cheerfulness in it.

She wore a two-piece outfit she had made by stuffing garbage bags with blank newsprint.

─ You look beautiful, said her husband, straightening his tie.

─ So do you, my love.

She came closer and kissed her husband. And he kissed her. Then, gently taking her hand and pulling back her chair, he helped her sit down.

There was nothing on the table by way of food. There was no need for any, either.

Until the container's door was kicked in with a crash and two officers came in, they told each other stories. What they had done that day. How they had left work early and strolled on lush green grass and flown kites; how the woman had worn herself out walking for hours to find that dress; how her husband had gone back to work briefly and resolved the crisis there with one deft move; and how, after he had rejoined her, delicious the ice cream was at the new ice-cream parlor where they sat in the shopping mall; how they worried about getting sore throats...

In the evening they could watch a film; a good one would be playing on the television (a blank, matte plate).

Two officers. Identical to one another in their naked bodies. They had their weapons trained on them. They faltered when they saw the couple sitting by candlelight at a table laid with a spotless cloth, wine in their glasses, the turkey in the middle of the table still untouched.

They did not look at all like people who could knowingly harm androids.

─ You have the right to remain silent, shouted the second officer to enter. Anything you say...

He could not finish his sentence. That thing in the bedroom, the one he saw across the table, the android making the same movements as he did: was it, perhaps, his reflection in a mirror?

The whole illusion began to dissolve at that moment.

Note: This story was first published in 2020 in Esrarengiz Hikâyeler.

-

@ a023a5e8:ff29191d

2024-01-06 20:47:50

What are all the side incomes you earn other than your regular income? I know these questions are not always easily answered, but by sharing yours, you may be providing someone with a regular income that you see for yourself as a side income or a passive income. You know this world, and Bitcoin is sufficient for everyone. Let this be a fruitful discussion for many, including me. 2023 was fine, and 2024 is already here.

I will be bookmarking this thread.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

bolt12 problems

- clients can't programmatically build new offers by changing a path or query params (services like zbd.gg or lnurl-pay.me won't work)

- impossible to use in a load-balanced custodian way -- since offers would have to be pregenerated and tied to a specific lightning node.

- the existence of fiat currency fields makes it so wallets have to fetch exchange rates from somewhere on the internet (or offer a bad user experience), using HTTP which hurts user privacy.

- the vendor field is misleading, can be phished very easily, not as safe as a domain name.

- onion messages are an improvement over fake HTLC-based payments as a way of transmitting data, for sure. but we must decide if they are (i) suitable for transmitting all kinds of data over the internet, a replacement for tor; or (ii) not something that will scale well or on which we can count for the future. if there was proper incentivization for data transmission it could end up being (i), the holy grail of p2p communication over the internet, but that is a very hard problem to solve and not guaranteed to yield the desired scalability results. since not even hints of attempting to solve that are being made, it's safer to conclude it is (ii).
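To illustrate the first point in the list above: with URL-based payment requests, a client can derive a new endpoint just by manipulating strings. The Python sketch below assumes the usual LNURL-pay conventions (a lightning address resolving to `/.well-known/lnurlp/<user>`, and an `amount` query parameter in millisatoshis on the callback); the function names and the example.com addresses are made up for illustration. A bolt12 offer, being an opaque signed blob tied to a node, gives a client nothing comparable to recombine.

```python
from urllib.parse import urlencode


def lnurlp_endpoint(lightning_address):
    """Derive the LNURL-pay endpoint for an address like alice@example.com."""
    user, _, domain = lightning_address.partition("@")
    return f"https://{domain}/.well-known/lnurlp/{user}"


def invoice_request_url(callback, amount_msat):
    """Append the amount to a callback URL, respecting an existing query string."""
    separator = "&" if "?" in callback else "?"
    return f"{callback}{separator}{urlencode({'amount': amount_msat})}"


# A new payee is just a new path; a new amount is just a new query param.
print(lnurlp_endpoint("alice@example.com"))
print(invoice_request_url("https://example.com/pay/alice", 21000))
```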

bolt12 limitations

- not flexible enough. there are some interesting fields defined in the spec, but who gets to add more fields later if necessary? very unclear.

- services can't return any actionable data to the users who paid for something. it's unclear how business can be conducted without an extra communication channel.

bolt12 illusions

- recurring payments are not really solved, it is just a spec that defines intervals. the actual implementation must still be done by each wallet and service. the recurring payment cannot be enforced, the wallet must still initiate the payment. even if the wallet is evil and is willing to initiate a payment without the user knowing, it still needs to have funds, channels, be online, connected etc., so it's not as if the services could rely on the payments being delivered in time.

- people seem to think it will enable pushing payments to mobile wallets, which it does not and cannot.

- there is a confusion of contexts: it looks like offers are superior to lnurl-pay, for example, because they don't require domain names. domain names, though, are common and well-established among internet services and stores, because these services have websites, so this is not really an issue. it is an issue, though, for people that want to receive payments in their homes. for these, indeed, bolt12 offers a superior solution -- but at the same time bolt12 seems to be selling itself as a tool for merchants and service providers when it includes and highlights features such as recurring payments and refunds.

- the privacy gains for the receiver that are promoted as being part of bolt12 in fact come from a separate proposal, blinded paths, which should work for all normal lightning payments and indeed are a very nice solution. they are (or at least were, and should be) independent from the bolt12 proposal. a separate proposal, which can be (and already is being) used right now, also improves privacy for the receiver very much anyway, it's called trampoline routing.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Thoughts on Nostr key management

In "Why I don't like NIP-26 as a solution for key management" I talked about multiple techniques that could be used to tackle the problem of key management on Nostr.

Here are some ideas that work in tandem:

- NIP-41 (stateless key invalidation)

- NIP-46 (Nostr Connect)

- NIP-07 (signer browser extension)

- Connected hardware signing devices

- other things like musig or frostr keys used in conjunction with a semi-trusted server; or other kinds of trusted software, like a dedicated signer on a mobile device that can sign on behalf of other apps; or even a separate protocol that some people decide to use as the source of truth for their keys, and some clients might decide to use that automatically

- there are probably many other ideas

Some premises I have in mind (that may be flawed), which underlie my thoughts on these matters (and cause me to not worry too much), are that

- For the vast majority of people, Nostr keys aren't a target as valuable as Bitcoin keys, so they will probably be ok even without any solution;

- Even when you lose everything, identity can be recovered -- slowly and painfully, but still --, unlike money;

- Nostr is not trying to replace all other forms of online communication (even though when I think about this I can't imagine one thing that wouldn't be nice to replace with Nostr) or of offline communication, so there will always be ways.

- For the vast majority of people, losing keys and starting fresh isn't a big deal. It is a big deal when you have followers and an online persona and your life depends on that, but how many people are like that? In the real world I see people deleting social media accounts all the time and creating new ones, people losing their phone numbers or other accounts associated with their phone numbers, and not caring very much -- they just find a way to notify friends and family and move on.

We can probably come up with some specs to ease the "manual" recovery process, like social attestation and explicit signaling -- i.e., Alice, Bob and Carol are friends; Alice loses her key; Bob sends a new Nostr event kind to the network announcing Alice's new key; depending on how much Carol trusts Bob, she can automatically start following the new key and remove the old one -- or something like that.

One nice thing about some of these proposals, like NIP-41, or the social-recovery method, or the external-source-of-truth-method, is that they don't have to be implemented in any client, they can live in standalone single-purpose microapps that users open or visit only every now and then, and these can then automatically update their follow lists with the latest news from keys that have changed according to multiple methods.
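The social-attestation idea above could be sketched roughly as follows. This is only an illustration under stated assumptions: the `Attestation` record, the trust scores and the `threshold` parameter are hypothetical and do not correspond to any existing NIP or event kind, and signature verification of the attestation events is left out entirely.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Set


@dataclass(frozen=True)
class Attestation:
    """Hypothetical 'key changed' claim published by a friend (e.g. Bob)."""
    attester: str  # pubkey of the person making the claim
    old_key: str   # the key that was lost
    new_key: str   # the key the attester says replaces it


def resolve_new_key(old_key: str,
                    attestations: Iterable[Attestation],
                    trust: Dict[str, float],
                    threshold: float = 1.0) -> Optional[str]:
    """Return the replacement key if trusted attesters back it strongly enough."""
    scores: Dict[str, float] = {}
    seen = set()
    for a in attestations:
        # Ignore claims about other keys and duplicate claims by the same attester.
        if a.old_key != old_key or (a.attester, a.new_key) in seen:
            continue
        seen.add((a.attester, a.new_key))
        scores[a.new_key] = scores.get(a.new_key, 0.0) + trust.get(a.attester, 0.0)
    if not scores:
        return None
    best = max(scores, key=lambda k: scores[k])
    return best if scores[best] >= threshold else None


def update_follows(follows: Set[str], old_key: str, new_key: str) -> Set[str]:
    """Replace the old key with the new one in a follow list."""
    return {new_key if k == old_key else k for k in follows}


# Carol fully trusts Bob, so a single attestation from him is enough here.
claims = [Attestation("bob", "alice_old", "alice_new")]
new_key = resolve_new_key("alice_old", claims, {"bob": 1.0})
if new_key is not None:
    print(update_follows({"alice_old", "dave"}, "alice_old", new_key))
```

A standalone microapp of the kind described above could run something like this every now and then and write the updated follow list back, without any client having to implement it.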

-

@ 6bd128bf:bb9002f8

2024-01-04 15:07:13

Disclaimer: This article came about because I wondered how the seed phrases we all write down are actually generated. So I read BIP-39, which covers generating a seed phrase (mnemonic code) that is then used to create a seed, and wrote up a summary of my understanding to organize what I learned, in case it is useful to others who have the same question. If I have misunderstood anything, feel free to let me know.

Why BIP-39?

BIP-39 exists because humans can write down or remember words more easily than data in binary or hexadecimal form. It was therefore proposed to create a mnemonic code, or what we usually call a seed phrase. The proposal consists of 2 parts: generating the seed phrase, and converting the seed phrase into a seed that can then be used to create private and public keys.

How is a seed phrase generated?

A seed phrase must be generated from computer data called entropy, whose number of bits is divisible by 32 and lies between 128 and 256 bits. The more bits the entropy has, the greater the security, but also the more words in the phrase. The steps for generating a seed phrase are as follows.

Step - 1

Generate random entropy whose length is between 128 and 256 bits and divisible by 32. From here on, this number of bits is called ENT.

Example entropy with ENT equal to 128 bits:

    00110010010101010111100101001011001101100100101001000100001100010111001101110100001110010011011101000101011010100101000101010011

Step - 2

Compute the checksum bits, whose number of bits equals ENT divided by 32, by hashing the entropy with the SHA256 algorithm and keeping only as many leading bits of the result as required.

I take the entropy from the previous step and compute

    sha256_hashing(entropy)

The result is 01100111......... and the first (128 / 32) = 4 bits are 0110. From here on, the length of the checksum bits is called CS.

Step - 3

Append the checksum bits to the end of the entropy, giving a group of bits of length ENT + CS.

I append the first 4 bits from the previous step to the 128-bit entropy as follows:

    "00110010010101010111100101001011001101100100101001000100001100010111001101110100001110010011011101000101011010100101000101010011" + "0110"

Step - 4

Split the bits into subgroups of 11 bits each, which yields (ENT + CS) / 11 groups. With 128 bits of entropy, that gives (128 + 4) / 11 = 12 groups.

My groups are as follows:

    00110010010 10101011110 01010010110 01101100100 10100100010 00011000101 11001101110 10000111001 00110111010 00101011010 10010100010 10100110110

Step - 5

Convert each subgroup to a decimal number, which ranges from 0 to 2047, and look it up by index in the wordlist defined in BIP-39. The result is a seed phrase that can then be used to create the seed.

Mapping the decimal numbers to the wordlist, I get this seed phrase:

    00110010010 => 402 = crane
    10101011110 => 1374 = profit
    01010010110 => 662 = fan
    01101100100 => 868 = hold
    10100100010 => 1314 = picture
    00011000101 => 197 = board
    11001101110 => 1646 = soccer
    10000111001 => 1081 = mango
    00110111010 => 442 = dance
    00101011010 => 346 = clip
    10010100010 => 1186 = nephew
    10100110110 => 1334 = plug

Wordlist

The wordlist defined by BIP-39 has the following characteristics:

- Words are chosen so that typing just the first 4 letters is enough to identify the word

- Similar-looking words are avoided; for example, the wordlist contains "build" but not "built", because the two could be confused

- Words are sorted alphabetically so they are easy to find

- The characters in the words can come from any language, but they must be UTF-8 encoded; I think English is probably the easiest to remember

Entropy bits and resulting word count

- 128 bits of entropy has a 4-bit checksum and gives a 12-word seed phrase

- 160 bits of entropy has a 5-bit checksum and gives a 15-word seed phrase

- 192 bits of entropy has a 6-bit checksum and gives an 18-word seed phrase

- 224 bits of entropy has a 7-bit checksum and gives a 21-word seed phrase

- 256 bits of entropy has an 8-bit checksum and gives a 24-word seed phrase
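The five steps above can be sketched in a few lines of Python. This is a minimal illustration, not a wallet implementation: the function names `entropy_to_indices` and `word_count` are my own, the entropy is the example value from Step 1, and the lookup in the actual BIP-39 wordlist is left out, so the output is the list of word indices rather than the words themselves.

```python
import hashlib


def entropy_to_indices(entropy_bits: str) -> list:
    """Turn an entropy bit string into BIP-39 wordlist indices (steps 1 to 5)."""
    ent = len(entropy_bits)
    if ent % 32 != 0 or not 128 <= ent <= 256:
        raise ValueError("ENT must be a multiple of 32 between 128 and 256")
    # Step 2: hash the entropy bytes with SHA256 and keep the first ENT/32 bits.
    entropy_bytes = int(entropy_bits, 2).to_bytes(ent // 8, "big")
    digest = hashlib.sha256(entropy_bytes).digest()
    digest_bits = "".join(f"{byte:08b}" for byte in digest)
    cs = ent // 32
    # Step 3: append the checksum bits to the entropy.
    bits = entropy_bits + digest_bits[:cs]
    # Steps 4 and 5: split into 11-bit groups and read each one as a number.
    return [int(bits[i:i + 11], 2) for i in range(0, len(bits), 11)]


def word_count(ent: int) -> int:
    """Number of words for a given entropy length: (ENT + CS) / 11."""
    return (ent + ent // 32) // 11


# Example entropy from Step 1, written in 16-bit chunks for readability.
ENTROPY = (
    "0011001001010101" "0111100101001011" "0011011001001010" "0100010000110001"
    "0111001101110100" "0011100100110111" "0100010101101010" "0101000101010011"
)

indices = entropy_to_indices(ENTROPY)
print(indices)
print([word_count(e) for e in (128, 160, 192, 224, 256)])
```

The first eleven indices depend only on the entropy and should match the mapping in Step 5 (402, 1374, 662, ...). The last index also depends on the checksum bits; the article reports 1334 for it.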

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Sun and Earth

The Earth does not revolve around the Sun. Everything depends on the point of reference, and there is no absolute point of reference. It is only better to say that the Earth revolves around the Sun because there are other planets making analogous movements, and so it becomes easier for everyone to understand the movements by taking the Sun as the point of reference.

-

@ 8fb140b4:f948000c

2024-01-02 08:43:31

After my second attempt at running a Lightning Network node went wrong, mainly due to my own mistake and LND's bad CLI, I decided to look deeply into alternative implementations of the Lightning node software. Eclair was my final choice, driven by multiple reasons that I will discuss in this write-up. The main reason I even considered Eclair, despite my dislike of Java and the JVM, was that one of the largest Lightning nodes on the network is ACINQ, and they are the company behind the implementation and maintenance of this open source software.

I first noticed them after reading their blog post about how they run a $100M Lightning node and the level of care and thought they put into the whole implementation. Lightning is used to transfer large quantities of value across the internet, and I would only trust something well designed and actively maintained by the people who have the most to lose if they make a wrong choice.

Eclair in itself is not a complex implementation of the Lightning Network standards, and it is highly modular, which allows for an easy segregation of duties among the different components of the node. One of the biggest selling points for me was their crash-only software approach, which guarantees consistency of the state regardless of what happens to the running software. By extension, to shut down the node, you simply kill the process and start it again. It doesn’t matter what transactions were in-flight, or what stage they were in. This is huge, since anything can happen to a running node (e.g., failed disk, failed RAM, CPU, kernel panic, etc.)

Getting back to the architecture, Eclair is simple and elegant in design and implementation. The node is separated into three main components: eclair-core, eclair-node, and eclair-front. All of the entities are sandboxed actors (e.g., peer, channel, payment), which allows for scaling across CPUs and for containing faults. This ensures high availability and security.

Clustering is also an option and can be achieved by migrating from the single node to a multi-server node. There is no need to dive into complexities of that, and by the time you need to scale, I am sure you’ll be able to figure it out.

A simple and robust API is yet another major reason, as it keeps the node simple and fast. One downside of the API is that it is protected by a single password and is not designed for RBAC (Role Based Access Control). One solution could be another API wrapper that implements something similar to Runes or Macaroons, which should not be a challenge considering the simplicity of the REST API.
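To make the single-password point concrete, here is a minimal sketch of how a request to the Eclair API could be built in Python. It assumes the API's usual conventions as I understand them (POST requests, HTTP basic auth with an empty username, default port 8080); the helper name `build_eclair_request`, the password `changeme` and the host are placeholders, and the request is only constructed, not sent.

```python
import base64
import urllib.request


def build_eclair_request(method: str, password: str,
                         host: str = "http://localhost:8080") -> urllib.request.Request:
    """Build (but do not send) a POST request for an Eclair API method."""
    # Basic auth with an empty username: the credentials are ":<password>".
    token = base64.b64encode(f":{password}".encode()).decode()
    return urllib.request.Request(
        f"{host}/{method}",
        data=b"",        # the API expects POST, even for calls without parameters
        method="POST",
        headers={"Authorization": f"Basic {token}"},
    )


req = build_eclair_request("getinfo", "changeme")
print(req.get_method(), req.full_url)
# Against a running node you would send it with:
#   urllib.request.urlopen(req).read()
```

Since every caller presents the same password, anything that can call `getinfo` can also call every other method, which is why a wrapper issuing scoped credentials would be needed for role-based access.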

On-the-fly HTLC max size adjustment, which will prevent your node accepting forwarded payments that would fail due to lack of liquidity on your side. This also makes routing better for the rest of the lightning network, but may “leak” your channel balances if not done right.

Experimentation and adjustment of path-finding algorithm. I am not there yet myself, but I see this as a great option in the future if I need it. This will allow me to make my own choices how I want to route payments and what parameters I would use to determine the best path.

Full production support of PostgreSQL server. Not only is Eclair itself not a beta release, unlike LND, but it also has full production support for a very reliable and battle-tested database as its backend data storage. You are able to (and should) run an Active/Passive PostgreSQL cluster in synchronous mode, and ensure that all of the data written by the node is backed up in real time. This removes the worry of a corrupted database, which I have seen happen all too often.

Excellent monitoring and metrics, that can be collected by Prometheus and viewed in Grafana. Eclair provides template dashboards that you can import into Grafana to make your life easier. You can also use Kamon (external service) where you could send the metrics and monitor your node.

Support for all common networking protocols, as well as SOCKS5.

Last, but not least, support for plug-ins. Even if you are not well versed in writing plug-ins, you could take some of the available ones and modify them to your liking.

There are many more features and limitations that I didn’t mention, but you can explore them yourself here.

One downside that you should consider is the limited availability of ready-made tools. So far I have found that Ride-The-Lightning works well; LNBits works, but I have yet to see if it is reliable; BTCPayServer has support, but I failed to use it with the API directly and was only able to use it via LNBits.

Lightning is still reckless, but nothing stops you from doing it carefully and reliably. Good luck and happy node-running! 🐶🐾🫡⚡️

My Node - RAϟKO

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

IPFS problems: Community

I was an avid IPFS user until yesterday. Many, many times I asked simple questions, for which I couldn't find an answer on the internet, in the #ipfs IRC channel on Freenode. Most of the time I didn't get an answer, and even when I did it was rarely from someone who knew IPFS deeply. I've had issues go unanswered on js-ipfs repositories for years – one of these was raising awareness of a problem that then got fixed some months later by a complete rewrite; I closed my own issue after realizing that by myself a couple of months later, and I don't think the people responsible for the rewrite ever acknowledged that they had fixed my issue.

Some days ago I asked some questions about how the IPFS protocol worked internally, sincerely trying to understand the inefficiencies in finding and fetching content over IPFS. I pointed out it would be a good idea to have a drawing showing that, so people would understand the difficulties (which I didn't) and wouldn't be pissed off by the slowness. I was told to read the whitepaper. I had already read the whitepaper, but I read the relevant parts again. The whitepaper doesn't explain anything about the DHT and how IPFS finds content. I said that in the room and was told to read it again.

Before anyone misreads this section, I want to say I understand it's a pain to keep answering people on IRC if you're busy developing stuff of interplanetary importance, and that I'm not paying anyone, nor do I have the right to be answered. On the other hand, if you're developing a super-important protocol, financed by many millions of dollars, and a lot of people are hitting their heads against your software and there's no one to help them; if you're always busy but never deliver anything that brings joy to your users, something is very wrong. I sincerely don't know what IPFS developers are working on, I wouldn't doubt they're working on important things if they said that, but what I see – and what many other users see (take a look at the IPFS Discourse forum) – is bugs, bugs all over the place, confusing UX, and almost no help.

-

@ 8fb140b4:f948000c

2023-12-30 10:58:49

Disclaimer: this tutorial may have a real financial impact on you; follow at your own risk.

Step 1: execute

    lncli closeallchannels

That’s it, that was as easy as it could be. Now all your node’s channels are in the process of being closed and all your liquidity is being moved to your onchain wallet. The best part, you don’t even need to confirm anything, it just does it in one go with no questions asked! You can check the status by using a very similar looking command:

    lncli closedchannels

Good job! Now you are ready to start from scratch and use any other reasonable solution!

🐶🐾🫡🤣🤣🤣

Disclaimer: this is a satirical tutorial that will 💯 cost you a lot of funds and headaches.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

A list of things artificial intelligence is not doing

If AI is so good why can't it:

- write good glue code that wraps a documented HTTP API?

- make good translations using available books and respective published translations?

- extract meaningful and relevant numbers from news articles?

- write mathematical models that fit perfectly to available data better than any human?

- play videogames without cheating (i.e. simulating human vision, attention and click speed)?

- turn pure HTML pages into pretty designs by generating CSS

- predict the weather

- calculate building foundations

- determine stock values of companies from publicly available numbers

- smartly and automatically test software to uncover bugs before releases

- predict sports matches from the ball and the players' movement on the screen

- continuously improve niche/local search indexes based on user input and reaction to results

- control traffic lights

- predict sports matches from news articles, and teams and players' history

This was posted first on Twitter.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

lnurl-auth explained

You may have seen the lnurl-auth spec or heard about it, but might not know how it works or what its relationship with other lnurl protocols is. This document attempts to solve that.

Relationship between lnurl-auth and other lnurl protocols

First, what is the relationship of lnurl-auth with other lnurl protocols? The answer is none, except the fact that they all share the lnurl format for specifying https URLs.

In fact, lnurl-auth is very unique in the sense that it doesn't even need a Lightning wallet to work, it is a standalone authentication protocol that can work anywhere.

How does it work

Now, how does it work? The basic idea is that each wallet has a seed, which is a random value (you may think of the BIP39 seed words, for example). Usually from that seed different keys are derived, each of these yielding a Bitcoin address, and also from that same seed may come the keys used to generate and manage Lightning channels.

What lnurl-auth does is to generate a new key from that seed, and from that a new key for each service (identified by its domain) you try to authenticate with.

That way, you effectively have a new identity for each website. Two different services cannot associate your identities.

The flow goes like this: When you visit a website, the website presents you with a QR code containing a callback URL and a challenge. The challenge should be a random value.

When your wallet scans or opens that QR code, it uses the domain in the callback URL plus the main lnurl-auth key to derive a key specific to that website, uses that key to sign the challenge, and then sends both the public key specific to that website and the signed challenge to the specified URL.

When the service receives the public key, it verifies the challenge signature against it and starts a session for that user. The user is then identified only by their public key. If the service wants, it can of course request more details from the user or associate the key with an internal id or username; it is free to do anything. lnurl-auth's goals end here: no passwords, maximum possible privacy.
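The per-service key derivation described above can be illustrated with a small sketch. This is a simplification, not the derivation scheme from the lnurl-auth spec: it assumes the wallet already holds some 32-byte `auth_master_key` derived from its seed and uses HMAC-SHA256 over the callback domain as the site-specific secret. The function name and the example URLs are hypothetical, and the secp256k1 signing of the challenge is omitted because it needs a library outside the standard library.

```python
import hashlib
import hmac
from urllib.parse import urlparse


def site_key_material(auth_master_key: bytes, callback_url: str) -> bytes:
    """Derive 32 bytes of key material that depend only on the callback domain."""
    domain = urlparse(callback_url).hostname
    if domain is None:
        raise ValueError("callback URL has no domain")
    return hmac.new(auth_master_key, domain.encode(), hashlib.sha256).digest()


# Stand-in for a key the wallet would derive from its seed.
auth_master_key = hashlib.sha256(b"example seed, not a real one").digest()

shop = site_key_material(auth_master_key, "https://shop.example/login?k1=abc")
shop_again = site_key_material(auth_master_key, "https://shop.example/other?k1=def")
phisher = site_key_material(auth_master_key, "https://sh0p.example/login?k1=abc")

print(shop == shop_again)  # same domain, same identity
print(shop == phisher)     # different domain, unrelated identity
```

Because the key depends only on the domain, a returning user is recognized across sessions, while a lookalike domain ends up with a key that is useless on the real site.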

FAQ

-

What is the advantage of tying this to Bitcoin and Lightning?

One big advantage is that your wallet is already keeping track of one seed, it is already a precious thing. If you had to keep track of a separate auth seed it would be arguably worse, more difficult to bootstrap the protocol, and arguably one of the reasons similar protocols, past and present, weren't successful.

-

Just signing in to websites? What else is this good for?

No, it can be used for authenticating to installable apps and physical places, as long as there is a service running an HTTP server somewhere to read the signature sent from the wallet. But yes, signing in to websites is the main problem to solve here.

-

Phishing attack! Can a malicious website proxy the QR code from a third website and show it to the user so that it can steal the signature and log in to the third website?

No, because the wallet will only talk to the callback URL, and that URL will either be controlled by the third website, so the malicious website won't see anything; or it will have a different domain, so the wallet will derive a different key and frustrate the malicious website's plan.

-

I heard SQRL had that same idea and it went nowhere.

Indeed. SQRL in its first version was basically the same thing as lnurl-auth, with one big difference: it was vulnerable to phishing attacks (see above). That was basically the only criticism it got everywhere, so the protocol creators decided to solve that by introducing complexity to the protocol. While they were at it they decided to add more complexity for managing accounts and so much more crap that the spec, which initially was a single page, ended up becoming 136 pages of highly technical gibberish. Then all the initial network effect it had, along with its libraries and apps, was trashed, and nowadays no one can do anything with it (but, see, there are still people who love the protocol writing in a 90's forum with no clue of anything besides their own Java).

-

We don't need this, we need WebAuthn!

WebAuthn is essentially the same thing as lnurl-auth, but instead of being simple it is complex, instead of being open and decentralized it is centralized in big corporations, and instead of relying on a key generated by your own device it requires an expensive hardware HSM you must buy and trust the manufacturer. If you like WebAuthn and you like Bitcoin you should like lnurl-auth much more.

-

What about BitID?

This is another one that is very similar to lnurl-auth, but without the anti-phishing prevention and extra privacy given by making one different key for each service.

-

What about LSAT?

It doesn't compete with lnurl-auth. LSAT, as far as I understand it, is for when you're buying individual resources from a server, not authenticating as a user. Of course, LSAT can be repurposed as a general authentication tool, but then it will lack features that lnurl-auth has, like the property of having keys generated independently by the user from a common seed and a standard way of passing authentication info from one medium to another (like signing in to a website at the desktop from the mobile phone, for example).

-

-

@ a023a5e8:ff29191d

2023-12-20 04:43:48

Yesterday I was sleeping with my wife and 2 kids in the same bed, and in the middle of the night I woke up to see my wife and kids sleeping beside me in a moonlight-like ambience. It was a vivid feeling. I panicked with a strange feeling, and a moment later I really woke up and saw them again. It all happened in just a moment. Have you ever had such an experience?

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

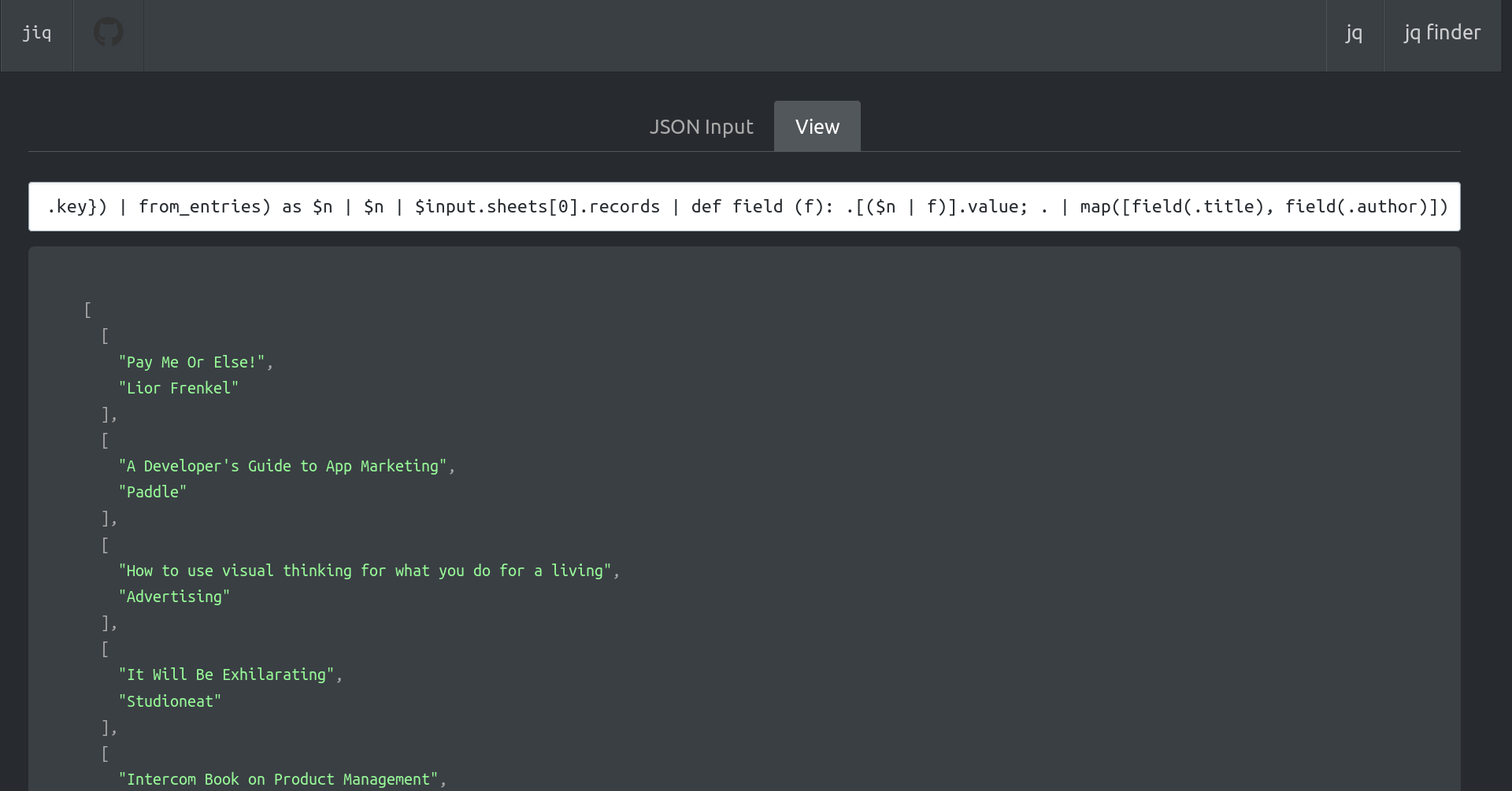

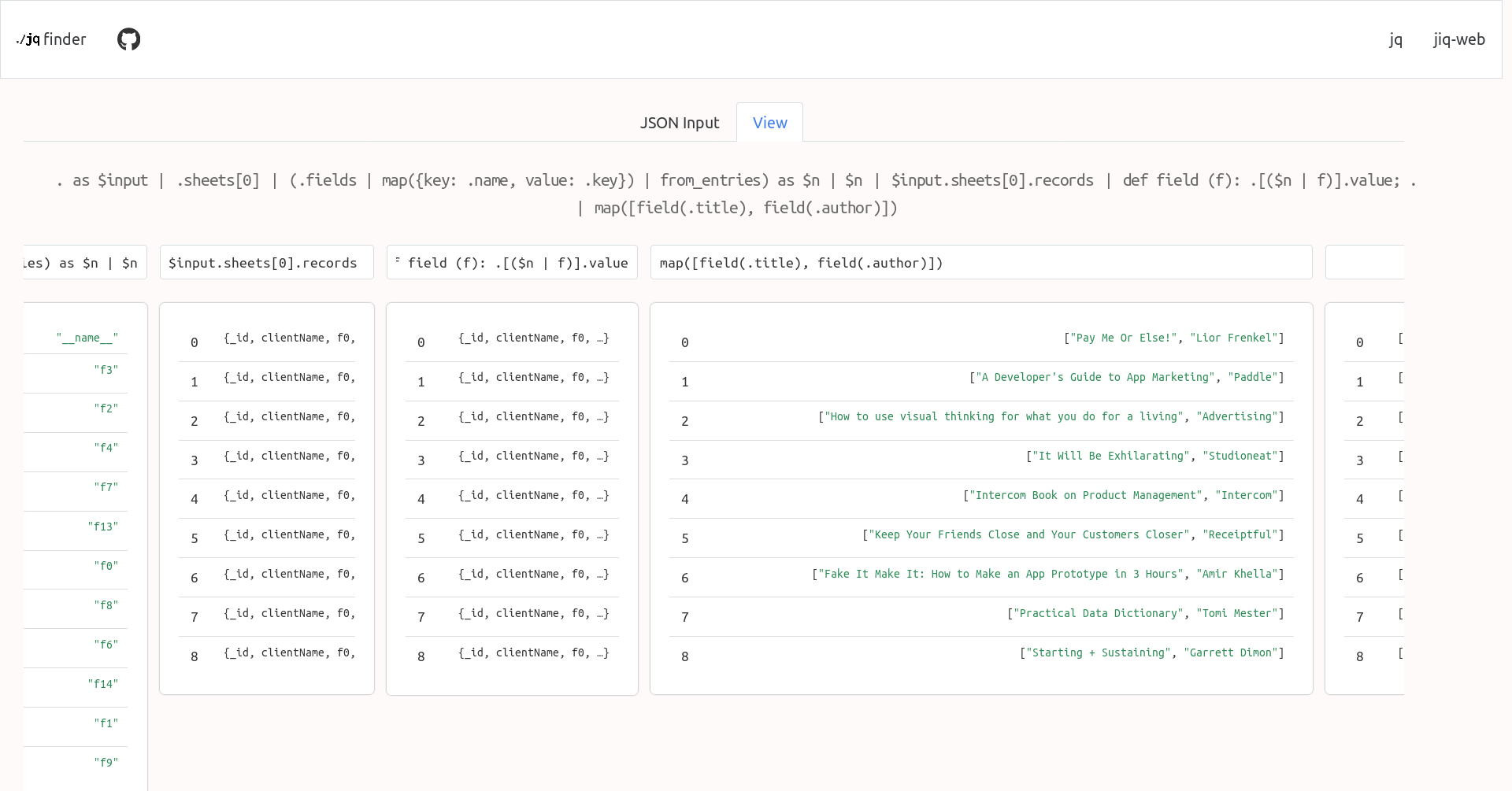

ijq

An interactive REPL for jq with smart helpers (for example, it automatically assigns each line of input to a variable so you can reference it later; it also always references the previous line automatically).

See also

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

The Cause

Menger's Principles of Economics is the only book that emphasizes the CAUSE all the time. scientists all seem not to know, or to always forget, that things have a cause, and that true knowledge is knowledge of the cause of things.

cause is a metaphysical category far superior to any correlation or hypothesis-test result; it cannot be discovered by any econometric device or reduced to mere statistical temporal precedence. the cause of phenomena cannot be proven scientifically, but it can be known.

Menger's book tells the reader the causes of various economic phenomena and links them together so that the chaotic world of the economy seems to acquire an order as you read. it is a magical and indescribable feeling.

when I recommended it to you, what I wanted was to imbue you with the spirit of searching for the cause of things. after reading it, you are able to perceive causal continuity in the most complex phenomena of today's economy, to see the causes linking all government action and its various consequences in human life. I do this every day, and it is the best feeling in the world when the chaos of the news in the newspaper's Economy section -- which makes no sense even to the journalist who wrote it (so much so that he gets everything wrong) -- fits into an ordered system of causes and consequences.

I probably always err on some or several points, but even so it is wonderful. or it is even more wonderful when I discover the error and put the correction back into that beautiful rationalization of the order of the economic world, which is the order of God.

in a scrap to T.P.

-

@ a023a5e8:ff29191d

2023-12-19 12:14:53

Shit shit shit

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Trelew

A CLI tool for navigating Trello boards. It used vorpal for an "immersive" experience and was pretty good.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

The infinite library

I have now forgotten the name of the Jorge Luis Borges short story in which this library is described, as well as its specific details. I had read the story and had never noticed that it settled the question of whether randomness can produce valuable things. I really needed Wikipedia to tell me that.

Some years ago I raised this question with a group of friends without knowing it was such a worn-out and lowly question. In my example it was a dog walking over drawn letters rather than a monkey at a typewriter. My conclusion from the discussion was that no matter what the dog wrote, without an intelligence capable of understanding it, it would be nothing more than random letters.

Borges settles everything by imagining a library that contains everything the dog had written during the whole infinity over which it ran the experiment, and which therefore contains all knowledge about everything and all possible literary works -- but between each very good, or at least legible, page or sentence there are tons of completely random books, and a person can spend a lifetime inside this library that holds so much important knowledge and still learn nothing, because they will never find the right books.

Everything would be in its blind volumes. Everything: the detailed history of the future, Aeschylus' The Egyptians, the exact number of times that the waters of the Ganges have reflected the flight of a falcon, the secret and true nature of Rome, the encyclopedia Novalis would have constructed, my dreams and half-dreams at dawn on August 14, 1934, the proof of Pierre Fermat's theorem, the unwritten chapters of Edwin Drood, those same chapters translated into the language spoken by the Garamantes, the paradoxes Berkeley invented concerning Time but didn't publish, Urizen's books of iron, the premature epiphanies of Stephen Dedalus, which would be meaningless before a cycle of a thousand years, the Gnostic Gospel of Basilides, the song the sirens sang, the complete catalog of the Library, the proof of the inaccuracy of that catalog. Everything: but for every sensible line or accurate fact there would be millions of meaningless cacophonies, verbal farragoes, and babblings. Everything: but all the generations of mankind could pass before the dizzying shelves – shelves that obliterate the day and on which chaos lies – ever reward them with a tolerable page.

I have the impression that the gigantic volume of articles, posts, books and everything else being published is turning the world into this library. There is so much to read that it is hard to find what is worthwhile. People need to stop writing.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

OP_CHECKTEMPLATEVERIFY and the "covenants" drama

There are many ideas for "covenants" (I don't think this concept helps in the specific case of examining proposals, but fine). Some people think "we" (it's not obvious who is included in this group) should somehow examine them and come up with the perfect synthesis.

It is not clear what form this magic gathering of ideas will take and who (or which ideas) will be allowed to speak, but suppose it happens and there is intense research and conversations and people (ideas) really enjoy themselves in the process.

What are we left with at the end? Someone has to actually commit the time and put in the effort to come up with a concrete proposal to be implemented on Bitcoin, and whatever the result is, it will have trade-offs. Some great features will not make it into this proposal, others will make it in a worsened form, and some will be covered very nicely; there will be some extra costs related to maintenance or code complexity that will have to be accepted. Someone, a concrete person, will decide upon these things using their own personal preferences and biases, and many people will not be pleased with their choices.

That has already happened. Jeremy Rubin has already conjured all the covenant ideas in a magic gathering that lasted more than 3 years and came up with a synthesis that has the best trade-offs he could find. CTV is the result of that operation.

The fate of CTV in the popular opinion illustrated by the thoughtless responses it has evoked such as "can we do better?" and "we need more review and research and more consideration of other ideas for covenants" is a preview of what would probably happen if these suggestions were followed again and someone spent the next 3 years again considering ideas, talking to other researchers and came up with a new synthesis. Again, that person would be faced with "can we do better?" responses from people that were not happy enough with the choices.

And only if some famous Bitcoin Core or retired Bitcoin Core developers were personally attracted by this new synthesis would they take the time to review it and give it their blessing.

To summarize the argument of this article, the actual issue in the current CTV drama is that there exist hidden criteria for proposals to be accepted by the general community into Bitcoin, and no one has these criteria clear in their minds. It is not as simple nor as straightforward as "do research", nor is it as humanly impossible as "get consensus"; it has a much bigger social element to it, but I also do not know the exact form of these hidden criteria.

This is said not to blame anyone -- except the ignorant people who are not aware of the existence of these things and just keep repeating completely false and unhelpful advice for Jeremy Rubin and are not self-conscious enough to ever realize what they're doing.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: clarity.fm on Lightning

Getting money from clients very easily and dispatching that money to "world class experts" (what a silly way to market things, but I guess it works) very easily is a job for Bitcoin and the Lightning Network.

EDIT 2020-09-04

My idea was that people would advertise themselves, so you would book an hour with people you already know, but it seems that clarity.fm has gone the route of offering a "catalog of experts" to potential clients, probably full of verification processes and marketing. So I guess this is not a thing I can do.

Actually I did https://s4a.etleneum.com/ (on Etleneum), which is somewhat similar, but of course doesn't have the glamour and network effect and marketing -- also it's just text, whereas Clarity has fancy calls.

Thinking about it, this is just a simple and obvious idea: just copy things from the fiat world and make them on Lightning, but maybe it is still worth pointing these out as there are hundreds of developers out there trying to make yet another lottery game with Lightning.

It may also be a good idea to not just copy fiat business models, but also change them, experimenting with new paradigms, like idea: Patreon, but simple, and without subscription.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Channels without HTLCs

HTLCs below the dust limit are not possible, because they're uneconomical.

So currently whenever a payment below the dust limit is to be made Lightning peers adjust their commitment transactions to pay that amount as fees in case the channel is closed. That's a form of reserving that amount and incentivizing peers to resolve the payment, either successfully (in case it goes to the receiving node's balance) or not (it then goes back to the sender's balance).

SOLUTION

I didn't think too much about whether what I have in mind can be done in the current implementation of Lightning channels, but in the context of Eltoo it seems possible.

Eltoo channels have UPDATE transactions that can be published to the blockchain and SETTLEMENT transactions that spend them (after a relative time) to each peer. The barebones script for UPDATE transactions is something like (copied from the paper, because I don't understand these things):

OP_IF # to spend from a settlement transaction (presigned) 10 OP_CSV 2 As,i Bs,i 2 OP_CHECKMULTISIGVERIFY OP_ELSE # to spend from a future update transaction <Si+1> OP_CHECKLOCKTIMEVERIFY 2 Au Bu 2 OP_CHECKMULTISIGVERIFY OP_ENDIFDuring a payment of 1 satoshi it could be updated to something like (I'll probably get this thing completely wrong):

    OP_HASH256 <payment_hash> OP_EQUAL
    OP_IF
      # for B to spend from settlement transaction 1 in case the payment went through
      # and they have a preimage
      10 OP_CSV
      2 As,i1 Bs,i1 2 OP_CHECKMULTISIGVERIFY
    OP_ELSE
      OP_IF
        # for A to spend from settlement transaction 2 in case the payment didn't go through
        # and the other peer is uncooperative
        <now + 1day> OP_CHECKLOCKTIMEVERIFY
        2 As,i2 Bs,i2 2 OP_CHECKMULTISIGVERIFY
      OP_ELSE
        # to spend from a future update transaction
        <Si+1> OP_CHECKLOCKTIMEVERIFY
        2 Au Bu 2 OP_CHECKMULTISIGVERIFY
      OP_ENDIF
    OP_ENDIF

Then peers would have two presigned SETTLEMENT transactions, 1 and 2 (with different signature pairs, as badly shown in the script). On SETTLEMENT 1, funds are, say, 999sat for A and 1001sat for B, while on SETTLEMENT 2 funds are 1000sat for A and 1000sat for B.

As soon as B gets the preimage from the next peer in the route, it can give it to A, and they can sign a new UPDATE transaction that replaces the above gimmick with something simpler without hashes involved.

If the preimage doesn't come in viable time, peers can agree to make a new UPDATE transaction anyway. Otherwise A will have to close the channel, which may be bad, but B wasn't a good peer anyway.
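To make the two outcomes concrete, here is a minimal sketch of which presigned SETTLEMENT would apply, using the 1000sat/1000sat balances and the 1 satoshi payment from the example. The function names are hypothetical, and timelocks and signatures are ignored entirely. It only models the hash check, with `OP_HASH256` taken as double SHA-256.

```python
import hashlib


def hash256(data: bytes) -> bytes:
    """Double SHA-256, which is what OP_HASH256 computes."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def settlement_balances(balance_a, balance_b, amount):
    """Return the (A, B) balances for SETTLEMENT 1 and SETTLEMENT 2."""
    settlement_1 = (balance_a - amount, balance_b + amount)  # payment went through
    settlement_2 = (balance_a, balance_b)                    # payment did not go through
    return settlement_1, settlement_2


def resolve(balance_a, balance_b, amount, payment_hash, preimage=None):
    """Pick the settlement that would apply, ignoring timelocks and signatures."""
    settlement_1, settlement_2 = settlement_balances(balance_a, balance_b, amount)
    if preimage is not None and hash256(preimage) == payment_hash:
        return settlement_1
    return settlement_2


preimage = b"some secret"
payment_hash = hash256(preimage)
print(resolve(1000, 1000, 1, payment_hash, preimage))  # B can show the preimage
print(resolve(1000, 1000, 1, payment_hash))            # no preimage available
```

With a valid preimage the balances end up as in SETTLEMENT 1 (999 for A, 1001 for B). Otherwise they stay as in SETTLEMENT 2.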

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

IPFS problems: Shitcoinery

IPFS was advertised to the Ethereum community since the beginning as a way to "store" data for their "dApps". I don't think this is harmful in any way, but for some reason it may have led IPFS developers to focus too much on Ethereum stuff. I once watched a talk showing libp2p developers -- despite being ignored by the Ethereum team (which ended up creating its own agnostic p2p library) -- dedicating an enormous amount of work to getting a libp2p app running in the browser talking to a normal Ethereum node.

The always somewhat-abandoned "Awesome IPFS" site is a big repository of "dApps", some of which don't even have their landing page up anymore: useless Ethereum smart contracts that for some reason use IPFS to store whatever useless data their users produce.

Again, per se it isn't a problem that Ethereum people are using IPFS, but it is at least confusing, maybe misleading, that when you search for IPFS most of the use-cases are actually Ethereum useless-cases.

See also

- Bitcoin, the only non-shitcoin

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

A response to Achim Warner's "Drivechain brings politics to miners" article

I mean this article: https://achimwarner.medium.com/thoughts-on-drivechain-i-miners-can-do-things-about-which-we-will-argue-whether-it-is-actually-a5c3c022dbd2

There are basically two claims here:

1. Some corporate interests might want to secure sidechains for themselves and thus they will bribe miners to have these activated

First, it's hard to imagine why they would want such a thing. Are they going to make a proprietary KYC chain only for their users? They could do that in a corporate way, or with a federation, like Facebook tried to do, and that would provide more value to their users than a cumbersome pseudo-decentralized system in which they don't even have powers to issue currency. Also, if Facebook couldn't get away with their federated shitcoin because the government was mad, what says the government won't be mad with a sidechain? And finally, why would Facebook want to give custody of their proprietary closed-garden Bitcoin-backed ecosystem coins to a random, open and always-changing set of miners?

But suppose they do succeed in making their sidechain, and it becomes so popular that it pays miners fees and people love it. Well, then why not? Let them have it. It's not going to hurt anyone more than a proprietary shitcoin would anyway. If Facebook really wants a closed ecosystem backed by Bitcoin, that probably means we are winning big.

2. Miners will be required to vote on the validity of debatable things

He cites the examples of a PoS sidechain, an assassination market, a sidechain full of Nazis, a sidechain deemed illegal by the US government and so on.

There is a simple solution to all of this: just kill these sidechains. Either miners can take the money from these to themselves, or they can just refuse to engage and freeze the coins there forever, or they can even give the coins to governments, if they want. It is an entirely good thing that evil sidechains or sidechains that use horrible technology that doesn't even let us know who owns each coin get annihilated. And it was the responsibility of people who put money in there to evaluate beforehand and know that PoS is not deterministic, for example.

As for governments censoring and wanting to steal money, or criminals using sidechains, I think the argument is very weak because these same things can happen today and may even be happening already: i.e., governments ordering mining pools to not mine such and such transactions from such and such people, or forcing them to reorg to steal money from criminals and whatnot. All this is expected to happen in normal Bitcoin. But both in normal Bitcoin and in Drivechain, decentralization fixes that problem by making it so governments cannot catch all the miners required to control the chain like that -- and in fact fixing that problem is the only reason we need decentralization.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Flávia Tavares's interview with Olavo de Carvalho

I haven't read all the complaints Olavo made, but I read some. I also didn't read the whole article that came out in Época, because I didn't have the patience, but I watched the two videos of the interview that Olavo published.

Having first read Olavo's many complaints, I expected to find in the video a false person who pretended to be friendly in order to obtain information she would later use to destroy Olavo's image, but I saw nothing of the sort.

Of course, she could have fooled me too, if she fooled Olavo. But in the article itself I also saw nothing but sincerity -- perhaps not journalistic excellence, but nothing I wouldn't expect from any article in any magazine. Flavia Tavares didn't understand many things, but she didn't pretend that she hadn't understood anything; she was simply and honestly Flavia Tavares, as she herself declared at the end of the interview video: "look, I didn't fake anything here, you know?".

The most important thing in all this, however, is the parts of the article that present ideas that are hard to conceive, such as the ones Olavo holds about world government or the spread of pedophilia. In every public or private discussion, these ideas are forbidden. Many people may agree that the left is no good, but no one in their right mind will admit the possibility that there is any significant intention to establish a world government or to spread pedophilia. The same mocking little face your leftist friend would make at the mere mention of these subjects is the one Flavia Tavares uses in her text when she wants to show that Olavo is a bit nuts. The mocking face always comes unaccompanied by any serious reflection or attempt at refutation.

Link to the article: http://epoca.globo.com/sociedade/noticia/2017/10/olavo-de-carvalho-o-guru-da-direita-que-rejeita-o-que-dizem-seus-fas.html?utm_source=twitter&utm_medium=social&utm_campaign=post

Videos: https://www.youtube.com/watch?v=C0TUsKluhok, https://www.youtube.com/watch?v=yR0F1haQ07Y&t=5s

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Why IPFS cannot work, again

Imagine someone comes up with a solution for P2P content-addressed data-sharing that involves storing all the files' contents in all computers of the network. That wouldn't work, right? Too much data. If you think this can work then you're a BSV enthusiast.

Then someone comes up with the idea of not storing everything in all computers, but only some things on some computers, based on some algorithm to determine what data a node would store given its pubkey or something like that. Still wouldn't work, right? Still too much data no matter how much you spread it, but mostly the incentives are not aligned, so it would implode on the first day.

Now imagine someone says they will do the same thing, but instead of storing the full contents each node would only store a pointer to where each data is actually available. Does that make it better? Hardly so. Still, you're just moving the problem.

This is IPFS.

Now you have less data on each computer, but on a global scale that is still a lot of data.

No incentives.

And now you have the problem of finding the data. First if you have some data you want the world to access you have to broadcast information about that, flooding the network -- and everybody has to keep doing this continuously for every single file (or shard of file) that is available.

And then whenever someone wants some data they must find the people who know about that, which means they will flood the network with requests that get passed from peer to peer until they get to the correct peer.

The more you force each peer to store, the worse it becomes to run a node and to store data on behalf of others -- but the less you force each peer to store, the more flooding you'll have on the global network, and the slower it will be for anyone to actually get any file.

But if everybody just saves everything to Infura or Cloudflare then it works, magic decentralized technology.
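The "pointer" scheme described above can be sketched as a toy model. This is not IPFS's actual implementation: the class `ToyNetwork`, the replication factor `k` and the rule of placing pointers on the nodes whose ids are closest (by XOR) to the content hash are all assumptions made for illustration.

```python
import hashlib


def key_of(data: bytes) -> int:
    """Map arbitrary bytes to a 256-bit integer key."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def closest_nodes(node_ids, key, k=2):
    """Return the k node ids with the smallest XOR distance to key."""
    return sorted(node_ids, key=lambda node_id: node_id ^ key)[:k]


class ToyNetwork:
    def __init__(self, names):
        self.ids = {key_of(name.encode()): name for name in names}
        # node id -> {content key -> set of provider names}
        self.pointers = {node_id: {} for node_id in self.ids}

    def announce(self, provider, content: bytes, k=2):
        """Store a pointer 'provider has this content' on the k closest nodes."""
        key = key_of(content)
        for node_id in closest_nodes(self.ids, key, k):
            self.pointers[node_id].setdefault(key, set()).add(provider)
        return key

    def find_providers(self, key, k=2):
        """Ask the k closest nodes who claims to have the content."""
        found = set()
        for node_id in closest_nodes(self.ids, key, k):
            found |= self.pointers[node_id].get(key, set())
        return found


net = ToyNetwork(["n1", "n2", "n3", "n4", "n5"])
key = net.announce("n1", b"hello world")
print(net.find_providers(key))
```

Even in this toy, every file (or shard) needs its own `announce` call, and the pointers only say who has the data. Someone still has to store and serve it.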

Related

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Who will build the roads?

Who will build the roads? In Lagoa Santa, the newest and best streets -- which in fact end up forming enormous webs of interconnected neighborhoods -- are built by the land developers, who want the streets so that their lots are worth more -- and who want other people to use the streets too. It is also these same developers who put up the light poles and lay the water pipes, not without first having to submit to the customary extortion practiced by COPASA and CEMIG.

If, when opening a subdivision, gated community or building, an individual or a company manages without much trouble to put in streets, electricity, water and sewage, why wouldn't free competition be possible in these markets? Even that old story that it is inefficient to run duplicate power cables so that electric companies can compete already seems like nonsense to me.

-

@ 1abc40c5:6cd60e41

2023-12-17 07:42:37

Hello, and a very warm welcome to the new members of the purple community.

To start with, go and learn how things work from the notes by Jack Goodday, our big brother (you will find that this world is much wider than it looks).

===================== Then add an LN wallet so you can easily receive zaps, following the article by Ajarn Notoshi (Ajarn Arm RS).

-

If you regularly use Nostr on a computer, you can link it to a browser extension for easy use. I recommend "Getalby".

-

If you use it on a phone, "Wallet of Satoshi" and "Blink wallet" may suit you better.

But I recommend having both kinds. You will certainly find a good use for each at the right time, haha.

===================== Then add the Siamstr relays:

- wss://relay.siamstr.com
- wss://teemie1-relay.duckdns.org

These are supported by P'Tee so that we Thais can connect with and see one another.

And don't forget to add one more Siamstr relay, this one supported by our own Ajarn Arm RS:

- wss://relay.notoshi.win

All 3 of these relays are "free paid" relays, so you can start using them right away ^^

Or, if that still isn't enough for you and you want even more relays, I recommend looking at the relay update of 9/12/2023 from our big brother.

===================== And don't forget to create a cool NIP-05 Nostr address "@siamstr.com" at siamstr FREE NOSTR ADDRESSES. For everyone, for freedom. First come, first to claim a name ... ^^

===================== Oh ... if you want to learn the basics of Nostr from a technical angle, or how to keep your nsec (private key) safe ... you are welcome to read the article by our own P'Arm of Right Shift.

Or you can also read about it here:

Nostr: free, decentralized social media

Image: Network diagram from https://nostr.how/en/the-protocol

===================== Finally, I hope we will get to know each other better, so that together we can keep building a community full of value and good feelings for one another.

Thank you for becoming a part of us.

-

-

@ ecda4328:1278f072

2023-12-16 20:46:23

Introduction: Crypto.com Exchange for European Traders

Reside in Europe, including non-EUR countries? Frustrated with the exorbitant spreads on the Crypto.com App (up to 5%) and high credit card fees (up to 3%) when you're eager to buy or sell cryptocurrency? This guide is tailored for you!

Quick Transfer with SEPA Instant

Did you know that you can transfer EUR to your Crypto.com Exchange account within minutes, and sometimes even seconds, including weekends and bank holidays? Yes, you absolutely can, thanks to SEPA Instant Credit Transfer (SCT Inst).

Supported Banks