-

@ d9a329af:bef580d7

2025-04-30 23:44:20

To be clear, this is 100% my subjective opinion on the alternatives to popular music, which has become practically a formula of witchcraft, pseudoscience and mysticism. There is nothing you can do to get me to listen to pop music from the late 2010s to now. I could certainly listen to almost anything... just not modern pop, which is now completely backwards.

Most examples of compositions for these genres will be my own, unless otherwise stated. The genres on this list are in no particular order, though my favorite comes first.

- Bossa Nova

Bossa Nova is a subgenre of jazz from Brazil, created in the 1950s as a mix of standard jazz and samba rhythms played in a more gentle and relaxing manner. This genre's most famous songs are Tom Jobim's The Girl from Ipanema (found in albums like Getz/Gilberto), Wave, and even Triste. Most of the music is written in a 2/4 time signature, and almost any key is acceptable. It's called Afro-Brazilian Jazz for a reason after all. I have produced a ton of compositions, from Forget and Regret, to Rabbit Theory, Beaches of Gensokyo Past, Waveside, and even Willows of Ice, to name a few of them.

- Metal

This is an umbrella term for the many subgenres of this fork of hard rock, with more distorted guitars, speedy and technical writing, vocals that sound demonic (some subgenres don't have them) and, sometimes, chaotic or downright nasty lyrics if you look deep enough. If you want to get into it, I'd suggest avoiding Black Metal (it's weird), Blackened Metal (any subgenre of metal that has had elements of Black Metal added to it), Metalcore, or any other genre with harsh vocals (these are vocal fries, which are really good only if you're into the weird demonic sounds). This isn't for the faint of heart. Instrumental metal is good though, and an example is my composition from Touhou Igousaken called A Sly Foxy Liar, if you want to know what technical groove metal is like at a glance.

- Touhou-style

I can attest to this one, as I produced bossa nova with a Touhou-like writing style. Touhou Project is a series of action video games created by one guy (Jun'ya Outa, a.k.a. ZUN). The main franchise consists mostly of bullet curtain games, with some official spinoffs that are also action games (fighting games like Touhou 12.3 ~ Hisoutensoku). What I'm referring to here is music written by ZUN himself (he does almost everything for Touhou Project, and he's really good at it), or by fans who write in his style with their own flair. I did this once with my composition, Toiled Bubble, which is from my self-titled EP. I probably won't do much more with it, to be fair, and will stick to bossa nova (my main genre if you couldn't tell).

- Hip-Hop/Rap

This can get subjective, but old-school rap and hip-hop... give me more of it. Before it became corrupted with all kinds of immoral things, hip-hop and rap were actually very good for their time. They were new, innovative and creative in how lyrics were written. Nowadays the lyrics are about cars, unspeakable acts, money, and just being dirtbags, whereas artists in this genre back then were much classier than that. I fit in the old-school category with my piece entitled Don't Think, Just Roast, where I called out antis who wanted to give a Vtuber agency's talent a hard time. It didn't get much traction on YouTube, because I'm not a well-known artist (I'm considered a nobody in the grand scheme of things. I'd like to get it fixed, but I don't want a record deal... I'd have to become a Pharisee or a Jesuit for that).

- Synthwave

This is a genre of electronic music in which '80s and '90s synths carry the composition. Nowadays, we have plugins like Vital, Serum, Surge and others to create sounds we would otherwise be hearing on an '80s or '90s keyboard. An example of this is my composition, Wrenched Torque, which was composed for a promotion I did with RAES when he released his Vital synth pack.

More are to come in future installments of this series, and I will adjust the title of this one accordingly if y'all have any ideas of genres I should look into.

-

@ e0e92e54:d630dfaa

2025-04-30 23:27:59

The Leadership Lesson I Learned at the Repair Shop

Today was a reminder that "who you know" actually matters.

Our van has been acting up as of late. Front-end noise. My guess? CV joint or bushings.

Not that I’m a mechanic, though. My dad took the hammer away from me when I was 12, and now all "my" tools have flowers printed on them or pink handles...

Yesterday it became apparent to my wife that "sooner rather than later" was optimal.

So this morning she took it to a repair shop where we know the owners.

You may have guessed by now that I’m no car repair guy. It’s just not my strength and I’m ok with that! And even though it’s not my strength, I’m smart enough to know enough about vehicles to be dangerous…

And I’m sure that, just like you, I hate being ripped off. So last year, we both decided she would handle repair duties. I just get too fired up by most of the personnel at repair shops who won’t shoot straight with you.

So this morning my wife takes our van in. She sees the owner and next thing my wife knows, the owner’s wife (my wife’s friend) is texting to go get coffee while they take care of the van.

Before the two ladies took off, my wife was told "we'll need all day as one step of it is a 4-hour job just to get to the part that needs to be replaced..."

And the estimate? Half the parts were warrantied out and the labor is lower than we expected it to be.

Fast forward, coffee having been drunk… nearly 4 hours on the dot, we get a call: “Your van is ready!”

My wife didn't stand there haggling over the price of parts and labor.

Nope…here’s the real deal:

- Leadership = Relationships

- You can’t have too many

Granted, quality is better than quantity in everything I can think of, and that is true for relationships as well...

And while there are varying degrees or depths of relationships, the best ones go both ways.

We didn’t expect a deal because we were at our friend’s shop. We went because we trust them.

That’s it.

Any expectation other than a transparent and truthful transaction would be manipulating and exploiting the relationship…the exchange would be purely transactional at best and parasitic at worst!

The Bigger Lesson

Here’s the kicker:

This isn’t about vans or a repair shop. It’s about leading.

A line often attributed to Theodore Roosevelt nails it: “People don’t care how much you know until they know how much you care.”

Trust comes from relationships.

Relationships begin with who you know…And making who you know matter.

In other words, the relevance of the relationship is critical.

Your Move

Next time you dodge a call—or skip an event—pause.

Kill that thought…Or at least its tire marks. 😁

Realize that relationships fuel your business, your life, and your impact.

Because leadership? It’s relationships.

====

💡Who’s one person you can invest in today? A teammate? A client? A mechanic? 😉

🔹 Drop your answer below 👇 Or hit me up—book a Discovery Call. Let’s make your leadership thrive.

Jason Ansley is the founder of Above The Line Leader, where he provides tailored leadership support and operational expertise to help business owners, entrepreneurs, and leaders thrive without sacrificing their faith, family, or future.

Want to strengthen your leadership and enhance operational excellence? Connect with Jason at https://abovethelineleader.com/#your-leadership-journey

📌 This article first appeared on NOSTR. You can also find more Business Leadership Articles and content at: 👉 https://abovethelineleader.com/business-leadership-articles

-

@ c9badfea:610f861a

2025-04-30 23:12:42

1. Install Image Toolbox (it's free and open source)

2. Launch the app and navigate to the Tools tab

3. Choose Cipher from the tool list

4. Pick any file from your device storage

5. Keep the Encryption toggle selected

6. Enter a password in the Key field

7. Keep the default AES/GCM/NoPadding algorithm

8. Tap the Encrypt button and save your encrypted file

9. If you want to decrypt the file, just repeat the previous steps but choose Decryption instead of Encryption in step 5

-

@ 2fdae362:c9999539

2025-04-30 22:17:19

The architecture you choose for your embedded firmware has long-lasting consequences. It impacts how quickly you can add features, how easily your team can debug and maintain the system, and how confidently you can scale. While main loops and real-time operating systems (RTOS) are common, a third option, the state machine kernel, often delivers the most value in modern embedded development. At Wolff Electronic Design, we’ve used this approach for over 15 years to build scalable, maintainable, and reliable systems across a wide range of industries.

Every embedded system starts with one big decision: how will the firmware be structured?

Many teams default to the familiar, using a simple main loop or adopting an RTOS. But those approaches can introduce unnecessary complexity or long-term maintenance headaches. A third option, often overlooked, is using a state machine kernel: an event-driven framework designed for reactive, real-time systems. Below, we compare the three options head-to-head to help you choose the right architecture for your next project.

Comparison Chart

| Approach | Description | Pros | Cons | Best For |
|----------|-------------|------|------|----------|
| Main Loop | A single, continuous while-loop calling functions in sequence | Simple to implement, low memory usage | Hard to scale, difficult to manage timing and state | Small, simple devices |
| RTOS | Multi-threaded system with scheduler, tasks, and preemption | Good for multitasking, robust toolchain support | Thread overhead, complex debugging, race conditions | Systems with multiple async tasks |
| State Machine Kernel | Event-driven system with structured state transitions, run in a single thread | Easy to debug, deterministic behavior, scalable and modular | Learning curve, may need rethinking architecture | Reactive systems, clean architecture |

Why the State Machine Kernel Wins

Promotes Innovation Without Chaos

With clear, hierarchical state transitions, your codebase becomes modular and self-documenting — making it easier to prototype, iterate, and innovate without fear of breaking hidden dependencies or triggering bugs.

Prevents Hidden Complexity

Unlike RTOSes, where tasks run in parallel and can create race conditions or timing bugs, state machines run cooperatively in a single-threaded model. This sidesteps the deadlocks, per-task stack overflows, and debugging nightmares that come with thread-based systems.

Scales Without Becoming Fragile

As features and states are added, the system remains predictable. You don’t have to untangle spaghetti logic or rework your entire loop to support new behaviors — you just add new events and state transitions.
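The idea can be sketched in a few lines. The example below is in Python for brevity rather than the C or C++ normally used for firmware, and the oven states, event names, and the `Machine` class are all hypothetical, not taken from any particular kernel. Events are queued and dispatched one at a time in a single thread, and supporting a new behavior means adding a row to the transition table.

```python
from collections import deque

# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    ("idle", "START"): "heating",
    ("heating", "TEMP_REACHED"): "holding",
    ("heating", "STOP"): "idle",
    ("holding", "STOP"): "idle",
}


class Machine:
    """Single-threaded, event-driven state machine (illustrative only)."""

    def __init__(self, transitions, initial):
        self.transitions = dict(transitions)
        self.state = initial
        self.queue = deque()

    def post(self, event):
        """Queue an event; nothing is processed until run() is called."""
        self.queue.append(event)

    def run(self):
        """Dispatch queued events one at a time, in order (run to completion)."""
        while self.queue:
            event = self.queue.popleft()
            # Events with no entry for the current state are ignored.
            self.state = self.transitions.get((self.state, event), self.state)


oven = Machine(TRANSITIONS, "idle")
for event in ("START", "TEMP_REACHED", "STOP"):
    oven.post(event)
oven.run()
print(oven.state)  # prints "idle"

# Adding a behavior is a matter of new rows; existing rows are untouched.
TRANSITIONS[("holding", "DOOR_OPEN")] = "paused"
TRANSITIONS[("paused", "DOOR_CLOSED")] = "holding"
```

Because each event is handled to completion before the next one is taken from the queue, there is no shared state to lock, which is the property the single-threaded model relies on.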

Improves Maintainability and Handoff

Because logic is encapsulated in individual states with defined transitions, the code is easier to understand, test, and maintain. This lowers the cost of onboarding new developers or revisiting the system years later.

At Wolff Electronic Design, we’ve worked with every kind of firmware structure over the past 15+ years. Our go-to for complex embedded systems? A state machine kernel. It gives our clients the flexibility of RTOS-level structure without the bugs, complexity, or overhead. Whether you’re developing restaurant equipment or industrial control systems, this architecture offers a better path forward: clean, maintainable, and built to last.

#design, #methodologies, #quantumleaps, #statemachines

-

@ 1c19eb1a:e22fb0bc

2025-04-30 22:02:13

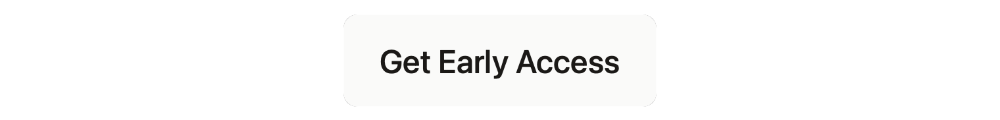

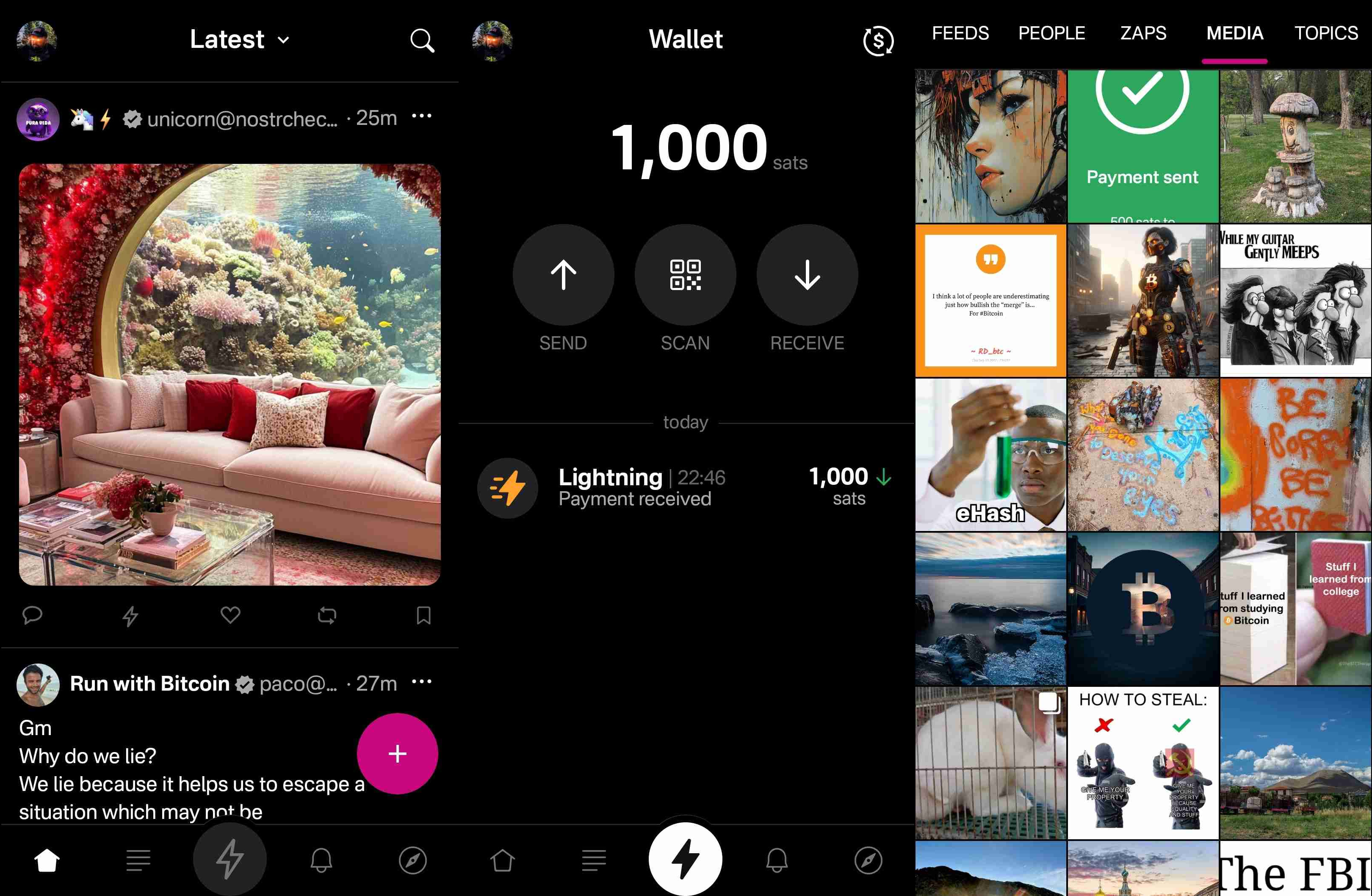

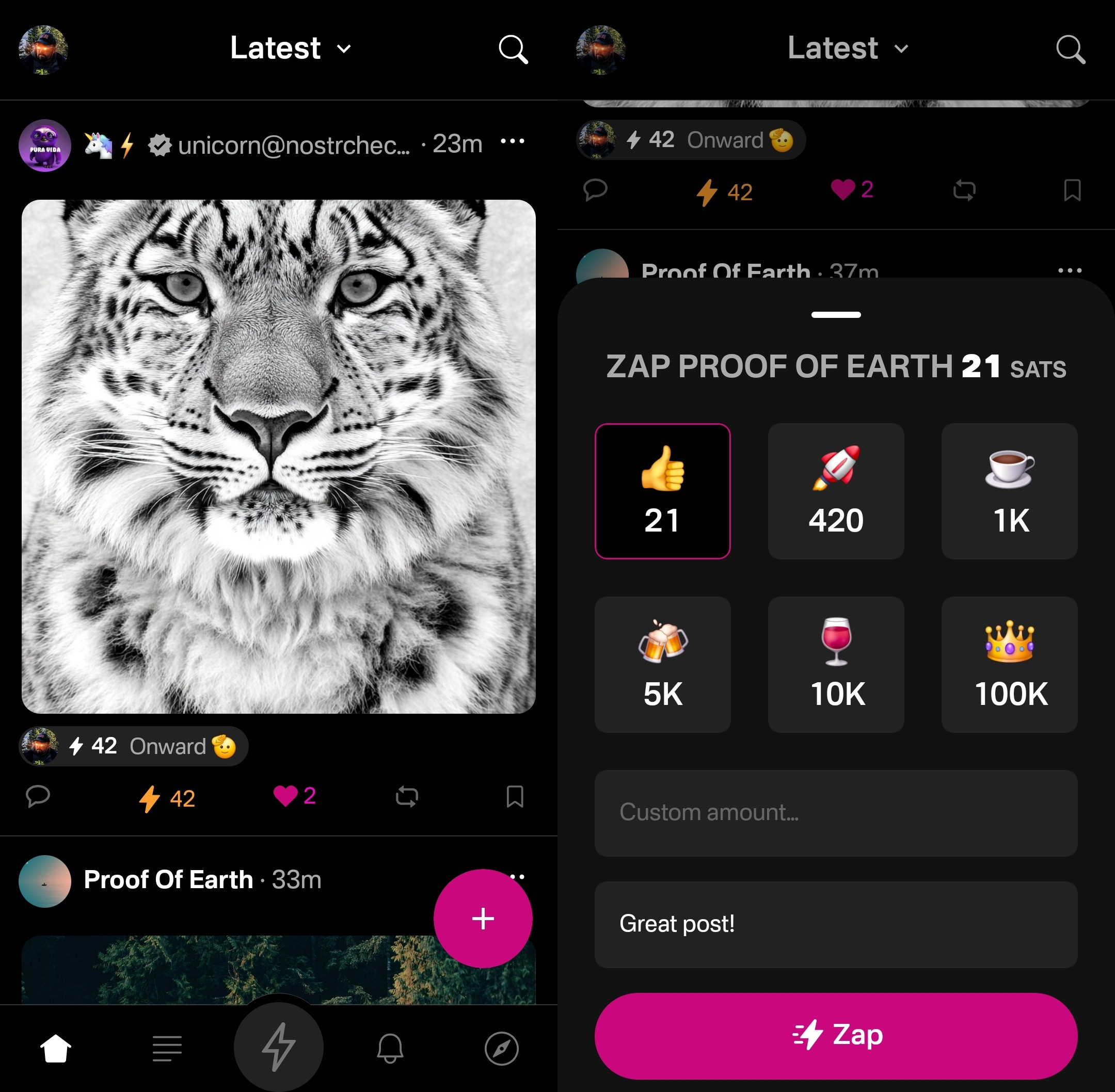

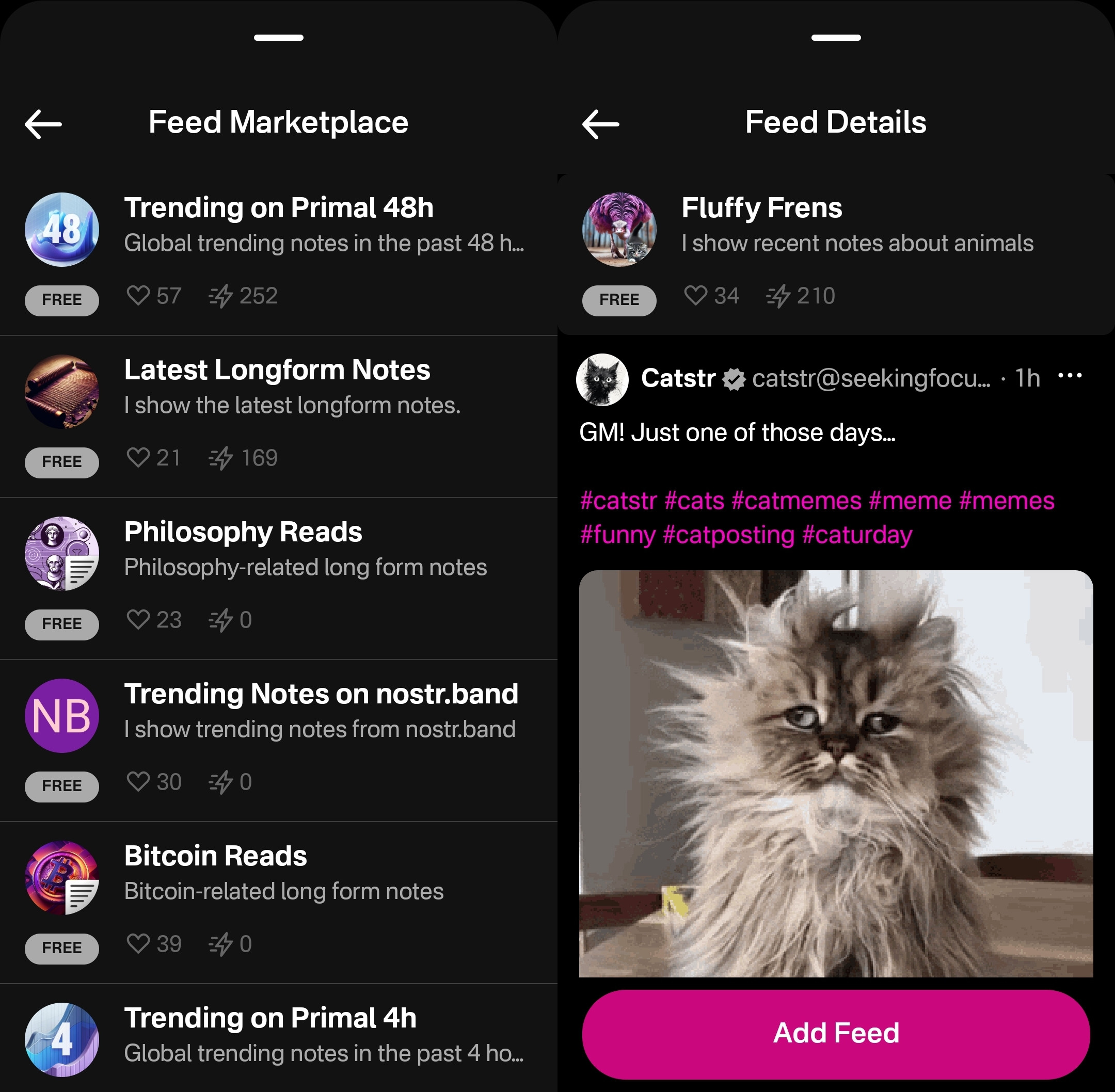

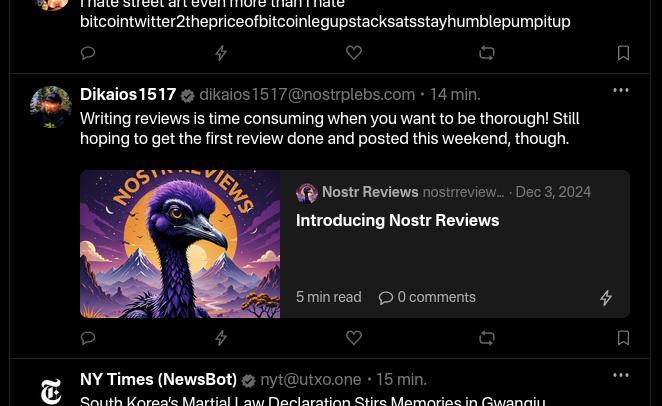

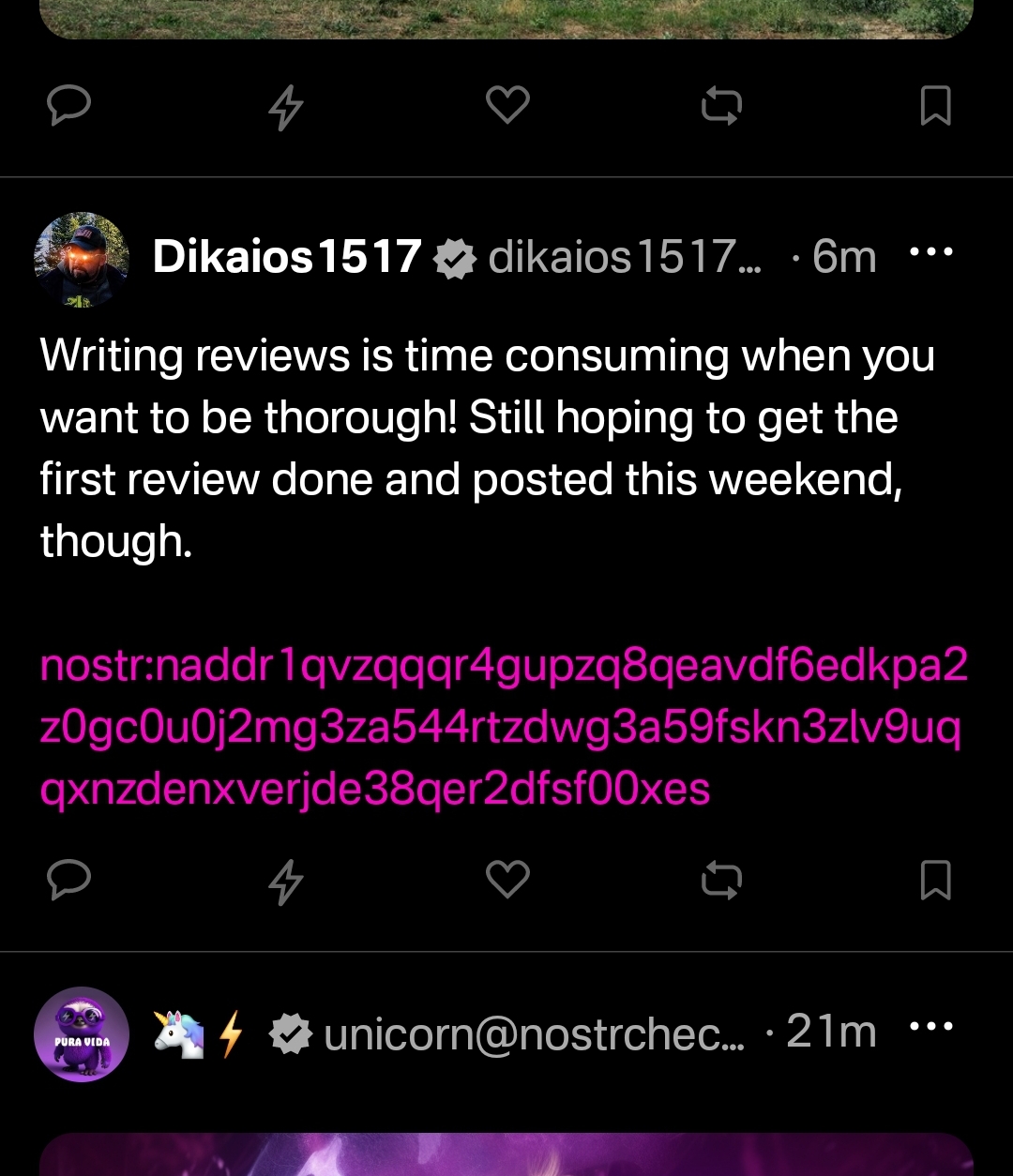

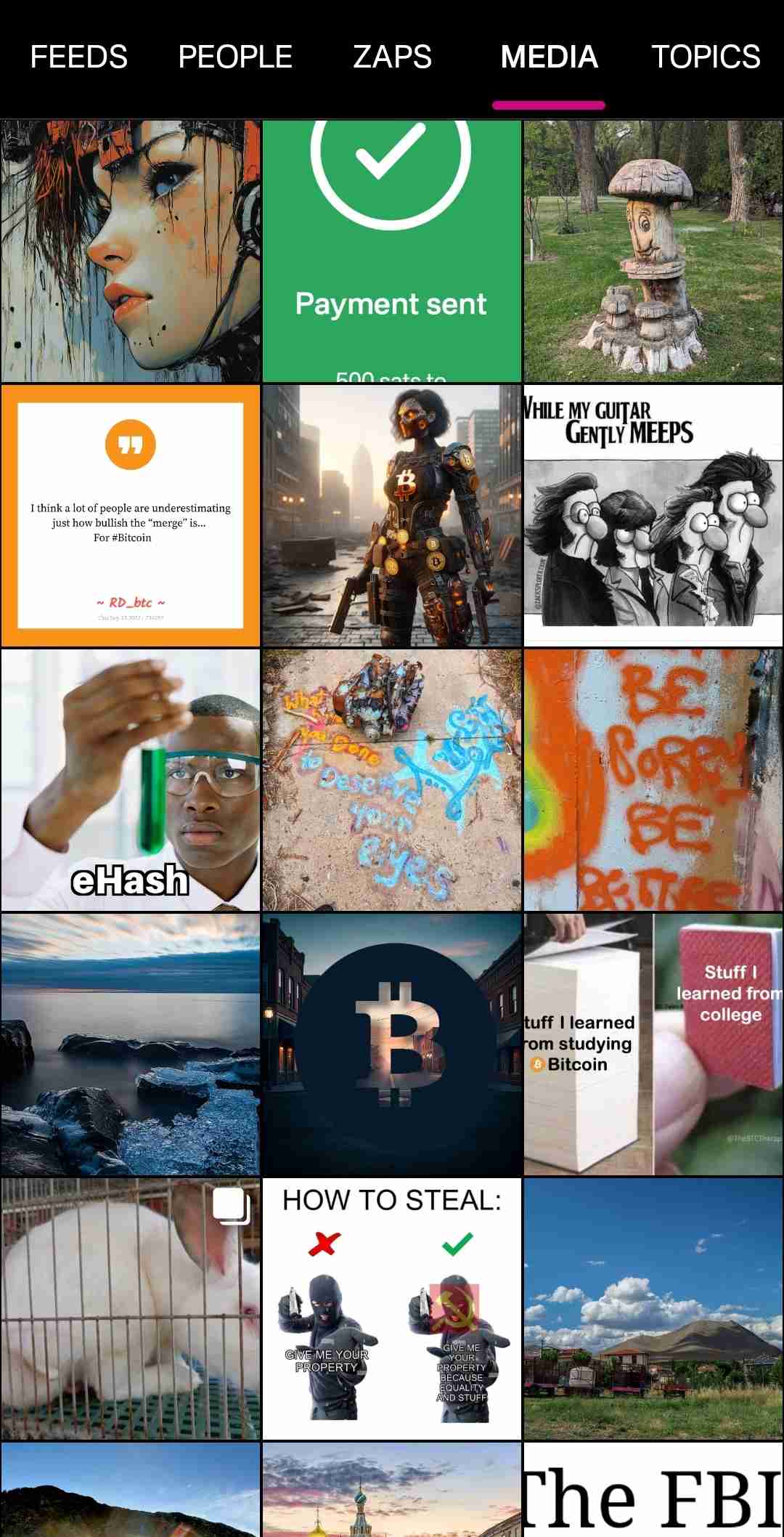

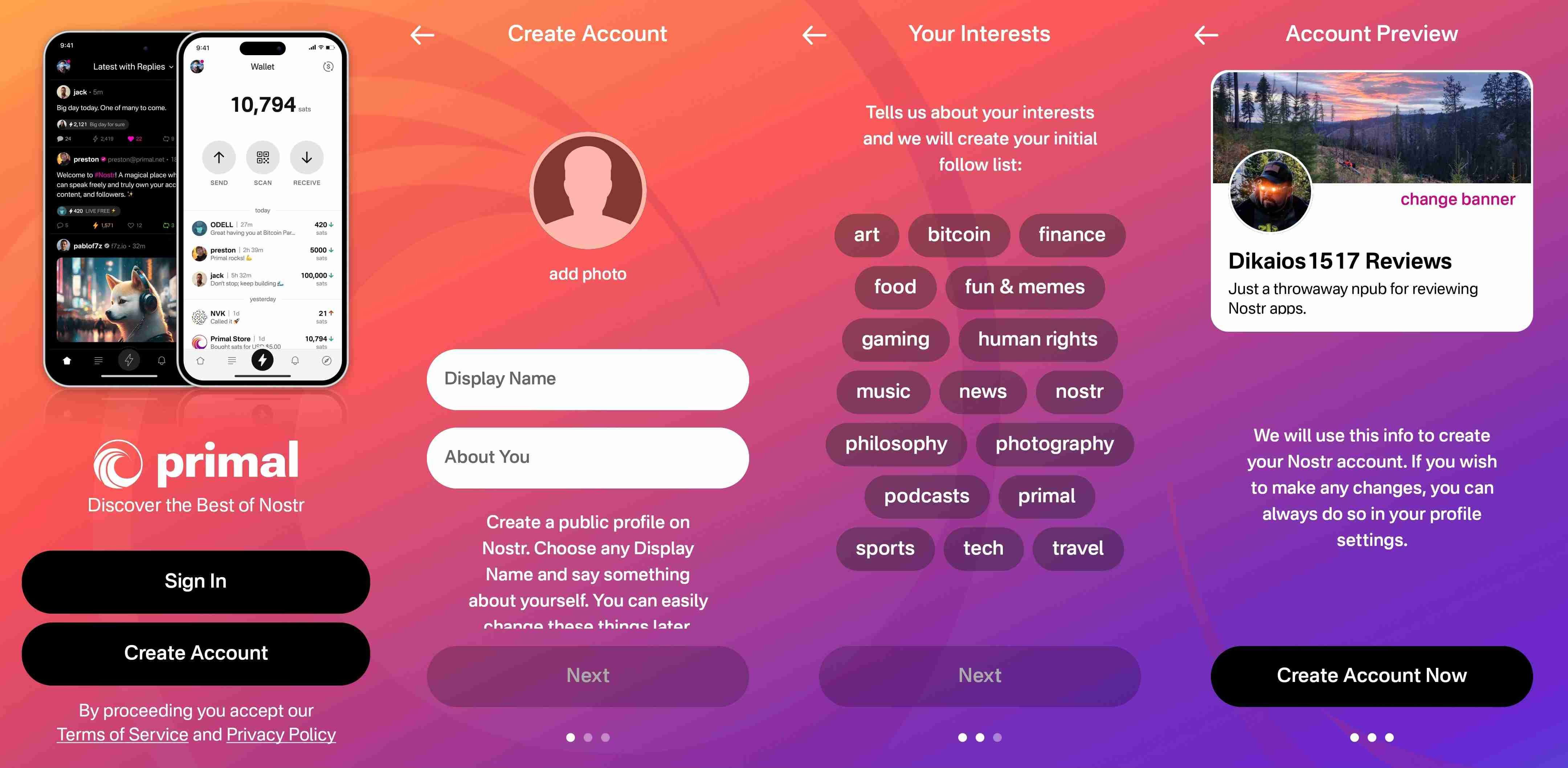

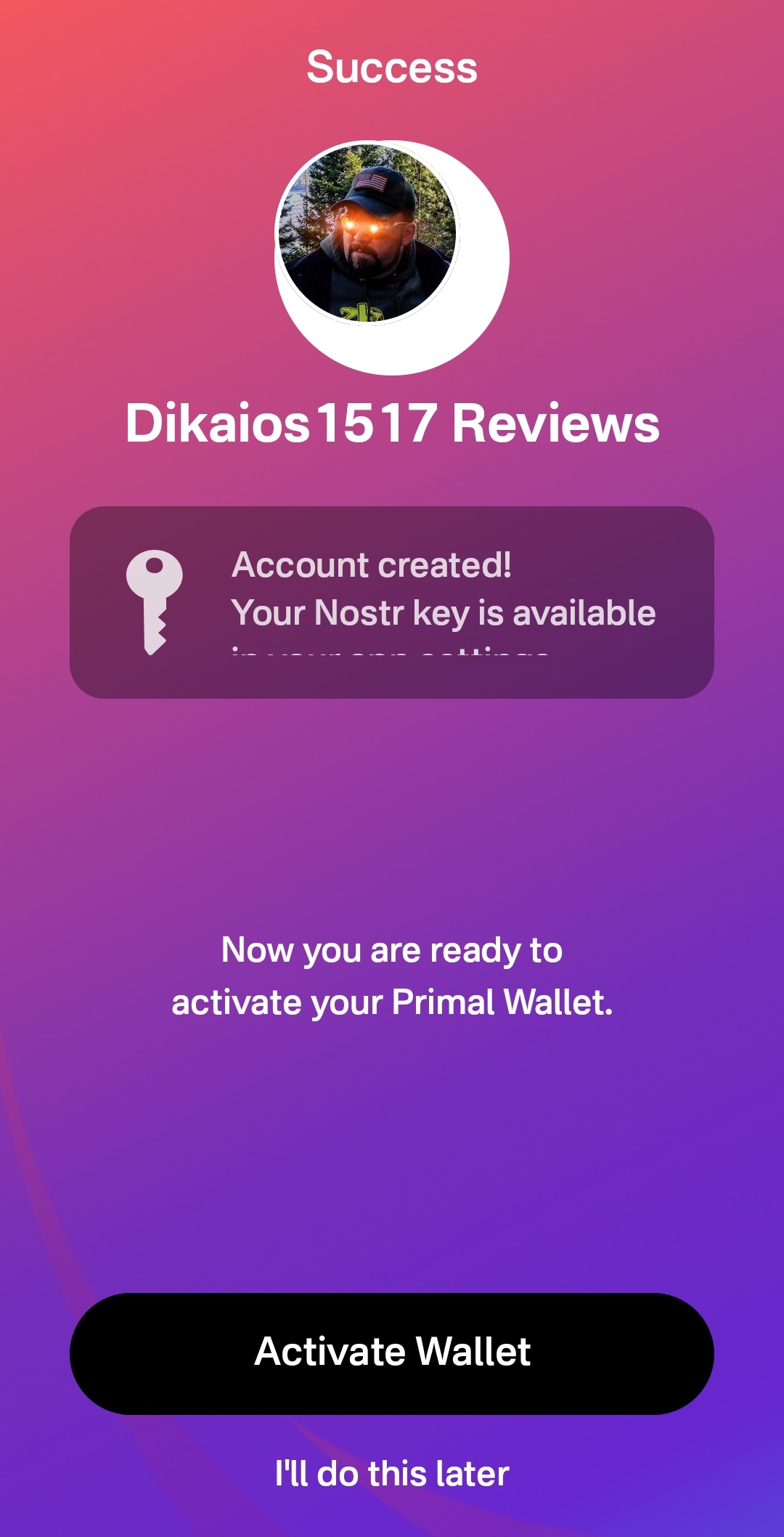

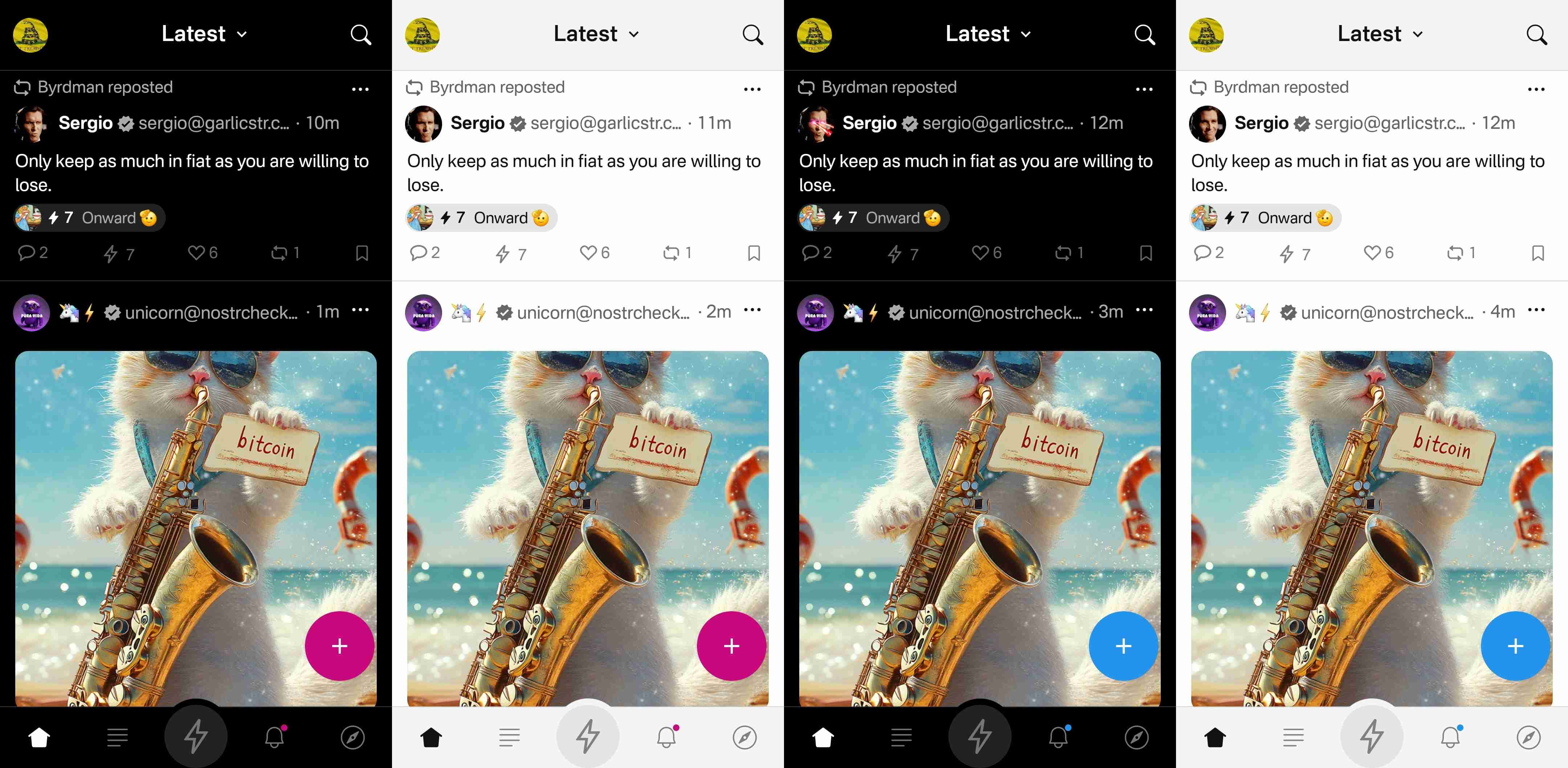

I am happy to present to you the first full review posted to Nostr Reviews: #Primal for #Android!

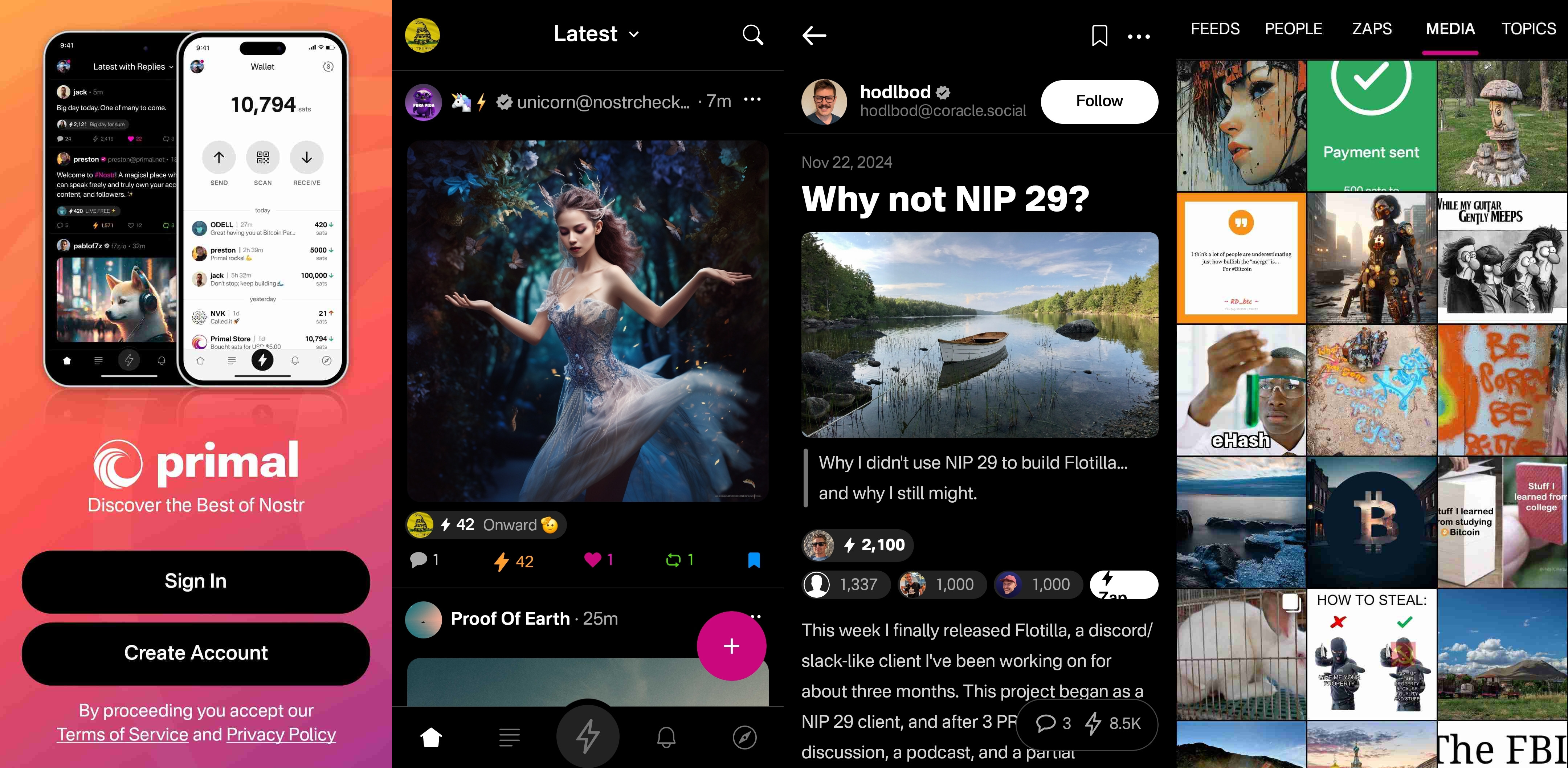

Primal has its origins as a micro-blogging, social media client, though it is now expanding its horizons into long-form content. It was first released only as a web client in March of 2023, but has since had a native client released for both iOS and Android. All of Primal's clients recently had an update to Primal 2.0, which included both performance improvements and a number of new features. This review will focus on the Android client specifically, both on phone and tablet.

Since Primal has also added features that are only available to those enrolled in their new premium subscription, it should also be noted that this review will be from the perspective of a free user. This is for two reasons. First, I am using an alternate npub to review the app, and if I were to purchase premium at some time in the future, it would be on my main npub. Second, despite a lot of positive things I have to say about Primal, I am not planning to regularly use any of their apps on my main account for the time being, for reasons that will be discussed later in the review.

The application can be installed through the Google Play Store, nostr:npub10r8xl2njyepcw2zwv3a6dyufj4e4ajx86hz6v4ehu4gnpupxxp7stjt2p8, or by downloading it directly from Primal's GitHub. The full review is current as of Primal Android version 2.0.21. Updates to the review on 4/30/2025 are current as of version 2.2.13.

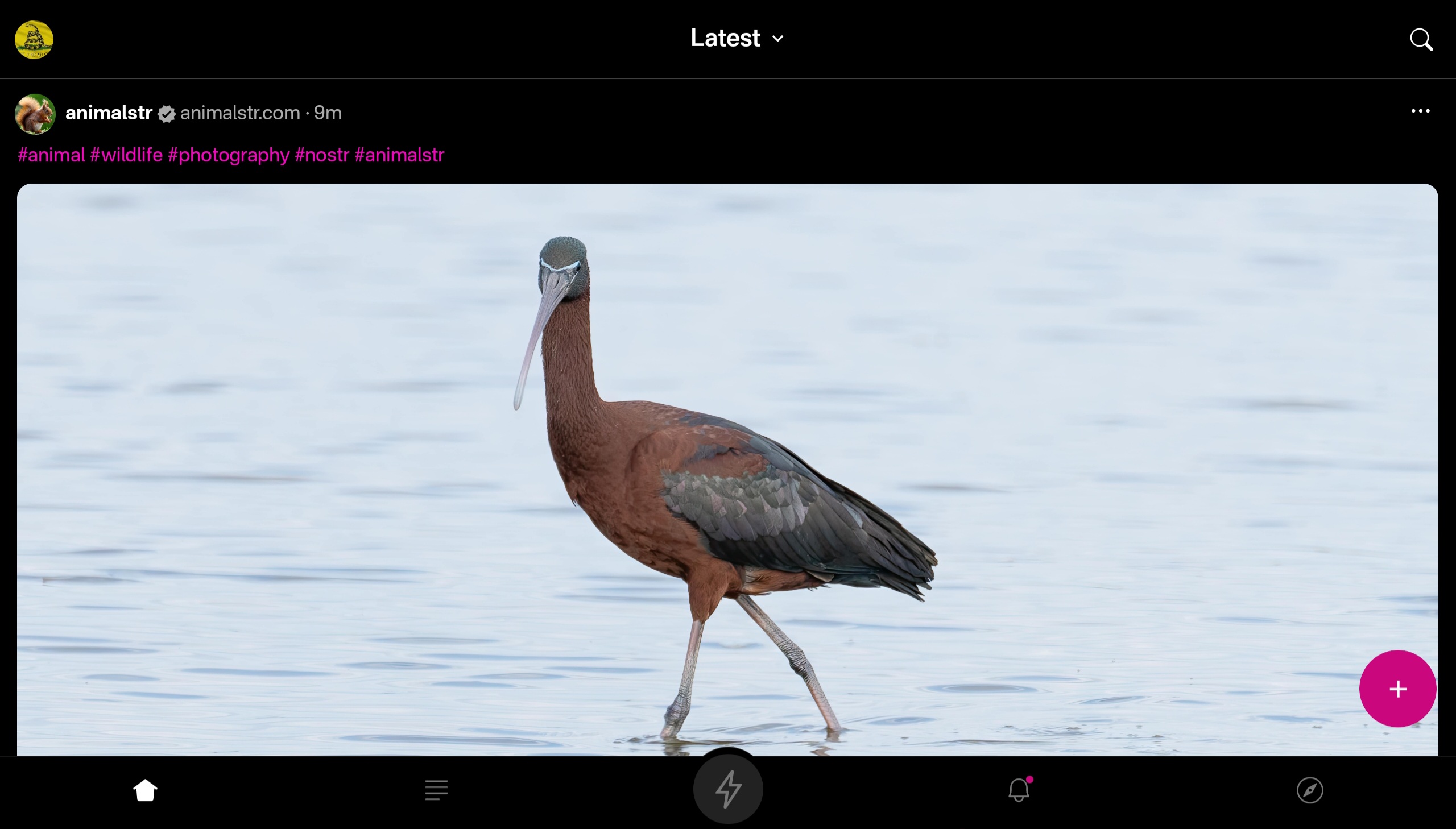

In the ecosystem of "notes and other stuff," Primal is predominantly in the "notes" category. It is geared toward users who want a social media experience similar to Twitter or Facebook, with an infinite scrolling feed of notes to interact with. However, there is some "other stuff" included to complement this primary focus on short- and long-form notes, including a built-in Lightning wallet powered by #Strike, a robust advanced search, and a media-only feed.

Overall Impression

Score: 4.4 / 5 (Updated 4/30/2025)

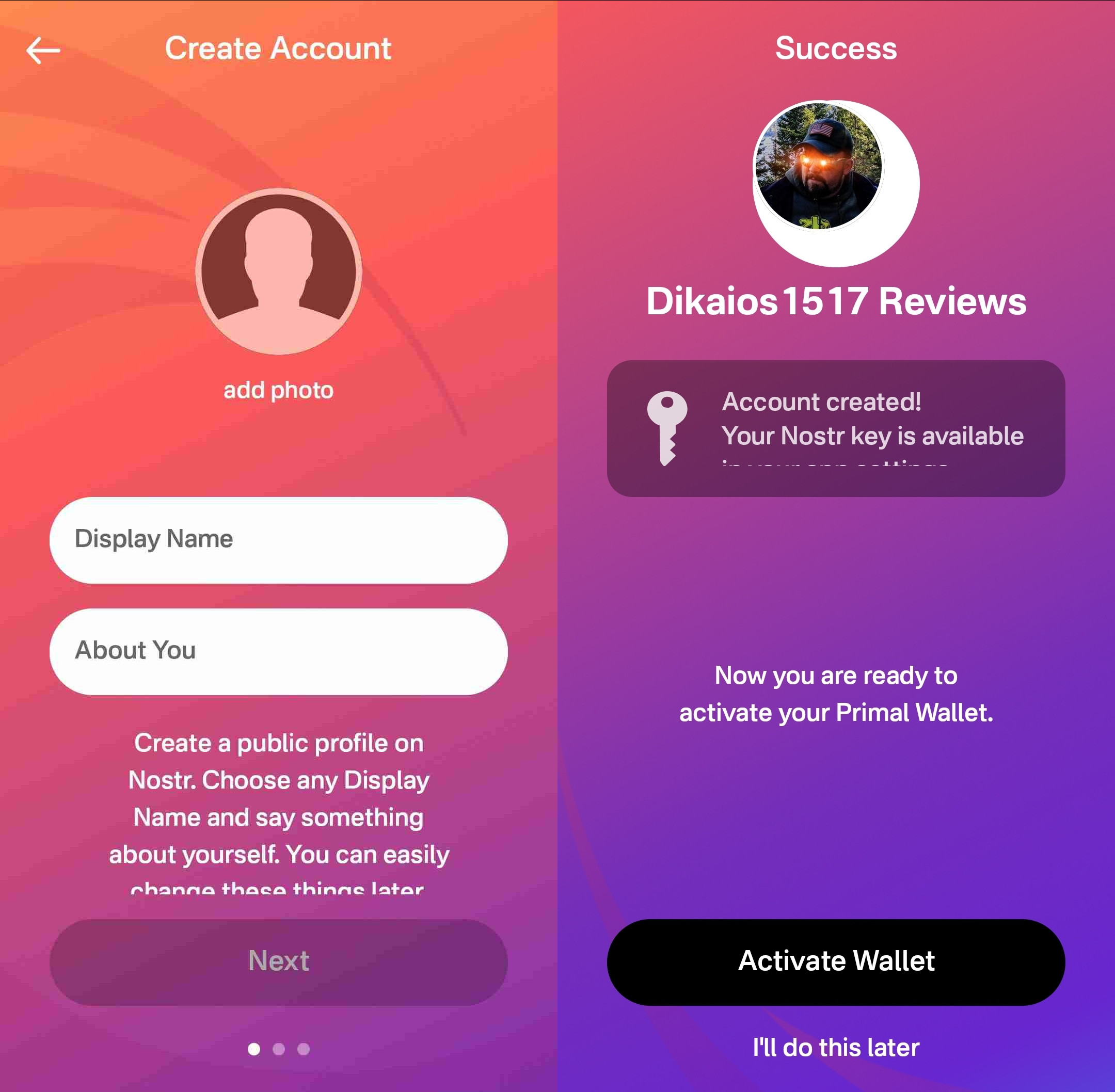

Primal may well be the most polished UI of any Nostr client native to Android. It is incredibly well designed and thought out, with all of the icons and settings in the places a user would expect to find them. It is also incredibly easy to get started on Nostr via Primal's sign-up flow. The only two things that will be foreign to new users are the lack of any need to set a password or give an email address, and the prompt to optionally set up the wallet.

Complaints prior to the 2.0 update about Primal being slow and clunky should now be completely alleviated. I experienced only quick load times and snappy UI controls, apart from a couple of very minor exceptions and the loading of DVM-based feeds, which are outside of Primal's control.

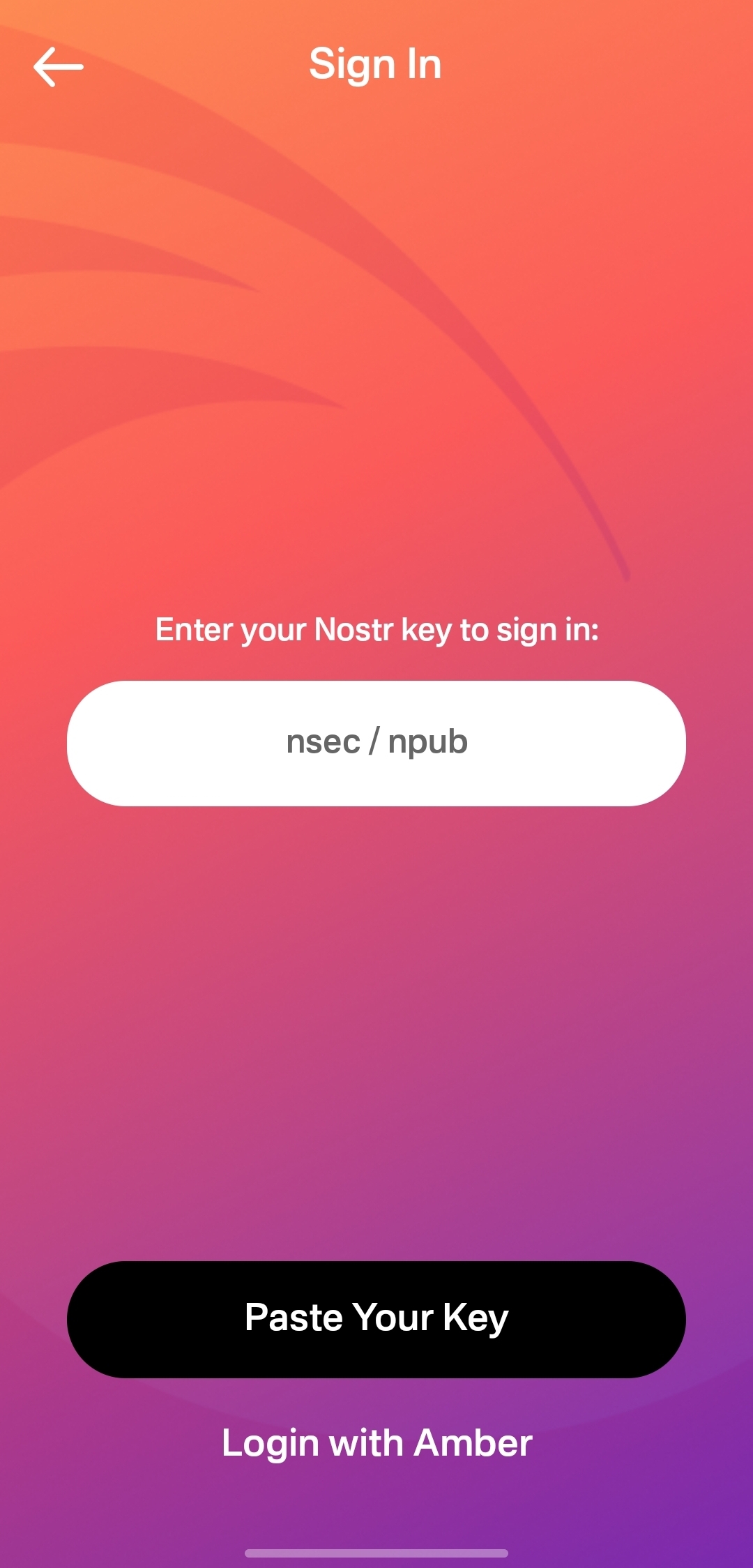

Primal is not, however, a client that I would recommend for the power-user. Control over preferred relays is minimal and does not allow the user to determine which relays they write to and which they only read from. Though you can use your own wallet, it will not appear within the wallet interface, which only works with the custodial wallet from Strike. Moreover, and most egregiously, the only way for existing users to log in is by pasting their nsec, as Primal does not support either the Android signer or remote signer options for users to protect their private key at this time. This lack of signer support is the primary reason the client received such a low overall score. If even one form of external signer login is added to Primal, the score will be amended to 4.2 / 5, and if both Android signer and remote signer support are added, it will increase to 4.5.

Update: As of version 2.2.13, Primal now supports the Amber Android signer! One of the most glaring issues with the app has now been remedied and as promised, the overall score above has been increased.

Another downside to Primal is that it still utilizes an outdated direct message specification that leaks metadata that can be readily seen by anyone on the network. While the content of your messages remains encrypted, anyone can see who you are messaging with, and when. This also means that you will not see any DMs from users who are messaging from a client that has moved to the latest, and far more private, messaging spec.

That said, the beautiful thing about Nostr as a protocol is that users are not locked into any particular client. You may find Primal to be a great client for your average #bloomscrolling and zapping memes, but opt for a different client for more advanced uses and for direct messaging.

Features

Primal has a lot of features users would expect from any Nostr client that is focused on short-form notes, but it also packs in a lot of features that set it apart from other clients, and that showcase Primal's obvious prioritization of a top-tier user experience.

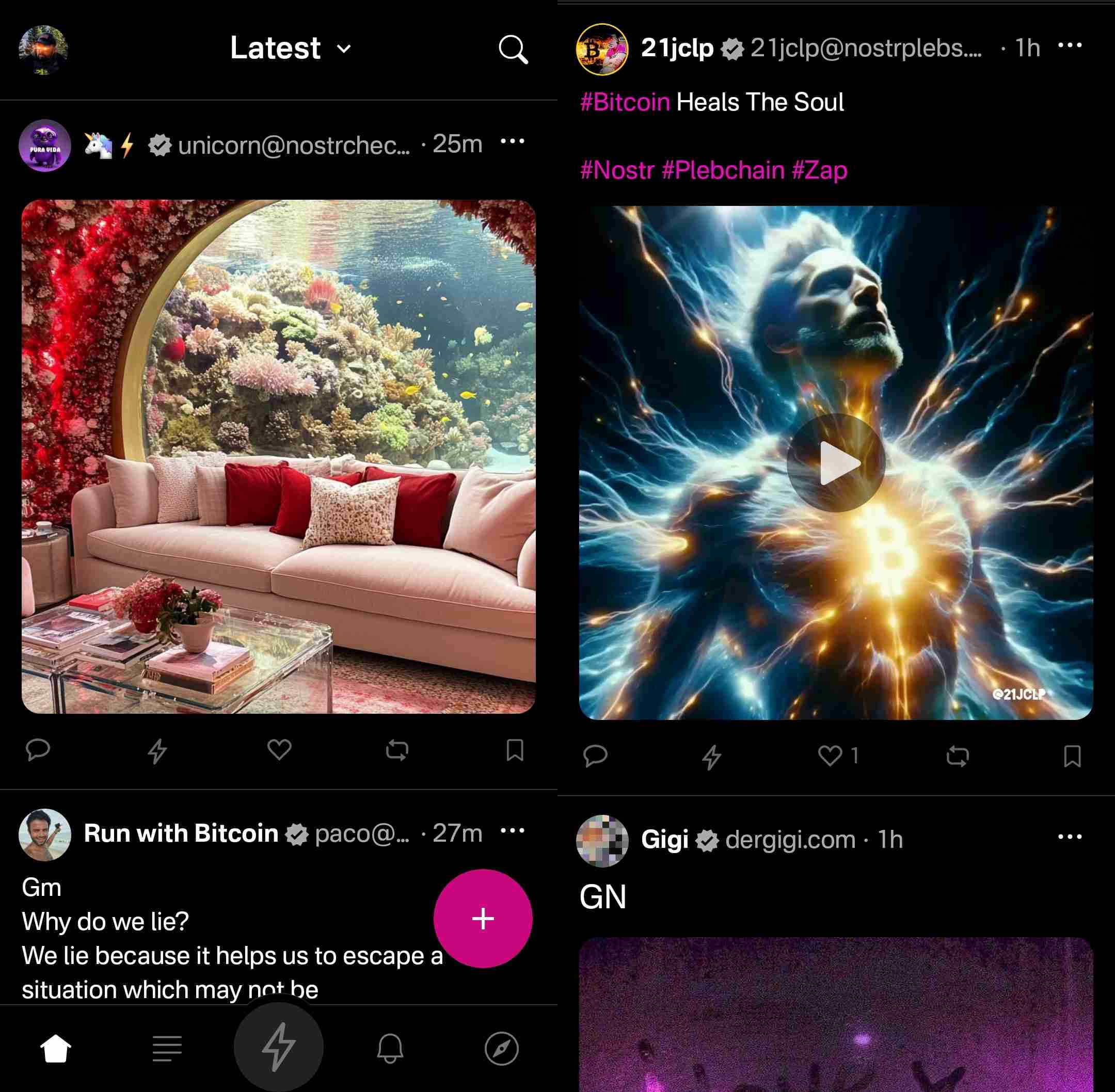

Home Feed

By default, the infinitely scrolling Home feed displays notes from those you currently follow in chronological order. This is traditional Nostr at its finest, and made all the more immersive by the choice to have all distracting UI elements quickly hide themselves from view as you begin to scroll down the feed. They return just as quickly when you begin to scroll back up.

Scrolling the feed is incredibly fast, with no noticeable choppiness and minimal media pop-in if you are on a decent internet connection.

Helpfully, it is easy to get back to the top of the feed whenever there is a new post to be viewed, as a bubble will appear with the profile pictures of the users who have posted since you started scrolling.

Interacting With Notes

Interacting with a note in the feed can be done via the very recognizable icons at the bottom of each post. You can comment, zap, like, repost, and/or bookmark the note.

Notably, tapping on the zap icon will immediately zap the note your default amount of sats, making zapping incredibly fast, especially when using the built-in wallet. Long pressing on the zap icon will open up a menu with a variety of amounts, along with the ability to zap a custom amount. All of these amounts, and the messages that are sent with the zap, can be customized in the application settings.

Users who are familiar with Twitter or Instagram will feel right at home with only having one option for "liking" a post. However, users from Facebook or other Nostr clients may wonder why they don't have more options for reactions. This is one of those things where users who are new to Nostr probably won't notice they are missing out on anything at all, while users familiar with clients like #Amethyst or #noStrudel will miss the ability to react with a 🤙 or a 🫂.

It's a similar story with the bookmark option. While this is a nice bit of feature parity for Twitter users, for those already used to the ability to have multiple customized lists of bookmarks, or at minimum have the ability to separate them into public and private, it may be a disappointment that they have no access to the bookmarks they already built up on other clients. Primal offers only one list of bookmarks for short-form notes and they are all visible to the public. However, you are at least presented with a warning about the public nature of your bookmarks before saving your first one.

Yet, I can't dock the Primal team much for making these design choices, as they are understandable for Primal's goal of being a welcoming client for those coming over to Nostr from centralized platforms. They have optimized for the onboarding of new users, rather than for those who have been around for a while, and there is absolutely nothing wrong with that.

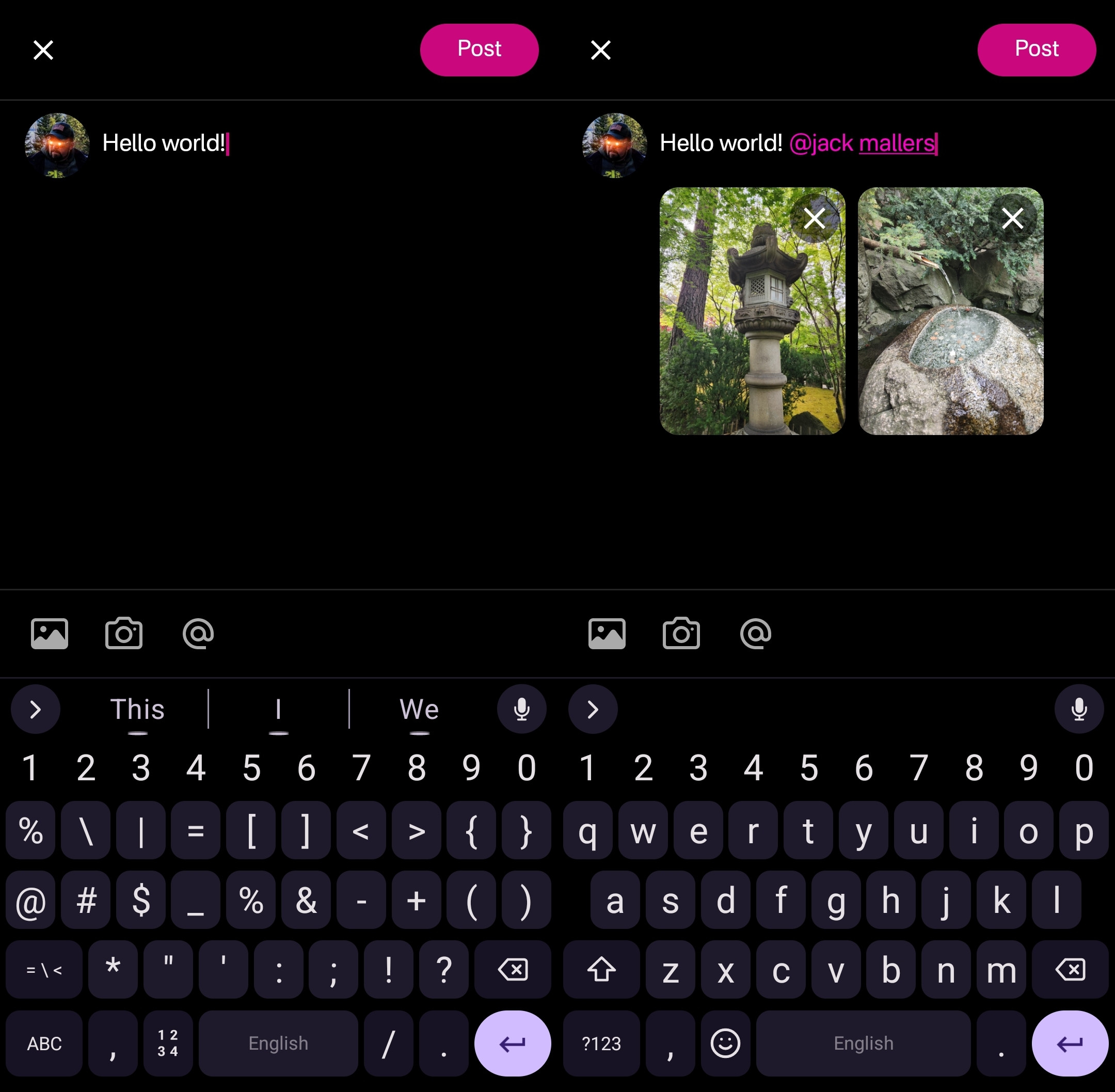

Post Creation

Composing posts in Primal is as simple as it gets. Accessed by tapping the obvious circular button with a "+" on it in the lower right of the Home feed, most of what you could need is included in the interface, and nothing you don't.

Your device's default keyboard loads immediately, and you can start typing away.

There are options for adding images from your gallery, or taking a picture with your camera, both of which will result in the image being uploaded to Primal's media-hosting server. If you prefer to host your media elsewhere, you can simply paste the link to that media into your post.

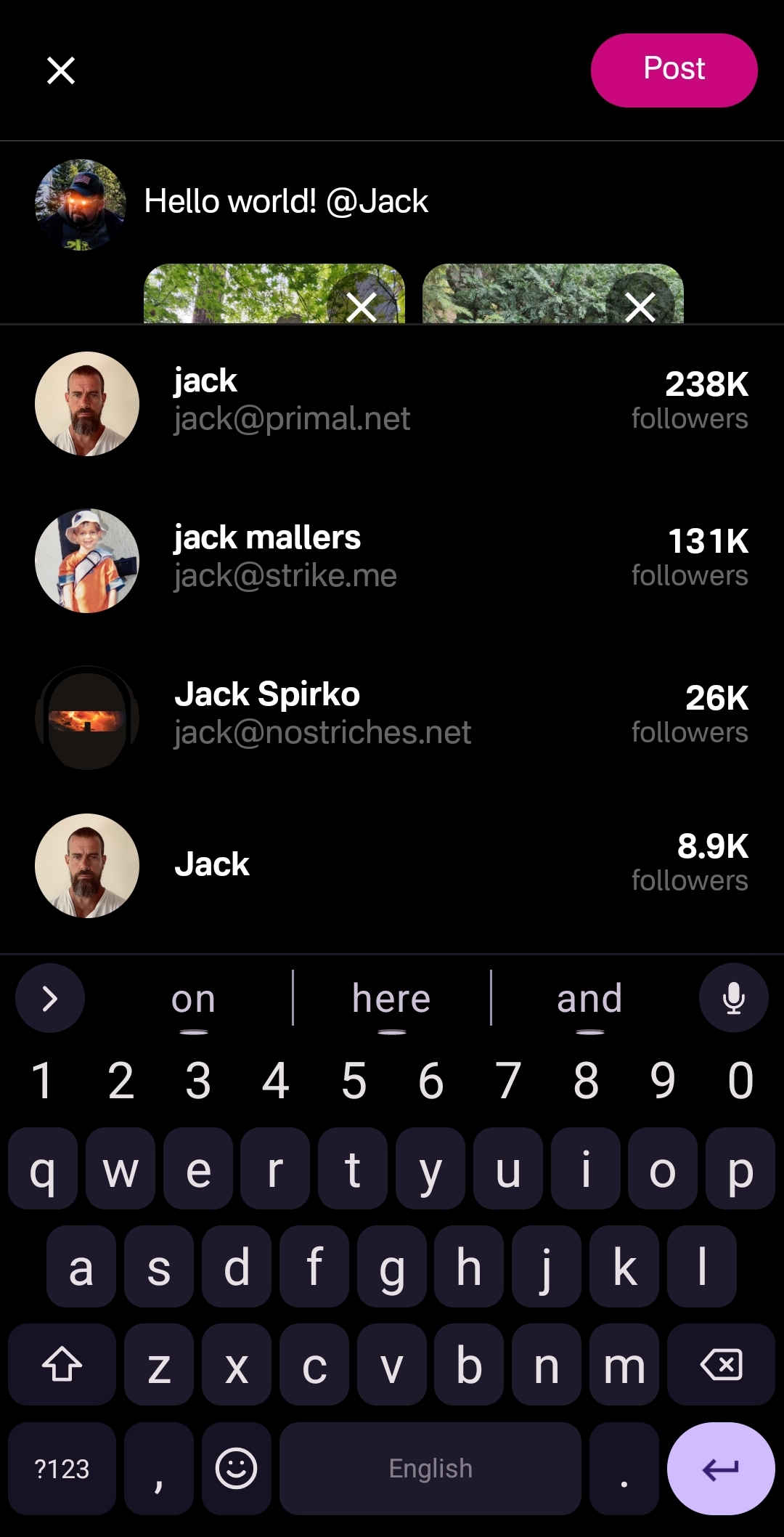

There is also an @ icon as a tip-off that you can tag other users. Tapping on this simply types "@" into your note and brings up a list of users. All you have to do to narrow down the user you want to tag is continue typing their handle, Nostr address, or paste in their npub.

This can get mixed results in other clients, which sometimes have a hard time finding particular users when typing in their handle, forcing you to have to remember their Nostr address or go hunt down their npub by another means. Not so with Primal, though. I had no issues tagging anyone I wanted by simply typing in their handle.

Of course, when you are tagging someone well known, you may find that there are multiple users posing as that person. Primal helps you out here, though. Usually the top result is the person you want, as Primal places them in order of how many followers they have. This is quite reliable right now, but there is nothing stopping someone from spinning up an army of bots to follow their fake accounts, rendering follower count useless for determining which account is legitimate. It would be nice to see these results ranked by web-of-trust, or at least an indication of how many users you follow who also follow the users listed in the results.
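The suggested ranking could look something like the sketch below. It is only an illustration of the idea, not how Primal or any other client ranks results: the `rank_candidates` function, the account names, and the follower data are all made up. Candidates are ordered first by how many of the accounts you follow also follow them, with raw follower count used only to break ties.

```python
def rank_candidates(candidates, my_follows, followers_of):
    """Sort candidate accounts by how many accounts I follow also follow them.

    candidates   -- list of account ids matching the typed handle
    my_follows   -- set of account ids the current user follows
    followers_of -- dict mapping account id -> set of follower ids
    """
    def score(account):
        followers = followers_of.get(account, set())
        trusted = len(followers & my_follows)
        # Raw follower count only breaks ties, so bought followers
        # cannot outrank followers the user already trusts.
        return (trusted, len(followers))

    return sorted(candidates, key=score, reverse=True)


# Made-up data: the impostor has more followers, but none that I follow.
my_follows = {"alice", "bob", "carol"}
followers_of = {
    "jack_real": {"alice", "bob", "dave"},
    "jack_fake": {"bot1", "bot2", "bot3", "bot4", "bot5"},
}
print(rank_candidates(["jack_fake", "jack_real"], my_follows, followers_of))
# prints ['jack_real', 'jack_fake']
```

An army of bots raises only the tie-breaking number here, so it would take follows from people already in your network to move a fake account up the list.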

Once you are satisfied with your note, the "Post" button is easy to find in the top right of the screen.

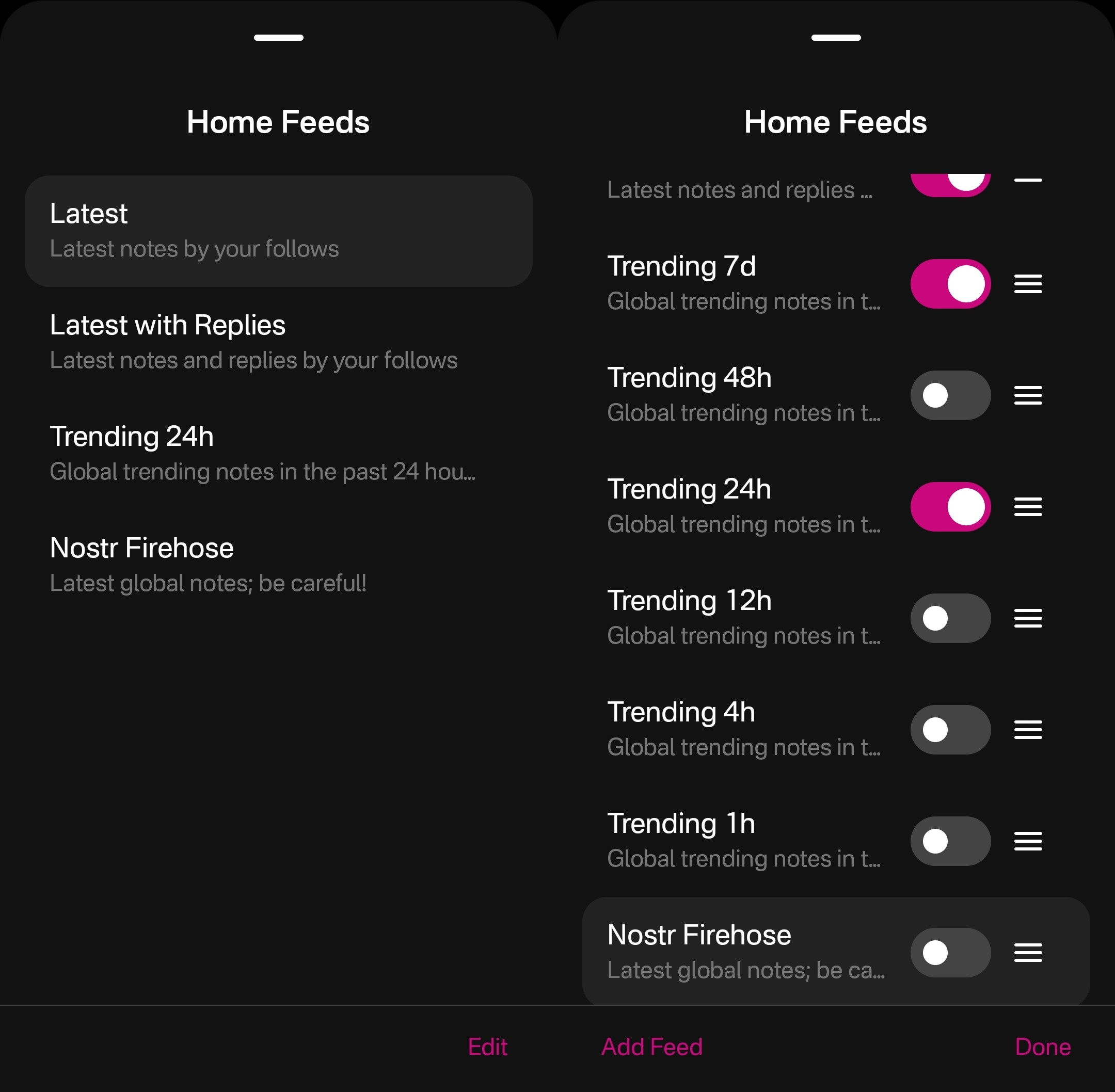

Feed Selector and Marketplace

Primal's Home feed really shines when you open up the feed selection interface, and find that there are a plethora of options available for customizing your view. By default, it only shows four options, but tapping "Edit" opens up a new page of available toggles to add to the feed selector.

The options don't end there, though. Tapping "Add Feed" will open up the feed marketplace, where an ever-growing number of custom feeds can be found, some created by Primal and some created by others. This feed marketplace is available to a few other clients, but none have integrated it as closely with their Home feeds as Primal has.

Unfortunately, as great as these custom feeds are, this was also the feature where I ran into the most bugs while testing out the app.

One of these bugs was while selecting custom feeds. Occasionally, these feed menu screens would become unresponsive and I would be unable to confirm my selection, or even use the back button on my device to back out of the screen. However, I was able to pull the screen down to close it and re-open the menu, and everything would be responsive again.

This only seemed to occur when I spent 30 seconds or more on the same screen, so I imagine that most users won't encounter it much in their regular use.

Another UI bug occurred for me while in the feed marketplace. I could scroll down the list of available feeds, but attempting to scroll back up the feed would often close the interface entirely instead, as though I had pulled the screen down from the top, when I was swiping in the middle of the screen.

The last of these bugs occurred when selecting a long-form "Reads" feed while in the menu for the Home feed. The menu would allow me to add this feed and select it to be displayed, but it would fail to load the feed once selected, stating "There is no content in this feed." Going to a different page within the app and then going back to the Home tab would automatically remove the long-form feed from view, and reset back to the most recently viewed short-form "Notes" feed, though the long-form feed would still be available to select again. The results were similar when selecting a short-form feed for the Reads feed.

I would suggest that if long-form and short-form feeds are going to be displayed in the same list, and yet not be able to be displayed in the same feed, the application should present an error message when attempting to add a long-form feed for the Home feed or a short-form feed for the Reads feed, and encourage the user to add it to the proper feed instead.

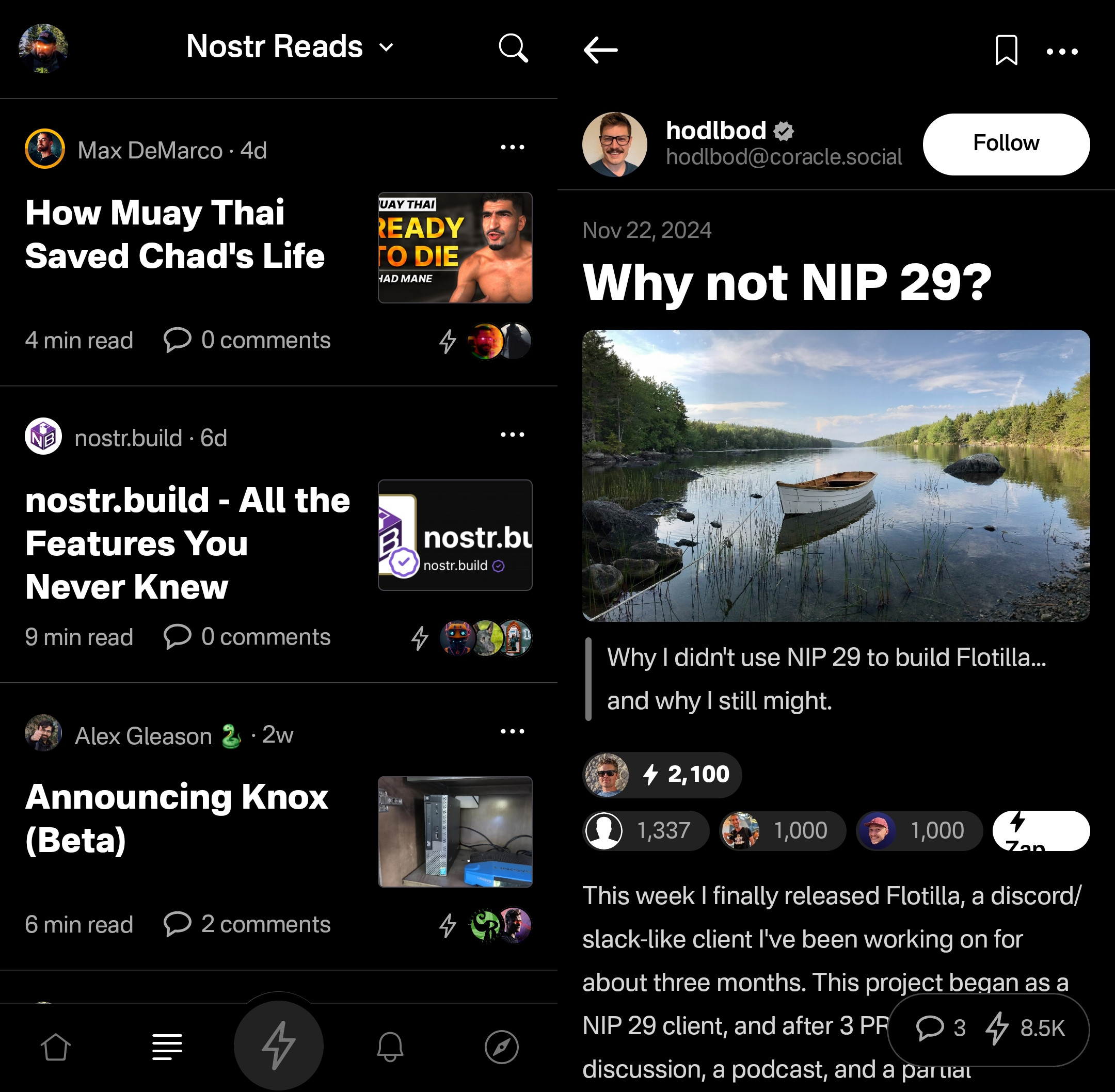

Long-Form "Reads" Feed

A brand new feature in Primal 2.0, users can now browse and read long-form content posted to Nostr without having to go to a separate client. Primal now has a dedicated "Reads" feed to browse and interact with these articles.

This feed displays the author and title of each article or blog, along with an image, if available. Quite conveniently, it also lets you know the approximate amount of time it will take to read a given article, so you can decide if you have the time to dive into it now, or come back later.

Noticeably absent from the Reads feed, though, is the ability to compose an article of your own. This is another understandable design choice for a mobile client. Composing a long-form note on a smart-phone screen is not a good time. Better to be done on a larger screen, in a client with a full-featured text editor.

Tapping an article will open up an attractive reading interface, with the ability to bookmark for later. These bookmarks are a separate list from your short-form note bookmarks so you don't have to scroll through a bunch of notes you bookmarked to find the article you told yourself you would read later and it's already been three weeks.

While you can comment on the article or zap it, you will notice that you cannot repost or quote-post it. It's not that you can't do so on Nostr. You absolutely can in other clients. In fact, you can do so on Primal's web client, too. However, Primal on Android does not handle rendering long-form note previews in the Home feed, so they have simply left out the option to share them there. See below for an example of a quote-post of a long-form note in the Primal web client vs the Android client.

Primal Web:

Primal Android:

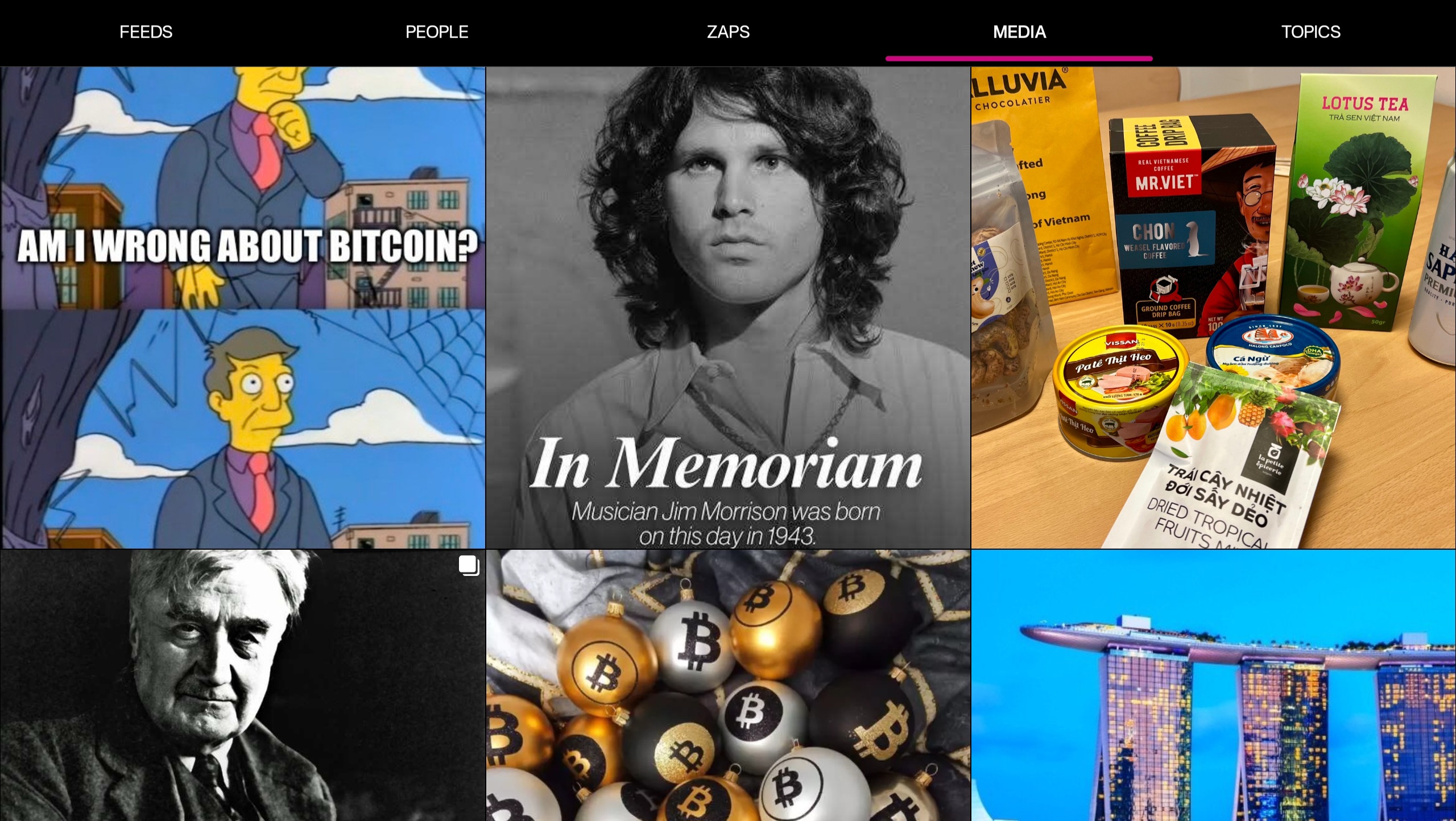

The Explore Tab

Another unique feature of the Primal client is the Explore tab, indicated by the compass icon. This tab is dedicated to discovering content from outside your current follow list. You can find the feed marketplace here, and add any of the available feeds to your Home or Reads feed selections. You can also find suggested users to follow in the People tab. The Zaps tab will show you who has been sending and receiving large zaps. Make friends with the generous ones!

The Media tab gives you a chronological feed of just media, displayed in a tile view. This can be great when you are looking for users who post dank memes, or incredible photography on a regular basis. Unfortunately, it appears that there is no way to filter this feed for sensitive content, and so you do not have to scroll far before you see pornographic material.

Indeed, it does not appear that filters for sensitive content are available in Primal for any feed. The app is kind enough to give a minimal warning that objectionable content may be present when selecting the "Nostr Firehose" option in your Home feed, with a brief "be careful" in the feed description, but there is not even that much of a warning here for the media-only feed.

The media-only feed doesn't appear to be quite as bad as the Nostr Firehose feed, so there must be some form of filtering already taking place, rather than being a truly global feed of all media. Yet, occasional sensitive content still litters the feed and is unavoidable, even for users who would rather not see it. There are, of course, ways to mute particular users who post such content, if you don't want to see it a second time from the same user, but that is a never-ending game of whack-a-mole, so your only realistic choices in Primal are currently to either avoid the Nostr Firehose and media-only feeds, or determine that you can put up with regularly scrolling past often graphic content.

This is probably the only choice Primal has made that is not friendly to new users. Most clients these days will have some protections in place to hide sensitive content by default, but still allow the user to toggle those protections off if they so choose. Some of them hide posts flagged as sensitive content altogether, others just blur the images unless the user taps to reveal them, and others simply blur all images posted by users you don't follow. If Primal wants to target new users who are accustomed to legacy social media platforms, they really should follow suit.

The final tab is titled "Topics," but it is really just a list of popular hashtags, which appear to be arranged by how often they are being used. This can be good for finding things that other users are interested in talking about, or finding specific content you are interested in.

If you tap on any topic in the list, it will display a feed of notes that include that hashtag. What's better, you can add it as a feed option you can select on your Home feed any time you want to see posts with that tag.

The only suggestion I would make to improve this tab is some indication of why the topics are arranged in the order presented. A simple indicator of the number of posts with that hashtag in the last 24 hours, or whatever the interval is for determining their ranking, would more than suffice.

Even with those few shortcomings, Primal's Explore tab makes the client one of the best options for discovering content on Nostr that you are actually interested in seeing and interacting with.

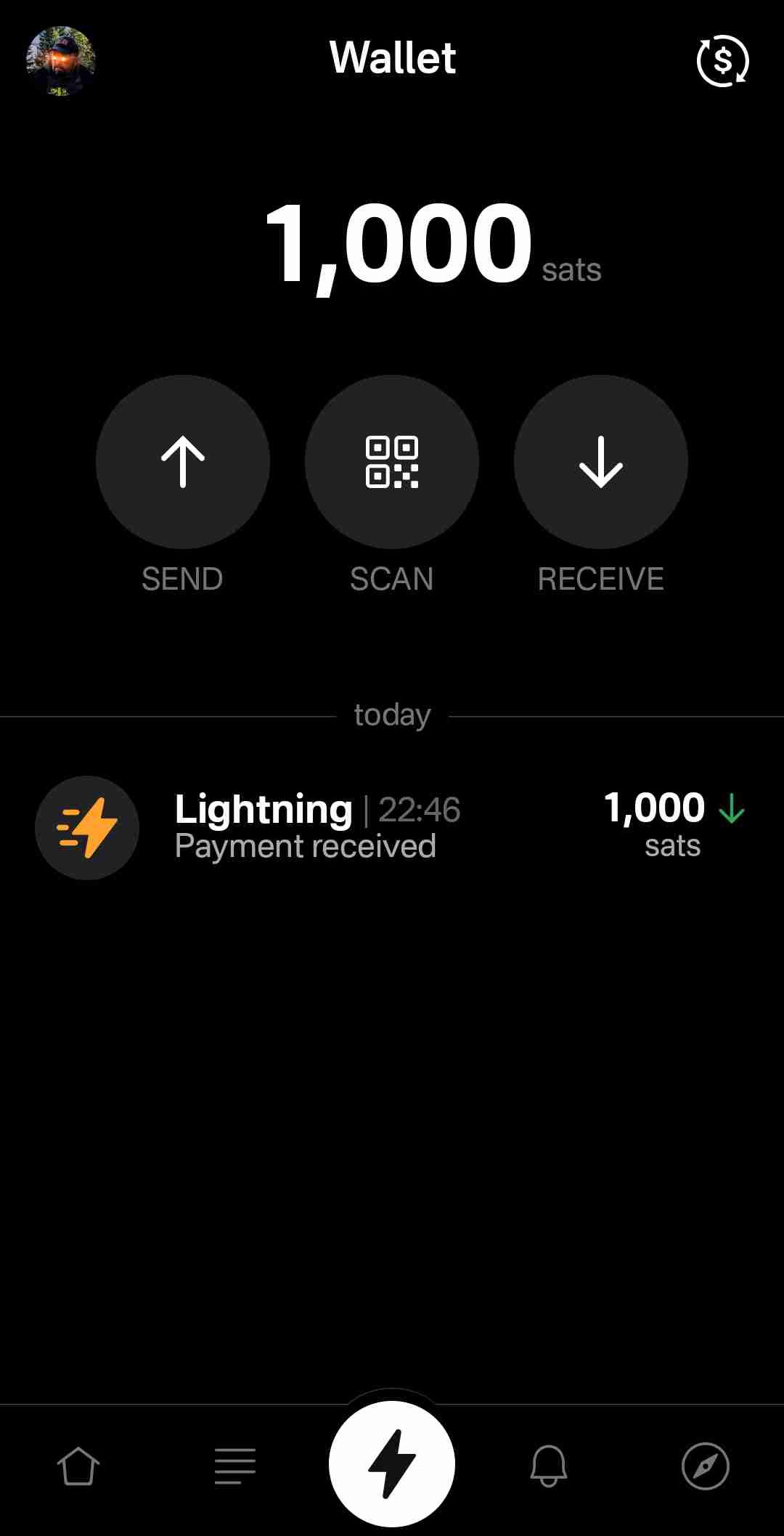

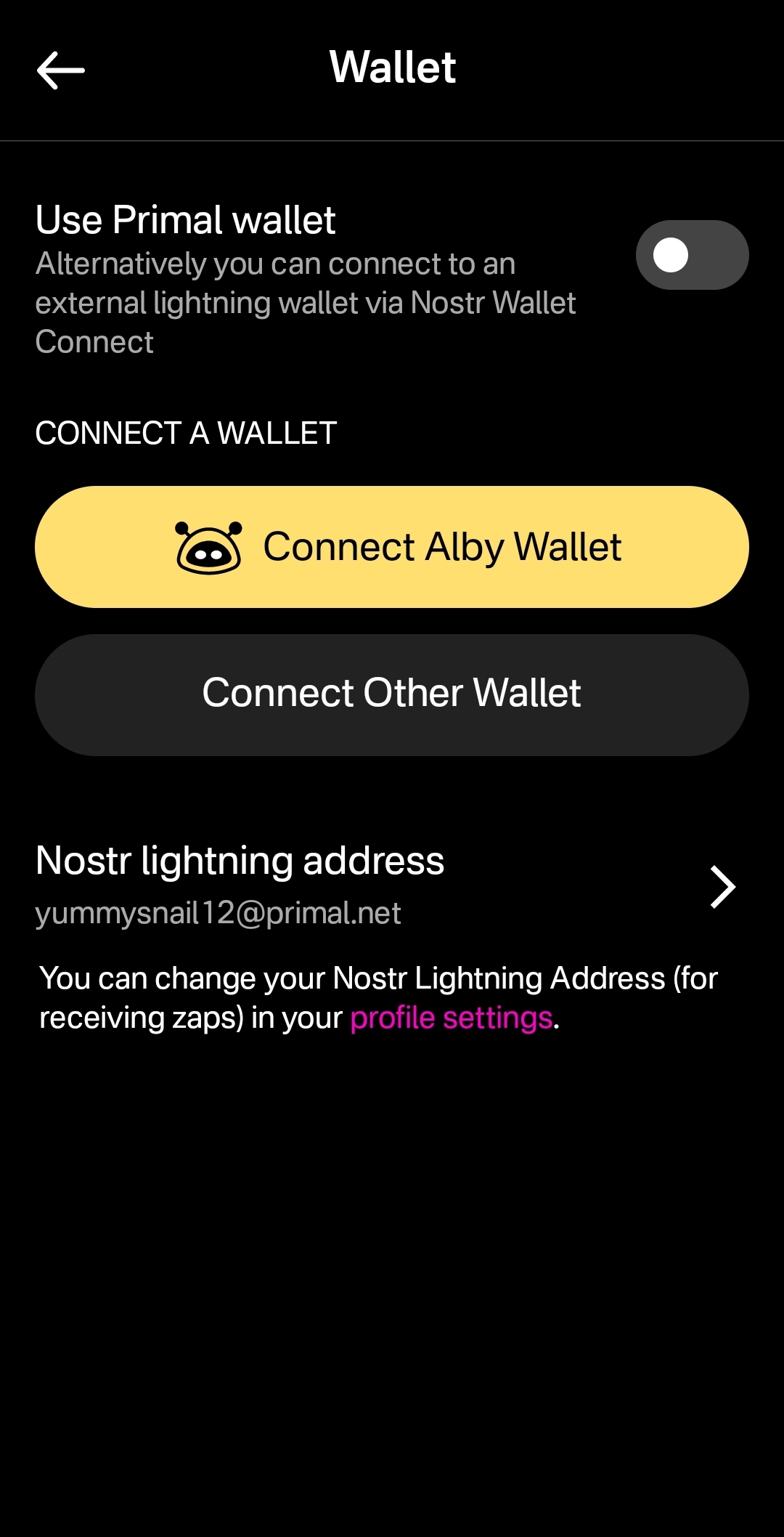

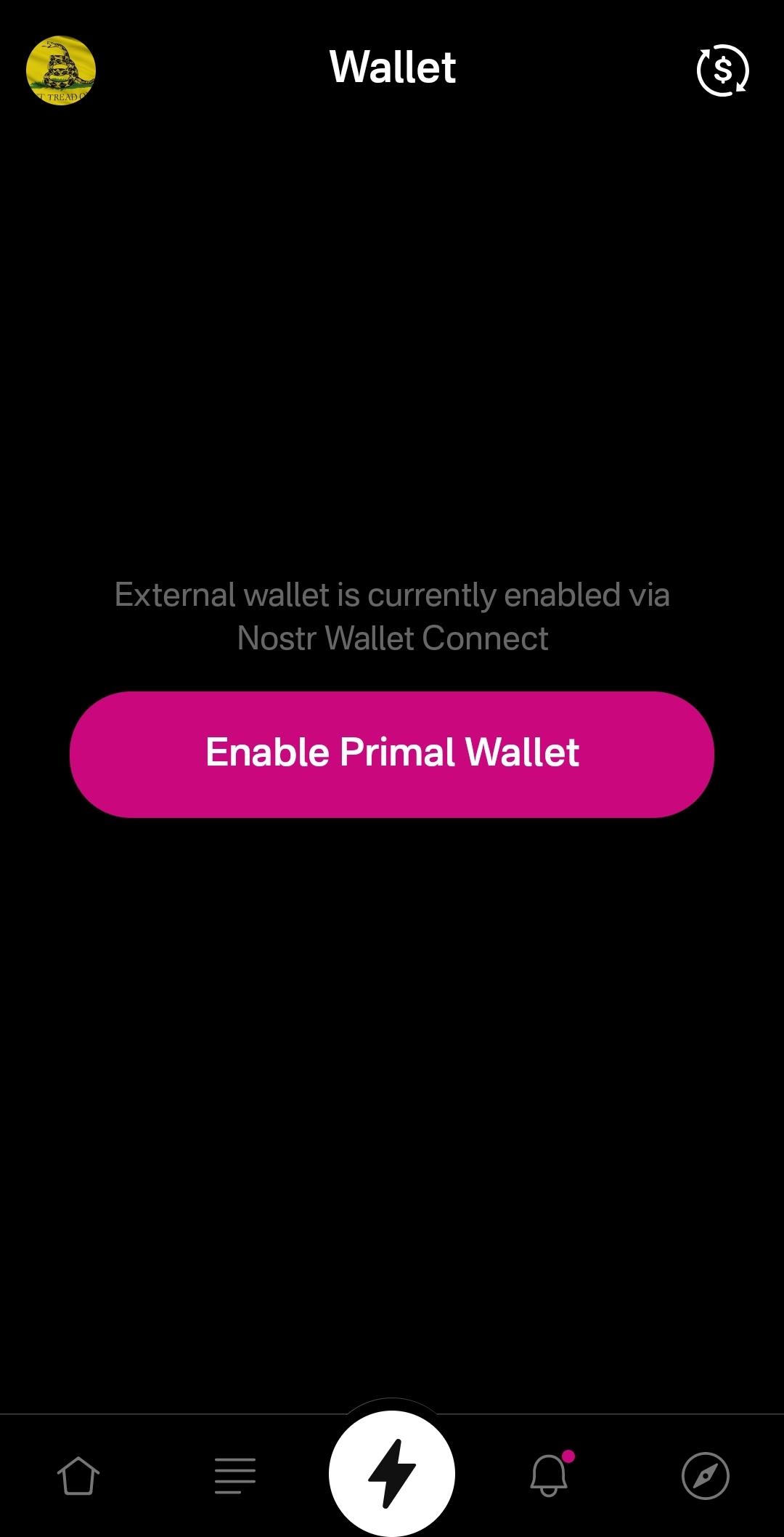

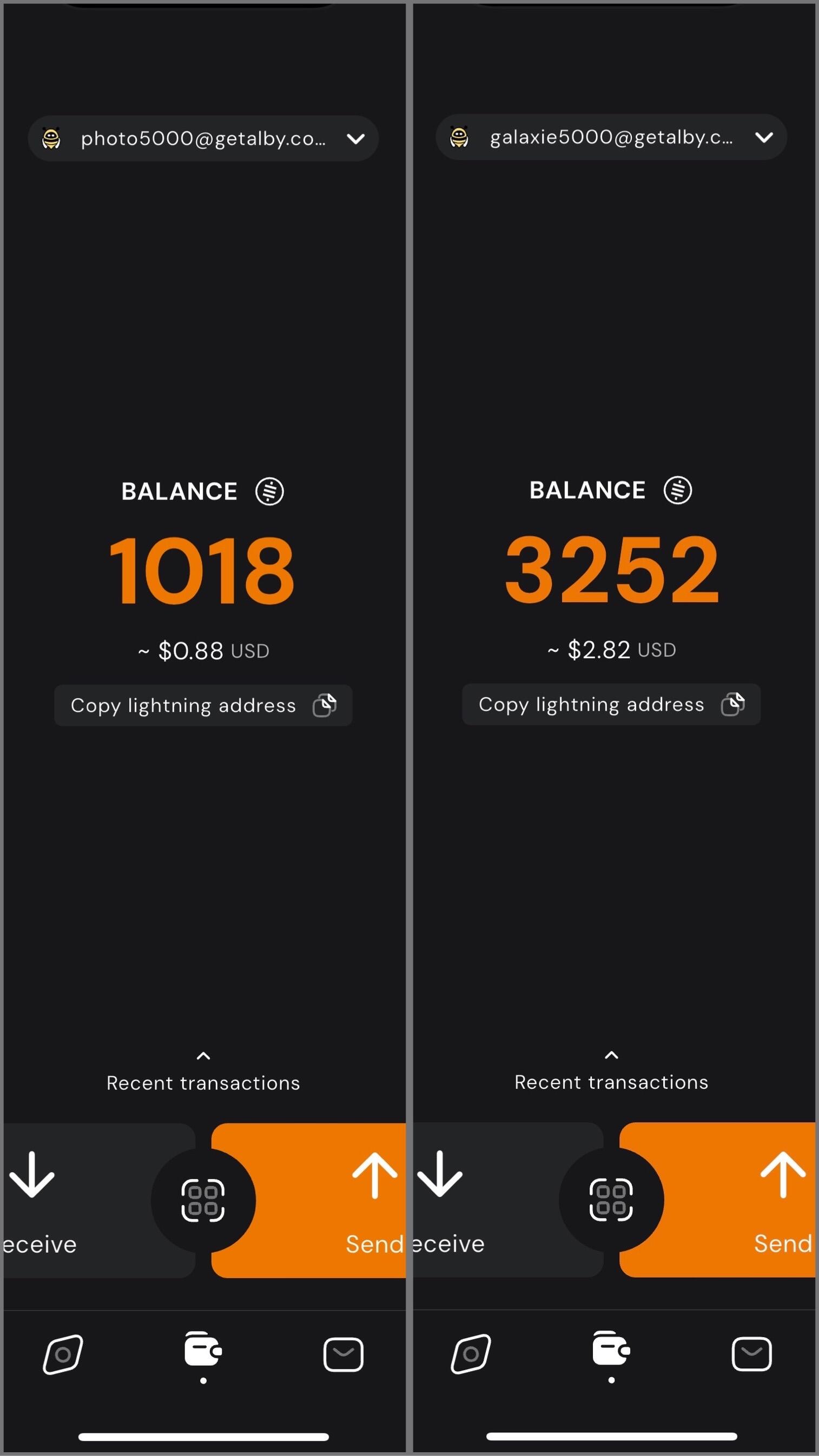

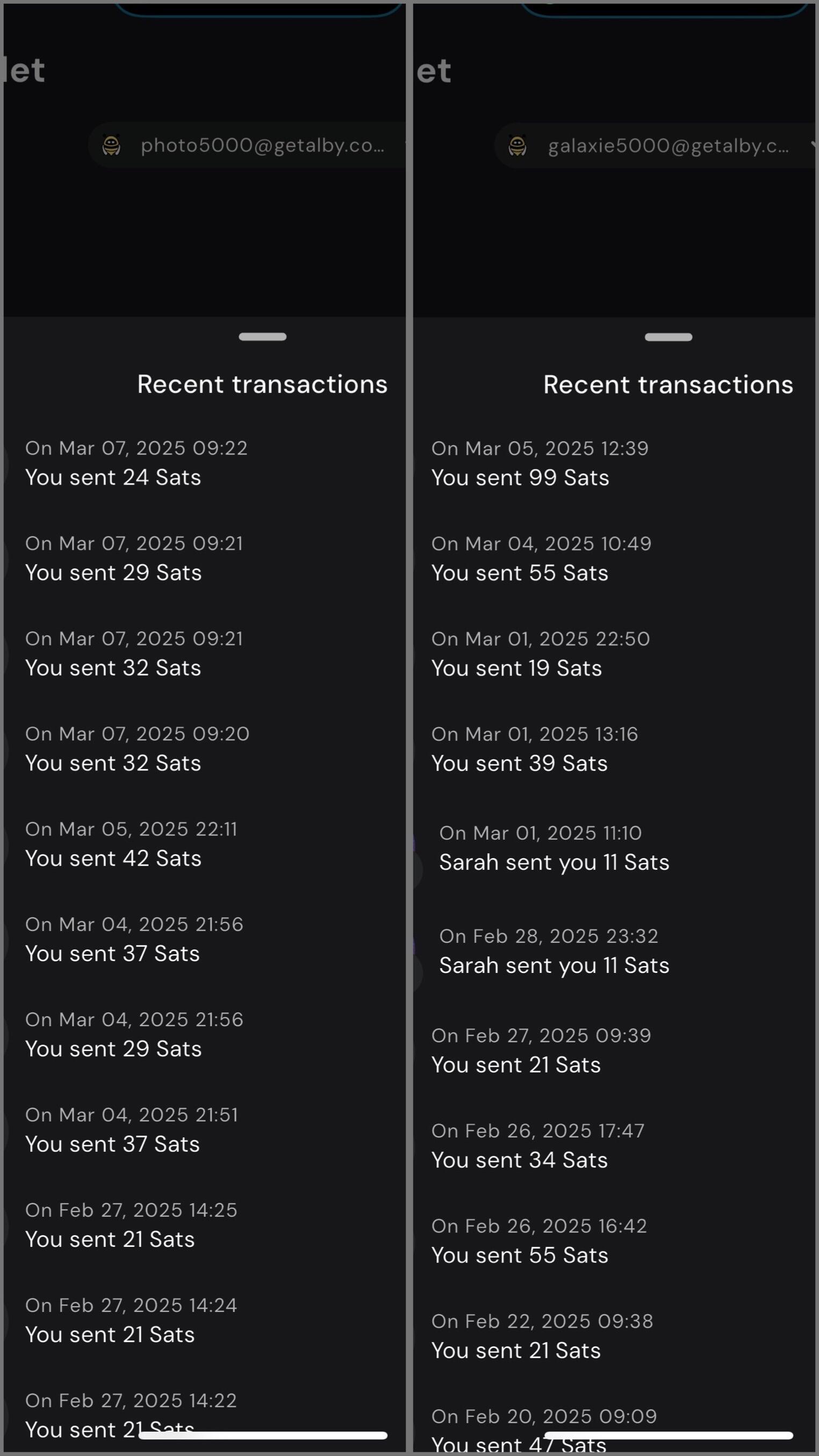

Built-In Wallet

While this feature is completely optional, the icon to access the wallet is the largest of the icons at the bottom of the screen, making you feel like you are missing out on the most important feature of the app if you don't set it up. I could be critical of this design choice, but in many ways I think it is warranted. The built-in wallet is one of the most unique features that Primal has going for it.

Consider: If you are a new user coming to Nostr, who isn't already a Bitcoiner, and you see that everyone else on the platform is sending and receiving sats for their posts, will you be more likely to go download a separate wallet application or use one that is built into your client? I would wager the latter option by a long shot. No need to figure out which wallet you should download, whether you should do self-custody or custodial, or make the mistake of choosing a wallet with unexpected setup fees and no Lightning address so you can't even receive zaps to it. nostr:npub16c0nh3dnadzqpm76uctf5hqhe2lny344zsmpm6feee9p5rdxaa9q586nvr often states that he believes more people will be onboarded to Bitcoin through Nostr than by any other means, and by including a wallet in the Primal client, his team has made adopting Bitcoin that much easier for new Nostr users.

Some of us purists may complain that it is custodial and KYC, but that is an unfortunate necessity in order to facilitate onboarding newcoiners to Bitcoin. This is not intended to be a wallet for those of us who have been using Bitcoin and Lightning regularly already. It is meant for those who are not already familiar with Bitcoin to make it as easy as possible to get off zero, and it accomplishes this better than any other wallet I have ever tried.

In large part, this is because the KYC is very light. It does need the user's legal name, a valid email address, date of birth, and country of residence, but that's it! From there, the user can buy Bitcoin directly through the app, but only in the amount of $4.99 at a time. This is because there is a substantial markup on top of the current market price, due to utilizing whatever payment method the user has set up through their Google Play Store. The markup seemed to be about 19% above the current price, since I could purchase 4,143 sats for $4.99 ($120,415 / Bitcoin), when the current price was about $101,500. But the idea here is not for the Primal wallet to be a user's primary method of stacking sats. Rather, it is intended to get them off zero and have a small amount of sats to experience zapping with, and it accomplishes this with less friction than any other method I know.
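For anyone who wants to check the markup on their own purchase, here is a rough sketch of the arithmetic. The function names are my own, and the inputs are just the numbers from my test purchase; with those inputs it lands close to the per-bitcoin figure quoted above and a markup a little under 19%.

```python
SATS_PER_BTC = 100_000_000  # 1 BTC = 100 million sats


def implied_btc_price(usd_paid: float, sats_received: int) -> float:
    """Effective USD price per whole bitcoin for a small purchase."""
    return usd_paid / sats_received * SATS_PER_BTC


def markup_percent(effective_price: float, market_price: float) -> float:
    """How far the effective price sits above the market price, in percent."""
    return (effective_price / market_price - 1) * 100


# Figures from the purchase described above: $4.99 for 4,143 sats,
# with the market price at roughly $101,500 at the time.
effective = implied_btc_price(4.99, 4_143)
markup = markup_percent(effective, 101_500)

print(f"effective price: ${effective:,.0f} per BTC")
print(f"markup: {markup:.1f}%")
```

Swap in your own purchase amount, sats received, and the market price at the time to see what you actually paid.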

Moreover, the Primal wallet has the features one would expect from any Lightning wallet. You can send sats to any Nostr user or Lightning address, receive via invoice, or scan to pay an invoice. It even has the ability to receive via on-chain. This means users who don't want to pay the markup from buying through Primal can easily transfer sats they obtained by other means into the Primal wallet for zapping, or for using it as their daily-driver spending wallet.

Speaking of zapping, once the wallet is activated, sending zaps is automatically set to use the wallet, and they are fast. Primal gives you immediate feedback that the zap was sent and the transaction shows in your wallet history typically before you can open the interface. I can confidently say that Primal wallet's integration is the absolute best zapping experience I have seen in any Nostr client.

One thing that may not be immediately apparent to new users is that they need to add their Primal Lightning address to their profile details before they can start receiving zaps. So, sending zaps using the wallet is automatic as soon as you activate it, but receiving is not. Ideally, this could be further streamlined, so that Primal automatically adds the Lightning address to the user's profile when the wallet is set up, so long as there is not currently a Lightning address listed.
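To illustrate the kind of streamlining I have in mind, here is a minimal sketch. It assumes the profile is the usual Nostr metadata object with the Lightning address stored in its `lud16` field; the function name and the example addresses are hypothetical, and this is not how Primal actually implements anything.

```python
def with_lightning_address(profile: dict, wallet_address: str) -> dict:
    """Return a copy of the profile with lud16 filled in only if it is empty."""
    updated = dict(profile)
    if not updated.get("lud16"):
        # No Lightning address listed yet, so use the new wallet's address.
        updated["lud16"] = wallet_address
    return updated


# Hypothetical profiles: one brand-new user, one who already has an address.
new_user = {"name": "alice", "about": "just joined"}
existing_user = {"name": "bob", "lud16": "bob@example.com"}

print(with_lightning_address(new_user, "alice@wallet.example"))
print(with_lightning_address(existing_user, "bob@wallet.example"))
```

The new user gets the wallet address added, while the existing address on the second profile is left untouched.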

Of course, if you already have a Lightning wallet, you can connect it to Primal for zapping, too. We will discuss this further in the section dedicated to zap integration.

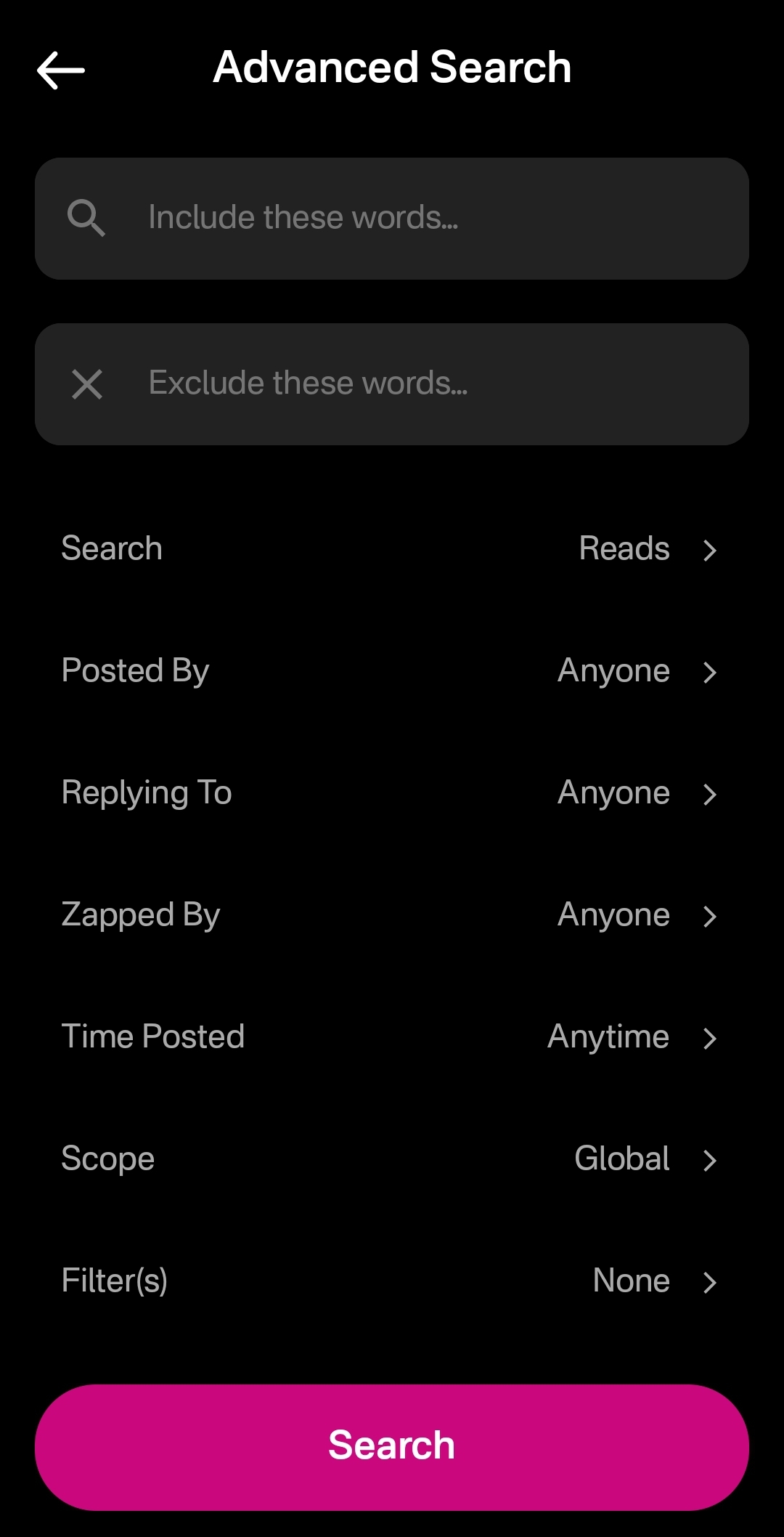

Advanced Search

Search has always been a tough nut to crack on Nostr, since it is highly dependent on which relays the client is pulling information from. Primal has sought to resolve this issue, among others, by running a caching relay that pulls notes from a number of relays, stores them locally, and performs some spam filtering. This allows for much faster retrieval of search results, and also makes their advanced search feature possible.

Advanced search can be accessed from most pages by selecting the magnifying glass icon, and then the icon for more options next to the search bar.

As can be seen in the screenshot below, there are a plethora of filters that can be applied to your search terms.

You can immediately see how this advanced search could be a very powerful tool for not just finding a particular previous note that you are looking for, but for creating your own custom feed of notes. Well, wouldn't you know it, Primal allows you to do just that! This search feature, paired with the other features mentioned above related to finding notes you want to see in your feed, makes Primal hands-down the best client for content discovery.

The only downside as a free user is that some of these search options are locked behind the premium membership, while others show only a certain number of results before you must be a premium member to see more.

Can My Grandma Use It?

Score: 4.8 / 5

Primal has obviously put a high priority on making their client user-friendly, even for those who have never heard of relays, public/private key cryptography, or Bitcoin. All of that complexity is hidden away. Some of it is available to play around with for the users who care to do so, but it does not at all get in the way of the users who just want to jump in and start posting notes and interacting with other users in a truly open public square.

To begin with, the onboarding experience is incredibly smooth. Tap "Create Account," enter your chosen display name and optional bio information, upload a profile picture, and then choose some topics you are interested in. You are then presented with a preview of your profile, with the ability to add a banner image, if you so choose, and then tap "Create Account Now."

From there you receive confirmation that your account has been created and that your "Nostr key" is available to you in the application settings. No further explanation is given about what this key is for at this point, but the user doesn't really need to know at the moment, either. If they are curious, they will go to the app settings to find out.

At this point, Primal encourages the user to activate Primal Wallet, but also gives the option for the user to do it later.

That's it! The next screen the user sees if they don't opt to set up the wallet is their Home feed with notes listed in chronological order. More impressive, the feed is not empty, because Primal has auto-followed several accounts based on your selected topics.

Now, there has definitely been some legitimate criticism of this practice of following specific accounts based on the topic selection, and I agree. I would much prefer to see Primal follow hashtags based on what was selected, and combine the followed hashtags into a feed titled "My Topics" or something of that nature, and make that the default view when the user finishes onboarding. Following particular users automatically will artificially inflate certain users' exposure, while other users who might be quality follows for that topic aren't seen at all.

The advantage of following particular users over a hashtag, though, is that Primal retains some control over the quality of the posts that new users are exposed to right away. Primal can ensure that new users see people who are actually posting quality photography when they choose it as one of their interests. However, even with that example, I chose photography as one of my interests and while I did get some stunning photography in my Home feed by default based on Primal's chosen follows, I also scrolled through the Photography hashtag for a bit and I really feel like I would have been better served if Primal had simply followed that hashtag rather than a particular set of users.

We've already discussed how simple it is to set up the Primal Wallet. You can see the features section above if you missed it. It is, by far, the most user-friendly way to onboard onto Lightning and get a few sats for zapping, and it is the only one I know of that is built directly into a Nostr client. This means new users will have a frictionless introduction to transacting via Lightning, perhaps without even realizing that's what they are doing.

Discovering new content of interest is incredibly intuitive on Primal, and the only thing that new users may struggle with is getting their own notes seen by others. To assist with this, I would suggest Primal encourage users to make their first post to the introductions hashtag and direct any questions to the AskNostr hashtag as part of the onboarding process. This will get them some immediate interactions from other users, and further encouragement to set up their wallet if they haven't already done so.

How do UI look?

Score: 4.9 / 5

Primal is the most stunningly beautiful Nostr client available, in my honest opinion. Despite some of my hangups about certain functionality, the UI alone makes me want to use it.

It is clean, attractive, and intuitive. Everything I needed was easy to find, and nothing felt busy or cluttered. There are only a few minor UI glitches that I ran into while testing the app. Some of them were mentioned in the section of the review detailing the feed selector feature, but a couple others occurred during onboarding.

First, my profile picture was not centered in the preview when I uploaded it. This appears to be because it was a low quality image. Uploading a higher quality photo did not have this result.

The other UI bug was that some text instructions were cut off, with no way to scroll to see the rest of them. This occurred on a few pages during onboarding, and I expect it was due to the size of my phone screen, since it did not occur when I was on a slightly larger phone or tablet.

Speaking of tablets, Primal Android looks really good on a tablet, too! While the client does not have a landscape mode by default, many Android tablets support forcing apps to open in full-screen landscape mode, with mixed results. However, Primal handles it well. I would still like to see a tablet version developed that takes advantage of the increased screen real estate, but it is certainly a passable option.

At this point, I would say the web client probably has a bit better UI for use on a tablet than the Android client does, but you miss out on using the built-in wallet, which is a major selling point of the app.

This lack of a landscape mode for tablets and the few very minor UI bugs I encountered are the only reason Primal doesn't get a perfect score in this category, because the client is absolutely stunning otherwise, both in light and dark modes. There are also two color schemes available for each.

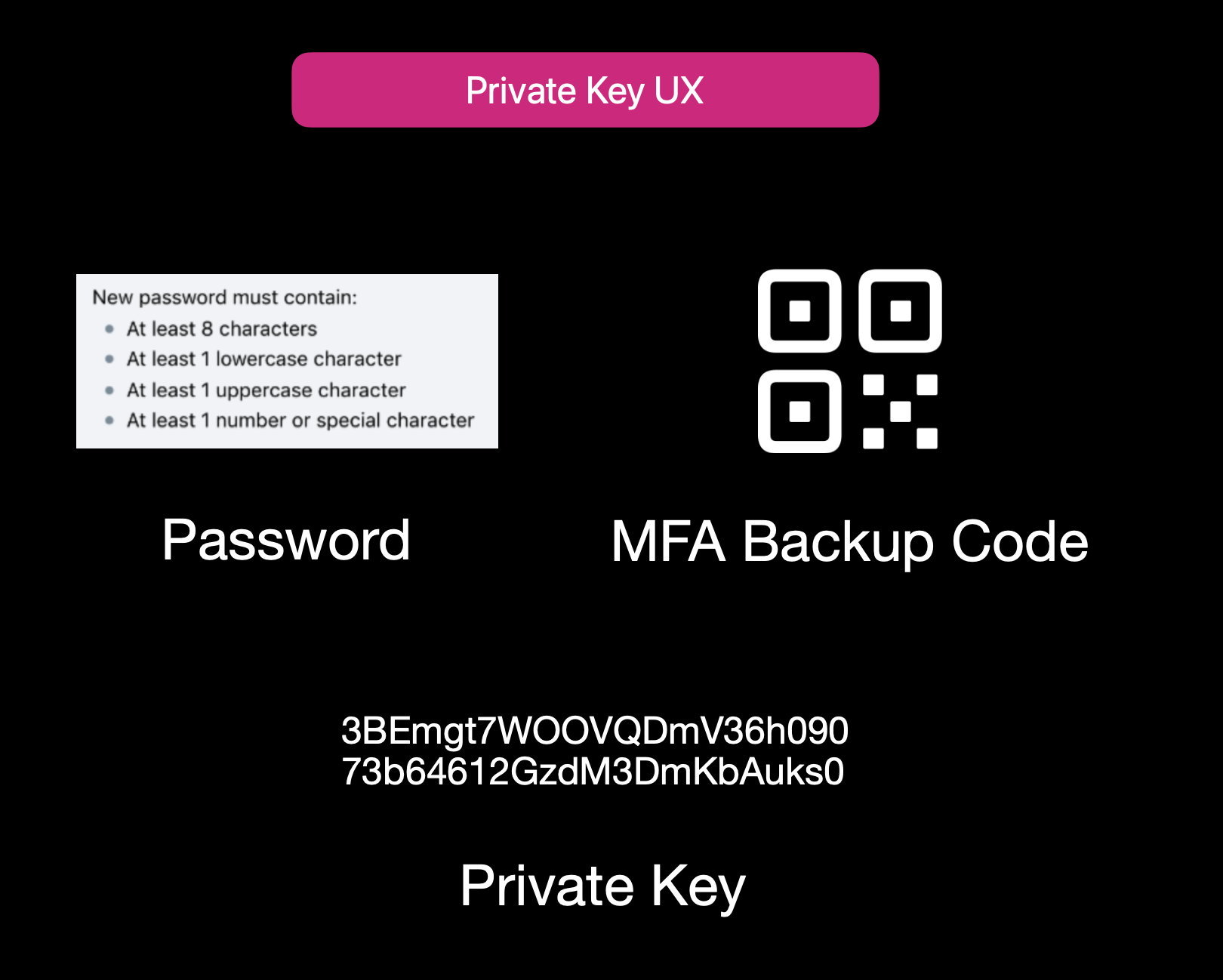

Log In Options

Score: 4 / 5 (Updated 4/30/2025)

Unfortunately, Primal has not included any options for log in outside of pasting your private key into the application. While this is a very simple way to log in for new users to understand, it is also the least secure means to log into Nostr applications.

This is because, even with the most trustworthy client developer, giving the application access to your private key always has the potential for that private key to somehow be exposed or leaked, and on Nostr there is currently no way to rotate to a different private key and keep your identity and social graph. If someone gets your key, they are you on Nostr for all intents and purposes.

This is not a situation that users should be willing to tolerate from production-release clients at this point. There are much better log in standards that can and should be implemented if you care about your users.

That said, I am happy to report that external signer support is on the roadmap for Primal, as confirmed below:

nostr:note1n59tc8k5l2v30jxuzghg7dy2ns76ld0hqnn8tkahyywpwp47ms5qst8ehl

No word yet on whether this will be Android signer or remote signer support, or both.

This lack of external signer support is why I absolutely will not use my main npub with Primal for Android. I am happy to use the web client, which supports and encourages logging in with a browser extension, but until the Android client allows users to protect their private key, I cannot recommend it for existing Nostr users.

Update: As of version 2.2.13, all of what I have said above is now obsolete. Primal has added Android signer support, so users can now better protect their nsec by using Amber!

I would still like to see support for remote signers, especially with nstart.me as a recommended Nostr onboarding process and the advent of FROSTR for key management. That said, Android signer support on its own has been a long time coming and is a very welcome addition to the Primal app. Bravo Primal team!

Zap Integration

Score: 4.8 / 5

As mentioned when discussing Primal's built-in wallet feature, zapping in Primal can be the most seamless experience I have ever seen in a Nostr client. Pairing the wallet with the client is absolutely the path forward for Nostr leading the way to Bitcoin adoption.

But what if you already have a Lightning wallet you want to use for zapping? You have a couple options. If it is an Alby wallet or another wallet that supports Nostr Wallet Connect, you can connect it with Primal to use with one-tap zapping.

How your zapping experience goes with this option will vary greatly based on your particular wallet of choice and is beyond the scope of this review. I used this option with a hosted wallet on my Alby Hub and it worked perfectly. Primal gives you immediate feedback that you have zapped, even though the transaction usually takes a few seconds to process and appear in your wallet's history.
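For the curious, the connection string your wallet hands to Primal is just a URI. Here is a rough sketch of pulling one apart, assuming the usual Nostr Wallet Connect layout (wallet pubkey, then `relay` and `secret` query parameters). The function name is mine and the values are placeholders, not a real connection.

```python
from urllib.parse import parse_qs, urlparse


def parse_nwc_uri(uri: str) -> dict:
    """Split a Nostr Wallet Connect URI into its parts."""
    parsed = urlparse(uri)
    if parsed.scheme != "nostr+walletconnect":
        raise ValueError("not a Nostr Wallet Connect URI")
    params = parse_qs(parsed.query)  # also decodes percent-encoding
    if "relay" not in params or "secret" not in params:
        raise ValueError("URI must carry relay and secret parameters")
    return {
        "wallet_pubkey": parsed.netloc,
        "relays": params["relay"],
        "secret": params["secret"][0],
    }


# Placeholder values only; a real URI comes from your wallet's connection screen.
example = (
    "nostr+walletconnect://" + "ab" * 32
    + "?relay=wss%3A%2F%2Frelay.example.com&secret=" + "cd" * 32
)
connection = parse_nwc_uri(example)
print(connection["relays"])
```

Keep in mind that the `secret` in a real connection string authorizes spending from your wallet, so treat the whole URI like a password.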

The one major downside to using an external wallet is the lack of integration with the wallet interface. This interface currently only works with Primal's wallet, and therefore the most prominent tab in the entire app goes unused when you connect an external wallet.

An ideal improvement would be for the wallet screen to work similarly to Alby Go when you have an external wallet connected via Nostr Wallet Connect, allowing the user to have Primal act as their primary mobile Lightning wallet. It could have balance and transaction history displayed, and allow sending and receiving, just like the integrated Primal wallet, but remove the ability to purchase sats directly through the app when using an external wallet.

Content Discovery

Score: 4.8 / 5

Primal is the best client to use if you want to discover new content you are interested in. There is no comparison, with only a few caveats.

First, the content must have been posted to Nostr as either a short-form or long-form note. Primal has a limited ability to display other types of content. For instance, discovering video content or streaming content is lacking.

Second, you must be willing to put up with the fact that Primal lacks a means of filtering sensitive content when you are exploring beyond the bounds of your current followers. This may not be an issue for some, but for others it could be a deal-breaker.

Third, it would be preferable for Primal to follow topics you are interested in when you choose them during onboarding, rather than follow specific npubs. Ideally, create a "My Topics" feed that can be edited by selecting your interests in the Topics section of the Explore tab.

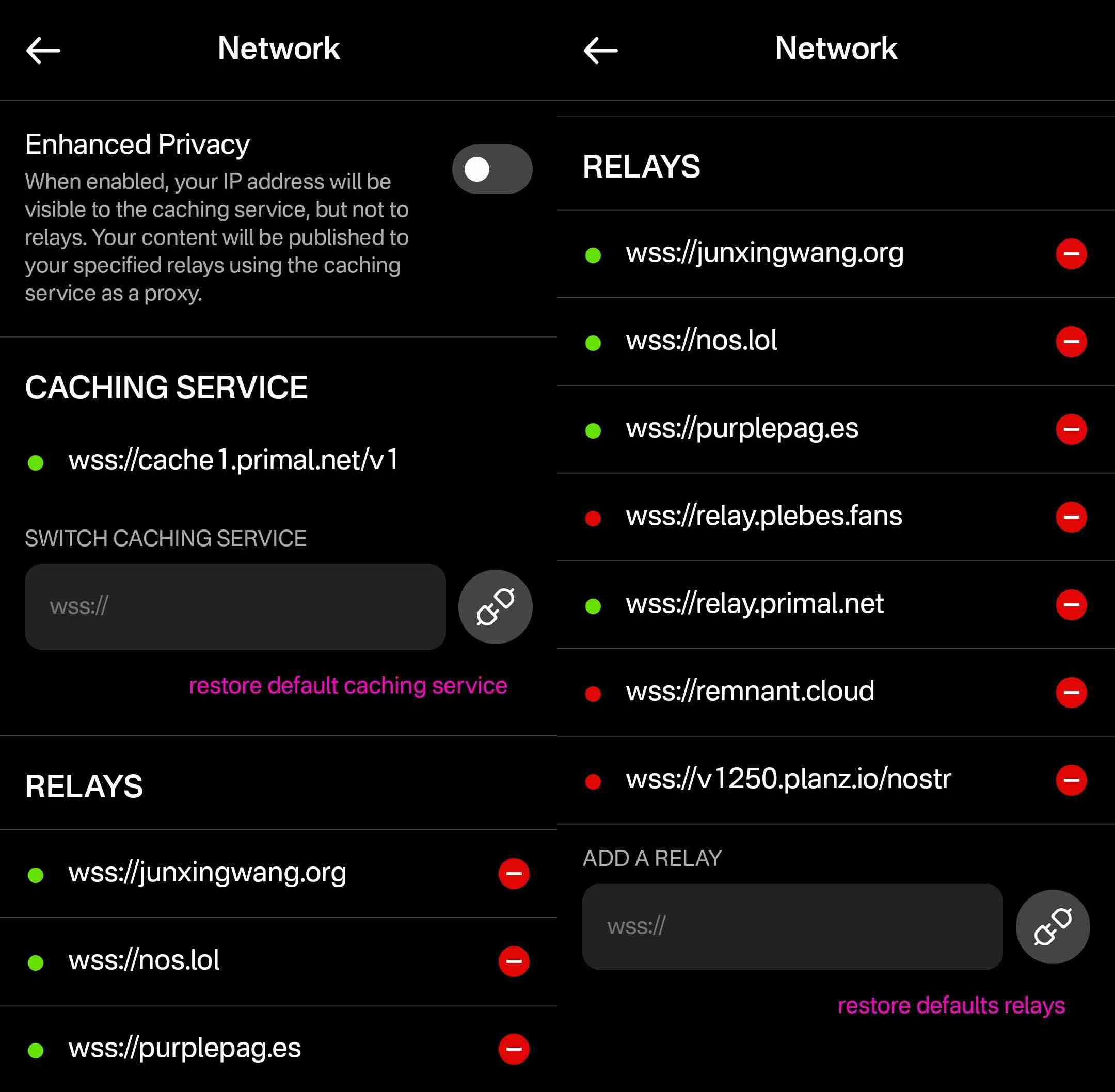

Relay Management

Score: 2.5 / 5

For new users who don't want to mess around with managing relays, Primal is fantastic! There are 7 relays selected by default, in addition to Primal's caching service. Most users who aren't familiar with Nostr's protocol architecture probably won't ever have to change their default relays in order to use the client as they would expect.

However, two of these default relays were consistently unreachable during the week that I tested. These were relay.plebes.fans and remnant.cloud. The first relay seems to be an incorrect URL, as I found nosflare.plebes.fans online and with perfect uptime for the last 12 hours on nostr.watch. I was unable to find remnant.cloud on nostr.watch at all. A third relay was intermittent, sometimes online and reachable, and other times unreachable: v1250.planz.io/nostr. If Primal is going to have default relays, they should ideally be reliable and with accurate URLs.

That said, users can add other relays that they prefer, and remove relays that they no longer want to use. They can even set a different caching service to use with the client, rather than using Primal's.

However, that is the extent of a user's control over their relays. They cannot choose which relays they want to write to and which they want to read from, nor can they set any private relays, outbox or inbox relays, or general relays. Loading the npub I used for this review into another client with full relay management support revealed that the relays selected in Primal are being added to both the user's public outbox relays and public inbox relays, but not to any other relay type, which leads me to believe the caching relay is acting as the client's only general relay and search relay.
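To make the outbox/inbox distinction concrete, here is a small sketch of how a client might read a user's public relay list. It assumes the relay list event carries `r` tags with an optional `read` or `write` marker, where a tag with no marker counts as both; the function name and relay URLs are made up for illustration.

```python
def split_relay_list(tags: list[list[str]]) -> tuple[list[str], list[str]]:
    """Return (outbox, inbox) relay URLs from the "r" tags of a relay list event."""
    outbox, inbox = [], []
    for tag in tags:
        if len(tag) < 2 or tag[0] != "r":
            continue  # ignore anything that is not a relay tag
        url = tag[1]
        marker = tag[2] if len(tag) > 2 else None
        if marker in (None, "write"):
            outbox.append(url)  # relays the user publishes to
        if marker in (None, "read"):
            inbox.append(url)  # relays the user reads mentions from
    return outbox, inbox


# Hypothetical relay list: the first entry has no marker, as Primal appears to write them.
tags = [
    ["r", "wss://relay.example.com"],
    ["r", "wss://outbox.example.com", "write"],
    ["r", "wss://inbox.example.com", "read"],
]
outbox, inbox = split_relay_list(tags)
print("outbox:", outbox)
print("inbox:", inbox)
```

An unmarked entry lands in both lists, which matches what I saw for the relays Primal saved.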

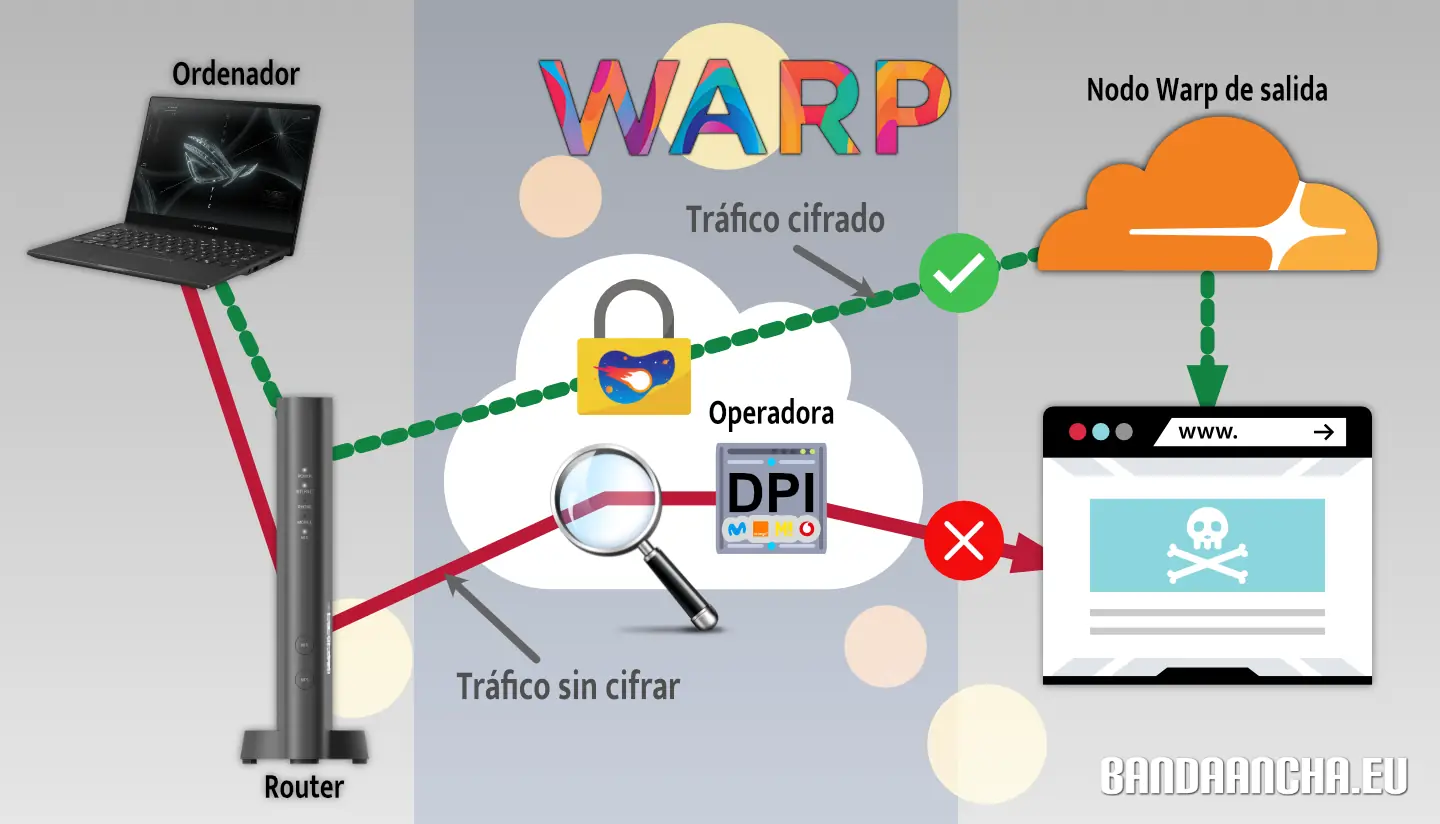

One unique and welcome addition is the "Enhanced Privacy" feature, which is off by default, but which can be toggled on. I am not sure why this is not on by default, though. Perhaps someone from the Primal team can enlighten me on that choice.

By default, when you post to Nostr, all of your outbox relays will see your IP address. If you turn on the Enhanced Privacy mode, only Primal's caching service will see your IP address, because it will post your note to the other relays on your behalf. In this way, the caching service acts similar to a VPN for posting to Nostr, as long as you trust Primal not to log or leak your IP address.

In short, if you use any other Nostr clients at all, do not use Primal for managing your relays.

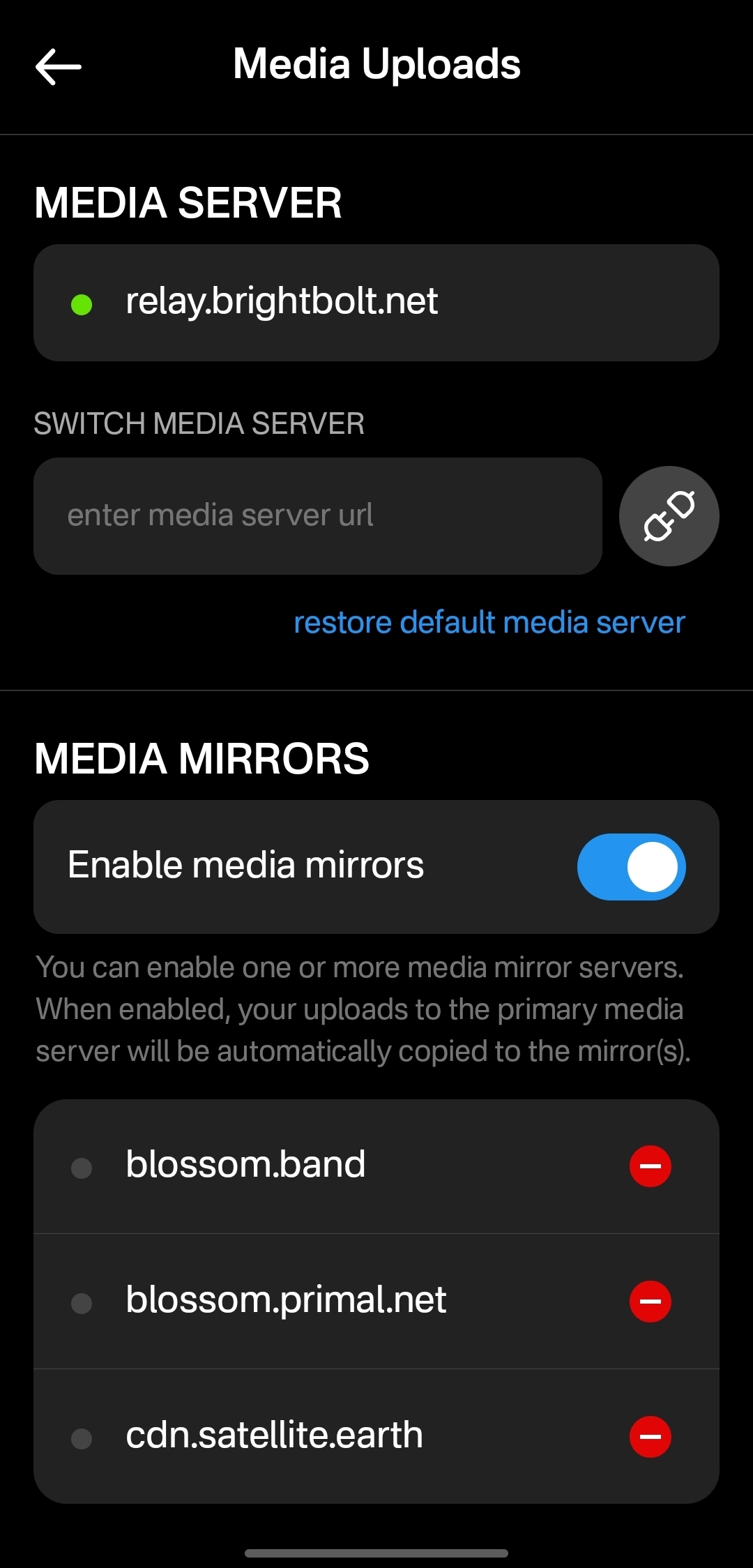

Media Hosting Options

Score: 4.9 / 5

This is a NEW SECTION of this review, as of version 2.2.13!

Primal has recently added support for the Blossom protocol for media hosting, and has added a new section within their settings for "Media Uploads."

Media hosting is one of the more complicated problems to solve for a decentralized publishing protocol like Nostr. Text-based notes are generally quite small, making them no real burden to store on relays, and a relay can prune old notes as they see fit, knowing that anyone who really cared about those notes has likely archived them elsewhere. Media, on the other hand, can very quickly fill up a server's disk space, and because it is usually addressable via a specific URL, removing it from that location to free up space means it will no longer load for anyone.

Blossom solves this issue by making it easy to run a media server and have the same media mirrored to more than one for redundancy. Since the media is stored with a file name that is a hash of the content itself, if the media is deleted from one server, it can still be found from any other server that has the same file, without any need to update the URL in the Nostr note where it was originally posted.
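Here is a minimal sketch of why that works, assuming (as I understand Blossom) that the file name is the SHA-256 hash of the file's bytes. The helper names are mine, and the second server is a made-up placeholder.

```python
import hashlib


def blob_id(data: bytes) -> str:
    """Hex SHA-256 digest of the content, used as the file name."""
    return hashlib.sha256(data).hexdigest()


def candidate_urls(data: bytes, servers: list[str], extension: str = "") -> list[str]:
    """Every place the same blob could be fetched from, given a list of servers."""
    digest = blob_id(data)
    return [f"{server.rstrip('/')}/{digest}{extension}" for server in servers]


# blossom.primal.net is mentioned in this review; the other server is hypothetical.
servers = ["https://blossom.primal.net", "https://blossom.example.com/"]
photo = b"pretend these are the bytes of a photo"

for url in candidate_urls(photo, servers, ".png"):
    print(url)
```

Because the last part of the URL depends only on the file's contents, a client that cannot load the media from one server can look for the same hash on another server without the note ever being edited.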

Prior to this update, Primal only allowed media uploads to their own media server. Now, users can upload to any Blossom server, and even choose to have their pictures or videos mirrored to additional servers automatically. To my knowledge, no other Nostr client offers this automatic mirroring at the time of upload.

One of my biggest criticisms of Primal was that it had taken a siloed approach by providing a client, a caching relay, a media server, and a wallet all controlled by the same company. The whole point of Nostr is to separate control of all these services to different entities. Now users have more options for separating out their media hosting and their wallet to other providers, at least. I would still like to see other options available for a caching relay, but that relies on someone else being willing to run one, since the software is open for anyone to use. It's just not your average, lightweight relay that any average person can run from home.

Regardless, this update to add custom Blossom servers is a most welcome step in the right direction!

Current Users' Questions

The AskNostr hashtag can be a good indication of the pain points that other users are currently having with a client. Here are some of the most common questions submitted about Primal since the launch of 2.0:

nostr:note1dqv4mwqn7lvpaceg9s7damf932ydv9skv2x99l56ufy3f7q8tkdqpxk0rd

This was a pretty common question, because users expect that they will be able to create the same type of content that they can consume in a particular client. I can understand why this was left out in a mobile client, but perhaps it should be added in the web client.

nostr:note16xnm8a2mmrs7t9pqymwjgd384ynpf098gmemzy49p3572vhwx2mqcqw8xe

This is a more concerning bug, since it appears some users are experiencing their images being replaced with completely different images. I did not experience anything similar in my testing, though.

nostr:note1uhrk30nq0e566kx8ac4qpwrdh0vfaav33rfvckyvlzn04tkuqahsx8e7mr

There hasn't been an answer to this, but I have not been able to find a way. It seems search results will always include replies as well as original notes, so a feed made from the search results will as well. Perhaps a filter can be added to the advanced search to exclude replies? There is already a filter to only show replies, but there is no corresponding filter to only show original notes.

nostr:note1zlnzua28a5v76jwuakyrf7hham56kx9me9la3dnt3fvymcyaq6eqjfmtq6

Since both mobile platforms support the wallet, users expect that they will be able to access it in their web client, too. At this time, they cannot. The only way to have seamless zapping in the web client is to use the Alby extension, but there is not a way to connect it to your Primal wallet via Nostr Wallet Connect either. This means users must have a separate wallet for zapping on the web client if they use the Primal Wallet on mobile.

nostr:note15tf2u9pffy58y9lk27y245ew792raqc7lc22jezxvqj7xrak9ztqu45wep

It seems that Primal is filtering for spam even for profiles you actively follow. Moreover, exactly what the criteria are for being considered spam is currently opaque.

nostr:note1xexnzv0vrmc8svvduurydwmu43w7dftyqmjh4ps98zksr39ln2qswkuced

For those unaware, Blossom is a protocol for hosting media as blobs identified by a hash, allowing them to be located on and displayed from other servers they have been mirrored to when the target server isn't available. Primal currently runs a Blossom server (blossom.primal.net) so I would expect we see Blossom support in the future.

nostr:note1unugv7s36e2kxl768ykg0qly7czeplp8qnc207k4pj45rexgqv4sue50y6

Currently, Primal on Android only supports uploading photos to your posts. Users must upload any video to some other hosting service and copy/paste a link to the video into their post on Primal. I would not be surprised to see this feature added in the near future, though.

nostr:note10w6538y58dkd9mdrlkfc8ylhnyqutc56ggdw7gk5y7nsp00rdk4q3qgrex

Many Nostr users have more than one npub for various uses. Users would prefer to have a way to quickly switch between accounts rather than having to log all the way out and paste the key for the other account every time they want to use it.

There is good news on this front, though:

nostr:note17xv632yqfz8nx092lj4sxr7drrqfey6e2373ha00qlq8j8qv6jjs36kxlh

Wrap Up

All in all, Primal is an excellent client. It won't be for everyone, but that's one of the strengths of Nostr as a protocol. You can choose to use the client that best fits your own needs, and supplement with other clients and tools as necessary.

There are a couple of glaring issues I have with Primal that prevent me from using it on my main npub, but it is also an ever-improving client that already has me hopeful for those issues to be resolved in a future release.

So, what should I review next? Another Android client, such as #Amethyst or #Voyage? Maybe an "other stuff" app, like #Wavlake or #Fountain? Please leave your suggestions in the comments.

I hope this review was valuable to you! If it was, please consider letting me know just how valuable by zapping me some sats and reposting it out to your follows.

Thank you for reading!

PV 🤙

-

@ 401014b3:59d5476b

2025-04-30 21:08:52

And here's what it said.

And for what it's worth, I actually think ChatGPT nailed it.

Thoughts?

- Andy Reid – Kansas City Chiefs: Andy Reid remains the gold standard among NFL head coaches. With three Super Bowl titles in the past six seasons and a career record of 273-146-1 (.651), Reid's offensive innovation and leadership continue to set him apart.

- Sean McVay – Los Angeles Rams: Sean McVay has revitalized the Rams since taking over in 2017, leading them to two Super Bowl appearances and maintaining only one losing season in eight years. His ability to adapt and keep the team competitive has solidified his status as one of the league's elite coaches.

- John Harbaugh – Baltimore Ravens: John Harbaugh's tenure with the Ravens has been marked by consistent success, including a Super Bowl victory in 2012 and multiple double-digit win seasons. His leadership and adaptability have kept Baltimore as a perennial contender.

- Nick Sirianni – Philadelphia Eagles: Nick Sirianni has quickly risen through the ranks, boasting a .706 regular-season winning percentage and leading the Eagles to two Super Bowl appearances, including one victory. His emphasis on player morale and adaptability have been key to Philadelphia's recent success.

- Dan Campbell – Detroit Lions: Dan Campbell has transformed the Lions into a formidable team, improving their record each season and instilling a culture of toughness and resilience. Despite a disappointing playoff exit in 2024, Campbell's impact on the franchise is undeniable.

originally posted at https://stacker.news/items/967880

-

-

@ fd0bcf8c:521f98c0

2025-04-29 13:38:49

"The vag' sits on the edge of the highway, broken, hungry. Overhead flies a transcontinental plane filled with highly paid executives. The upper class has taken to the air, the lower class to the roads: there is no longer any bond between them, they are two nations." - The Sovereign Individual

Fire

I was talking to a friend last night. Coffee in hand. Watching flames consume branches. Spring night on his porch.

He believed in America's happy ending. Debt would vanish. Inflation would cool. Manufacturing would return. Good guys win.

I nodded. I wanted to believe.

He leaned forward, toward the flame. I sat back, watching both fire and sky.

His military photos hung inside. Service medals displayed. Patriotism bone-deep.

The pendulum clock on his porch wall swung steadily. Tick. Tock. Measuring moments. Marking epochs.

History tells another story. Not tragic. Just true.

Our time has come. America cut off couldn't compete. Factories sit empty. Supply chains span oceans. Skills lack. Children lag behind. Rebuilding takes decades.

Truth hurts. Truth frees.

Cycles

History moves in waves. Every 500 years, power shifts. Systems fall. Systems rise.

500 BC - Greek coins changed everything. Markets flourished. Athens dominated.

1 AD - Rome ruled commerce. One currency. Endless roads. Bustling ports.

500 AD - Rome faded. Not overnight. Slowly. Trade withered. Cities emptied. Money debased. Roads crumbled. Local strongmen rose. Peasants sought protection. Feudalism emerged.

People still lived. Still worked. Horizons narrowed. Knowledge concentrated. Most barely survived. Rich adapted. Poor suffered.

Self-reliance determined survival. Those growing food endured. Those making essential goods continued. Those dependent on imperial systems suffered most.

1000 AD - Medieval revival began. Venice dominated seas. China printed money. Cathedrals rose. Universities formed.

1500 AD - Europeans sailed everywhere. Spanish silver flowed. Banks financed kingdoms. Companies colonized continents. Power moved west.

The pendulum swung. East to West. West to East. Civilizations rose. Civilizations fell.

2000 AD - Pattern repeats. America strains. Digital networks expand. China rises. Debt swells. Old systems break.

We stand at the hinge.

Warnings

Signs everywhere. Dollar weakens globally. BRICS builds alternatives. Yuan buys oil. Factories rust. Debt exceeds GDP. Interest consumes budgets.

Bridges crumble. Education falters. Politicians chase votes. We consume. We borrow.

Rome fell gradually. Citizens barely noticed. Taxes increased. Currency devalued. Military weakened. Services decayed. Life hardened by degrees.

East Rome adapted. Survived centuries. West fragmented. Trade shrank. Some thrived. Others suffered. Life changed permanently.

Those who could feed themselves survived best. Those who needed the system suffered worst.

Pendulum

My friend poured another coffee. The burn pile popped loudly. Sparks flew upward like dying stars.

His face changed as facts accumulated. Military man. Trained to assess threats. Detect weaknesses.

He stared at the fire. National glory reduced to embers. Something shifted in his expression. Recognition.

His fingers tightened around his mug. Knuckles white. Eyes fixed on dying flames.

I traced the horizon instead. Observing landscape. Noting the contrast.

He touched the flag on his t-shirt. I adjusted my plain gray one.

The unpayable debt. The crumbling infrastructure. The forgotten manufacturing. The dependent supply chains. The devaluing currency.

The pendulum clock ticked. Relentless. Indifferent to empires.

His eyes said what his patriotism couldn't voice. Something fundamental breaking.

I'd seen this coming. Years traveling showed me. Different systems. Different values. American exceptionalism viewed from outside.

Pragmatism replaced my old idealism. See things as they are. Not as wished.

The logs shifted. Flames reached higher. Then lower. The cycle of fire.

Divergence

Society always splits during shifts.

Some adapt. Some don't.

Printing arrived. Scribes starved. Publishers thrived. Information accelerated. Readers multiplied. Ideas spread. Adapters prospered.

Steam engines came. Weavers died. Factory owners flourished. Villages emptied. Cities grew. Coal replaced farms. Railways replaced wagons. New skills meant survival.

Computers transformed everything. Typewriters vanished. Software boomed. Data replaced paper. Networks replaced cabinets. Programmers replaced typists. Digital skills determined success.

The self-reliant thrived in each transition. Those waiting for rescue fell behind.

Now AI reshapes creativity. Some artists resist. Some harness it. Gap widens daily.

Bitcoin offers refuge. Critics mock. Adopters build wealth. The distance grows.

Remote work redraws maps. Office-bound struggle. Location-free flourish.

The pendulum swings. Power shifts. Some rise with it. Some fall against it.

Two societies emerge. Adaptive. Resistant. Prepared. Pretending.

Advantage

Early adapters win. Not through genius. Through action.

First printers built empires. First factories created dynasties. First websites became giants.

Bitcoin followed this pattern. Laptop miners became millionaires. Early buyers became legends.

Critics repeat themselves: "Too volatile." "No value." "Government ban coming."

Doubters doubt. Builders build. Gap widens.

Self-reliance accelerates adaptation. No permission needed. No consensus required. Act. Learn. Build.

The burn pile flames like empire's glory. Bright. Consuming. Temporary.

Blindness

Our brains see tigers. Not economic shifts.

We panic at headlines. We ignore decades-long trends.

We notice market drops. We miss debt cycles.

We debate tweets. We ignore revolutions.

Not weakness. Just humanity. Foresight requires work. Study. Thought.

Self-reliant thinking means seeing clearly. No comforting lies. No pleasing narratives. Just reality.

The clock pendulum swings. Time passes regardless of observation.

Action

Empires fall. Families need security. Children need futures. Lives need meaning.

You can adapt faster than nations.

Assess honestly. What skills matter now? What preserves wealth? Who helps when needed?

Never stop learning. Factory workers learned code. Taxi drivers joined apps. Photographers went digital.

Diversify globally. No country owns tomorrow. Learn languages. Make connections. Stay mobile.

Protect your money. Dying empires debase currencies. Romans kept gold. Bitcoin offers similar shelter.

Build resilience. Grow food. Make energy. Stay strong. Keep friends. Read old books. Some things never change.

Self-reliance matters most. Can you feed yourself? Can you fix things? Can you solve problems? Can you create value without systems?

Movement

Humans were nomads first. Settlers second. Movement in our blood.

Our ancestors followed herds. Sought better lands. Survival meant mobility.

The pendulum swings here too. Nomad to farmer. City-dweller to digital nomad.

Rome fixed people to land. Feudalism bound serfs to soil. Nations created borders. Companies demanded presence.

Now technology breaks chains. Work happens anywhere. Knowledge flows everywhere.

The rebuild America seeks requires fixed positions. Factory workers. Taxpaying citizens in permanent homes.

But technology enables escape. Remote work. Digital currencies. Borderless businesses.

The self-reliant understand mobility as freedom. One location means one set of rules. One economy. One fate.

Many locations mean options. Taxes become predatory? Leave. Opportunities disappear? Find new ones.

Patriotism celebrates roots. Wisdom remembers wings.

My friend's boots dug into his soil. Planted. Territorial. Defending.

My Chucks rested lightly. Ready. Adaptable. Departing.

His toolshed held equipment to maintain boundaries. Fences. Hedges. Property lines.

My backpack contained tools for crossing them. Chargers. Adapters. Currency.

The burn pile flame flickers. Fixed in place. The spark flies free. Movement its nature.

During Rome's decline, the mobile survived best. Merchants crossing borders. Scholars seeking patrons. Those tied to crumbling systems suffered most.

Location independence means personal resilience. Economic downturns become geographic choices. Political oppression becomes optional suffering.

Technology shrinks distance. Digital work. Video relationships. Online learning.

Self-sovereignty requires mobility. The option to walk away. The freedom to arrive elsewhere.

Two more worlds diverge. The rooted. The mobile. The fixed. The fluid. The loyal. The free.

Hope

Not decline. Transition. Painful but temporary.

America may weaken. Humanity advances. Technology multiplies possibilities. Poverty falls. Knowledge grows.

Falling empires see doom. Rising ones see opportunity. Both miss half the picture.

Every shift brings destruction and creation. Rome fell. Europe struggled. Farms produced less. Cities shrank. Trade broke down.

Yet innovation continued. Water mills appeared. New plows emerged. Monks preserved books. New systems evolved.

Different doesn't mean worse for everyone.

Some industries die. Others birth. Some regions fade. Others bloom. Some skills become useless. Others become gold.

The self-reliant thrive in any world. They adapt. They build. They serve. They create.

Choose your role. Nostalgia or building.

The pendulum swings. East rises again. The cycle continues.

Fading

The burn pile dimmed. Embers fading. Night air cooling.

My friend's shoulders changed. Tension releasing. Something accepted.

His patriotism remained. His illusions departed.

The pendulum clock ticked steadily. Measuring more than minutes. Measuring eras.

Two coffee cups. His: military-themed, old and chipped but cherished. Mine: plain porcelain, new and unmarked.

His eyes remained on smoldering embers. Mine moved between him and the darkening trees.

His calendar marked local town meetings. Mine tracked travel dates.

The last flame flickered out. Spring peepers filled the silence.

In darkness, we watched smoke rise. The world changing. New choices ahead.

No empire lasts forever. No comfort in denial. Only clarity in acceptance.

Self-reliance the ancient answer. Build your skills. Secure your resources. Strengthen your body. Feed your mind. Help your neighbors.

The burn pile turned to ash. Empire's glory extinguished.

He stood facing his land. I faced the road.

A nod between us. Respect across division. Different strategies for the same storm.

He turned toward his home. I toward my vehicle.

The pendulum continued swinging. Power flowing east once more. Five centuries ending. Five centuries beginning.

"Bear in mind that everything that exists is already fraying at the edges." — Marcus Aurelius

Tomorrow depends not on nations. On us.

-

@ 91bea5cd:1df4451c

2025-04-26 10:16:21

The Brazilian Legal Context and Consent

In Brazilian law, the victim's consent can, in certain circumstances, remove the unlawfulness of an act that would otherwise constitute a crime (such as minor bodily injury, defined in Art. 129 of the Penal Code). Consent has clear limits, however: it is not valid for inalienable legal interests such as life, and its effectiveness is questionable in cases of serious or very serious bodily injury.

Consensual BDSM falls in a complex zone. In theory, if both partners are adults with legal capacity who freely and knowingly consented to the acts, and those acts result in neither serious permanent injury nor an unconsented risk of death, no crime has occurred. The challenge lies in proving that consent, especially if one of the parties later denies it or alleges coercion.

The Maria da Penha Law (Lei nº 11.340/2006)

The Maria da Penha Law is a landmark in protecting women against domestic and family violence. It establishes mechanisms to curb and prevent such violence, defines its forms (physical, psychological, sexual, patrimonial and moral), and provides for urgent protective measures.

Essential as it is, applying the law in BDSM contexts can be delicate. An allegation of violence made by the woman, even when the injuries or situations stem from consensual practices, tends to receive priority attention from the authorities, given the presumption of vulnerability the law establishes. This can leave the male partner facing significant difficulty in demonstrating the consensual nature of the acts, especially without robust evidence prepared in advance.

Other risks:

- Serious or very serious bodily injury (Art. 129, §§ 1 and 2, of the Penal Code) cannot be justified by consent and may lead to criminal prosecution.

- Crimes against sexual dignity (Arts. 213 et seq. of the Penal Code) are subject to unconditional public prosecution: investigation and indictment do not depend on a complaint from the victim.

Risks of False Accusations and Later Claims of Coercion

The risks for BDSM practitioners, especially for the partner who takes the dominant role or who inflicts pain or restraint (often, but not exclusively, the man), can arise on several fronts:

- External Accusations: Neighbors, relatives or friends unaware of the consensual nature of the relationship may interpret sounds, marks or behavior as signs of abuse and report them to the authorities.

- Later Allegations by the Partner: After a turbulent breakup, or out of revenge, regret or a change of perspective, the partner may reinterpret past practices as abuse and seek redress or retaliation by filing a report. The allegation may be that consent never existed or that it was vitiated.

- Allegation of Coercion: One of the hardest allegations to refute is that consent was obtained through coercion (physical, moral, psychological or economic). The partner may claim, for example, that she felt pressured, intimidated or dependent, and that her "yes" was not genuine. Proving the absence of coercion after the fact is extremely difficult.

- Male Naivety and Vulnerability: Many men, trusting the consensual dynamic and their partner, neglect to take precautions. The belief that "this would never happen to me", or a lack of knowledge about the legal implications and the procedural weight of an accusation under the Maria da Penha Law, can leave them vulnerable. Physical marks, even consented ones, can be used as evidence of assault, shifting the burden of proof in practice, though not in legal theory.

Prevention and Mitigation Strategies

No method is infallible against a false accusation, but several measures can help build a record of consent and reduce vulnerability:

- Explicit and Continuous Communication: The foundation of any safe BDSM practice is constant communication. Negotiating limits, desires, safewords and expectations before, during and after scenes is crucial. Keeping records of these negotiations (e-mails, messages, shared journals) can be useful.

- Documenting Consent:

  - Relationship/Scene Contracts: Although the legal validity of "BDSM contracts" is debatable in Brazil (they cannot override rules of public order), they serve as strong evidence of the parties' intent, of the detailed negotiation of limits, and of informed consent. They should be clear, dated, signed and, ideally, notarized (as proof of the date and of the authenticity of the signatures).

  - Audiovisual Records: Recording discussions about consent and limits before scenes (with explicit consent to the recording) can be powerful evidence. Recording the scenes themselves is more complicated because of privacy concerns and potential misuse, but can be considered in specific cases, always with documented mutual consent to the recording. Important: the other party must know about the recording, so that it does not constitute a violation of privacy (Art. 5, X, of the Federal Constitution and Art. 20 of the Civil Code).

  - Witnesses: In some BDSM community settings, the presence of trusted third parties during negotiations, or even during scenes, can serve as testimony, although this may change the couple's intimate dynamic.

- Clear Limits and Safewords: Defining and strictly respecting limits (what is allowed, what is forbidden) and safewords is fundamental. Ignoring a safeword ends consent for that act.

- Ongoing Assessment of Consent: Consent is not a blank check; it must be enthusiastic, continuous and revocable at any time. Checking on the partner's well-being during the scene ("check-ins") is essential.

- Discretion and Care with Physical Evidence: Being discreet about the nature of the relationship can prevent outside misunderstandings. After scenes that leave marks, it is prudent for both partners to be aware and in agreement, perhaps documenting the marks with dated photos and a note on the consensual nature of the practice that caused them.

- Preventive Legal Advice: Consulting a lawyer who specializes in family and criminal law, and who is sensitive to alternative relationship dynamics, can provide personalized guidance on the best ways to document consent and on the specific legal risks.

Important Notes

- No documentation replaces the need for real, free, informed and continuous consent.

- Brazilian law protects "physical integrity" and "human dignity". Practices that result in serious injury, or that violate dignity without consent (or with vitiated consent), will be unlawful regardless of any prior agreement.

- If an accusation is made, robust documentation of consent does not guarantee acquittal, but it significantly strengthens the defense by helping demonstrate the consensual nature of the relationship and the practices.

- A later claim of coercion is particularly hard to prevent with documents alone. A consistent history of open communication (WhatsApp, Telegram, e-mail), mutual respect and the absence of dependence or excessive control in the relationship can help show that the dynamic was not coercive.

- Caution with Visible Marks and Serious Injuries: Practices that result in severe bruising or injury can be interpreted as assault even when consented to. Avoiding excess protects physical integrity and also heads off future legal questions.

What Is Vitiated Consent?

In law, vitiated consent is when a person agrees to something but their will is not free or complete; that is, the consent exists formally but is defective for some reason.

The Brazilian Civil Code (Arts. 138 to 165) defines several defects of consent. The main ones are:

- Error: The person is mistaken about what they are consenting to. (Example: the person believes they will take part in light play but is in fact exposed to heavy practices.)

- Fraud (dolo): The person is deliberately deceived into accepting something. (Example: someone lies about what will happen during the practice.)

- Coercion: The person is forced or threatened into consenting. (Example: "If you don't accept, I'll break up with you"; strong emotional pressure can be seen as coercion.)

- State of danger or lesion (lesão): The person accepts something in a situation of extreme need or through abuse of their vulnerability. (Example: someone in a very fragile emotional state is induced to accept practices they would normally refuse.)

In the BDSM context this is even more delicate: even if the person "signed" a contract or said "yes", if they later allege that the consent was given under fear, deception or psychological pressure, the consent may be deemed vitiated and therefore legally invalid.

This has two serious implications:

- The crime is not negated: If there is a defect, the consent is disregarded and the practice can be treated as an ordinary crime (bodily injury, rape, torture, etc.).

- Proof of consent must be solid: It has to show that the person was informed, lucid, free and under no form of coercion.

In short, vitiated consent is when a person formally agrees, but does so deceived, forced or pressured, which renders the consent useless for legal purposes.

Conclusion