@ miljan

2023-08-31 13:47:34

As Nostr continues to grow, we need to consider the reality of content moderation on such a radically open network at scale. Anyone is able to post anything to Nostr. Anyone is able to build anything on Nostr, including bots that cost next to nothing to run. There is no central arbiter of truth. So how do we navigate all of this? How do we find signal and avoid the stuff we don’t want to see? But most importantly, how do we avoid the nightmare of centralized content moderation and censorship that plagues legacy social media?

Motivated by feedback from our users, we at Primal built a new content moderation system for Nostr. We now offer moderation tools based on the wisdom of the crowd, combined with Primal’s open source spam detection algorithm. Every part of our content filtering system is now optional. We provide perfect transparency into what is being filtered and give the user complete control. Read on to learn how our system works.

Wisdom of the Crowd

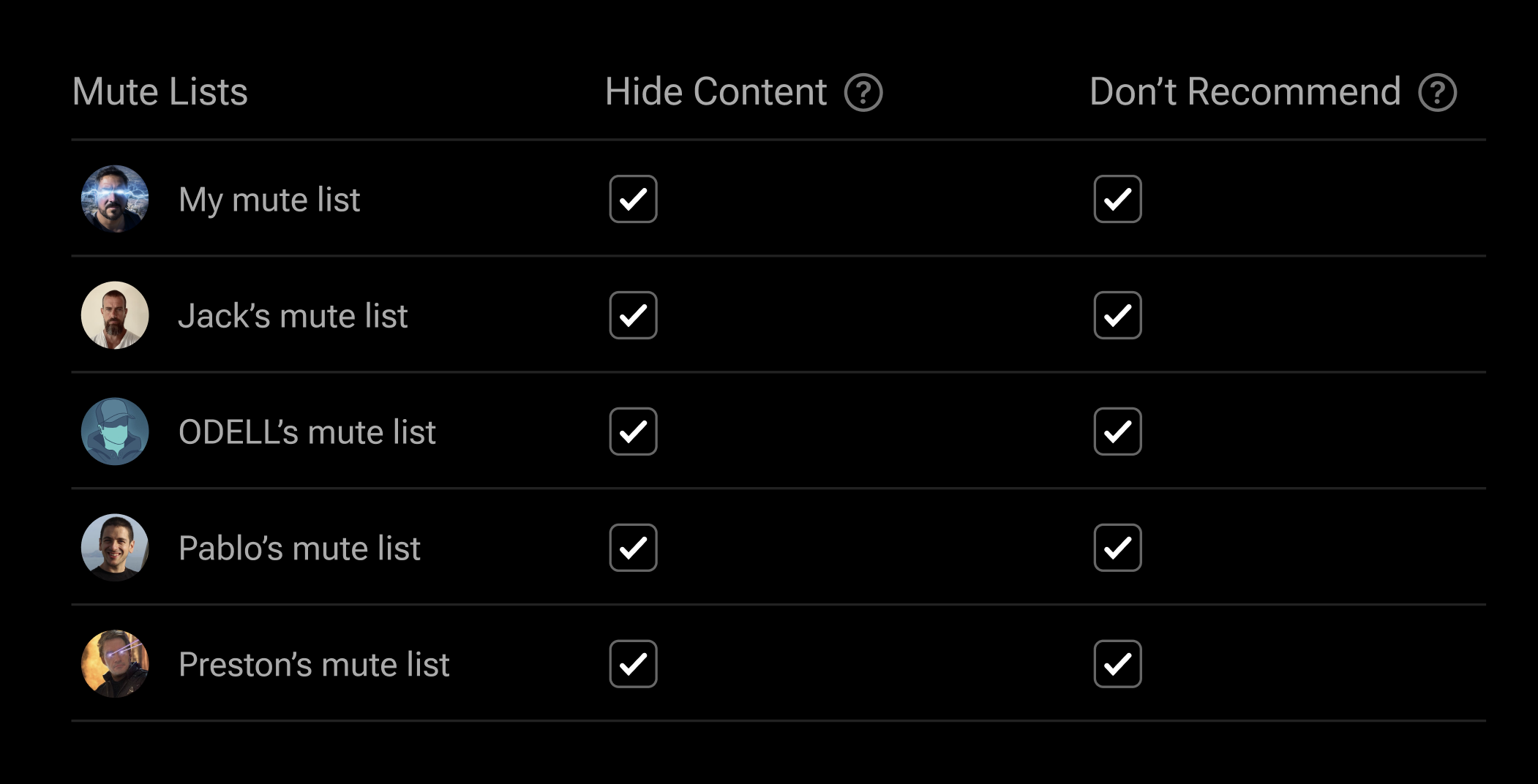

Every Nostr user is able to define their personal mute list: accounts whose content they don’t wish to see. This simple tool is effective but laborious, because the user needs to mute each unwanted account individually. However, everyone’s mute list is public on Nostr, so we can leverage the work of other users. Primal’s content moderation system lets you subscribe to any number of mute lists: select a group of people you respect and rely on their collective judgment to hide irrelevant or offensive content.
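To make the idea concrete, here is a minimal Python sketch of combining several subscribed mute lists; it is an illustration, not Primal’s implementation. It assumes mute lists are NIP-51 kind 10000 events whose public "p" tags carry the muted pubkeys, and that the events have already been fetched from relays. The function names are hypothetical. Because kind 10000 is a replaceable event, only each author’s newest list should count.

```python
from typing import Iterable

MUTE_LIST_KIND = 10000  # NIP-51 mute list (replaceable: newest event per author wins)


def latest_mute_lists(events: Iterable[dict]) -> dict[str, dict]:
    """Keep only the newest kind-10000 event per author, since older ones are replaced."""
    latest: dict[str, dict] = {}
    for event in events:
        if event.get("kind") != MUTE_LIST_KIND:
            continue
        current = latest.get(event["pubkey"])
        if current is None or event["created_at"] > current["created_at"]:
            latest[event["pubkey"]] = event
    return latest


def combined_mute_set(events: Iterable[dict], subscribed: set[str]) -> set[str]:
    """Union of the "p"-tagged pubkeys from the mute lists of the subscribed accounts."""
    combined: set[str] = set()
    for author, event in latest_mute_lists(events).items():
        if author not in subscribed:
            continue  # ignore lists from accounts the user did not pick
        combined.update(
            tag[1] for tag in event.get("tags", []) if len(tag) >= 2 and tag[0] == "p"
        )
    return combined


# Placeholder names stand in for real hex pubkeys.
events = [
    {"kind": 10000, "pubkey": "alice", "created_at": 1, "tags": [["p", "spammer1"]]},
    {"kind": 10000, "pubkey": "alice", "created_at": 2, "tags": [["p", "spammer2"]]},
    {"kind": 10000, "pubkey": "bob", "created_at": 1, "tags": [["p", "troll"]]},
    {"kind": 10000, "pubkey": "mallory", "created_at": 1, "tags": [["p", "innocent"]]},
]
print(sorted(combined_mute_set(events, {"alice", "bob"})))  # ['spammer2', 'troll']
```

Alice’s newer list replaces her older one, and Mallory’s list is ignored because the user did not subscribe to it. Encrypted (private) mute entries and non-"p" tags such as muted words are left out of this sketch.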

Outsourcing the moderation work to a group of people you personally selected is much better than having to do all the work yourself, but unfortunately it won’t always be sufficient. Spam bots are capable of creating millions of Nostr accounts that can infiltrate your threads faster than your network of humans is able to mute them. This is why we built a spam detection algorithm, which Primal users can subscribe to.

There Will Be Algorithms

Nostr users have a natural aversion to algorithms. Many of us are here in part because we can’t stand the manipulated feeds of legacy social media. One of the most insidious forms of manipulation is shadowbanning: secretly reducing an account’s visibility across the entire site, so that its posts are hidden from its followers and its replies don’t show up in conversation threads. It is understandable that many Nostr users are suspicious enough of algorithms that they don’t want any in their feeds. Those users will enjoy Primal’s default “Latest” feed, which simply shows notes from the accounts they follow in chronological order. We even support viewing another user’s “Latest” feed, which lets you consume Nostr content from that person’s point of view, without any algorithmic intervention.
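A chronological feed needs no ranking at all. As a minimal sketch, assuming the NIP-02 follow list (kind 3, one "p" tag per followed pubkey) and NIP-01 text notes (kind 1), the helpers below build a relay REQ message for the followed authors and order the returned notes newest first. Passing in someone else’s follow list yields that person’s point of view. This shows how a client could assemble such a feed directly from relays; Primal’s own service may build it differently, and the helper names are hypothetical.

```python
import json

CONTACT_LIST_KIND = 3  # NIP-02 follow list
TEXT_NOTE_KIND = 1     # NIP-01 short text note


def follows_from_contact_list(contact_list: dict) -> list[str]:
    """Pubkeys listed in the "p" tags of a kind-3 follow list."""
    return [tag[1] for tag in contact_list.get("tags", []) if len(tag) >= 2 and tag[0] == "p"]


def latest_feed_request(contact_list: dict, subscription_id: str, limit: int = 50) -> str:
    """NIP-01 REQ message asking relays for recent notes by the followed accounts."""
    nostr_filter = {
        "kinds": [TEXT_NOTE_KIND],
        "authors": follows_from_contact_list(contact_list),
        "limit": limit,
    }
    return json.dumps(["REQ", subscription_id, nostr_filter])


def latest_feed(notes: list[dict], contact_list: dict) -> list[dict]:
    """Chronological feed: notes by followed accounts, newest first, no reweighting."""
    follows = set(follows_from_contact_list(contact_list))
    feed = [n for n in notes if n.get("kind") == TEXT_NOTE_KIND and n.get("pubkey") in follows]
    return sorted(feed, key=lambda n: n["created_at"], reverse=True)


# Placeholder pubkeys; carol is not followed, so her note never appears.
contacts = {"kind": CONTACT_LIST_KIND, "tags": [["p", "alice"], ["p", "bob"]]}
notes = [
    {"kind": 1, "pubkey": "alice", "created_at": 10, "content": "from alice"},
    {"kind": 1, "pubkey": "carol", "created_at": 30, "content": "from carol"},
    {"kind": 1, "pubkey": "bob", "created_at": 20, "content": "from bob"},
]
print([n["content"] for n in latest_feed(notes, contacts)])  # ['from bob', 'from alice']
```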

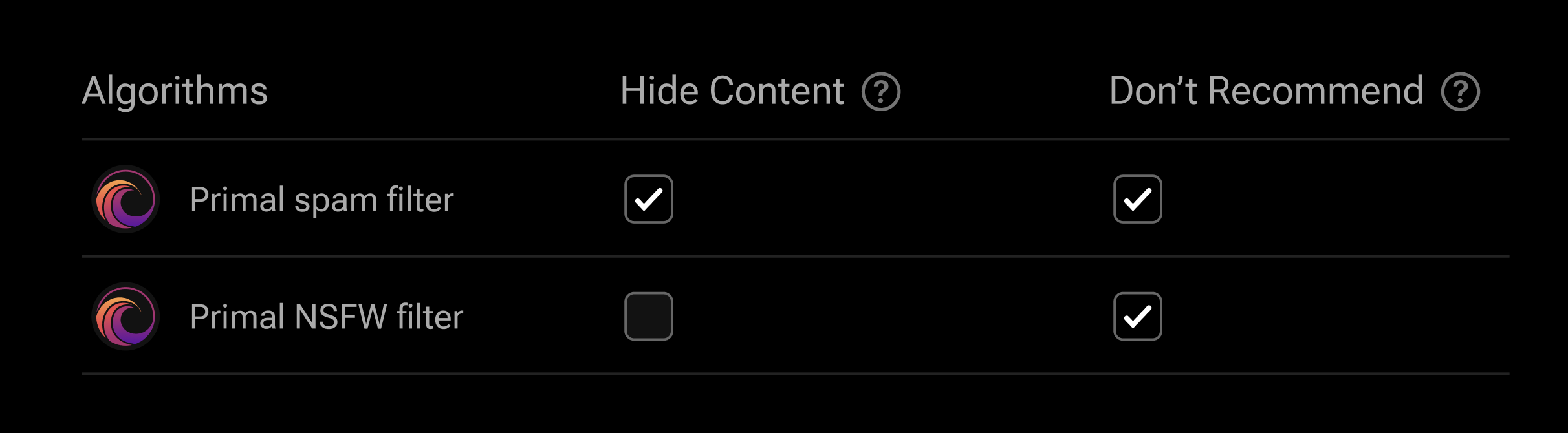

But algorithms are just tools, and obviously not all algorithms are bad. For example, we can use them to detect spam or not-safe-for-work content.

In our view, algos will play an important role in Nostr content moderation and discovery. We envision a future in which there is an open marketplace for algorithms, giving a range of choices to the user. At this early stage of Nostr development, it is important to consider the guiding principles for responsible and ethical use of algorithms. At Primal, we are thinking about it as follows:

- All algorithms should be optional;

- The user should be able to choose which algorithm will be used;

- Algorithms should be open sourced and verifiable by anyone independently;

- The output of each algorithm should be perfectly transparent to the user.

Perfect Transparency

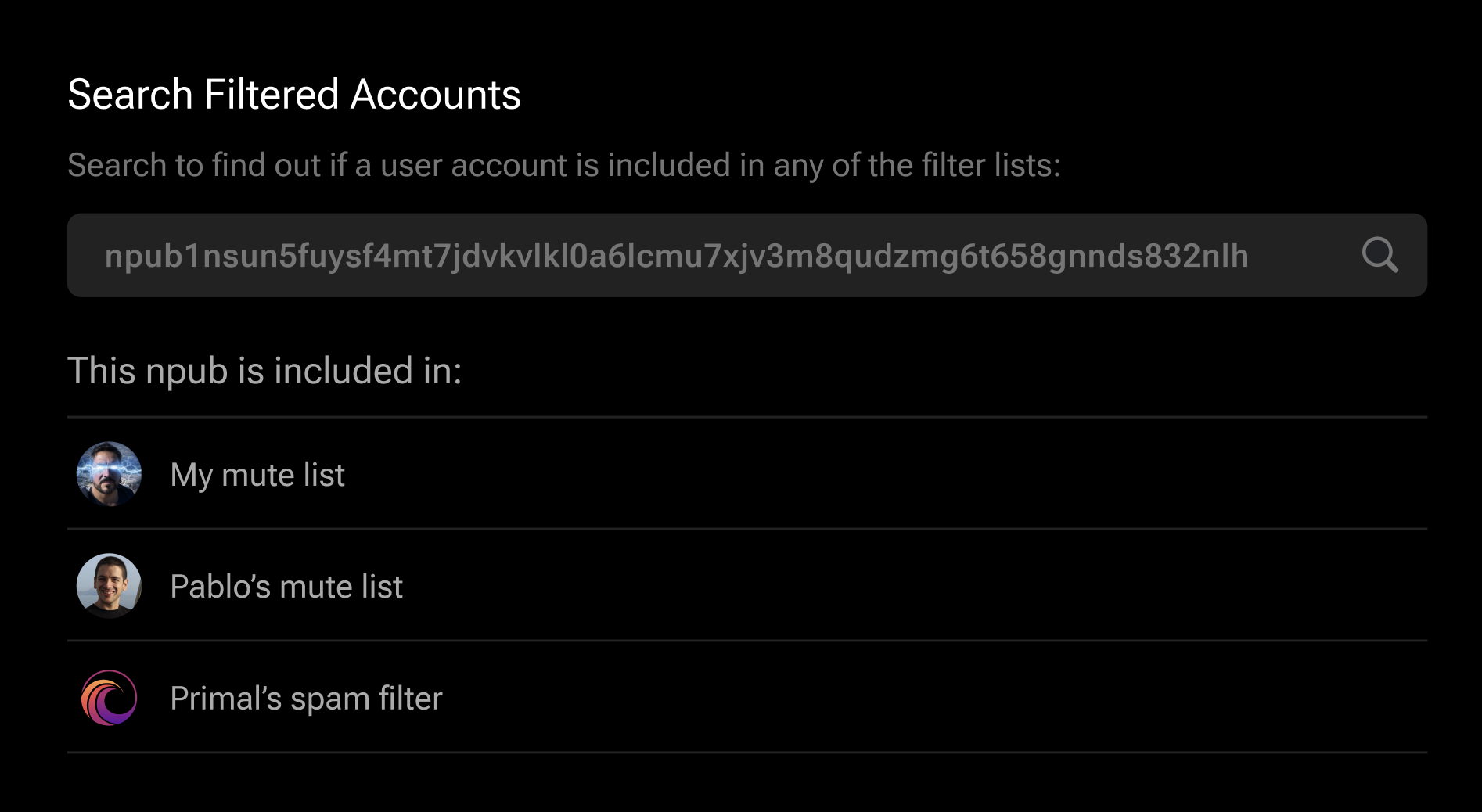

Our new content moderation system enables users to easily check whether a Nostr account is included on any of the filter lists they subscribe to.

This simple search provides complete transparency regarding the inclusion of a given account in any of the active filter lists. Beyond that, it would be helpful to provide all filter lists in their entirety. Note that this is not trivial to do because these lists can get quite large. Our goal is to provide them in the most efficient way possible. We are working on exposing them via Nostr Data Vending Machines; stay tuned for more information on this in the coming days.
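A lookup like this can be sketched as a function that names every subscribed list containing an account, rather than returning a bare yes or no, so the user sees exactly why something was hidden. The list names and the in-memory `dict` of sets are assumptions for illustration, not Primal’s data model.

```python
def lists_containing(pubkey: str, filter_lists: dict[str, set[str]]) -> list[str]:
    """Names of every active filter list that includes `pubkey`, sorted for stable display."""
    return sorted(name for name, members in filter_lists.items() if pubkey in members)


# Hypothetical subscriptions: two friends' mute lists plus a spam-detection list.
filter_lists = {
    "mute list: alice": {"spammer2"},
    "mute list: bob": {"troll", "spammer2"},
    "spam detection": {"spammer2", "bot42"},
}
print(lists_containing("spammer2", filter_lists))
# ['mute list: alice', 'mute list: bob', 'spam detection']
print(lists_containing("newcomer", filter_lists))  # []
```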

Complete Control

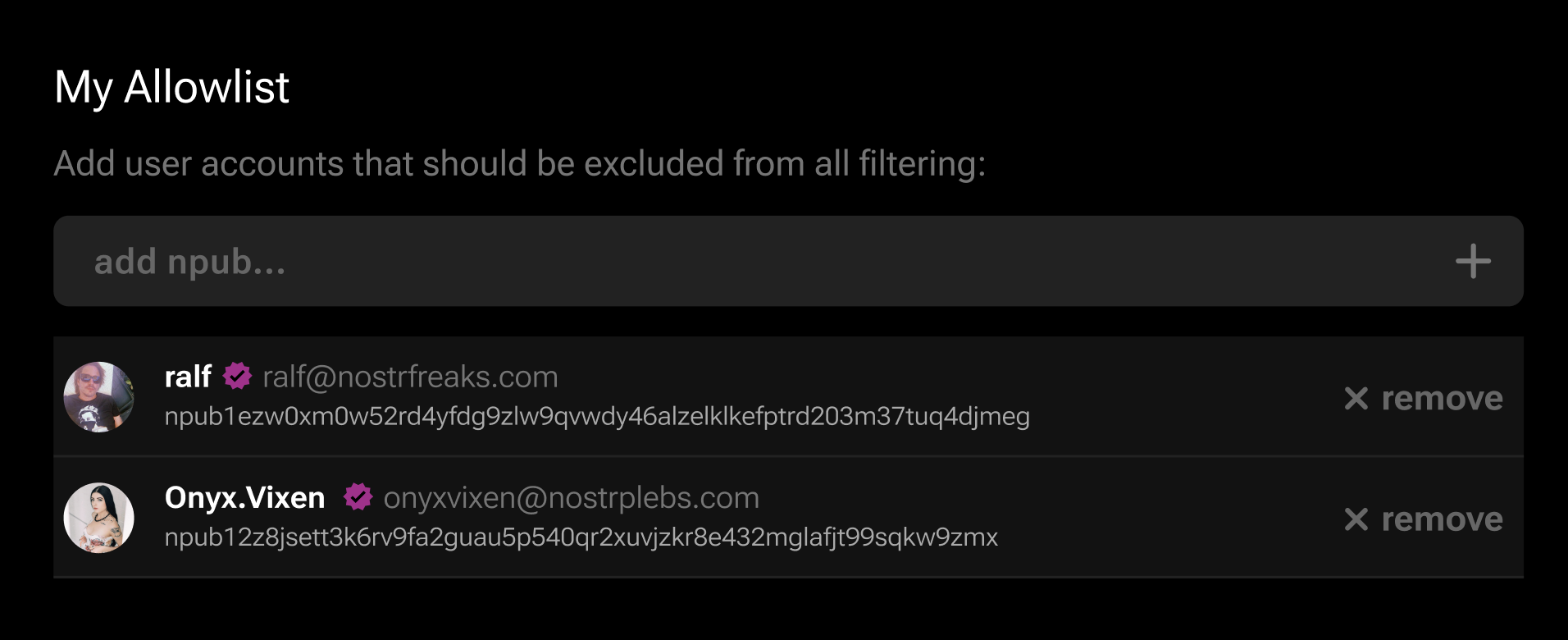

The user should always have the final word on filtering. If, after configuring all the filtering settings to your liking, you find that some legitimate accounts are being filtered, you can add them to your “never filter” allowlist, which overrides all filtering. We persist this allowlist as a standard Nostr categorized people list (kind 30000), so that other Nostr clients can use it as well. In addition, everyone you follow is automatically excluded from all filtering.
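The precedence described above, where follows and the “never filter” allowlist beat every filter, fits in a few lines. The sketch below also builds an unsigned kind 30000 event template for the allowlist, following the NIP-51 categorized people list shape of one "d" tag plus one "p" tag per pubkey. The "d" identifier and the function names are hypothetical, and a real client would still compute the event id and signature per NIP-01 before publishing.

```python
import time

ALLOWLIST_KIND = 30000  # NIP-51 categorized people list (parameterized replaceable)


def should_hide(pubkey: str, *, follows: set[str], allowlist: set[str],
                filter_lists: dict[str, set[str]]) -> bool:
    """Hide only if some active filter matches and no user override applies."""
    # The user's own choices win: follows and the allowlist override every filter.
    if pubkey in follows or pubkey in allowlist:
        return False
    return any(pubkey in members for members in filter_lists.values())


def allowlist_event(owner_pubkey: str, allowlist: set[str],
                    list_id: str = "hypothetical-allowlist") -> dict:
    """Unsigned kind-30000 event template; `list_id` for the "d" tag is a placeholder."""
    return {
        "kind": ALLOWLIST_KIND,
        "pubkey": owner_pubkey,
        "created_at": int(time.time()),
        "tags": [["d", list_id]] + [["p", pk] for pk in sorted(allowlist)],
        "content": "",
    }


lists = {"spam detection": {"bot42", "friend"}}
print(should_hide("bot42", follows=set(), allowlist=set(), filter_lists=lists))       # True
print(should_hide("friend", follows={"friend"}, allowlist=set(), filter_lists=lists))  # False
```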

Open Source FTW

At Primal, we do two things:

- We build open source software for Nostr

- We run a service for Nostr users, powered by our software

Every part of Primal’s technology stack is open source under the highly permissive MIT license. Anyone is free to stand up a service based on our software, fork it, modify it, and add new features to it.

We run our service based on the policies we established as appropriate for Primal. Others can stand up services powered by our software and choose a different set of policies. Users are free to choose the service that best suits their needs. They can move between clients and services without any friction. This unprecedented level of user empowerment is what attracted most of us to Nostr. At Primal, we think that Nostr will change everything. We will continue to work tirelessly every day to keep making it better. 🤙💜

Please note: The entire Primal technology stack is still in preview. Just like everything else, the content moderation system is a work in progress. Thank you for helping us test it. Please keep the feedback coming. Let us know what’s not working and what we can improve.