@ OTI

2025-06-16 19:10:38

Almost everyone knows about ChatGPT and its maker, OpenAI. As of last year, OpenAI has quietly removed the language in its usage policy that prohibited military use of its technology, a move with serious implications given the increasing use of artificial intelligence on battlefields all over the world.

As AI advances, so does its weaponization.

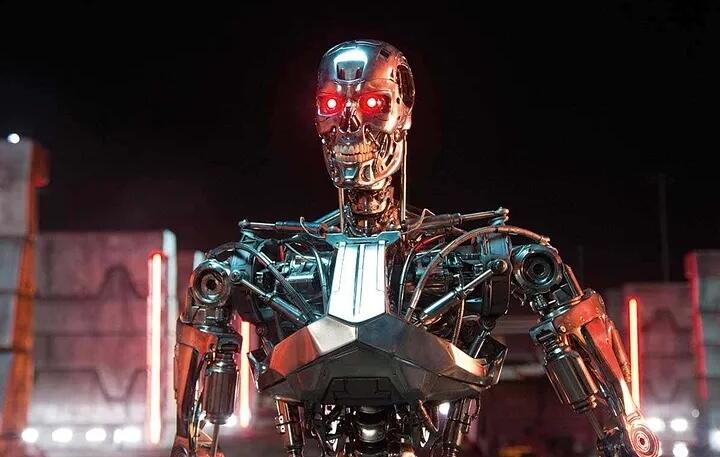

Experts warn that AI applications, including lethal weapons systems commonly called “killer robots,” could pose a potentially existential threat to humanity, which underscores the imperative of arms control measures to slow the pace of weaponization.

There was already trouble in Ukraine and in Gaza. With Yemen now entering the picture as well, are we stepping into a perfect storm powered by AI?

To be clear about the issue: OpenAI’s new policy still states that users should not harm human beings or “develop or use weapons”, but it has removed the clause prohibiting use for “military and warfare”. Many AI experts are concerned about this move.

Could this be a scene from Terminator or I, Robot playing out in the real world 🤔?
